Team

The most significant risk we face is not finding a reliable implementation of an algorithm that can detect poses in real time. Though we think we have found something reliable, we still need to test in the upcoming week whether it will be sufficient. To manage this risk, we are working out an alternate plan that, instead of processing in “real time”, allows a buffer of a couple of seconds for the processing to complete. This would be only a slight alteration, and it seems to be something we can handle fairly easily.

The main change made this week was the shift from mobile to web platforms. As mentioned, there may be slight alterations later to the “real time” aspect of our project, but both of these changes were things we had already accounted for, so they will not change the overall trajectory of our project. The schedule has not changed, except for a slight shift due to unexpected errors that occurred during the installation of the pose detection algorithm.

Kristina

This week, I tried getting OpenPose set up on my personal laptop and did some research into how to use OpenCV/OpenPose on mobile. After reading different articles and projects and testing some trial apps, I didn’t find a way to use OpenPose with anything close to real-time latency on mobile, so we all decided to try getting OpenPose running on our laptops first. Since I have a PC and a possible Ubuntu VM to use while Brian and Umang have Macs, we tried different setups, but all of us ran into the problem of not having an Nvidia graphics card (my one screenshot shows one of the error messages from trying to run a simple OpenPose Windows demo). We realized we would have to spend more time next week looking into other options, possibly changing the scope of our project, so we had to change the schedule a bit. We still need to figure out which joint detection we want to use in order to determine whether we can still make a mobile app or should make a web application instead. I was supposed to start on the UI design at the end of this week, but I couldn’t because of the OpenPose problems we encountered. The goal for next week is to have this figured out and a simple UI design done.

Brian

This week I focused on getting any program that obtains poses from images up and running. At first we wanted a program that could run on mobile. However, after facing problems with that, we decided to look for something that could run as a web app. We had settled on OpenPose, but after a couple of hours of troubleshooting installation errors, I found out that the devices we have access to are not capable of running or even installing the program. Also, I didn’t have the necessary permissions on the external devices to download the requirements. After failing for a bit, I was able to find another implementation that ran at a pretty good frame rate (~15-18 fps) on my laptop.

Its authors say that there is also an implementation for mobile, so for next week, I would like to have this running there. Otherwise, we will proceed with a web app. I would also like to get access to the point data from this app and start playing around with comparing poses in different images.
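As a starting point for comparing poses in different images, here is a minimal sketch. It assumes each pose comes out of the app as a list of (x, y) joint coordinates in the same joint order, which we have not confirmed yet. The function names `normalize_pose` and `pose_distance` are made up for illustration, and the metric (mean per-joint distance after centering and scaling) is just one simple option that ignores rotation and missing joints.

```python
import math


def normalize_pose(points):
    """Center a pose on its centroid and scale it to unit size.

    `points` is a list of (x, y) tuples, one per joint (assumed format).
    """
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    centered = [(x - cx, y - cy) for x, y in points]
    # Overall size of the pose: root of the summed squared joint offsets.
    scale = math.sqrt(sum(x * x + y * y for x, y in centered))
    if scale == 0:
        raise ValueError("pose has no spatial extent")
    return [(x / scale, y / scale) for x, y in centered]


def pose_distance(pose_a, pose_b):
    """Mean per-joint distance between two normalized poses (0 = identical)."""
    if len(pose_a) != len(pose_b):
        raise ValueError("poses must have the same number of joints")
    a = normalize_pose(pose_a)
    b = normalize_pose(pose_b)
    total = sum(math.hypot(p[0] - q[0], p[1] - q[1]) for p, q in zip(a, b))
    return total / len(a)
```

Because of the normalization, the same pose captured closer to or farther from the camera, or at a different spot in the frame, should score close to zero against itself.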

Umang

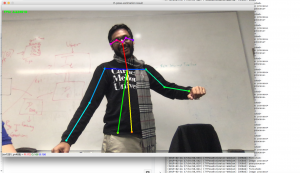

This week I worked on getting a TensorFlow implementation of OpenPose running. I quickly learned that the frame rate of MobileNet (https://arxiv.org/pdf/1704.04861.pdf) would not be conducive to dance pose estimation on mobile devices: 3.23 fps (roughly 310 ms per frame) is too slow for real-time processing. See the figure at the end of the document for me attempting to dance. Mobilenet_fast may provide the latency we need if we run it on an NVIDIA Jetson TX2. I also started on the rank aggregation framework we need to aggregate the poses Kristina gathers (this does not need to happen in real time, but we need a prioritization framework to pick the joints to correct).

In the coming week, I hope to assess the pretrained estimation networks we can use and begin to attempt naive aggregation. I also hope to work through the rank aggregation framework for the ground truth so that when Kristina has the data, my framework can be applied.
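To make the naive aggregation idea concrete, here is a rough sketch using a Borda count, which is one simple rank aggregation scheme and not necessarily the one we will end up with. It assumes each input is a list of joint names ordered from most to least in need of correction. The function name `borda_aggregate` and the joint names in the usage example are placeholders.

```python
from collections import defaultdict


def borda_aggregate(rankings):
    """Combine several joint rankings into one using a Borda count.

    Each ranking lists joints from highest to lowest priority (assumed
    format). A joint in position i of a ranking of length n earns
    n - 1 - i points; joints are returned by total points, highest first,
    with ties broken alphabetically.
    """
    scores = defaultdict(int)
    for ranking in rankings:
        n = len(ranking)
        for position, joint in enumerate(ranking):
            scores[joint] += n - 1 - position
    return sorted(scores, key=lambda joint: (-scores[joint], joint))


if __name__ == "__main__":
    rankings = [
        ["elbow", "knee", "wrist"],
        ["knee", "elbow", "wrist"],
        ["knee", "wrist", "elbow"],
    ]
    print(borda_aggregate(rankings))
```

The first joint in the result would be the one to correct first. A weighted or pairwise scheme could replace the Borda scoring later without changing the input format.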