Team

After finishing most of the work on the video-corrections part of the project, we decided it was time to start integrating what we had built so far to get a better picture of how the final project will look. We also realized that our corrections took far too long for a user to justify waiting for them, so we wanted to find ways to speed up pose processing. To do this, we focused on:

- Looking into AWS as a way to boost our processing speeds

- Merging the existing pipelines with the UI and making it look decent

Our in-lab demo is also next week, so we spent some time polishing what the demo will look like. Since we only started integration this week, we still have problems to work through, and the demo will most likely not be fully connected or fully functional.

Kristina

This week I spent more time polishing the UI and updating the implementation to reflect recent changes to our system. This included saving a video or picture to files that a script can then access, displaying the captured video or picture, letting the user redo a movement, and making sure the on-screen visualization acts as a mirror facing the user. That last part seems small until it doesn't work, and we didn't even notice it until now. Looking into a mirror is natural: that's how a dance class is conducted and, more importantly, that's how we're used to seeing ourselves. Since our application replaces a dance class in a studio, it was important that the mirroring of videos and pictures worked. Also, because we underestimated the time needed for each task, we decided that a text-to-speech element for the corrections wasn't essential and could be replaced with a visualization, which will probably be more effective for the user since dance is a very visual art to learn.
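The mirroring itself amounts to a left-to-right flip of every frame, and any keypoints drawn on top have to be flipped the same way so they stay aligned with the body. Below is a minimal, dependency-free Python sketch of that idea, not our actual UI code; the function names `mirror_frame` and `mirror_keypoints` are hypothetical, and frames are assumed to be nested lists of pixel values. With OpenCV image arrays, `cv2.flip(frame, 1)` performs the same horizontal flip.

```python
from typing import List, Tuple

Frame = List[List[int]]      # rows of pixel values (grayscale, for simplicity)
Keypoint = Tuple[int, int]   # (x, y) as pixel column/row indices


def mirror_frame(frame: Frame) -> Frame:
    """Flip a frame left-to-right so the user sees themselves as in a mirror."""
    return [list(reversed(row)) for row in frame]


def mirror_keypoints(keypoints: List[Keypoint], width: int) -> List[Keypoint]:
    """Flip keypoint x-coordinates to match a mirrored frame of the given width."""
    # Reversing a row moves column x to column (width - 1 - x); y is unchanged.
    return [(width - 1 - x, y) for x, y in keypoints]


# Usage example (hypothetical data).
frame = [[1, 2, 3],
         [4, 5, 6]]
print(mirror_frame(frame))                           # [[3, 2, 1], [6, 5, 4]]
print(mirror_keypoints([(0, 1), (2, 0)], width=3))   # [(2, 1), (0, 0)]
```

Flipping both the image and the keypoints with the same rule is what keeps an overlaid skeleton on top of the dancer after mirroring.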

Brian

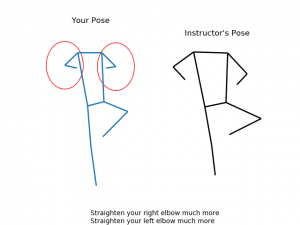

Having finished the frame-matching algorithm and helped with the pipelining last week, I decided to fine-tune some of what we built so it runs more smoothly. Last week we were only printing corrections to the terminal, so I wanted a way to visualize them that makes it obvious what the user needs to fix. To do this, I wrote functions that graph the user's pose next to the instructor's pose, with red circles highlighting where a correction is needed, and that display the correction text in the same frame. I figured this would be an easy way to show all of the corrections, and one that would translate easily to the UI.
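One way to keep this kind of visualization easy to move into the UI is to describe each frame as renderer-agnostic drawing primitives (bone segments, highlight circles, correction text) and let the plotting code or UI do the actual drawing. The sketch below is a hypothetical illustration of that layout, not the functions described above: the joint names, the two-bone `BONES` skeleton, `build_panel`, and the `flagged` mapping from joint name to correction message are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Point = Tuple[float, float]


@dataclass
class Circle:
    center: Point
    radius: float
    color: str


@dataclass
class Panel:
    """Drawing primitives for one frame: two skeletons, highlight circles, text."""
    segments: List[Tuple[Point, Point]] = field(default_factory=list)
    circles: List[Circle] = field(default_factory=list)
    text: List[str] = field(default_factory=list)


# Hypothetical skeleton: pairs of joint names connected by a bone.
BONES = [("shoulder", "elbow"), ("elbow", "wrist")]


def build_panel(instructor: Dict[str, Point], user: Dict[str, Point],
                flagged: Dict[str, str], offset: float,
                radius: float = 10.0) -> Panel:
    """Place the instructor on the left and the user `offset` units to the right."""
    panel = Panel()
    for pose, dx in ((instructor, 0.0), (user, offset)):
        for a, b in BONES:
            (ax, ay), (bx, by) = pose[a], pose[b]
            panel.segments.append(((ax + dx, ay), (bx + dx, by)))
    # A red circle on each flagged user joint, plus that joint's correction text.
    for joint, message in flagged.items():
        x, y = user[joint]
        panel.circles.append(Circle((x + offset, y), radius, "red"))
        panel.text.append(f"{joint}: {message}")
    return panel


# Usage example (hypothetical poses).
instructor = {"shoulder": (0, 0), "elbow": (0, 10), "wrist": (0, 20)}
user = {"shoulder": (0, 0), "elbow": (5, 10), "wrist": (10, 15)}
panel = build_panel(instructor, user, {"elbow": "raise your elbow"}, offset=100)
print(panel.text)  # ['elbow: raise your elbow']
```

Because a panel holds only coordinates, colors, and strings, the same output could be drawn with a plotting library during development and by the UI later.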

Umang

This week was all about speedup. Unfortunately, pose estimation on CPUs is horribly slow, and we needed to get pose estimation for a video under our target metric of 30 seconds. So I explored running our pose estimation on a GPU, which should give us the speedup we need to meet that metric. I got an initial AlphaPose pose-estimation implementation running on AWS. As when AlphaPose runs locally, I run it over a set of frames and give the resulting JSON files to Brian to visualize as a graph. I also refactored part of last week's pipeline to make it easier to pull results from the JSON; the conflicting local file systems had made this messy. Next week I hope to compare GPU-based pose-estimation techniques and continue refactoring the code.
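Reading those results is easier with a small parser that turns AlphaPose's JSON into per-frame keypoints. The sketch below assumes AlphaPose's default COCO-style output (commonly written to `alphapose-results.json`): a list of detections, each with an `image_id`, a flat `keypoints` list of `x, y, confidence` triples, and an overall `score`. The function name `load_best_poses` and the one-dancer-per-frame rule are assumptions, and the sample data is made up and truncated to two keypoints.

```python
import json
from typing import Dict, List, Tuple

Keypoint = Tuple[float, float, float]  # (x, y, confidence)


def load_best_poses(json_text: str) -> Dict[str, List[Keypoint]]:
    """Map each frame's image_id to the keypoints of its highest-scoring person."""
    best: Dict[str, Tuple[float, List[Keypoint]]] = {}
    for det in json.loads(json_text):
        flat = det["keypoints"]  # flat list: [x1, y1, c1, x2, y2, c2, ...]
        keypoints = [(flat[i], flat[i + 1], flat[i + 2])
                     for i in range(0, len(flat), 3)]
        image_id, score = det["image_id"], det["score"]
        # Assumption: one dancer per video, so keep only the most confident person.
        if image_id not in best or score > best[image_id][0]:
            best[image_id] = (score, keypoints)
    return {image_id: kps for image_id, (_, kps) in best.items()}


# Usage example with made-up detections (two keypoints each, for brevity).
sample = json.dumps([
    {"image_id": "0.jpg", "category_id": 1,
     "keypoints": [10, 20, 0.9, 30, 40, 0.8], "score": 2.5},
    {"image_id": "0.jpg", "category_id": 1,
     "keypoints": [50, 60, 0.4, 70, 80, 0.3], "score": 0.7},
    {"image_id": "1.jpg", "category_id": 1,
     "keypoints": [11, 21, 0.9, 31, 41, 0.8], "score": 2.4},
])
poses = load_best_poses(sample)
print(poses["0.jpg"])  # [(10, 20, 0.9), (30, 40, 0.8)]
```

Taking the JSON text rather than a file path keeps the parser independent of where the file lives, whether on a local machine or an AWS instance, which sidesteps some of the file-system conflicts mentioned above.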