Team

With the midpoint demo next week, this week we focused on finishing the elements we want to show. We worked on the correction algorithm for still images, which will be run through a script for the demo, and on a UI that shows what our final project will look like. In the coming weeks, we will fully connect the front end and back end so that the project works end to end and offers a good user experience.

Kristina

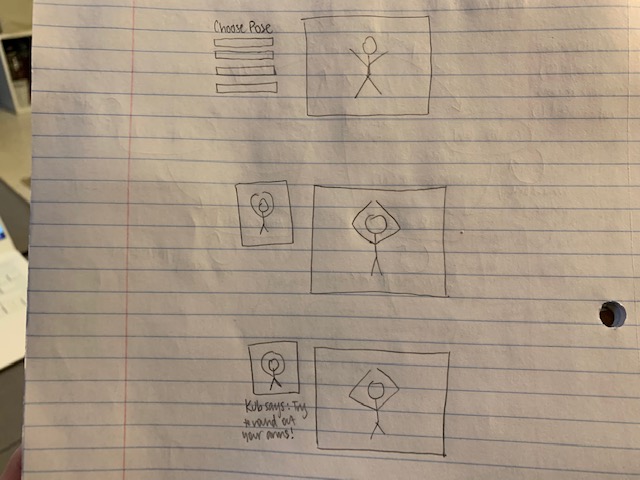

Because this week was very busy for me, most of my work will be front-loaded into the coming week so that it is done in time for the midpoint demo. I am building a UI skeleton and an initial connection between the front end and back end. After the demo, I will start fully connecting the application and integrating the text-to-speech element.

Brian

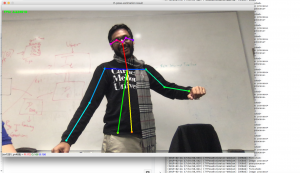

This week I worked on completing the necessary items for the initial demo. I built the foundation of a pipeline that takes an image and outputs corrections within a couple of seconds. You can choose which moves you are working on, as well as how many corrections you receive at a time. The program outputs the top things you need to work on as text. It also draws a diagram of your pose, with circles over the areas you need to correct. Next week, I would like to start on corrections for videos and work with the movement data we will be collecting soon.
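The ranking step described above (return the top few things to work on, with a user-chosen count) can be sketched as follows. This is a minimal illustration under our own assumptions, not the pipeline's actual code: it assumes each pose is a dictionary mapping hypothetical joint names to normalized (x, y) coordinates, that the reference is the mean ground-truth pose for the chosen move, and that corrections are ranked by the Euclidean distance between each user joint and its reference position.

```python
import math

# Assumed representation: joint name -> normalized (x, y) image coordinates.
Pose = dict[str, tuple[float, float]]


def top_corrections(user: Pose, reference: Pose, k: int = 3) -> list[tuple[str, float]]:
    """Return the k joints whose positions deviate most from the reference pose.

    Only joints present in both poses are compared.
    """
    deviations = []
    for joint, (rx, ry) in reference.items():
        if joint not in user:
            continue  # joint was not detected in the user's image
        ux, uy = user[joint]
        deviations.append((joint, math.hypot(ux - rx, uy - ry)))
    # Largest deviation first; sort() is stable, so ties keep reference order.
    deviations.sort(key=lambda item: item[1], reverse=True)
    return deviations[:k]


def format_corrections(corrections: list[tuple[str, float]]) -> str:
    """Render corrections as plain text, one line per joint."""
    return "\n".join(f"Adjust your {joint} (off by {dist:.2f})" for joint, dist in corrections)


# Hypothetical example: the left wrist is held too low compared with the reference.
reference = {"left_wrist": (0.30, 0.20), "right_wrist": (0.70, 0.20), "left_knee": (0.40, 0.80)}
user = {"left_wrist": (0.30, 0.50), "right_wrist": (0.72, 0.20), "left_knee": (0.40, 0.80)}
print(format_corrections(top_corrections(user, reference, k=2)))
# Adjust your left_wrist (off by 0.30)
# Adjust your right_wrist (off by 0.02)
```

Here `k` plays the role of "how many corrections you would like to receive at a time"; the joint names and distance metric are illustrative choices, and a real pipeline might normalize for body size or weight joints differently.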

Umang

This week I worked with Brian to complete the demo. In particular, I wrote an end-to-end script that runs our demo from the command line: it takes a user-captured image and gives the corrections needed to bring the pose back toward the mean ground-truth example. Through command-line options, the user can specify which dance move to check and which version of the pretrained model to use (fast or not). Next week, I will be traveling to London for my final grad school visit, but I will be thinking about how to use linear interpolation to frame-match videos of dance moves. I also hope to use the additional training data to improve the fidelity of the current pipeline.
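One simple way to frame-match with linear interpolation, assuming the user's clip and the reference clip differ only in overall speed, is to resample the reference keypoint sequence to the user's frame count and then compare the two frame by frame. The sketch below is our own illustration of that idea, not a settled design; representing each frame as a flat list of keypoint coordinates is an assumption.

```python
import math


def resample_sequence(frames: list[list[float]], n_out: int) -> list[list[float]]:
    """Linearly resample a sequence of per-frame feature vectors to n_out frames.

    The first and last frames are preserved exactly.
    """
    if not frames:
        raise ValueError("need at least one frame")
    if n_out < 1:
        raise ValueError("n_out must be positive")
    n_in = len(frames)
    if n_in == 1 or n_out == 1:
        return [list(frames[0]) for _ in range(n_out)]
    out = []
    for i in range(n_out):
        # Map output index i onto the input timeline [0, n_in - 1].
        t = i * (n_in - 1) / (n_out - 1)
        lo = int(t)                 # floor, since t >= 0
        hi = min(lo + 1, n_in - 1)
        alpha = t - lo              # fractional position between frames lo and hi
        out.append([(1 - alpha) * a + alpha * b for a, b in zip(frames[lo], frames[hi])])
    return out


def frame_distances(user: list[list[float]], reference: list[list[float]]) -> list[float]:
    """Stretch the reference to the user's length, then compare matched frames."""
    ref = resample_sequence(reference, len(user))
    return [math.dist(u, r) for u, r in zip(user, ref)]


# Hypothetical one-coordinate example: a 3-frame reference stretched to 5 frames.
print(resample_sequence([[0.0], [1.0], [2.0]], 5))
# [[0.0], [0.5], [1.0], [1.5], [2.0]]
```

Uniform resampling assumes the dancer's timing scales evenly across the whole move; clips whose tempo varies within the move would need a non-uniform alignment instead.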