Week 3 Report

Team CB

This week Elias has been working on the vision-tracking part. Based on last week’s presentation, Professor Savvides suggested another way of tracking: track the whole person instead of a sticker on the shoe heel. Elias mounted the camera on a suitcase at different angles to find a suitable angle and distance for tracking a person’s entire body. He found that mounting the camera vertically, rather than parallel to the ground, extends the vertical range of the image frame and gives a larger area for detection. Elias is also deciding which tracking and localization methods to use. Since we now track the entire person, we can stick an AprilTag to the user’s back and do the tracking with the Python apriltags package and OpenCV, instead of collecting multiple images of the same person and training a YOLO model on them. The comparison is still in progress, and Elias has not yet made a final decision.

Next week Elias will finalize the AprilTag detection algorithm and measure its accuracy. If the accuracy is high enough (above 95%), we will not use YOLO.

This week Zhiqi has been working on inertial sensing and PID control. Based on our current block diagram, Zhiqi has been writing the controller code in Matlab. Recall that last week we derived the transfer function of the motor that the PID controller will regulate:

P_motor = K/((J*s+b)*(L*s+R)+K^2).

This week he started with a simple proportional controller, setting the loop gain to 100. The output shows a large overshoot and a steady-state error. Next, we will add integral (I) and derivative (D) terms and tune their gains to optimize the motor’s speed response.
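To reproduce the proportional-only behaviour outside Matlab, here is a minimal Python sketch that simulates the motor model P_motor in closed loop with a gain of 100. The values of J, b, K, R and L are hypothetical placeholders (typical textbook numbers), not measurements of our motor, and forward-Euler integration is used for simplicity.

```python
# Minimal sketch: closed-loop step response of the DC motor speed model
# P_motor = K/((J*s+b)*(L*s+R)+K^2) under proportional control.
# ASSUMPTION: the parameter values below are hypothetical placeholders.
J = 0.01   # rotor inertia (kg*m^2)
b = 0.1    # viscous friction coefficient (N*m*s)
K = 0.01   # motor constant (V*s/rad, equal to N*m/A)
R = 1.0    # armature resistance (ohm)
L = 0.5    # armature inductance (H)


def simulate_p_control(kp, setpoint=1.0, t_end=2.0, dt=1e-4):
    """Forward-Euler simulation of the motor equations
        J*dw/dt = K*i - b*w
        L*di/dt = V - R*i - K*w
    with the control law V = kp * (setpoint - w). Returns speed samples."""
    w, i = 0.0, 0.0          # start at rest: speed (rad/s) and current (A)
    speeds = []
    for _ in range(int(t_end / dt)):
        v = kp * (setpoint - w)            # proportional control voltage
        dw = (K * i - b * w) / J           # mechanical dynamics
        di = (v - R * i - K * w) / L       # electrical dynamics
        w += dw * dt
        i += di * dt
        speeds.append(w)
    return speeds


speeds = simulate_p_control(kp=100)
final = speeds[-1]
overshoot = (max(speeds) - final) / final
print(f"final speed: {final:.3f}  steady-state error: {1 - final:.3f}  overshoot: {overshoot:.1%}")
```

With these placeholder values the closed-loop DC gain is 100K/(bR + K^2 + 100K) ≈ 0.91, so roughly 9% steady-state error remains, and the closed-loop damping ratio of about 0.4 gives roughly 25% overshoot: the same qualitative behaviour reported above.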

Next week Zhiqi will work on the integral and derivative terms, which should reduce the steady-state error and the overshoot. After implementing the remaining terms of the controller, we will focus on parameter tuning.
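A discrete PID controller of the kind planned here can be sketched as follows. This is a generic textbook form, not the team’s Matlab code: it differentiates the measurement instead of the error (a common choice that avoids a spike when the set point steps), and both the motor parameters and the gains kp=100, ki=200, kd=10 are hypothetical starting values, not tuned results.

```python
# Minimal sketch of a discrete PID speed controller on the same motor model.
# ASSUMPTION: motor parameters and PID gains are hypothetical placeholders.
J, b, K, R, L = 0.01, 0.1, 0.01, 1.0, 0.5


class PID:
    """Discrete PID; the D term uses the measurement to avoid derivative kick."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_measurement = None

    def update(self, setpoint, measurement):
        error = setpoint - measurement
        self.integral += error * self.dt                 # I: accumulated error
        if self.prev_measurement is None:
            derivative = 0.0                             # no history on first call
        else:
            # D: -d(measurement)/dt equals d(error)/dt for a constant set point
            derivative = -(measurement - self.prev_measurement) / self.dt
        self.prev_measurement = measurement
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(controller, setpoint=1.0, t_end=4.0, dt=1e-4):
    """Forward-Euler simulation of the motor driven by `controller`."""
    w, i = 0.0, 0.0
    speeds = []
    for _ in range(int(t_end / dt)):
        v = controller.update(setpoint, w)
        dw = (K * i - b * w) / J
        di = (v - R * i - K * w) / L
        w += dw * dt
        i += di * dt
        speeds.append(w)
    return speeds


dt = 1e-4
speeds = simulate(PID(kp=100, ki=200, kd=10, dt=dt), dt=dt)
final = speeds[-1]
print(f"final speed: {final:.4f}  steady-state error: {1 - final:.4f}")
```

The integral term is what drives the steady-state error toward zero here; the derivative term mainly adds damping, so overshoot is the quantity to watch while tuning.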

Last week we received our IMU, a 9DoF Razor IMU M0 from SparkFun. Zhiqi also worked on the IMU: he read the documentation, installed the library, and ran some tests to learn how it works. So far, the IMU appears to give accurate readings of acceleration and orientation. Once the IMU is mounted and calibrated, we can integrate these readings to determine the physical state of the robot.
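When the robot is stationary, the accelerometer measures only gravity, so roll and pitch can be estimated from it directly. The sketch below uses the common aerospace-style formulas and assumes the board reads (0, 0, +1 g) when lying flat; the actual axis signs depend on how we mount the IMU. Yaw cannot be recovered this way and needs the magnetometer or gyroscope.

```python
import math


def roll_pitch_from_accel(ax, ay, az):
    """Estimate roll and pitch (degrees) from a static accelerometer reading.
    ASSUMPTION: the sensor is not accelerating and reads (0, 0, +1 g) when flat.
    Only ratios matter, so the readings may be in g or in m/s^2."""
    roll = math.atan2(ay, az)                      # rotation about the x axis
    pitch = math.atan2(-ax, math.hypot(ay, az))    # rotation about the y axis
    return math.degrees(roll), math.degrees(pitch)


print(roll_pitch_from_accel(0.0, 0.0, 1.0))        # level board
print(roll_pitch_from_accel(0.0, 0.7071, 0.7071))  # tilted about x
print(roll_pitch_from_accel(-0.5, 0.0, 0.8660))    # tilted about y
```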

Next week we will focus on the algorithms that estimate velocity from the IMU data and on calibrating the IMU. Our schedule remains unchanged.

-Zhiqi Gong

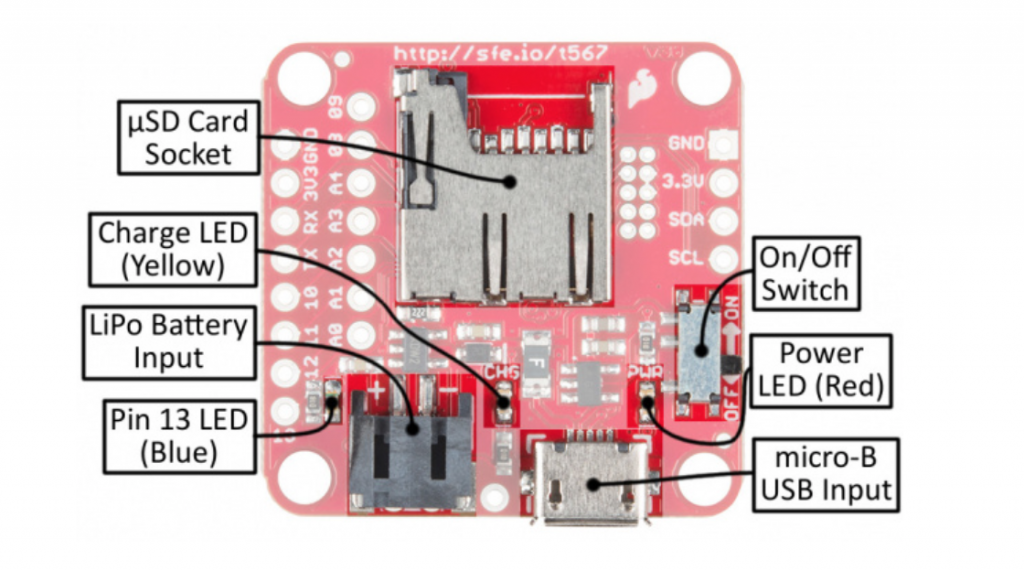

This week I have focused on the IMU. We bought the 9DoF Razor IMU M0 from SparkFun. It is an integrated board that combines a SAMD21 microprocessor with an MPU-9250 9-degree-of-freedom sensor to create a portable inertial measurement unit (IMU). In our project, this sensor helps the robot determine its orientation and acceleration; integrating the acceleration once gives velocity, and integrating again gives distance. The roll, pitch, and yaw angles give us the orientation. This approach is called inertial sensing.
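The double integration described above can be sketched with the trapezoidal rule. This is a minimal one-dimensional illustration that assumes the acceleration has already been converted to m/s^2, had gravity removed, and been rotated into the direction of travel; none of those steps is shown here.

```python
def integrate_motion(times_ms, accels):
    """Trapezoidal integration of 1-D acceleration samples.

    times_ms: timestamps in milliseconds (e.g. the <timeMS> field)
    accels:   acceleration in m/s^2 along the direction of travel,
              ASSUMED already gravity-compensated and in the world frame.
    Returns (velocity, position) lists in m/s and m, starting from rest at 0."""
    velocity = [0.0]
    position = [0.0]
    for k in range(1, len(times_ms)):
        dt = (times_ms[k] - times_ms[k - 1]) / 1000.0               # ms -> s
        velocity.append(velocity[-1] + 0.5 * (accels[k] + accels[k - 1]) * dt)
        position.append(position[-1] + 0.5 * (velocity[k] + velocity[k - 1]) * dt)
    return velocity, position


# Hypothetical test input: constant 1 m/s^2 for 1 s, sampled at 100 Hz.
times = list(range(0, 1001, 10))
accel = [1.0] * len(times)
v, x = integrate_motion(times, accel)
print(f"velocity after 1 s: {v[-1]:.3f} m/s, distance: {x[-1]:.3f} m")
```

Integration also accumulates sensor errors: a constant accelerometer bias of just 0.01 m/s^2 grows to 0.5 × 0.01 × 60² = 18 m of position error after one minute, which is why calibration matters.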

The 9DoF Razor IMU M0 has a µSD card socket, a LiPo battery charger, a power-control switch, and a host of I/O break-outs for project expansion. We can read data from the board over a USB cable connected to its Micro-B USB port, and we can extend its battery life by connecting an extra LiPo battery. The 9DoF Razor IMU M0 is also an open-source hardware design: we can download the example firmware from the SparkFun MPU-9250 Digital Motion Processing (DMP) library, which lets us read from and write to the board. See the attached file for sample readings. The IMU outputs accelerometer, gyroscope, and magnetometer readings in the following format:

<timeMS>, <accelX>, <accelY>, <accelZ>, <gyroX>, <gyroY>, <gyroZ>, <magX>, <magY>, <magZ>
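On the host side, each line in this format (arriving over the USB serial port, for example read with pyserial, which is an assumption about our setup) can be turned into a structured record. The sketch below assumes the flags are set so that exactly these ten fields are printed in this order; the sample line is made up for illustration.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class ImuSample:
    time_ms: int
    accel: Vec3   # accelerometer x, y, z
    gyro: Vec3    # gyroscope x, y, z
    mag: Vec3     # magnetometer x, y, z


def parse_imu_line(line: str) -> ImuSample:
    """Parse one '<timeMS>, <accelX>, ..., <magZ>' line into an ImuSample.
    ASSUMPTION: exactly these ten comma-separated fields, in this order."""
    fields = [f.strip() for f in line.strip().split(",")]
    if len(fields) != 10:
        raise ValueError(f"expected 10 fields, got {len(fields)}: {line!r}")
    t = int(fields[0])
    v = [float(f) for f in fields[1:]]
    return ImuSample(t, (v[0], v[1], v[2]), (v[3], v[4], v[5]), (v[6], v[7], v[8]))


# Hypothetical line, made up for illustration (not a real reading):
sample = parse_imu_line("1234, 0.01, -0.02, 1.00, 0.50, -0.30, 0.10, 20.1, -5.3, 40.2\n")
print(sample)
```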

We can also configure which readings are output by setting flags. So far, we have the IMU working and have figured out how to mount it in the correct orientation. I will keep working on the algorithms, calibration, and compatibility with the middleware.
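One simple first calibration step is estimating the gyroscope’s static bias: hold the IMU still, average a batch of gyro readings (the true rotation rate is then zero), and subtract that average from later readings. This is a basic technique swapped in for illustration, not the full calibration we plan; it does not cover magnetometer hard-/soft-iron correction or accelerometer scale errors, and the readings below are made up.

```python
def estimate_gyro_bias(samples):
    """Average gyro readings taken while the IMU is held still.
    samples: list of (gx, gy, gz) tuples; the true rate is ASSUMED to be zero."""
    if not samples:
        raise ValueError("need at least one stationary sample")
    n = len(samples)
    return tuple(sum(s[axis] for s in samples) / n for axis in range(3))


def remove_bias(reading, bias):
    """Subtract the estimated bias from one (gx, gy, gz) reading."""
    return tuple(value - offset for value, offset in zip(reading, bias))


# Hypothetical stationary readings (made up for illustration):
still = [(0.4, -0.2, 0.1), (0.6, -0.2, 0.1), (0.5, -0.1, 0.2), (0.5, -0.3, 0.0)]
bias = estimate_gyro_bias(still)
print(bias)
print(remove_bias((10.5, -0.2, 0.1), bias))
```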

Another part that I am working on is the PID controller. Recall from last week the transfer function of the motor that the PID controller regulates:

P_motor = K/((J*s+b)*(L*s+R)+K^2);

This week I began testing the PID controller in Matlab.

I started by implementing a proportional controller with a gain of 100. Using the signal toolbox and the Control System Designer, we can see a large error and overshoot in the output. Theoretically, it appears that none of our design requirements can be met with a simple proportional controller. This is the starting point from which we want to reduce these errors using feedback. We will also add integral and derivative terms to reduce the error further. By tuning the controller gains, we expect to reach the speed set point by adjusting the output torque. Next week I will add these two terms and run the simulation before deploying the code to the Jetson board.
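The claim that a proportional controller alone cannot meet the requirements can be checked with the final-value theorem. Treating P_motor as the plant in a unity-feedback loop with a pure gain K_p (a standard textbook setup assumed here), the closed-loop characteristic polynomial and the steady-state error for a unit step in the speed set point are:

$$
\begin{aligned}
(Js + b)(Ls + R) + K^2 + K_p K &= JL\,s^2 + (JR + bL)\,s + \left(bR + K^2 + K_p K\right), \\
P_{motor}(0) &= \frac{K}{bR + K^2}, \\
e_{ss} = \lim_{s \to 0} \frac{1}{1 + K_p P_{motor}(s)} &= \frac{bR + K^2}{bR + K^2 + K_p K}.
\end{aligned}
$$

For any finite K_p this error is nonzero, and raising K_p to shrink it also raises the natural frequency while lowering the damping ratio of the second-order polynomial, which increases overshoot. With an integral term, C(s) = K_p + K_i/s, the loop gain C(s)P_motor(s) grows without bound as s → 0, so the step error 1/(1 + C(s)P_motor(s)) tends to zero, provided the closed loop is stable.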

-Elias Lu

Based on the feedback from Monday’s presentation, we began to consider another way of tracking. Instead of tracking only the shoe heels with stickers attached, we can track the whole person. There are two main reasons. First, heels all look alike, whereas an entire person offers many distinct points of interest to track. Second, heels move quickly and one heel can hide behind the other, which makes it harder to maintain tracking.

Therefore, I mounted the camera on a suitcase at different angles to find the best angle and distance for tracking. After a few tests, I found that instead of laying the camera parallel to the ground, it is better to mount it vertically so that the image frame covers a larger range, which is better for tracking. Also, since we now track the entire person, we can stick an AprilTag to the user’s back and do the tracking with the Python apriltags package and OpenCV, instead of collecting multiple images of the same person and training a YOLO model on them.
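If “vertical” means rotating the camera 90° so that the long side of the image is upright (our reading of the test description), the gain in vertical coverage follows from the pinhole-camera relation: a field of view θ at distance d spans 2·d·tan(θ/2). The field-of-view values and the 1.5 m distance below are hypothetical; our camera’s datasheet numbers should be substituted.

```python
import math


def frame_coverage(distance_m, fov_deg):
    """Extent (m) covered by a field of view fov_deg at distance_m,
    using the pinhole-camera relation 2 * d * tan(fov / 2)."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


# ASSUMPTION: hypothetical lens with 62.2 deg horizontal and 48.8 deg vertical FOV.
H_FOV, V_FOV = 62.2, 48.8
distance = 1.5  # hypothetical following distance (m)

landscape_height = frame_coverage(distance, V_FOV)  # long side horizontal
portrait_height = frame_coverage(distance, H_FOV)   # camera rotated 90 degrees
print(f"landscape: {landscape_height:.2f} m tall, portrait: {portrait_height:.2f} m tall")
```

With these numbers the portrait orientation covers about 1.81 m of height versus about 1.36 m in landscape, roughly the height of a standing adult, ignoring the camera’s mounting height and tilt.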

I am on schedule, but we need to extend the tracking work by one more week, since this is the most important part. Next week I will compare the accuracy of two tracking methods: AprilTag detection and YOLO detection. If either method achieves an accuracy above 95%, we will use it as our primary tracking method.
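The decision rule above can be written down explicitly so that both methods are scored on the same labelled test frames. What counts as a “correct” frame (for example, the user located within some pixel tolerance) is a hypothetical definition we would still need to fix, and the sample outcomes are made up. When both methods pass, this sketch picks the more accurate one, which is our assumption; the plan only says to use a method that exceeds 95%.

```python
def accuracy(frame_results):
    """Fraction of test frames where the method located the user correctly."""
    if not frame_results:
        raise ValueError("no test frames")
    return sum(frame_results) / len(frame_results)


def choose_primary_method(results, threshold=0.95):
    """results maps a method name to a list of per-frame booleans.
    Returns (best method strictly above threshold or None, accuracy per method)."""
    scores = {name: accuracy(frames) for name, frames in results.items()}
    passing = {name: s for name, s in scores.items() if s > threshold}
    best = max(passing, key=passing.get) if passing else None
    return best, scores


# Hypothetical per-frame outcomes, made up for illustration (not measured data):
results = {
    "apriltag": [True] * 97 + [False] * 3,
    "yolo": [True] * 90 + [False] * 10,
}
best, scores = choose_primary_method(results)
print(best, scores)
```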
