Team CB Report

Week 2

Zhiqi Gong & Zixu Lu

This week, most of the parts we ordered arrived, including two Pololu motors with encoders, the Intel RealSense depth camera, the Mecanum wheels, and the IMU.

Elias has been working on the computer vision part since last week. This week, with the actual depth camera in hand, we were able to look at camera integration. We first set up the Ubuntu interface between the Jetson board and our RealSense camera and calibrated the camera with Matlab. The depth information is not very accurate, but it is acceptable for our project. The next big chunk of the project is getting the machine learning tracking to work. We have different ideas about the tag design: either AprilTags or concentric rings in different colors. AprilTags might be hard to shrink enough to stick onto heels, while concentric rings may not be unique enough to work across varied environments. We might instead design our own tags, split into different shapes with different color coding, and use the YOLO API to train a detector for them. Next week, we will try to finalize the tag and start the training process.

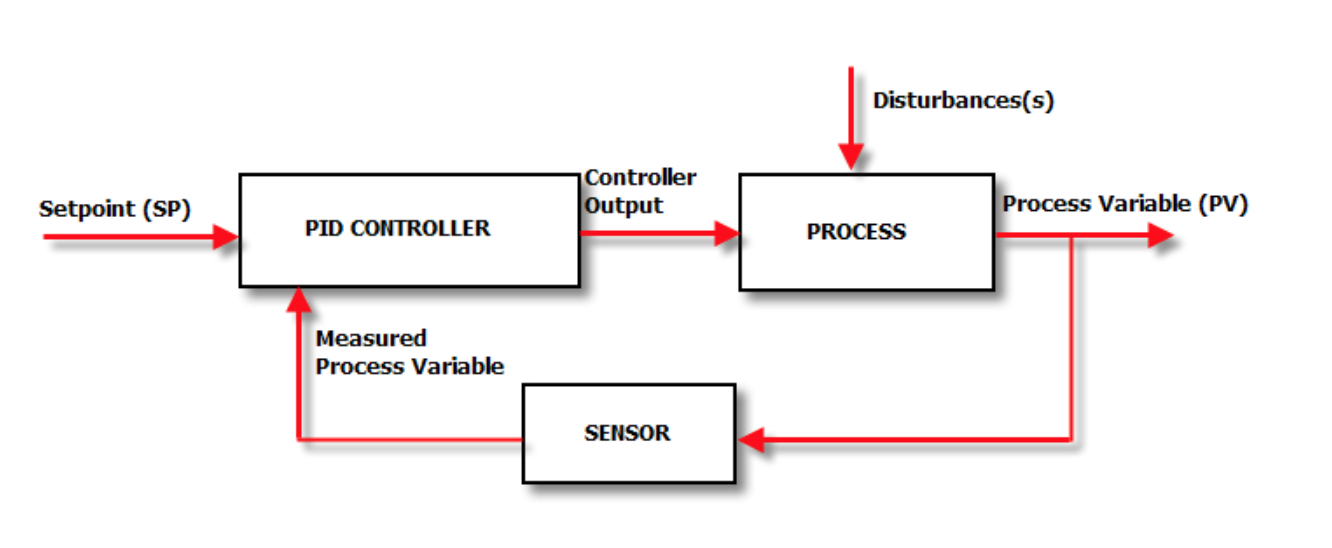

Zhiqi has been working on the PID module since last week. We have discussed which physical factors we want to take into account. Basic PID control is a good fit for our robot. We are now estimating the total weight of the components and the suitcase and how much torque the motors can provide. The key to PID control is the feedback: certain sensor data has to be fed back to the controller so that it can adjust itself in response to disturbances. Attached is a block diagram of a basic PID controller. We will soon implement the module based on it. Zhiqi has also started looking at the IMU that arrived this past week. We have been testing on the Arduino platform and writing code to read the IMU's different degrees of freedom. We also need to consider how to calibrate the IMU and how to recalibrate it based on the results from computer vision.

One problem we are facing right now is power. Our original plan was to power the system from a power bank. But after reading the documentation and fact sheet for the Nvidia Jetson board, we found that the board will shut down if the voltage from the power bank drops, and a voltage drop is common when the motors run. We are thinking of using two power sources, and we may consult the professors and TAs for solutions.

-Zhiqi Gong

Weekly Report 2

Team CB

Zhiqi Gong

I have mainly been working on the PID module since last week. Basic PID control is a good fit for our robot. A PID controller is a control-loop feedback mechanism: it takes in a set point and reads data from sensors, then adjusts its output to bring the measured value close to the set point. The keys to PID control are the set point and the feedback. Since the PID module drives the motors, whose main output is torque, we are now estimating the total weight of the components and the suitcase and how much torque the motors can provide. In addition, certain sensor data has to be fed back to the controller so that it can adjust itself in response to disturbances.
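The torque estimate can be sketched as a small calculation. This is only a rough flat-ground model under our own assumptions: the function name `required_wheel_torque`, the rolling-resistance coefficient, and every number below are placeholders for illustration, not measurements from our robot.

```python
G = 9.81  # gravitational acceleration, m/s^2


def required_wheel_torque(total_mass_kg, wheel_radius_m, accel_mps2,
                          rolling_coeff, driven_wheels):
    """Torque each driven wheel must supply on flat ground, in N*m."""
    # Force needed to accelerate the whole mass (Newton's second law).
    accel_force = total_mass_kg * accel_mps2
    # Rolling resistance modeled as a fixed fraction of the weight.
    rolling_force = rolling_coeff * total_mass_kg * G
    # Split the force evenly across the driven wheels; torque = force * radius.
    return (accel_force + rolling_force) * wheel_radius_m / driven_wheels


# Placeholder values (assumed): robot plus suitcase mass, wheel radius,
# target acceleration, rolling coefficient, number of driven wheels.
torque = required_wheel_torque(total_mass_kg=10.0, wheel_radius_m=0.05,
                               accel_mps2=0.5, rolling_coeff=0.02,
                               driven_wheels=2)
print(f"{torque:.3f} N*m per wheel")
```

Comparing this number against the motor's rated torque (with some margin) would tell us whether the motors can move the loaded suitcase at the acceleration we want.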

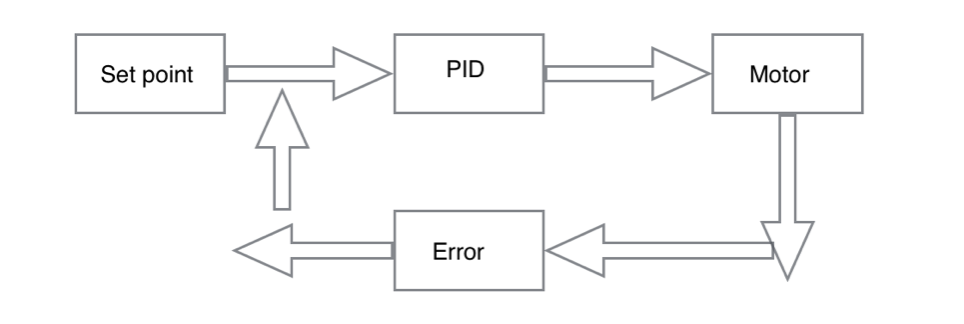

This week I designed the first version of the PID controller. Since the controller drives two wheels, the set point will always be a desired speed. The speed command goes to the motor. Because the motors have encoders, we can read the actual speed from the speed sensor integrated into the motor. The difference between the desired speed and the actual speed is the error of the system. This error goes back to the controller as feedback. Attached is a block diagram.

I have also been working on the actual P, I, and D functions.
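A minimal sketch of how the P, I, and D terms could fit together in the speed loop is shown here. It is written in Python for readability rather than as the code that will run on the robot. The class name `PID`, the gains, and the toy first-order motor model in the loop are all assumptions for illustration.

```python
class PID:
    """Textbook PID controller acting on the speed error."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0      # running sum of error * dt
        self.prev_error = None   # no previous error before the first update

    def update(self, setpoint, measured, dt):
        # Error: desired speed minus the speed read back from the encoder.
        error = setpoint - measured
        # P term: proportional to the current error.
        p_term = self.kp * error
        # I term: proportional to the accumulated error.
        self.integral += error * dt
        i_term = self.ki * self.integral
        # D term: proportional to the rate of change of the error.
        if self.prev_error is None:
            d_term = 0.0
        else:
            d_term = self.kd * (error - self.prev_error) / dt
        self.prev_error = error
        return p_term + i_term + d_term


# Toy closed loop: a first-order motor model (assumed, not our real motor).
pid = PID(kp=0.8, ki=2.0, kd=0.0)
speed, dt, tau = 0.0, 0.01, 0.1
for _ in range(1000):
    command = pid.update(setpoint=1.0, measured=speed, dt=dt)
    speed += (command - speed) * dt / tau  # motor speed lags the command
print(f"final speed: {speed:.3f}")
```

In this toy setup the integral term removes the steady-state error, so the simulated speed settles at the set point. The real gains will have to be tuned on the actual motors.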

I also began looking at the IMU that arrived this past week. We have been testing on the Arduino platform and writing code to read the IMU's different degrees of freedom. We also need to consider how to calibrate the IMU and how to recalibrate it based on the results from computer vision.
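One simple calibration step we could start with is estimating the gyroscope's constant bias while the robot sits still. The sketch below shows only that logic, in Python rather than Arduino code. The function names and sample values are hypothetical, and it does not cover the recalibration from computer vision.

```python
def estimate_gyro_bias(samples):
    """Average stationary (x, y, z) gyro readings to estimate constant bias."""
    n = len(samples)
    if n == 0:
        raise ValueError("need at least one stationary sample")
    return tuple(sum(s[i] for s in samples) / n for i in range(3))


def correct(reading, bias):
    """Subtract the estimated bias from a raw (x, y, z) reading."""
    return tuple(r - b for r, b in zip(reading, bias))


# Hypothetical readings taken while the IMU is not moving.
stationary = [(0.1, -0.2, 0.0), (0.3, -0.2, 0.2)]
bias = estimate_gyro_bias(stationary)
corrected = correct((1.2, 0.8, 0.1), bias)
print(bias, corrected)
```

In practice we would average many more samples, and we would repeat the estimate whenever the robot is known to be stationary.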

Next week, according to the schedule, I will continue building the module and the calibration algorithms. The PID module will get a more sophisticated structure, and the basic IMU reading function should be finished.

-Zixu Lu

All of our parts arrived on Tuesday, and I started assembling the Mecanum wheels and setting up the integration of the Jetson board with the camera. I have been working on the computer vision part since last week. Starting Wednesday, I was able to look at camera integration using results from the actual depth camera. I compared the depth reported by the camera with the output of my own stereo-vision depth algorithm. The two are not far apart, so I think the camera's depth is good enough for this project. I also set up the Ubuntu interface between the Jetson board and our RealSense camera and calibrated the camera using Matlab.
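For reference, the comparison can be sketched with the standard rectified-stereo relation, depth = focal length × baseline / disparity. This is not my actual algorithm, only the textbook pinhole formula. The function names and all numbers below are placeholders, not values from our camera.

```python
def stereo_depth_m(focal_px, baseline_m, disparity_px):
    """Depth in meters from disparity for a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def relative_error(reference, estimate):
    """Relative difference of an estimate from a reference depth."""
    return abs(estimate - reference) / reference


# Placeholder values (assumed): focal length in pixels, baseline in meters,
# disparity in pixels, and a depth reading reported by the camera.
own_depth = stereo_depth_m(focal_px=600.0, baseline_m=0.05, disparity_px=20.0)
camera_depth = 1.44
print(f"own: {own_depth:.2f} m, camera: {camera_depth:.2f} m, "
      f"difference: {relative_error(own_depth, camera_depth):.1%}")
```

Running the same comparison over many pixels and distances would give a better picture of how far the two depth sources disagree.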

The next big issue in our project is getting the tracking to work. Our primary choice is the machine learning approach with YOLO. We have different ideas about the tag design: either AprilTags or concentric rings in different colors. Each has advantages and disadvantages: AprilTags might be hard to shrink enough to stick onto heels, while concentric rings may not be unique enough to work across varied environments. We might instead design our own tags, split into different shapes with different color coding, and use the YOLO API to train a detector for them.

Next week, we will try to finalize the tag and start the training process. We are on schedule for the tracking part, which is one of the most important parts of our project. We also adjusted the schedule slightly to reflect the extension of this portion.
