We have no significant risks other than unpredictable bugs or extraneous events that occur before our demo.

No changes were made to our existing design.

This week we finished our poster board. We also finished our new gui design. We spent most of the week debugging the new interface and repeatedly testing our system.

When testing our system in the gym, Suann and I found that the projected gui was difficult to see because of the very bright ambient lighting. We borrowed a projector from the library that works much better in daylight, so we decided to use it for our final demo.

There were some issues with one of our piezo sensors. We were reading a very high value from one particular sensor, which caused our tap detection subsystem to report several false positives. We worked around this issue by creating a separate threshold for each sensor. Now, the first four seconds after system startup are used to determine the average value read by each sensor while the system is at rest. This average is then used to determine that sensor's threshold for identifying a tap.
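As a rough illustration of the per-sensor thresholding described above, here is a minimal Python sketch. The function names, the `margin` parameter, and the rule of adding a fixed margin to the resting average are assumptions for illustration; this post only states that the resting average is used to determine each threshold.

```python
from statistics import mean


def calibrate_thresholds(resting_samples, margin=50):
    """Compute one tap threshold per sensor from readings taken at rest.

    resting_samples: dict mapping a sensor id to the list of readings
    collected during the startup window (four seconds in our system).
    margin: hypothetical fixed offset added to the resting average.
    """
    return {
        sensor_id: mean(readings) + margin
        for sensor_id, readings in resting_samples.items()
    }


def is_tap(sensor_id, reading, thresholds):
    """Report a tap only if the reading exceeds that sensor's own threshold."""
    return reading > thresholds[sensor_id]


# Example values are made up: sensor "b" idles at a much higher level than "a".
thresholds = calibrate_thresholds({"a": [10, 12, 11], "b": [300, 310, 305]})
```

With a single shared threshold, a sensor that idles at a high value like "b" would trigger constantly; with its own baseline, a reading of 320 on "b" is below its threshold while a reading of 100 on "a" counts as a tap.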

We also worked on our final paper this week.

Next Steps:

Finish the final paper.

We are on schedule.

Tanu and I soldered four more piezo sensor circuits to reduce the number of missed taps (false negatives). This was necessary because our testing showed that accuracy is better when the poster is tapped close to a piezo sensor. We are able to achieve 100% accuracy with the new sensor configuration.

We also brainstormed new ideas to make our gui more intuitive and engaging. We then outlined a story board that Suann can use for reference when drawing our final gui designs.

We took data with our working system and compiled our four metrics cohesively. We then worked on the slides for our final presentation.

Next Steps:

We will finish polishing our improved gui, implement our new design, and complete our poster for the final demo.

We are on schedule.

What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

We need to improve our tap response rate. We will manage this by soldering more piezo sensors to cover a larger surface area of the poster board.

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

No system changes were made.

Provide an updated schedule if changes have occurred.

No schedule changes were made.

This week I have been debugging the system with Tanu and Suann. We found that most of the latency issues we were seeing were due to using the Mac OS platform. When we switched to using the Ubuntu platform on my laptop, these issues went away. To make this switch, we needed a new way of zooming in and turning off autofocus on the Logitech webcam since this webcam has no Logitech software support for Ubuntu. We were able to bypass zooming in by changing the positioning of our camera and projector. This also helps cut down on a step needed with the initial system setup.

Next Steps:

The main issue we are still facing is the sensors' rate of response to tapping on the board. After receiving feedback from some peers, we are also prioritizing making the system more intuitive and visually appealing. This next week we will solder together more sensor circuits to expand the range of tap detection on the poster board and work on improving our gui.

We are on schedule.

Thresholding for our tap detection system is currently incorrect. This can be fixed with more testing, especially in our final demo environment. A large risk is the latency of our system: calibration takes a long time, and so does modal loading. We plan to address this by trying different QtWidget classes and possibly optimizing our detection algorithm.

No changes were made to the system.

We finished integrating our third subsystem last Sunday, so this week we worked as a group to identify and fix several bugs in the system. The system works, but it is slow due to various inefficiencies. After the initial integration, response time averaged several seconds.

Our progress is on schedule.

Next Steps:

Continue working as a group to fix bugs and optimize performance.

We decided to do our real time testing with the second classifier, which takes about 0.8 seconds on average to finish computations. I tried a few different methods of classification: svm.LinearSVC and the SGD classifier since they are supposed to scale better. However, the results of our original classifier remain significantly better so we decided that these methods are not worth pursuing further.

Tanu and I finished debugging our detection and gui integration on 3/31. Our system now works in real time. We added buttons on the screens as a placeholder for a tap to trigger color detection.

The first iteration of the calibration system that I wrote last week is working. For this system, we detect the coordinate of the top left and lower right corner of the gui screen using our existing color detection. After repeatedly testing in real time, we realized that since a user has to position the red dot at the corner of the screen for calibration, there is significant variability in detected coordinates. Thus the mapping of coordinates from detection to gui is not accurate. Each mapped coordinate is consistently a fixed distance away from the target coordinate.
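The two-corner mapping described above can be sketched roughly as follows. This is an illustration under assumptions, not our actual calibration code: `map_to_gui` and its parameters are hypothetical names, and the sketch assumes the projected screen appears as an axis-aligned rectangle in the camera image.

```python
def map_to_gui(detected, top_left, bottom_right, gui_width, gui_height):
    """Map a detected camera coordinate to a gui coordinate.

    detected, top_left, bottom_right: (x, y) pixel coordinates in the
    camera image; the two corners come from the calibration step.
    gui_width, gui_height: size of the gui in its own pixel units.
    """
    x, y = detected
    x0, y0 = top_left
    x1, y1 = bottom_right
    if x1 == x0 or y1 == y0:
        raise ValueError("calibration corners must span a non-empty rectangle")

    # Fraction of the way across and down the calibrated rectangle.
    u = (x - x0) / (x1 - x0)
    v = (y - y0) / (y1 - y0)
    return u * gui_width, v * gui_height


# Example values are made up for illustration.
point = map_to_gui((150, 100), (100, 50), (300, 250), 800, 600)
```

Because the result depends directly on the two corner coordinates, any error in where the user places the dot during calibration carries through to every mapped point.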

To adjust for this error, I wrote a second iteration of calibration. The second version of calibration consists of a splash screen that appears on loading the program as shown below. A third coordinate is detected in the center of the screen to adjust for differences in the detected coordinates of the two corners of the gui.
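One way a center point could be used for this adjustment is sketched below. It assumes the error is a constant offset in gui coordinates, which is my reading of the fixed-distance error seen in the first iteration; the function names are hypothetical and the real calibration code may differ.

```python
def center_offset(mapped_center, gui_width, gui_height):
    """Return how far the mapped center dot lands from the true gui center.

    mapped_center: (x, y) gui coordinate obtained by mapping the dot that
    the user placed at the center of the screen during calibration.
    """
    cx, cy = mapped_center
    return cx - gui_width / 2, cy - gui_height / 2


def apply_correction(mapped_point, offset):
    """Shift a mapped gui coordinate by the offset measured at the center."""
    return mapped_point[0] - offset[0], mapped_point[1] - offset[1]


# Example values are made up: the center dot maps 10 px right and 10 px high.
offset = center_offset((410.0, 290.0), 800, 600)
corrected = apply_correction((210.0, 140.0), offset)
```

A single offset only removes a constant shift. If the error also varied across the screen, more reference points would be needed.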

Next steps:

I am on schedule.

On the 24th, Suann and I took a set of 100 test images that I used to compile more training data.

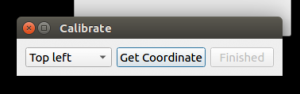

I wrote a script to detect the green border around the perimeter of our gui screen. Tanu is using these border coordinates to calculate the coordinate of the red dot on the gui. This coordinate is proportional to the detected coordinate of the red dot in an image taken with the Logitech webcam. However, when we tested this script in the demo environment, we realized that the projected green color was much lighter and more variable than expected, so we decided to change our calibration function to use our red color detection instead. A user now calibrates the screen by placing a red dot on the upper left corner of the projected gui and clicking the “Get Coordinate” button, which calculates the dot’s coordinates. The process is repeated with the dot placed at the lower right corner of the screen. These coordinates are then passed to Tanu’s calibrate() function. The gui for the calibration is shown below.

Calibration GUI:

Tanu and I have integrated our components of the project: gui and detection. I have added two buttons to the top left of the gui as placeholders for input from the piezo sensors. One button will detect the coordinates of a user’s finger and the other will open the dialog box to calibrate the screen.

GUI:

I have also compiled more data from the set of test images that we took on the 27th and added the data to our csv file. Now that we have more than 30 million datapoints, I had to leave the program running overnight to train the classifier. I will look into combing through the data to reduce the number of datapoints, and I will try training with alternative svm classifiers to cut down on this time. I have found some possible solutions in this post. Our previous classifier had an average response time of about 0.33 seconds. The newest one averages 3.26 seconds, roughly ten times slower. We may have to make a tradeoff between more accurate results and a faster response.
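One possible way to reduce the number of datapoints before training would be to draw a uniform random subset of rows. The sketch below uses reservoir sampling, a technique I am swapping in for illustration rather than something we have tried; `reservoir_sample` and its parameters are hypothetical names.

```python
import random


def reservoir_sample(rows, k, seed=None):
    """Return k rows chosen uniformly at random from an iterable.

    Reads the rows one at a time, so the full dataset never has to be
    held in memory. If there are fewer than k rows, all are returned.
    """
    rng = random.Random(seed)
    sample = []
    for i, row in enumerate(rows):
        if i < k:
            # Fill the reservoir with the first k rows.
            sample.append(row)
        else:
            # Keep row i with probability k / (i + 1) by replacing
            # a uniformly chosen slot.
            j = rng.randint(0, i)
            if j < k:
                sample[j] = row
    return sample


# Example on synthetic data; real rows could come from csv.reader(file).
subset = reservoir_sample(range(1000), 10, seed=0)
```

Uniform sampling keeps roughly the same class proportions as the full dataset, but whether a smaller training set preserves our accuracy would still have to be measured.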

My progress is on schedule.

Next Steps:

1. Try to train using alternative methods to reduce training time.

2. Test the calibration function and detection in real time and compile accuracy results.

What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

We are concerned about our planned method of mapping the detected coordinate in an image to the gui. We are not sure if in practice our design will be accurate. Our plan is to detect the coordinates of a green border along the perimeter of our gui. We will use these coordinates to find the relative distance of the button coordinates to the border. In the case that these detected coordinates are not precise enough for this application, we will place four distinctly colored stickers at the edges of the gui and will use these as reference.

We are also concerned about using the Raspberry Pi and Python for the piezo sensor circuit. We are prototyping with an Arduino for now, and if we are unable to use the Raspberry Pi, we will continue to use the Arduino and send data to our Python color_detection.py script through a serial port. In this case, we will have to deal with any possible delays in sending a message to the Python script.

We also need to spend a significant amount of time taking more test images to train our SVM. Currently, we have 26 test images with our poster board setup. We will work on taking more test images this upcoming week.

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

No new design changes were made this week.