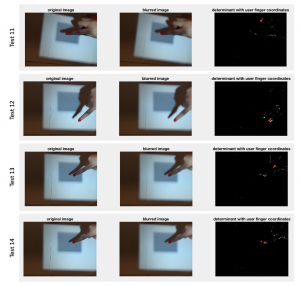

This week I continued to work on the Determinant of Hessian (DoH) blob detection algorithm. After talking to Prof. Savvides, I realized that I could fix the blur scale, i.e., fix the sigma of the Gaussian kernel used to blur the image. Because a single fixed sigma makes the detector respond most strongly to blobs of roughly one size, the algorithm I have implemented is better described as a “circle” detector than as a true (multi-scale) blob detector. Regardless, it suits our purposes.
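To make the fixed-scale idea concrete, here is a minimal sketch in Python using only NumPy. It assumes a grayscale float image, computes the Gaussian-derivative responses L_xx, L_yy and L_xy at one sigma, and keeps 3×3 local maxima of the scale-normalized determinant σ⁴(L_xx·L_yy − L_xy²). The function names and the `rel_threshold` parameter are hypothetical, and this is an illustration of the technique rather than the exact code used in our project.

```python
import numpy as np


def gaussian_kernels(sigma):
    """Sampled 1-D Gaussian and its first and second derivatives."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    g = np.exp(-x**2 / (2 * sigma**2))
    g /= g.sum()                                  # unit area, so smoothing preserves brightness
    dg = -x / sigma**2 * g                        # first derivative of the Gaussian
    d2g = (x**2 / sigma**4 - 1 / sigma**2) * g    # second derivative of the Gaussian
    return g, dg, d2g


def filter2d(image, k_rows, k_cols):
    """Separable filtering: k_rows along axis 0 (y), then k_cols along axis 1 (x)."""
    conv = lambda k: (lambda v: np.convolve(v, k, mode="same"))
    tmp = np.apply_along_axis(conv(k_rows), 0, image)
    return np.apply_along_axis(conv(k_cols), 1, tmp)


def doh_response(image, sigma):
    """Scale-normalized determinant of the Hessian at one fixed sigma."""
    g, dg, d2g = gaussian_kernels(sigma)
    lxx = filter2d(image, g, d2g)   # second derivative in x
    lyy = filter2d(image, d2g, g)   # second derivative in y
    # Mixed derivative: convolution flips the odd kernel in both passes,
    # so the two sign flips cancel.
    lxy = filter2d(image, dg, dg)
    return sigma**4 * (lxx * lyy - lxy**2)


def detect_circles(image, sigma, rel_threshold=0.5):
    """Return (row, col) of 3x3 local maxima of the DoH above rel_threshold * max."""
    resp = doh_response(np.asarray(image, dtype=float), sigma)
    floor = rel_threshold * max(resp.max(), 0.0)
    padded = np.pad(resp, 1, mode="constant", constant_values=-np.inf)
    h, w = resp.shape
    is_peak = resp > floor
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            if dy or dx:
                # A peak must be at least as large as each of its 8 neighbours.
                is_peak &= resp >= padded[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
    return np.argwhere(is_peak)
```

Because sigma is fixed, the σ⁴ factor only rescales the response; it starts to matter only when responses at several scales are compared. Note also that the DoH is positive for both bright and dark blobs, so keeping only bright circles would need an extra check, for example requiring L_xx + L_yy < 0 at the peak.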

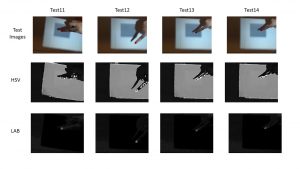

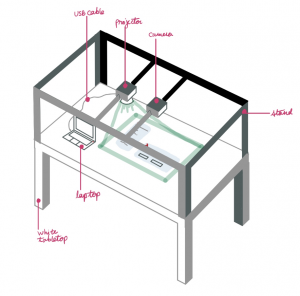

As has been mentioned in earlier posts, Isha and I are using the same set of images to test our algorithms. These test images were taken in an environment that closely represents our demo setup. Below are the results:

Currently, my algorithm works on some images (tests 12 and 14) but not on others (tests 11 and 13). Based on these results, one of the design decisions we have made is to color the buttons of our GUI white and keep the background darker. This seems sound, since we only want to detect the user’s finger when he/she taps on a button.

In addition, I helped my team set up the camera and projector to capture the test images mentioned above, and we all worked on creating the slides for the design review.

Next week I will continue testing my algorithm on different images to find where it breaks and tune its parameters accordingly. I will also start working on a K-means clustering step that combines the outputs of color detection and blob detection. We hope this clustering step will make our detection more robust.
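One possible shape for that clustering step, sketched under assumptions: each candidate region gets a feature vector built from the two detectors (for example a hypothetical `[x, y, color_score, doh_score]`), the features are standardized, and plain Lloyd's-algorithm K-means groups the candidates. The function names and feature layout here are hypothetical; how the color and blob outputs will actually be combined is still an open design question.

```python
import numpy as np


def standardize(features):
    """Zero-mean, unit-variance columns; constant columns are only centered."""
    f = np.asarray(features, dtype=float)
    std = f.std(axis=0)
    return (f - f.mean(axis=0)) / np.where(std > 0, std, 1.0)


def kmeans(features, k, iters=100, seed=0):
    """Plain Lloyd's-algorithm K-means.

    features: (n_samples, n_features) array, e.g. hypothetical per-candidate
    vectors [x, y, color_score, doh_score].
    """
    features = np.asarray(features, dtype=float)
    rng = np.random.default_rng(seed)
    # Initialize the centers with k distinct samples chosen at random.
    centers = features[rng.choice(len(features), size=k, replace=False)]
    labels = np.zeros(len(features), dtype=int)
    for _ in range(iters):
        # Assignment step: each sample joins its nearest center (Euclidean distance).
        dists = np.linalg.norm(features[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Update step: move each center to the mean of its members (keep it if empty).
        new_centers = np.array([
            features[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers
```

Standardizing first matters because K-means uses Euclidean distance: pixel coordinates span hundreds of units while detector scores may span a fraction of one, so without scaling the coordinates would dominate the clustering.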

I am currently on schedule.