I finished writing the code for the first iteration of the GUI using PyQt. It implements the following features:

1. The GUI application launches in full-screen mode by default and uses the dimensions of the user's screen as the baseline for every button size and position. Because all of these values are computed relative to the screen, the GUI adapts to any screen resolution, which makes it portable across displays.

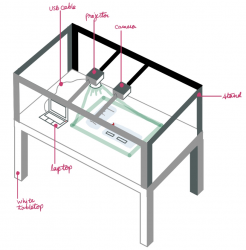

2. Since we plan to demonstrate our project as an interactive poster board, the application receives the coordinates of a tap, identifies which button (if any) was tapped, and opens a modal that displays the contents of the text file for that button's topic. When the user is done reading, they can press the return button to go back to the main screen.
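
The hit test in item 2 reduces to a point-in-rectangle check over the buttons. The sketch below shows that logic in plain Python so it can be tested without a display. The `Button` structure and the `topics/<name>.txt` file-naming convention are hypothetical stand-ins, not the project's actual code.

```python
from pathlib import Path
from typing import NamedTuple, Optional, Sequence


class Button(NamedTuple):
    """Hypothetical button record: a topic name plus its rectangle in screen pixels."""
    name: str
    x: int
    y: int
    width: int
    height: int


def find_tapped_button(tap_x: float, tap_y: float,
                       buttons: Sequence[Button]) -> Optional[Button]:
    """Return the button whose rectangle contains the tap, or None if the tap missed them all."""
    for button in buttons:
        inside_x = button.x <= tap_x < button.x + button.width
        inside_y = button.y <= tap_y < button.y + button.height
        if inside_x and inside_y:
            return button
    return None


def topic_file_for(button: Button, directory: str = "topics") -> Path:
    """Hypothetical convention: each topic's text lives in <directory>/<button name>.txt."""
    return Path(directory) / f"{button.name}.txt"
```

For example, with buttons at `Button("history", 100, 100, 400, 200)` and `Button("method", 600, 100, 400, 200)`, a tap at (150, 150) returns the "history" button, while a tap at (550, 150) lands in the gap between them and returns `None`, so no modal opens.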

Currently, the GUI has six buttons, one for each of six headings. The background is black and the buttons are dark grey. The buttons are spaced far enough apart to improve the accuracy of detecting which one was tapped. All of these choices may change as we continue testing over the next few weeks.
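
One way to derive the six button rectangles from the screen size (item 1) while keeping the spacing proportional is sketched below. The 2×3 grid and the `gap_fraction` parameter are assumptions for illustration, not the current layout values. In PyQt5 the inputs could come from `app.primaryScreen().size()`, which returns a `QSize` with `width()` and `height()`.

```python
from typing import List, NamedTuple


class Rect(NamedTuple):
    x: int
    y: int
    width: int
    height: int


def grid_layout(screen_width: int, screen_height: int, rows: int = 2,
                cols: int = 3, gap_fraction: float = 0.05) -> List[Rect]:
    """Split the screen into rows x cols equal buttons.

    Margins and gaps are a fixed fraction of the screen size (an assumed
    parameter), so the layout scales with the display resolution.
    """
    gap_x = round(screen_width * gap_fraction)
    gap_y = round(screen_height * gap_fraction)
    button_w = (screen_width - (cols + 1) * gap_x) // cols
    button_h = (screen_height - (rows + 1) * gap_y) // rows
    if button_w <= 0 or button_h <= 0:
        raise ValueError("gap_fraction is too large for this grid")
    # Row-major order: the first `cols` rectangles form the top row.
    return [
        Rect(gap_x + c * (button_w + gap_x), gap_y + r * (button_h + gap_y),
             button_w, button_h)
        for r in range(rows)
        for c in range(cols)
    ]
```

On a 1920×1080 screen this yields six 512×459 buttons, the first at (96, 54) and the last at (1312, 567). Raising `gap_fraction` widens the dead zones between buttons, trading button area for tolerance to detection error.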

I am currently on schedule. My next tasks include:

1. Create a function that maps the coordinates detected by the color detection algorithm to GUI coordinates, using the coordinates of the green border that Isha will be detecting.

2. Find a way to pass the detected coordinates to the main GUI application while it is running. I will look into PyQt's built-in mechanisms that allow this.
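
For the coordinate mapping in task 1, a common choice is a perspective transform (homography) fitted to the four corners of the green border. The camera may view the poster at an angle, and a homography corrects for that, whereas simple scaling does not. The sketch below assumes the border corners arrive in the order top-left, top-right, bottom-right, bottom-left. The helper names are hypothetical. If OpenCV is already in use for color detection, `cv2.getPerspectiveTransform` and `cv2.perspectiveTransform` compute the same mapping.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def _solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a square linear system by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate corner configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def homography(src: Sequence[Point], dst: Sequence[Point]) -> List[float]:
    """Fit the 8 coefficients h0..h7 (h8 fixed to 1) mapping 4 src corners onto 4 dst corners."""
    a: List[List[float]] = []
    b: List[float] = []
    for (x, y), (u, v) in zip(src, dst):
        a.append([x, y, 1.0, 0.0, 0.0, 0.0, -x * u, -y * u])
        b.append(u)
        a.append([0.0, 0.0, 0.0, x, y, 1.0, -x * v, -y * v])
        b.append(v)
    return _solve(a, b)


def map_point(h: Sequence[float], point: Point) -> Point:
    """Map a camera-space point into GUI (screen) coordinates."""
    x, y = point
    w = h[6] * x + h[7] * y + 1.0
    return ((h[0] * x + h[1] * y + h[2]) / w, (h[3] * x + h[4] * y + h[5]) / w)
```

Usage: fit `h = homography(border_corners, [(0, 0), (W, 0), (W, H), (0, H)])` once per calibration, where `border_corners` and the screen size `W`×`H` are placeholders. Then convert each detected tap with `map_point(h, tap)` before running the button hit test.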
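
For task 2, one option is a thread-safe queue. The detector pushes taps into it, and the GUI thread drains it periodically, so widgets are only ever touched from the GUI thread. The sketch assumes the color detection runs in a background thread of the same Python process. If it is a separate process, a socket or `multiprocessing.Queue` would be needed instead. `TapRelay` is a hypothetical helper, not a PyQt class.

```python
import queue
import threading  # the detector is assumed to run in its own thread
from typing import Callable, Tuple

Tap = Tuple[float, float]


class TapRelay:
    """Hypothetical hand-off point between a detector thread and the GUI thread."""

    def __init__(self) -> None:
        self._taps: "queue.Queue[Tap]" = queue.Queue()

    def submit(self, x: float, y: float) -> None:
        """Called from the detector thread whenever a tap is detected."""
        self._taps.put((x, y))

    def poll(self, handle_tap: Callable[[float, float], None]) -> int:
        """Called on the GUI thread; delivers every pending tap in arrival order.

        Returns the number of taps delivered during this call.
        """
        delivered = 0
        while True:
            try:
                x, y = self._taps.get_nowait()
            except queue.Empty:
                return delivered
            handle_tap(x, y)
            delivered += 1
```

In PyQt5, the polling could be driven by a `QTimer` whose `timeout` signal is connected to `lambda: relay.poll(window.handle_tap)` and started with a short interval such as 30 ms (`handle_tap` being a hypothetical method on the main window). The more idiomatic Qt alternative is to run detection in a `QThread` and emit a custom `pyqtSignal(float, float)`. Qt then delivers a cross-thread signal to the GUI thread through a queued connection, so no explicit queue is needed.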