Team Status

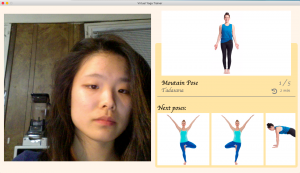

This week we completed an initial integration of the verbal instruction generator, UI, speech synthesis module, and webcam.

Risks and Contingency Plans

We identified a risk in UI compatibility across different computers. The UI was developed with Tkinter, which is known to have sizing issues across displays. The buttons and other widgets still function correctly; however, the UI looks noticeably less polished on other devices. We may look into transitioning the UI to pygame after the first demo, but this is not currently a priority.

Another risk is how optical flow will handle pose transitions. We have not tested this feature yet, so we will need to test it thoroughly to make sure it limits the number of unnecessary instructions given while the user is moving between poses.

Changes and Schedule Updates

We have updated the schedule to include optical flow testing and a feasibility study for transitioning the UI to pygame. Attached is our updated Gantt chart.

B7 Gantt Chart – 3_30 Gantt Chart

Sandra’s Weekly Progress

Accomplishments

This week I integrated the UI with our controller. In addition, I selected and set up a speech synthesis library. I implemented threading in our application to separate pose correction, the UI, and the speech synthesis module, since the UI and the speech synthesis library each require a dedicated thread. This modular structure also makes it easier to test the application and isolate errors, because each component runs independently.
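
As a rough illustration of the thread separation described above, the sketch below connects a pose-correction producer to a speech consumer through a thread-safe queue. It is a minimal sketch under stated assumptions, not our actual implementation: the function names (`pose_correction_worker`, `speech_worker`, `run_pipeline`) are hypothetical, and the speech engine and Tkinter UI are replaced by plain Python so the threading structure runs on its own.

```python
import queue
import threading

# Hypothetical sketch: the real application uses Tkinter and a speech
# synthesis library; both are stubbed out here so only the threading
# structure remains.

SENTINEL = None  # tells the consumer thread to stop


def pose_correction_worker(frames, out_q):
    """Stand-in for pose correction: emit one instruction per frame."""
    for frame in frames:
        out_q.put(f"instruction for frame {frame}")
    out_q.put(SENTINEL)


def speech_worker(in_q, spoken):
    """Stand-in for speech synthesis: a real version would call the TTS engine."""
    while True:
        item = in_q.get()
        if item is SENTINEL:
            break
        spoken.append(item)


def run_pipeline(frames):
    """Run producer and consumer on separate threads and collect what was 'spoken'."""
    instructions = queue.Queue()
    spoken = []
    producer = threading.Thread(target=pose_correction_worker, args=(frames, instructions))
    consumer = threading.Thread(target=speech_worker, args=(instructions, spoken))
    producer.start()
    consumer.start()
    producer.join()
    consumer.join()
    return spoken
```

For example, `run_pipeline([0, 1, 2])` returns the three instructions in frame order. Tkinter is generally not thread-safe, so a common pattern is to keep the UI on the main thread and have it poll a queue with `root.after()` rather than letting worker threads touch widgets directly.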

Next Week Deliverables

Next week I will create tests to ensure that optical flow is robust as we transition through poses. I may need to implement additional functions to improve our optical flow algorithm, such as tuning the initial parameters or dynamically adding a delay during pose transitions.
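
One way the transition delay mentioned above could work is to suppress instructions while measured motion is high and only resume after motion has stayed low for several consecutive frames. The sketch below assumes a single per-frame motion value (for example, the mean magnitude of an optical flow field); the class name `TransitionGate`, the threshold, and the frame count are hypothetical choices for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class TransitionGate:
    """Hypothetical gate that holds back instructions while the user is moving.

    motion_threshold: per-frame motion value (e.g. mean optical flow
        magnitude, in pixels/frame) above which the user is assumed to be
        transitioning. The default is an assumed value, not a tuned one.
    settle_frames: consecutive low-motion frames required before
        instructions are allowed again (the "delay").
    """
    motion_threshold: float = 2.0
    settle_frames: int = 3
    _still_count: int = field(default=0, init=False, repr=False)

    def update(self, flow_magnitude: float) -> bool:
        """Return True if an instruction may be issued for this frame."""
        if flow_magnitude > self.motion_threshold:
            self._still_count = 0  # any movement restarts the delay
            return False
        self._still_count += 1
        return self._still_count >= self.settle_frames
```

Feeding the motion values `[0.5, 0.4, 0.3, 5.0, 0.2, 0.1, 0.1]` yields `[False, False, True, False, False, False, True]`: the spike at 5.0 resets the delay. A dynamic variant could scale `settle_frames` per pose, for instance giving longer transitions a longer delay.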

Tian’s Weekly Progress

Accomplishments

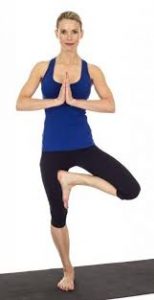

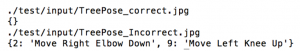

- Integrated Instruction Generator with Webcam and UI (with Sandra, Chelsea): This week I worked with my teammates to integrate our updated instruction generator and optical flow algorithms. We also tested the integration with the webcam on Tree Pose, Mountain Pose, and Warrior 1.

- Implemented Priority Function for Instruction Generator: I refactored the instruction generation code and added a more structured Template Instruction class. Using this class, I implemented a priority analysis function that decides which instruction to give the user first when multiple instructions are generated for the same frame. Currently, the program uses the following priority order, from highest to lowest:

  - Instructions describing the user’s general performance: “Good job! You got Mountain Pose correctly!”

  - Instructions related to body parts fixed on the ground: “Bend Your Left Knee”

  - Instructions related to middle body parts including back / torso: “Straighten Your Back”

  - Other instructions: “Move Your Arms Up Towards the Sky”

- Added Warrior 2 Pose: I added Warrior 2 Pose to our MVP integration by collecting images online, generating standard metrics for Warrior 2, and refining the instruction generator for this new pose.
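
The priority ordering in the Priority Function item above can be sketched as a category enum plus a selection function. This is an illustrative sketch only: the `TemplateInstruction` name echoes the class mentioned above, but its fields, the `Category` enum, and `highest_priority` are hypothetical stand-ins rather than the actual code.

```python
from dataclasses import dataclass
from enum import IntEnum


class Category(IntEnum):
    # Lower value = higher priority, following the order listed above.
    GENERAL = 0   # overall feedback, e.g. "Good job! ..."
    GROUNDED = 1  # body parts fixed on the ground (legs, feet)
    MIDDLE = 2    # back / torso
    OTHER = 3     # everything else (e.g. arms)


@dataclass(frozen=True)
class TemplateInstruction:
    # Hypothetical fields: the real class may store more (target joints, angles, ...).
    text: str
    category: Category


def highest_priority(instructions):
    """Return the instruction to give first, or None if the list is empty.

    min() returns the first of several equal minima, so ties go to the
    instruction that was generated first for the frame.
    """
    if not instructions:
        return None
    return min(instructions, key=lambda inst: inst.category)
```

Given “Move Your Arms Up Towards the Sky” (OTHER), “Straighten Your Back” (MIDDLE), and “Bend Your Left Knee” (GROUNDED) for the same frame, this sketch selects “Bend Your Left Knee”. Encoding the order in an `IntEnum` keeps the ranking in one place, so adding a category only means inserting a new enum member.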

Next Week Deliverables

Next week I hope to make progress on inverted poses. So far our program works well with upright (non-inverted) poses, but we have encountered issues with inverted poses, such as longer runtimes and lower OpenPose estimation accuracy.

I will run more experiments on OpenPose's performance on inverted poses and design a more robust handler for cases where OpenPose fails to estimate some of the keypoints. I will start with DownDog Pose and continue with other inverted poses if time permits.
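
One possible shape for the missing-keypoint handler described above: treat low-confidence keypoints as missing, fall back to the previous frame's value when that one was reliable, and skip any instruction whose required keypoints are still unavailable. This is a minimal sketch under assumptions: OpenPose typically reports undetected keypoints with a confidence of 0, but the keypoint names, the `min_conf` threshold, and the functions `fill_missing_keypoints` and `can_evaluate` are hypothetical.

```python
from typing import Dict, Iterable, Optional, Set, Tuple

Keypoint = Tuple[float, float, float]  # (x, y, confidence)


def fill_missing_keypoints(
    current: Dict[str, Keypoint],
    previous: Optional[Dict[str, Keypoint]],
    min_conf: float = 0.1,  # assumed threshold, would need tuning
) -> Tuple[Dict[str, Keypoint], Set[str]]:
    """Replace low-confidence keypoints with the previous frame's values.

    Returns (filled, missing): the usable keypoints and the names that
    could not be recovered from either frame.
    """
    filled: Dict[str, Keypoint] = {}
    missing: Set[str] = set()
    for name, (x, y, conf) in current.items():
        if conf >= min_conf:
            filled[name] = (x, y, conf)
        elif previous is not None and previous.get(name, (0.0, 0.0, 0.0))[2] >= min_conf:
            filled[name] = previous[name]  # carry forward last reliable estimate
        else:
            missing.add(name)
    return filled, missing


def can_evaluate(required: Iterable[str], missing: Set[str]) -> bool:
    """Only generate an instruction if none of its required keypoints are missing."""
    return not (set(required) & missing)
```

Skipping an instruction is usually safer than guessing a joint position, since a wrong keypoint would produce a confidently wrong correction.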

Chelsea’s Weekly Progress

Accomplishments

- This past week my teammates and I mostly worked together on integrating all the components. Specifically, I integrated the UI component and modified and extended the UI class so that it is compatible with the other components.

- I added a routine class because both the application's controller and the UI need information about the routines. The class handles transitions through a routine, provides information about the current and next few poses, and sizes images based on pose type.
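
The routine class responsibilities listed above (stepping through a routine, exposing the current and upcoming poses, and sizing images by pose type) could look roughly like the sketch below. All names here (`Pose`, `Routine`, `IMAGE_SIZES`, the pose types, and the pixel sizes) are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical pose-type -> (width, height) display sizes in pixels:
# tall standing poses get a portrait box, wide poses a landscape box.
IMAGE_SIZES = {
    "standing": (200, 300),
    "wide": (300, 200),
}


@dataclass(frozen=True)
class Pose:
    name: str
    pose_type: str  # key into IMAGE_SIZES


class Routine:
    """Tracks progress through an ordered list of poses."""

    def __init__(self, poses: List[Pose]):
        if not poses:
            raise ValueError("a routine needs at least one pose")
        self._poses = list(poses)
        self._index = 0

    @property
    def current(self) -> Pose:
        return self._poses[self._index]

    def upcoming(self, n: int = 2) -> List[Pose]:
        """The next n poses after the current one (fewer near the end)."""
        return self._poses[self._index + 1 : self._index + 1 + n]

    def advance(self) -> bool:
        """Move to the next pose; return False if the routine is already finished."""
        if self._index + 1 >= len(self._poses):
            return False
        self._index += 1
        return True

    @staticmethod
    def image_size(pose: Pose) -> Tuple[int, int]:
        return IMAGE_SIZES[pose.pose_type]
```

Keeping this state in one object means the controller can call `advance()` while the UI only reads `current` and `upcoming()`, so the two never maintain separate copies of where the user is in the routine.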

Next Week Deliverables

Since the UI's visual polish is not a priority for our upcoming demo, I did not add the features I had planned last week. Additionally, it turns out that Tkinter renders differently on my laptop than on my teammates' laptops. Therefore, my priority next week is to find a solution that eliminates these display discrepancies across laptops. After that, I can work on the UI features I wanted to add.