Team Status

Risks and Contingency Plans

Since we have been working on our parts separately, we do not yet know how well they will fit together. We will run the backend computations in a separate thread so that they do not interfere with the frontend video updates. Below are some of the potential challenges we may need to overcome after integration:

- Technology incompatibility

- The runtime of each correction cycle, from triggering the correction to delivering feedback to the UI, could be longer than expected.

- A full routine could run too long or too short, since the amount of correction each user needs is unpredictable.

- Miscellaneous bugs
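
The separate backend thread mentioned above, together with the runtime concern, can be illustrated with a minimal sketch. It assumes a single producer (the video loop) that hands frames to a worker thread through a one-slot queue, so only the newest frame is ever processed and the video loop never blocks. `run_correction` is a hypothetical stand-in for the real correction cycle, and `cycle_times` is an illustrative way to record how long each cycle takes.

```python
import queue
import threading
import time


def run_correction(frame):
    """Hypothetical stand-in for one correction cycle
    (pose estimation -> angle comparison -> instruction generation)."""
    time.sleep(0.05)  # placeholder for the real computation
    return f"feedback for frame {frame}"


class CorrectionWorker(threading.Thread):
    """Runs correction cycles off the video thread.

    The video loop only calls submit() and poll(), which never block,
    so a slow correction cycle cannot stall frame updates.
    """

    def __init__(self):
        super().__init__(daemon=True)
        self._frames = queue.Queue(maxsize=1)  # holds at most the newest frame
        self._results = queue.Queue()
        self.cycle_times = []  # seconds per cycle, useful for spotting bottlenecks

    def submit(self, frame):
        # Discard a pending frame the worker has not picked up yet,
        # then enqueue the newest one (safe with a single producer).
        try:
            self._frames.get_nowait()
        except queue.Empty:
            pass
        self._frames.put_nowait(frame)

    def poll(self):
        # Return the most recent feedback, or None if nothing new is ready.
        latest = None
        while True:
            try:
                latest = self._results.get_nowait()
            except queue.Empty:
                return latest

    def run(self):
        while True:
            frame = self._frames.get()
            if frame is None:  # sentinel: stop the worker
                break
            start = time.perf_counter()
            self._results.put(run_correction(frame))
            self.cycle_times.append(time.perf_counter() - start)
```

In the video loop, each frame would be passed to `submit()` and `poll()` checked for new feedback; afterwards, `cycle_times` shows whether the correction cycle is slower than expected. Submitting `None` stops the worker.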

To address these potential issues, we have come up with the following plans:

- All of our parts are written in Python, so any incompatibilities caused by differing versions of Python or its libraries should be easy to fix, though the process might be tedious.

- We could measure how long each stage of the correction cycle takes to find the bottleneck, then see whether we can cut down the time spent there.

- We can fine-tune parameters, such as the minimum and maximum time spent on each pose, to keep the routine from running too long or too short.

- We can find and fix bugs collaboratively: we still have plenty of time before the demo, and our program is relatively short, so bugs should not be too hard to track down.
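
The per-pose time limits from the third plan could look roughly like the sketch below. `PoseTimer`, `min_seconds`, and `max_seconds` are hypothetical names, and the default values are placeholders rather than tuned numbers. Because every pose lasts between the minimum and the maximum, the total routine length is bounded on both sides regardless of how much correction a user needs.

```python
from dataclasses import dataclass


@dataclass
class PoseTimer:
    """Decides when the routine should advance to the next pose.

    min_seconds and max_seconds are hypothetical tuning parameters,
    not values taken from our app.
    """

    min_seconds: float = 20.0
    max_seconds: float = 60.0
    elapsed: float = 0.0

    def tick(self, dt: float) -> None:
        # Called from the UI timer with the seconds since the last tick.
        self.elapsed += dt

    def should_advance(self, pose_is_correct: bool) -> bool:
        if self.elapsed >= self.max_seconds:
            return True  # hard cap: stop correcting and move on
        # Otherwise advance only once the pose is correct AND held long enough.
        return pose_is_correct and self.elapsed >= self.min_seconds

    def reset(self) -> None:
        # Call when switching to the next pose.
        self.elapsed = 0.0

    def routine_bounds(self, n_poses: int) -> tuple:
        # Shortest and longest possible routine length, in seconds.
        return (n_poses * self.min_seconds, n_poses * self.max_seconds)
```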

Changes and Schedule Updates

This week we each worked on and refined our individual parts, and we will integrate them into a single application tomorrow (03/24). Our current plan is to have all the components working together by the end of this week. Once we have confirmed that the program works as a whole, we will start making improvements and conducting user testing.

Sandra’s Weekly Progress

Accomplishments

This week I implemented calibration for the webcam and did some small-scale testing. Calibration is a 5-10 second period during which the user stands in front of the webcam for initial pose estimation. The results of pose estimation are then used to initialize optical flow. I faced one major challenge in implementing this function over the past week. Although I had previously tested optical flow, it was on relatively static videos, and integrating it with the live webcam raised new issues. For instance, optical flow kept exiting early because it lost (stopped tracking) the key points on the body. This could be because Lucas-Kanade is not robust to lighting changes or occlusions. To make the tracker more robust, I wrote a function that augments the number of tracked points based on body pairs. In addition, I reinitialize pose estimation whenever the Lucas-Kanade tracker has lost too many points.
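
The report does not spell out how points are augmented from body pairs, so the sketch below assumes one plausible approach: linearly interpolating extra points along each limb segment between two detected joints. The joint names, `samples_per_pair`, and the 50% `min_fraction` threshold are hypothetical. The reinitialization check assumes per-point `status` flags like those returned by OpenCV's `cv2.calcOpticalFlowPyrLK`, flattened to a sequence of 0/1 values.

```python
def augment_points(keypoints, body_pairs, samples_per_pair=3):
    """Add evenly spaced points along each limb segment.

    keypoints: dict mapping joint name -> (x, y) from the calibration pose estimate.
    body_pairs: list of (joint_a, joint_b) tuples, e.g. ("l_shoulder", "l_elbow").
    Returns the original keypoints followed by the interpolated points.
    """
    points = list(keypoints.values())
    for a, b in body_pairs:
        if a not in keypoints or b not in keypoints:
            continue  # joint not detected during calibration
        (xa, ya), (xb, yb) = keypoints[a], keypoints[b]
        for i in range(1, samples_per_pair + 1):
            t = i / (samples_per_pair + 1)  # fraction of the way from a to b
            points.append((xa + t * (xb - xa), ya + t * (yb - ya)))
    return points


def needs_reinit(status, initial_count, min_fraction=0.5):
    """Return True when too many tracked points have been lost.

    status: per-point flags for the current frame, 1 if the point was tracked
    (e.g. status.ravel() from cv2.calcOpticalFlowPyrLK).
    initial_count: number of points tracked right after (re)initialization.
    """
    tracked = sum(1 for s in status if s)
    return tracked < min_fraction * initial_count
```

With this split, the tracking loop would keep only points whose status is 1 and call pose estimation again (followed by `augment_points`) whenever `needs_reinit` returns True.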

Next Week Deliverables

Next week I will work on integrating the basic App class and webcam functionality with the UI and the Verbal Instruction generator that Tian and Chelsea have been working on. I also hope to do some basic user testing before our demo.

Tian’s Weekly Progress

Accomplished Tasks

- Implemented “Align” and “Bend” instructions: This week I implemented the logic to generate “Align” instructions when the user is practicing a symmetric pose such as Mountain Pose. Instead of giving two instructions “Move Left Elbow Down Towards Mid Chest” and “Move Right Elbow Down Towards Mid Chest”, the program will only give one instruction “Move Your Elbows Towards Mid Chest”. This is more concise and easier to understand. I also implemented “Bend” instructions based on our discussion last week. We will use “Bend” instead of “Move” when the user’s endpoint (i.e. hands or feet) is fixed on the ground or on the wall.

- Implemented instruction generation logic for Warrior 1 and DownDog: Since both Warrior 1 and DownDog require the instruction generator to consider both side-view angles and front-view angles, I refined the function for calculating front-view angles to compute standard side-view angles for these two poses. I also implemented the logic to generate side-view instructions such as “Straighten Your Back”.

- Thoroughly Tested Mountain Pose and Tree Pose: I thoroughly tested our basic template instruction generator on Tree Pose and Mountain Pose. To simulate real user behavior, I collected images of incorrect poses covering three scenarios: the user doing an entirely different pose, the user doing a similar pose, and the user doing the correct pose but needing minor improvements. These scenarios helped me tune the appropriate thresholds for different angles.
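
A hedged sketch of how the pieces described above might fit together: a three-point joint angle, a threshold check against a target angle, the "Bend" vs. "Move" verb rule, and the "Align"-style merging of matching left/right instructions. All function names, the assumed `Verb Side Part ...` instruction format, and the `PLURALS` table are illustrative assumptions, not the generator's actual code. Unlike the example in the report, which drops "Down" from the merged instruction, this sketch keeps the rest of the phrase unchanged.

```python
import math

# Irregular plurals for body parts; everything else just gets an "s".
PLURALS = {"Foot": "Feet"}


def joint_angle(a, b, c):
    """Angle in degrees at joint b, formed by points a-b-c, each (x, y)."""
    ang = math.degrees(
        math.atan2(c[1] - b[1], c[0] - b[0]) - math.atan2(a[1] - b[1], a[0] - b[0])
    )
    ang = abs(ang) % 360
    return 360 - ang if ang > 180 else ang  # report the interior angle (0-180)


def needs_correction(measured, target, threshold):
    # Only instruct when the deviation exceeds the tuned threshold.
    return abs(measured - target) > threshold


def verb_for(endpoint_fixed):
    # "Bend" when the hand or foot is planted on the ground or wall, else "Move".
    return "Bend" if endpoint_fixed else "Move"


def merge_symmetric(instructions):
    """Collapse matching Left/Right instructions into one plural instruction."""
    merged, used = [], set()
    for i, text in enumerate(instructions):
        if i in used:
            continue
        words = text.split()
        if len(words) >= 3 and words[1] in ("Left", "Right"):
            other = "Right" if words[1] == "Left" else "Left"
            mirror = " ".join([words[0], other] + words[2:])
            if mirror in instructions:
                j = instructions.index(mirror)
                if j not in used:
                    used.add(j)
                    part = PLURALS.get(words[2], words[2] + "s")
                    merged.append(" ".join([words[0], "Your", part] + words[3:]))
                    continue
        merged.append(text)
    return merged
```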

Deliverables I hope to complete next week

- Test Warrior 1 and DownDog: I will test these two poses as I did for Tree Pose and Mountain Pose. Currently, my instruction generation for these two poses is quite simple and does not account for less common mistakes. I will collect more static images of incorrect poses and perform thorough testing.

- Integrate Instruction Generator with Webcam and UI: I will work with Sandra and Chelsea next week to integrate our MVP for the demo on April 1st. This may involve substantial code refactoring and webcam testing on the instruction generator.

Chelsea’s Weekly Progress

Accomplished Tasks

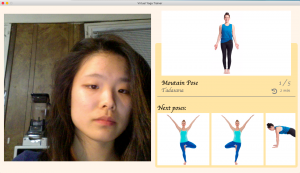

I finished implementing the UI components following the designs we wanted for our application as well as integrating the different parts of the UI component. I have also implemented functions for the buttons and timer events.

Below are screenshots of the landing page and the routine page.

Deliverables I hope to accomplish next week

After integrating the UI with the rest of the application, I hope to fully define the timer events for changing poses. I also want to add more elements to the app, such as tabs that show users which page they are on, a back button that lets the user exit the routine, and a progress bar that shows how closely their pose matches the standard pose.
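
The report does not say how the planned progress bar would measure closeness to the standard pose. One possible approach, assuming the app already has per-joint angles like those the instruction generator compares, is to average a per-joint score that falls linearly from 1 at the target angle to 0 at a hypothetical `max_deviation`. The function name and the 45-degree default are illustrative only, and the UI side is left out because the toolkit is not specified here.

```python
def pose_similarity(measured, target, max_deviation=45.0):
    """Return a 0-100 score suitable for a progress bar.

    measured, target: dicts mapping joint name -> angle in degrees.
    max_deviation: hypothetical deviation (degrees) at which a joint scores 0.
    Joints missing from either dict are ignored.
    """
    joints = [j for j in target if j in measured]
    if not joints:
        return 0.0
    per_joint = [
        max(0.0, 1.0 - abs(measured[j] - target[j]) / max_deviation) for j in joints
    ]
    return 100.0 * sum(per_joint) / len(per_joint)
```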