Team

Changes to schedule:

Ilan is extending his memory interface work by at least 1 week.

Brandon is extending his work on sending the video stream over sockets by at least 1 week.

Edric is pushing back the edge detection implementation by at least 1 week.

Major project changes:

Our Wi-Fi throughput is lower than we need, so for now we intend to work around it by lowering the video stream bitrate. Once more of the project’s functionality is working, we’ll revisit the Wi-Fi quality issues so we can raise the bitrate again.

On the compute side, we have essentially decided to move forward with Vivado’s HLS tool.

Brandon

For the sixth week of work on the project, I was able to successfully boot up and configure the Pis! It took a decent amount of extra work outside of lab, but after receiving the correct USB cables, I was able to boot into the Pis and connect them to the CMU DEVICE network. To simplify usage, we’re navigating the Pis with a monitor over mini HDMI cables rather than SSHing in.

After I finished the initial setup, I moved on to camera functionality and networking over UDP. I was able to display a video feed from the camera and convert a video frame to an RGB array and then to a grayscale array. When I began working on the networking portion of the project, though, I ran into some issues. The biggest is that we are getting significantly lower bandwidth than expected on both the Pis (~5 Mbps) and the ARM core (~20 Mbps). We therefore decided to revert to my original plan of using H.264 compression to fit the video within the bandwidth available on the Pis. Unfortunately, we haven’t yet been able to send the video over the network using UDP, but we plan on working through the weekend so that we’re hopefully ready for our interim demo by Monday.
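As a rough illustration of the frame conversion and of why uncompressed frames don’t fit in ~5 Mbps, here is a minimal sketch in plain Python. The BT.601 luma weights, the 30 fps frame rate, and the helper names `rgb_to_gray` and `raw_bitrate_mbps` are assumptions made for the example, not details of our actual code; the 1280x720 resolution is our 720p target.

```python
def rgb_to_gray(frame):
    """Convert rows of (r, g, b) tuples into rows of 8-bit luma values."""
    # BT.601 integer luma weights (assumed; other weightings are possible).
    return [[(299 * r + 587 * g + 114 * b) // 1000 for (r, g, b) in row]
            for row in frame]


def raw_bitrate_mbps(width, height, bytes_per_pixel, fps):
    """Bitrate of an uncompressed video stream in megabits per second."""
    return width * height * bytes_per_pixel * 8 * fps / 1e6


# Tiny 2x2 test frame: red, green / blue, white.
frame = [[(255, 0, 0), (0, 255, 0)],
         [(0, 0, 255), (255, 255, 255)]]
gray = rgb_to_gray(frame)

# Uncompressed 720p grayscale at an assumed 30 fps.
gray_720p_mbps = raw_bitrate_mbps(1280, 720, 1, 30)
print(gray)
print(gray_720p_mbps)
```

Under those assumptions, even a grayscale 720p stream needs roughly 221 Mbps uncompressed, which is more than 40 times what the Pis are currently getting. That gap is the motivation for compressing the stream.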

Completing the Pi setup was a big step in the right direction for our schedule, but the new bandwidth issue that’s preventing us from sending the video is worrying. If we’re able to send the video stream over the network by the demo, though, we will be right on schedule, if not ahead of it.

Ilan

Personal accomplishments this week:

- Did some testing of Wi-Fi on ARM core

- Had to configure Wi-Fi to re-enable on boot, since it kept turning off. Also saw some slowness and freezing over SSH, which is a concern once we start using the ARM core for more intense processing.

- Found that current bandwidth is ~20 Mbps, which is too low for what we need. For now we’re going to lower the bitrate as a temporary way to keep moving forward. Later we’ll try changing the driver, looking into other tweaks, or possibly ordering an antenna to get better performance.

- Continued to work on the memory interface, but wasn’t able to finalize the full setup. I’m going to work on this more tomorrow (3/31) to have something for the demo, but starting Wednesday I focused on helping Brandon and Edric so that we have more tangible, visual results to show. Brandon and I worked on getting the Pis up and running, and I helped him with some of the initial camera setup. I also gave him a starting point for getting a lower bitrate out of the camera, so we can still send video over Wi-Fi, and for piping the camera output into UDP connections in Python. I helped Edric set up HLS and get started on the implementation of the Gaussian filter. We got an implementation working, and Edric is going to keep tweaking it to improve performance. Tomorrow (3/31), he and I will try to connect the Gaussian and intensity gradient blocks (we’re going to try to implement the latter beforehand), and then I’ll continue working on the memory interface. The memory interface’s PL input is defined by the input needs of Edric’s final Gaussian filter, so my work will change a bit, and I’ve reprioritized to help him finalize that first.
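The idea of piping a byte stream into UDP datagrams in Python can be sketched roughly as follows. This is only an illustration under assumptions: the `send_stream` helper and the 1024-byte chunk size are hypothetical, and an in-memory buffer on the loopback interface stands in for the camera’s encoded output and the real network.

```python
import io
import socket

CHUNK_SIZE = 1024  # hypothetical payload size, below a typical 1500-byte MTU


def send_stream(stream, sock, addr, chunk_size=CHUNK_SIZE):
    """Read a binary stream and send it as UDP datagrams; return the count."""
    sent = 0
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:  # end of stream
            break
        sock.sendto(chunk, addr)
        sent += 1
    return sent


# Loopback demo: the receiver binds to an OS-assigned port.
receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
receiver.bind(("127.0.0.1", 0))
receiver.settimeout(2.0)
sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)

payload = bytes(range(256)) * 10  # 2560 bytes standing in for encoded video
count = send_stream(io.BytesIO(payload), sender, receiver.getsockname())
received = b"".join(receiver.recvfrom(CHUNK_SIZE)[0] for _ in range(count))

sender.close()
receiver.close()
print(count, received == payload)
```

In a real pipeline the `stream` argument could be something like `sys.stdin.buffer` fed by the camera’s encoder. Note that UDP guarantees neither delivery nor ordering, so over Wi-Fi the receiver would likely need sequence numbers or some tolerance for lost chunks; the loopback demo sidesteps that.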

Progress on schedule:

- I’m a little behind where I would like to be, and the Wi-Fi issues we’ve experienced on both the ARM core and the Pis have been a bit of a setback. My goal for the second half of the week was to help Brandon and Edric so we can have more of the functional part of the system ready for the demo. I’ll likely be extending my schedule by at least 1 week to finalize the memory interface between PS and PL.

Deliverables next week:

- Memory interface prototype, with a unit test to verify functionality.

Edric

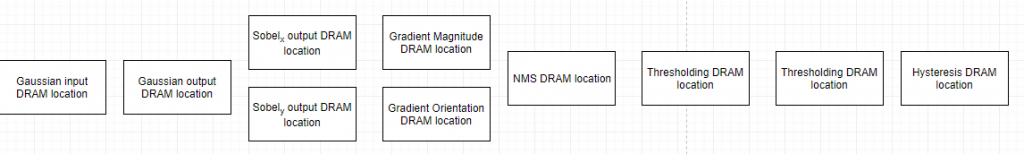

After researching different possibilities for implementing the Canny algorithm, I’ve decided to go with Vivado’s High Level Synthesis (HLS) tools. The motivation for this decision is that while the initial stages (simple 2D convolution for Gaussian filters) aren’t particularly demanding to write directly in Verilog, the later steps involving trigonometry will prove to be more complex. HLS will allow us to keep the algorithm itself simple while still letting us customize the generated hardware via HLS’s pragmas.

So far I have an implementation of the Gaussian blur that both simulates and synthesizes to a Zynq IP block. Preliminary analysis shows that the latency is quite high, but DSP slice usage is minimal. More tweaking will be needed to lower the latency; however, since current testing is done on 1080p images, dropping to the target 720p should account for the majority of the speedup.
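To put a number on the resolution change, the arithmetic below compares pixel counts. It assumes the standard 1920x1080 and 1280x720 frame sizes and, as a simplification, that the blur’s latency scales linearly with the number of pixels processed.

```python
def pixel_count(width, height):
    """Number of pixels in a frame."""
    return width * height


pixels_1080p = pixel_count(1920, 1080)  # 2,073,600 pixels
pixels_720p = pixel_count(1280, 720)    # 921,600 pixels

# Expected speedup if latency is proportional to pixel count (an assumption).
speedup = pixels_1080p / pixels_720p
print(speedup)  # 2.25
```

Under that assumption, moving to 720p alone would cut latency by a factor of 2.25; anything beyond that has to come from tuning the design.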

For the demo, I aim to implement the next two stages of Canny (applying the Sobel filter in both the X and Y directions, then combining the two). Along with this, I’d like to get a software benchmark to compare the HLS output against, ideally something built on a library like OpenCV. Thankfully, HLS gives us access to a simulator that we can use to compare images.
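A software reference for these stages could look roughly like the sketch below. It is written in plain Python to stay dependency-free, so it is a stand-in for an OpenCV-based benchmark rather than the benchmark itself. The 3x3 Gaussian kernel, the zeroed borders, and all function names are assumptions made for the example.

```python
import math

GAUSS_3X3 = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]    # weights sum to 16
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # horizontal gradient
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # vertical gradient


def convolve3x3(img, kernel):
    """Apply a 3x3 kernel to interior pixels; border pixels are left at 0."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            acc = 0
            for ky in range(3):
                for kx in range(3):
                    acc += kernel[ky][kx] * img[y + ky - 1][x + kx - 1]
            out[y][x] = acc
    return out


def gaussian_blur(img):
    """Blur with the 3x3 kernel, normalizing by the kernel sum."""
    return [[v // 16 for v in row] for row in convolve3x3(img, GAUSS_3X3)]


def gradient_magnitude(img):
    """Combine the X and Y Sobel responses into a gradient magnitude."""
    gx = convolve3x3(img, SOBEL_X)
    gy = convolve3x3(img, SOBEL_Y)
    return [[math.hypot(a, b) for a, b in zip(rx, ry)]
            for rx, ry in zip(gx, gy)]


def max_abs_diff(a, b):
    """Largest per-pixel difference between two same-sized images."""
    return max(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))


# Vertical step edge: dark left half, bright right half.
img = [[0, 0, 255, 255] for _ in range(4)]
mag = gradient_magnitude(img)
print(mag[1][1], mag[1][2])
```

A helper like `max_abs_diff` is one way to compare the simulator’s output image against the reference. Border handling is a design choice that has to match between the two implementations, otherwise the edges of the image will differ even when the interior agrees.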

I’m a little behind on the actual implementation of Canny, but now that HLS is (kind of) working, writing the code itself should be quite easy. The difficult part will be configuring the pragmas so that the compute blocks meet our requirements.