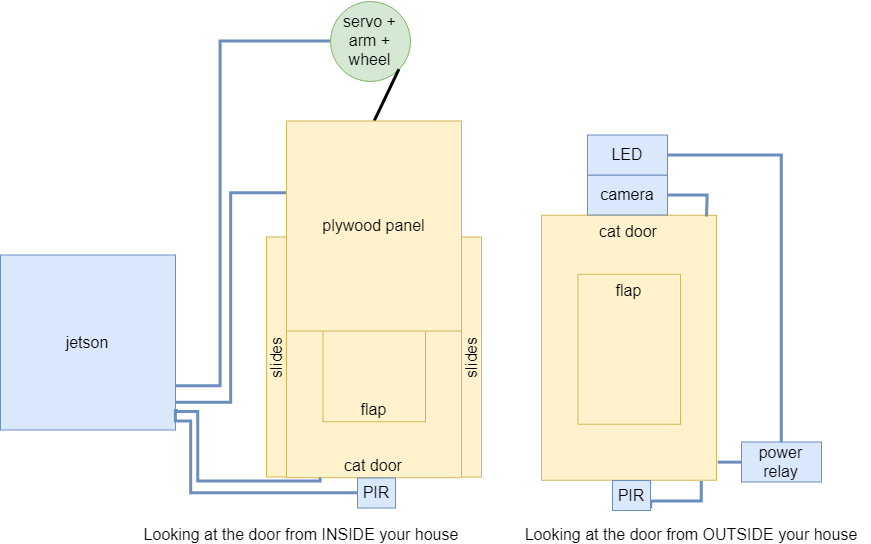

In preparation for the design review, I did a dry run for Sam. Jing and I went to Home Depot to pick up the plywood and a few other small hardware parts. I wrote the abstract, intro, requirements, architecture overview, and future work sections of the project paper. I ordered the remaining parts.

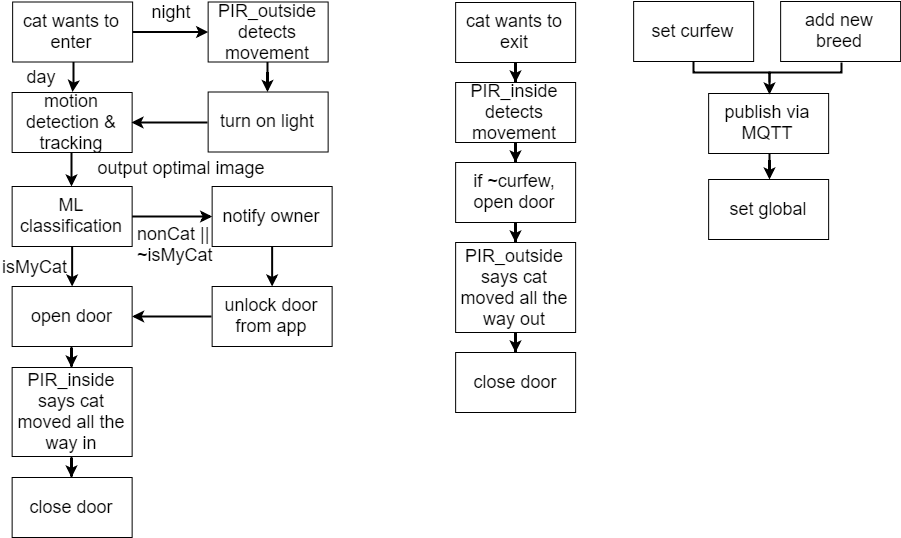

I responded to the design review feedback in the design document. After consulting a few instructors and people more knowledgeable than us in the machine vision field, I learned that our testing options, in order of preference, are: live animals, taxidermy, video feeds, printed high-resolution pictures, and stuffed animals. My friend has a cat and Jing’s friend has a cat, but animals aren’t allowed on campus. Thus we will record footage of those cats interacting with the system and of the system responding appropriately. We won’t be able to find live raccoons to test our system on. A taxidermy raccoon is as close as we could get to a live one, but taxidermy is expensive. We have decided instead to look for videos similar to the footage our camera would actually capture of a raccoon. This is better than printed high-resolution photos because footage of a moving animal replicates reality more closely than a camera feed of a static printed picture. Stuffed animals are not a good test of our machine learning algorithm because they don’t represent actual animals. A machine learning model that classifies stuffed cats as real cats would be considered a poorly trained classifier.

There is no power metric because the device will be plugged into a wall outlet.

The next large milestone is to have version 1 of the door finished by March 22. I am a little anxious about finding power tools and small metal parts to construct the door. To alleviate that, I am proactively working on obtaining the right hardware from Home Depot and getting the plywood laser cut first. Whenever construction of the door is delayed while waiting on parts, I can context switch to writing the computer vision Python program for motion detection and tracking.

I will have the door parts laser cut by the end of next week.