This week I began comparing our training data with the data we are getting from our microphone. I computed the cosine similarity between the frequency magnitude spectra of the two sound files and found that the spectra are very similar, with a cosine similarity of 0.87. I also began designing our testing and validation setup, which is meant to mimic a human heart. I found that we would not need a gel and that playing the sounds through a speaker would be sufficient.
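For reference, a cosine similarity between magnitude spectra like the one above could be computed roughly as follows. This is a minimal sketch, assuming both recordings are already loaded as 1-D NumPy arrays at the same sample rate. The function name `spectral_cosine_similarity` is hypothetical, and this is not necessarily the exact procedure that produced the 0.87 figure.

```python
import numpy as np

def spectral_cosine_similarity(x, y):
    """Cosine similarity between the FFT magnitude spectra of two signals.

    Assumption: both signals share the same sample rate. They are truncated
    to the shorter length so that their frequency bins line up.
    """
    n = min(len(x), len(y))
    mag_x = np.abs(np.fft.rfft(x[:n]))  # magnitude spectrum of the first file
    mag_y = np.abs(np.fft.rfft(y[:n]))  # magnitude spectrum of the second file
    return float(np.dot(mag_x, mag_y) / (np.linalg.norm(mag_x) * np.linalg.norm(mag_y)))
```

Because only magnitudes are compared, two recordings of the same tone with different phase or loudness still score close to 1. If the two files had different sample rates, one would need to be resampled first.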

Team – Week 3 Status Report

What are the most significant risks that could jeopardize the success of the project?

This week, the most significant risk we identified is whether our filtering techniques are effective enough for the sound data to be classified. If the classification results are poor, the cause could be either our classification method or our filtering method.

How are these risks being managed? What contingency plans are ready?

Eri and Ryan are once again meeting frequently and working together on all aspects of classification. Some issues, such as the CNN's accuracy never settling on a final value, suggest that the current CNN structure is not well suited to the input data. If that is the case, the contingency is either to improve the filtering technique or to change the structure of the CNN.

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

We did not make any changes to the requirements/block diagram/system spec this week.

Provide an updated schedule if changes have occurred.

There have been no changes.

Ryan – Week 3 Status Report

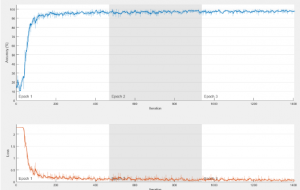

This week I worked on classifying the heart sound testing data as normal vs. abnormal using a convolutional neural network (CNN). The design for the CNN is as follows:

Since we are no longer using an external cloud service to run our analysis, I have to reduce the size of the data using a MaxPool layer, which downsamples the input by keeping only the maximum value within each local window and discarding the rest. The ReLU layer is mainly used to introduce non-linearity, but it is also cheap to compute: it simply sets every negative value to zero, and the resulting zeros make the activations sparse. This structure worked well for me when classifying images of handwritten digits, so I wanted to see whether it would also work for audio data. The input to the neural network was the filtered training sound files from week 2. If this approach is unsuccessful, I will have to either improve the filtering technique using Eri’s research or change the structure of the CNN.
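To make the two layer types concrete, here is a minimal NumPy sketch of what a 1-D ReLU and a 1-D max-pooling layer compute. It is illustrative only. The window size and stride of 2 are assumptions, the function names are hypothetical, and the actual network would use a deep learning framework's built-in layers rather than these helpers.

```python
import numpy as np

def relu(x):
    # Negative values become 0; non-negative values pass through unchanged.
    return np.maximum(x, 0)

def max_pool_1d(x, size=2, stride=2):
    # Keep only the largest value in each window of `size` samples,
    # moving the window forward by `stride` samples each time.
    n_windows = (len(x) - size) // stride + 1
    return np.array([x[i * stride : i * stride + size].max() for i in range(n_windows)])

x = np.array([-1.0, 3.0, 2.0, -4.0, 5.0, 0.5])
print(relu(x))               # [0.  3.  2.  0.  5.  0.5]
print(max_pool_1d(relu(x)))  # [3. 2. 5.]
```

With a window of 2 and a stride of 2, pooling halves the length of the signal, which is where the reduction in data size comes from.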

This is what the results looked like. The accuracy never converged to a final value; it kept fluctuating over the final iterations, so the structure of the CNN will have to be changed over the next few weeks.

I am on schedule with the classification.

Ari – Week 3 Status Report

This week I made progress on several fronts. First, I transferred the signal from our stethoscope to a computer. I also visualized the signal on an oscilloscope and recorded the sounds picked up by the microphone.

My goal for next week is to apply the algorithm Eri and Ryan are developing to our testing data to see how it performs. I also want to think more about our testing process and find ways to improve it.

Journal Entry Week 3: Eri

- What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

- This week, I finished removing noise from the signal. I built on last week's work, but instead of working with the amplitude alone, I found peaks of the Shannon energy, since it emphasizes medium-intensity signals while attenuating both high- and low-intensity ones.

- I was also able to label each peak as S1 or S2, since the S1 heart sound has lower-frequency content than S2.

- One problem I ran into with the Shannon energy was that it sometimes completely removed low-intensity signals that were not actually noise.
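The Shannon energy envelope step can be sketched as below. This is a minimal version under stated assumptions: the frame length, hop size, zero threshold, and function names are hypothetical choices, not necessarily the parameters Eri used. Per sample, the Shannon energy is `-x**2 * log(x**2)` on a signal scaled to [-1, 1]. It is zero at both x = 0 and |x| = 1 and largest in between, which is why medium-intensity components are emphasized and both quiet and very loud samples are attenuated.

```python
import numpy as np

def shannon_energy_envelope(x, frame=20, hop=10, eps=1e-12):
    """Normalized average Shannon energy, one value per frame.

    frame, hop: window length and step in samples (assumed values).
    """
    x = x / (np.max(np.abs(x)) + eps)       # scale the signal to [-1, 1]
    e = -(x ** 2) * np.log(x ** 2 + eps)    # per-sample Shannon energy; eps avoids log(0)
    n_frames = 1 + (len(e) - frame) // hop
    env = np.array([e[i * hop : i * hop + frame].mean() for i in range(n_frames)])
    return (env - env.mean()) / (env.std() + eps)  # zero mean, unit variance

def find_envelope_peaks(env, threshold=0.0):
    """Frame indices of local maxima of the envelope above `threshold`."""
    idx = np.arange(1, len(env) - 1)
    is_peak = (env[idx] > env[idx - 1]) & (env[idx] >= env[idx + 1]) & (env[idx] > threshold)
    return idx[is_peak]
```

The simple local-maximum test can report several peaks on a flat stretch of the envelope. `scipy.signal.find_peaks`, with its `height` and `distance` arguments, is a more robust alternative. Lowering the threshold keeps more quiet sounds, which bears on the problem of real low-intensity sounds being removed, though it would also let more noise through.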

- Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Progress is slightly behind schedule, since I was hoping to have clean, segmented, and filtered data to work with by the end of this week. So far my algorithm can locate S1 and S2, but it does not yet segment five full beats. I expect this next step to be straightforward, since it only needs to start collecting data at the first S1 and stop at the fifth S2. I plan to finish it by the end of tomorrow, which should put our team back on schedule.

- What deliverables do you hope to complete in the next week?

- Realistically, since I have several midterms next week, I do not expect to have a working classification algorithm that determines whether a heart sound is normal or abnormal yet. However, I will do further research on the SVM (support vector machine) algorithm and start experimenting with SVM classification on the segmented, filtered data.
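The two steps Eri describes, labeling each peak as S1 or S2 by its frequency content and then cutting the recording from the first S1 to the fifth S2, could be sketched as follows. This is a minimal sketch under assumptions. S1 versus S2 is decided by comparing each peak's spectral centroid to the median centroid, which is one simple way to use the fact that S1 has lower-frequency content than S2 and not necessarily Eri's method. The function names and the `half_window` and `tail` parameters are hypothetical.

```python
import numpy as np

def label_s1_s2(x, peaks, fs, half_window):
    """Label each peak "S1" or "S2" from the frequency content around it.

    Assumed heuristic: a peak whose surrounding window has a spectral
    centroid below the median centroid is labeled S1 (lower frequency),
    otherwise S2.
    """
    centroids = []
    for p in peaks:
        seg = x[max(0, p - half_window) : min(len(x), p + half_window)]
        mags = np.abs(np.fft.rfft(seg))
        freqs = np.fft.rfftfreq(len(seg), d=1.0 / fs)
        centroids.append(np.sum(freqs * mags) / (np.sum(mags) + 1e-12))
    cutoff = np.median(centroids)
    return ["S1" if c < cutoff else "S2" for c in centroids]


def segment_beats(x, peaks, labels, n_beats=5, tail=0):
    """Slice x from the first S1 peak through the n_beats-th S2 peak after it.

    tail: hypothetical margin of extra samples kept after the last S2 peak
    so that the whole S2 sound is included. Returns None if there is no S1
    or if fewer than n_beats S2 peaks follow it.
    """
    if "S1" not in labels:
        return None
    first = labels.index("S1")
    s2_after = [p for p, lab in zip(peaks[first:], labels[first:]) if lab == "S2"]
    if len(s2_after) < n_beats:
        return None
    start = peaks[first]
    end = min(len(x), s2_after[n_beats - 1] + tail + 1)
    return x[start:end]
```

Comparing against the median only makes sense when the recording contains roughly equal numbers of S1 and S2 peaks. A common alternative is to use timing instead: the systolic interval (S1 to S2) is normally shorter than the diastolic interval (S2 to the next S1).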