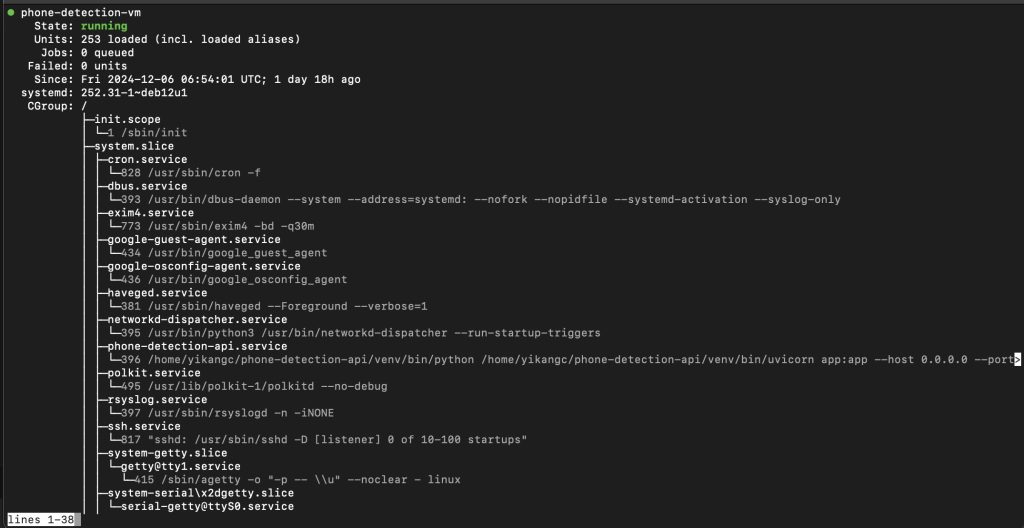

This week, I optimized the YOLO-based object detection system by migrating it to the cloud on a free-tier plan. I built an API endpoint that lets the Raspberry Pi send images to the cloud for processing and receive the detection results. The motivation came from our 20 tests on the Pi: YOLO on its own works well, but once we add other tasks such as computer vision (CV) for feedback control, the Pi does not have enough computational power to run everything, and running YOLO locally introduces noticeable delay. With YOLO moved to the cloud, the Pi can devote more of its computational power to other tasks, such as feedback control for the charging pad and the gantry system. The cloud application is registered as a service so that it is always available. Across 30 tests, the average detection time was 1.3 s, which is within our latency requirement, and the accuracy remains around 90% because we are using the same model.

(YOLO service on the cloud; endpoint: http://35.196.159.246:8000/detect_phone)
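The Pi-side call and the 30-run timing check can be sketched with the standard library. This is a minimal sketch, assuming the endpoint accepts a raw JPEG body over POST and replies with JSON. The report does not specify the request format, so `detect_phone`, `average_latency`, the `image/jpeg` content type and the 30-trial default are illustrative choices, not the actual client.

```python
import json
import statistics
import time
import urllib.request
from typing import Callable, List, Tuple

# Endpoint taken from the report; the request/response format is an assumption.
DETECT_URL = "http://35.196.159.246:8000/detect_phone"


def detect_phone(jpeg_bytes: bytes, url: str = DETECT_URL, timeout: float = 10.0) -> dict:
    """POST raw JPEG bytes to the detection service and decode the JSON reply.

    Assumption: the service accepts an image/jpeg body. If it expects
    multipart/form-data instead, the request body must be built differently.
    """
    request = urllib.request.Request(
        url,
        data=jpeg_bytes,
        headers={"Content-Type": "image/jpeg"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def average_latency(
    send: Callable[[bytes], dict], jpeg_bytes: bytes, trials: int = 30
) -> Tuple[float, List[float]]:
    """Call `send` `trials` times and return (mean seconds, per-call timings)."""
    timings: List[float] = []
    for _ in range(trials):
        start = time.perf_counter()
        send(jpeg_bytes)  # round trip: upload, remote inference, response
        timings.append(time.perf_counter() - start)
    return statistics.mean(timings), timings
```

On the Pi, something like `mean_s, _ = average_latency(detect_phone, jpeg_bytes)` would repeat the timing check. Passing `send` in as a parameter also lets the timing helper be tested with a stub instead of the live service.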

I also improved the vision detection workflow by changing how images are processed. Instead of sending the entire picture for detection, the Raspberry Pi now sends only a cropped image of the table area to the cloud. This reduces the data size and processing time and also improves accuracy. Additionally, I updated the gantry system’s coordinate calculations to match the revised coordinate system. We tested this about 20 times, and in every run the alignment was within 1.5 cm.
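Because the cloud now sees only the cropped table region, any box it returns is in crop pixels and must be shifted by the crop offset before it is converted to gantry units. The sketch below shows that chain under stated assumptions: the camera looks straight down, one linear millimetre-per-pixel scale applies to both axes, and `TableROI`, `origin_px` and `mm_per_px` are hypothetical names and calibration parameters, not values from this project.

```python
from dataclasses import dataclass
from typing import Tuple

import numpy as np


@dataclass(frozen=True)
class TableROI:
    """Hypothetical pixel rectangle of the table in the full camera frame."""
    x0: int
    y0: int
    x1: int
    y1: int


def crop_table(frame: np.ndarray, roi: TableROI) -> np.ndarray:
    """Keep only the table region (NumPy slicing returns a view, not a copy)."""
    return frame[roi.y0:roi.y1, roi.x0:roi.x1]


def box_center(box: Tuple[float, float, float, float]) -> Tuple[float, float]:
    """Center of a box given as (x_min, y_min, x_max, y_max)."""
    return (box[0] + box[2]) / 2.0, (box[1] + box[3]) / 2.0


def crop_to_frame(x: float, y: float, roi: TableROI) -> Tuple[float, float]:
    """Shift a point from crop pixels back into full-frame pixels."""
    return x + roi.x0, y + roi.y0


def frame_to_gantry(
    x_px: float, y_px: float, origin_px: Tuple[float, float], mm_per_px: float
) -> Tuple[float, float]:
    """Convert full-frame pixels to gantry millimetres.

    Assumes a top-down camera, a gantry origin at `origin_px` in the frame,
    and the same linear scale on both axes.
    """
    return (x_px - origin_px[0]) * mm_per_px, (y_px - origin_px[1]) * mm_per_px
```

With a 0.5 mm/px scale, for instance, a detection centred at (10, 20) in a crop whose top-left corner coincides with the gantry origin maps to (5 mm, 10 mm).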

In addition, for feedback control, I use computer vision to detect the indicator light on the charging pad as a real-time feedback mechanism. This approach gives immediate feedback on whether a device is charging, which is faster and more reliable than relying on the app. I initially tried reading the cloud database from the app, but the app-based feedback suffers from a slow polling rate, especially when the app runs in the background, so light-based feedback is the better approach. I tested it on about 10 images, and only once did it fail to identify the blue light on the back of the charging pad (which indicates charging), so I may still need to tune the HSV threshold values.
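The light check reduces to an HSV range test over the region around the indicator. A minimal sketch, assuming OpenCV's HSV convention (hue 0 to 179) and a simple pixel-fraction rule. The bounds `BLUE_LOWER`/`BLUE_UPPER` and `min_fraction` are hypothetical placeholders to be tuned, which is the adjustment the one missed detection points to. The mask is written with NumPy so the logic is easy to test; on the Pi the HSV input would come from `cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)`, and `cv2.inRange` performs the same range test.

```python
import numpy as np

# Hypothetical OpenCV-style HSV bounds for the blue indicator (H in 0..179).
# Placeholders to be tuned, not measured values.
BLUE_LOWER = np.array([100, 120, 120], dtype=np.uint8)
BLUE_UPPER = np.array([130, 255, 255], dtype=np.uint8)


def blue_mask(hsv: np.ndarray, lower=BLUE_LOWER, upper=BLUE_UPPER) -> np.ndarray:
    """Boolean mask of pixels whose H, S and V all fall inside [lower, upper]."""
    return np.all((hsv >= lower) & (hsv <= upper), axis=-1)


def is_charging(hsv_roi: np.ndarray, min_fraction: float = 0.02) -> bool:
    """Report charging when at least `min_fraction` of the region's pixels are blue."""
    return bool(blue_mask(hsv_roi).mean() >= min_fraction)
```

A fraction threshold, rather than requiring a single blue pixel, makes the check less sensitive to isolated noisy pixels under changing room lighting.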

As mentioned before, I conducted several unit and system-level tests to evaluate performance and identify areas for improvement. Unit tests included verifying API response times for YOLO processing, ensuring coordinate adjustments matched the new cropped image detection system, and validating the detection of the charging pad’s light under different lighting conditions.

I am currently on schedule; next week I will mainly fix small bugs and integrate all the components.