The first task I completed was finalizing the system implementation and testing plan in our design report. This plan will guide the integration and verification of the project’s three core subsystems: the gantry system, the vision system, and the charging system. It outlines detailed steps for ensuring that each subsystem functions as expected and works correctly with the others. For instance, the testing plan includes scenarios that simulate real-world usage, such as a phone placed at different orientations or positions on the table, or the gantry system having to make rapid adjustments. It also includes edge-case tests that check whether the system can handle multiple phones or other objects placed on the table at the same time.

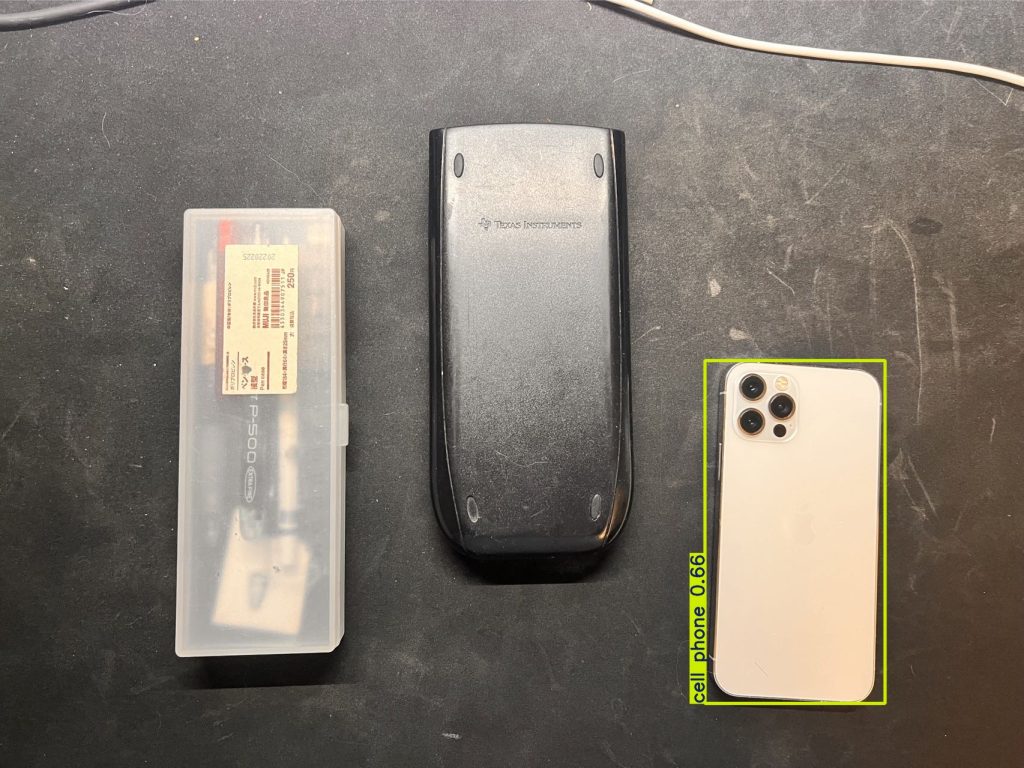

In parallel, I worked extensively on the computer vision system. Using the YOLO (You Only Look Once) object detection algorithm, I developed the first version of our vision system, which identifies the location of a phone on the table with an accuracy exceeding 90%. Because our parts have not arrived yet, I used my own camera to take pictures and test whether the phone is detected. To simulate the charging table, I placed the phones face down, so the camera captures the backs of the phones. This first version detects phones reliably in most situations, which is essential because the gantry system depends on knowing where the phone is. The YOLO model, chosen because the algorithm is known for its speed and precision in object detection, was trained on a custom dataset intended to reflect the conditions at our table: the camera angle, the lighting, and a range of phone models. I dedicated significant time to training the model and testing it against different cases, and the results so far are promising. The system detects phones in a variety of orientations and under different lighting conditions, which gives us confidence in its real-world application.

However, there is still room for optimization, particularly in reducing the time the system takes to process each frame and send the phone’s coordinates to the gantry system. Accuracy can also be improved in some edge cases, such as when the lighting is too dim or too bright.
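As a rough illustration of the hand-off from detection to the gantry, the sketch below turns detector boxes into table coordinates. It is a minimal sketch, not our implementation: the `Detection` class, the `"phone"` label, the 0.5 confidence threshold, and the purely linear pixel-to-millimetre mapping (an overhead camera whose frame covers exactly the table, with no lens or perspective correction) are all assumptions. Ordering targets by confidence is one hypothetical way to handle several phones on the table at once.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Detection:
    """One bounding box in pixel coordinates (hypothetical detector output format)."""
    x1: float
    y1: float
    x2: float
    y2: float
    confidence: float
    label: str


def pixel_to_table(u: float, v: float,
                   image_size: Tuple[int, int],
                   table_size_mm: Tuple[float, float]) -> Tuple[float, float]:
    """Scale a pixel coordinate linearly to table millimetres.

    Assumes an overhead camera whose frame covers exactly the table surface,
    with no lens distortion or perspective correction.
    """
    width_px, height_px = image_size
    width_mm, height_mm = table_size_mm
    return u * width_mm / width_px, v * height_mm / height_px


def phone_targets(detections: Sequence[Detection],
                  image_size: Tuple[int, int],
                  table_size_mm: Tuple[float, float],
                  min_conf: float = 0.5,
                  phone_label: str = "phone") -> List[Tuple[float, float]]:
    """Return table coordinates (mm) of confident phone detections, most confident first."""
    phones = [d for d in detections
              if d.label == phone_label and d.confidence >= min_conf]
    phones.sort(key=lambda d: d.confidence, reverse=True)
    targets = []
    for d in phones:
        # The centre of the bounding box approximates the centre of the phone.
        cx = (d.x1 + d.x2) / 2
        cy = (d.y1 + d.y2) / 2
        targets.append(pixel_to_table(cx, cy, image_size, table_size_mm))
    return targets


if __name__ == "__main__":
    frame = [
        Detection(100, 100, 200, 300, 0.90, "phone"),
        Detection(400, 200, 480, 280, 0.95, "phone"),
        Detection(300, 300, 350, 350, 0.80, "cup"),  # other object: ignored
        Detection(10, 10, 40, 40, 0.30, "phone"),    # low confidence: ignored
    ]
    print(phone_targets(frame, image_size=(640, 480), table_size_mm=(800, 600)))
```

With a 640×480 image mapped onto an assumed 800×600 mm table, this prints `[(550.0, 300.0), (187.5, 250.0)]`. A real setup would likely replace the linear scaling with a calibrated perspective transform once the camera is mounted.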

I went through several iterations of testing, refinement, and retraining to reach the desired accuracy. I adjusted hyperparameters and improved the training dataset to enhance the system’s performance. I also debugged cases where the system initially struggled to detect phones placed at extreme angles or partially obscured by other objects. These issues have largely been resolved, and I am confident that the system is now reliable enough for initial integration with the gantry system.
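To make an accuracy figure repeatable across retraining iterations, one option (an assumption here, not necessarily how the reported number was obtained) is to score each model on a fixed set of labelled photos and count a labelled phone as found when some prediction overlaps it with an intersection over union (IoU) of at least 0.5. The sketch below computes that recall; the `(x1, y1, x2, y2)` box format, the 0.5 threshold, and the greedy one-to-one matching are illustrative choices.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def recall_at_iou(images: Sequence[Tuple[Sequence[Box], Sequence[Box]]],
                  threshold: float = 0.5) -> float:
    """Fraction of labelled phones matched by a prediction with IoU >= threshold.

    `images` holds one (predicted_boxes, ground_truth_boxes) pair per test photo.
    Each prediction can match at most one ground-truth box (greedy matching).
    """
    found = 0
    total = 0
    for predictions, truths in images:
        unused: List[Box] = list(predictions)
        for truth in truths:
            total += 1
            if not unused:
                continue  # no predictions left: this phone is missed
            best = max(unused, key=lambda p: iou(p, truth))
            if iou(best, truth) >= threshold:
                found += 1
                unused.remove(best)
    return found / total if total else 0.0


if __name__ == "__main__":
    test_set = [
        ([(0, 0, 10, 10)], [(1, 1, 10, 10)]),  # IoU 0.81: counted as found
        ([], [(0, 0, 5, 5)]),                  # missed phone
    ]
    print(recall_at_iou(test_set))
```

Keeping the labelled set fixed (including the hard cases such as extreme angles or partial occlusion) makes it easier to tell whether a hyperparameter change or dataset update actually helped.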

At this stage, I am happy to report that my progress is on schedule. Developing the vision system and completing the design report were the major milestones planned for this week, and we are moving forward according to the timeline we established. The next step is to begin developing the other subsystems as soon as our parts arrive. I will also continue to improve the vision algorithm and to develop the app for our smart charging table.

For next week’s deliverables, my focus will be on continuing to develop our current app. I also plan to optimize the vision system. Although it currently performs well, it can be made faster and more accurate, particularly in challenging scenarios such as detecting phones in low light or at difficult angles. Reducing response latency is also a priority, since faster detection will let the gantry system move more quickly and efficiently.
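To check whether latency optimizations actually help, per-frame processing time can be measured before and after each change. The sketch below is a generic timing harness under stated assumptions: `process_frame` is a hypothetical placeholder for whatever function runs detection on one frame, and a few warm-up frames are excluded from timing because the first calls can be slower than steady-state ones.

```python
import statistics
import time
from typing import Any, Callable, Dict, Sequence


def measure_latency(process_frame: Callable[[Any], Any],
                    frames: Sequence[Any],
                    warmup: int = 3) -> Dict[str, float]:
    """Time `process_frame` on each frame after `warmup` untimed calls.

    Returns the median and worst-case per-frame time in milliseconds.
    """
    if len(frames) <= warmup:
        raise ValueError("need more frames than warm-up calls")
    for frame in frames[:warmup]:
        process_frame(frame)  # untimed warm-up
    timings_ms = []
    for frame in frames[warmup:]:
        start = time.perf_counter()
        process_frame(frame)
        timings_ms.append((time.perf_counter() - start) * 1000.0)
    return {
        "median_ms": statistics.median(timings_ms),
        "max_ms": max(timings_ms),
    }


if __name__ == "__main__":
    # Stand-in workload (hypothetical); replace with the real detection call.
    def fake_detect(frame):
        return sum(range(10_000))

    print(measure_latency(fake_detect, frames=list(range(20))))
```

Reporting the worst case alongside the median matters here, because an occasional slow frame is what would make the gantry hesitate.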