Unfortunately, we decided not to implement diodes in our project; Devan was having too much difficulty with the implementation. On the positive side, our circuit classification accuracy has gone up, because switches were previously misclassified as diodes in some cases. The component classification accuracy has stayed the same at around 83%, and the circuit classification accuracy is now around 86%. Because we removed diodes, we have to redraw the test images that contained them. We plan to continue testing tomorrow and next week to collect plenty of data for our report.

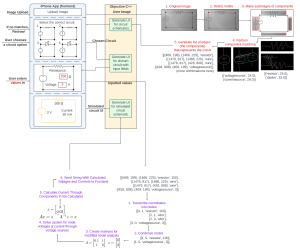

Computer Vision Unit Testing

- Individual component classification testing

- Tested different image preprocessing algorithms and parameters

- Tested how classification performed with various implementations

- With special casing on wires

- Determined that this was highly effective at classifying wires, which lets us remove wires from the dataset and so eliminates false-positive wire classifications

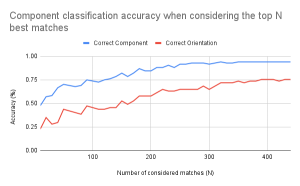

- With varying numbers of matches considered (e.g., only considering the top 20 feature matches between the component image and a dataset image)

- Determined that considering all matches gave the highest classification accuracy

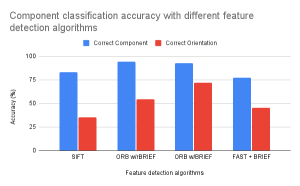
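
The comparison between using every match and only the top N can be sketched as below. This is a minimal illustration, not our actual code: it assumes binary descriptors (the kind ORB and BRIEF produce) stored as Python integers, brute-force nearest-neighbour matching by Hamming distance, and a score defined as the mean match distance, where lower is better. The names `hamming`, `match_distances`, and `match_score` are hypothetical, and the descriptor values are made up.

```python
from typing import List, Optional


def hamming(a: int, b: int) -> int:
    """Count the differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def match_distances(query: List[int], train: List[int]) -> List[int]:
    """Distance from each query descriptor to its nearest train descriptor, best first."""
    if not train:
        return []
    return sorted(min(hamming(q, t) for t in train) for q in query)


def match_score(query: List[int], train: List[int],
                top_n: Optional[int] = None) -> float:
    """Mean match distance (lower is better); top_n=None keeps every match."""
    distances = match_distances(query, train)
    if top_n is not None:
        distances = distances[:top_n]  # keep only the N best matches
    if not distances:
        return float("inf")  # nothing to compare against
    return sum(distances) / len(distances)


# Made-up 8-bit descriptors, purely for illustration
component = [0b10110010, 0b01101100, 0b11110000]
reference = [0b10110011, 0b01101000, 0b00001111]

score_all = match_score(component, reference)            # uses all three matches
score_top2 = match_score(component, reference, top_n=2)  # drops the weakest match
```

With `top_n=None`, weak matches still count against a dataset image, whereas a top-N cutoff hides them. That may be part of why keeping all matches separated component types better in our tests, though we have not verified this.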

- With different feature matching algorithms

- Determined that ORB keypoints + BRIEF descriptors was the best combination

- With circle detection to separate voltage sources, current sources, and light bulbs from other components

- Determined that circle detection was beneficial and worked well in identifying voltage sources, current sources, and light bulbs

- Interestingly, resistors were also detected as having a circle, but this ended up being fine because feature matching clearly distinguished resistors from the circular components

- With a fallback: if a component’s best matching score doesn’t reach a satisfactory threshold, rerun the component matching with all component types in consideration (redo without circle detection)

- Found that no consistent threshold could be chosen and that circle detection without the fallback was more accurate


- Tested with various sizes of the dataset

- Notably, as long as the dataset has at least one image of a component in each of its possible orientations, the classification accuracy was similar to the accuracy with multiple images

- Still need to test this further

- Node detection testing

- Tested different image preprocessing algorithms and parameters

- Tested images with different lighting conditions and shadows

- Determined that even with poor lighting, node detection works well as long as the nodes are drawn large enough and are properly filled in

- Full circuit classification testing

- Tested the complete computer vision code on circuit images with varying numbers of components (4 to 10)

- Determined that every incorrectly classified circuit was caused by poor component classification, not by a failure of node detection

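
The circle-detection step from the component classification tests above can be sketched as a filter on which component types are considered before feature matching. This is a sketch under stated assumptions rather than our actual code: `candidate_types`, `classify`, and the `score_fn` callback are hypothetical names, the labels are illustrative, a higher score is assumed to mean a better match, and wires are assumed to be handled separately by the special casing. Resistors are kept as candidates in the circle case because they were also detected as having a circle.

```python
from typing import Callable, List

# Illustrative labels (assumptions, not the project's identifiers)
CIRCULAR = ["voltage_source", "current_source", "light_bulb"]
NON_CIRCULAR = ["resistor", "switch"]


def candidate_types(has_circle: bool) -> List[str]:
    """Restrict the component types to match against based on circle detection."""
    if has_circle:
        # Resistors stay in the running; feature matching tells them apart
        return CIRCULAR + ["resistor"]
    return list(NON_CIRCULAR)


def classify(has_circle: bool, score_fn: Callable[[str], float]) -> str:
    """Pick the best-scoring type among the allowed candidates (higher is better)."""
    return max(candidate_types(has_circle), key=score_fn)


# Made-up matching scores for a single component image
scores = {
    "voltage_source": 0.40,
    "current_source": 0.30,
    "light_bulb": 0.20,
    "resistor": 0.90,
    "switch": 0.95,
}

with_circle = classify(True, scores.__getitem__)      # switch is never considered
without_circle = classify(False, scores.__getitem__)  # only resistor and switch
```

There is deliberately no score threshold or second pass here, which mirrors our finding that the fallback could not be tuned consistently.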

Circuit Simulator Testing

- Generated netlists with a maximum of 8 components

- Ran each netlist through an existing simulator tool

- CircuitLab

- Compared the simulation results

- Voltage at every node

- Current through each component

- Tested 25 circuits

- Voltage sources, current sources, resistors, light bulbs

- Current and voltage were correct on every circuit

- 100% simulator accuracy given a netlist
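
The comparison against CircuitLab amounts to a tolerance check over every node voltage and component current. The sketch below is illustrative only: the dictionary layout, the `results_match` helper, and the tolerance values are assumptions, and the numbers are made up (a 9 V source across 1 kΩ and 2 kΩ resistors in series), not taken from our 25 test circuits.

```python
import math
from typing import Dict


def results_match(ours: Dict[str, float], reference: Dict[str, float],
                  rel_tol: float = 1e-3, abs_tol: float = 1e-6) -> bool:
    """True when both results report the same quantities with matching values."""
    if ours.keys() != reference.keys():
        return False  # a node or component is missing from one side
    return all(
        math.isclose(ours[name], reference[name], rel_tol=rel_tol, abs_tol=abs_tol)
        for name in ours
    )


# Hypothetical output of our simulator: node voltages (V) and currents (A)
ours = {"V(n1)": 9.0, "V(n2)": 6.0, "I(R1)": 0.003, "I(R2)": 0.003}

# Hypothetical values read off the reference tool, with slight rounding differences
reference = {"V(n1)": 9.0, "V(n2)": 6.0001, "I(R1)": 0.003, "I(R2)": 0.003}

circuit_ok = results_match(ours, reference)
```

A relative tolerance avoids failing a circuit over display rounding in the reference tool; the right tolerance depends on how many digits that tool shows.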

Usability Testing

- Surveyed 7 individuals aged 12-14

- Gave them circuits to draw

- Had them answer questions on a scale of 1-10

- “How easy was it to upload your circuits?”

- Average score 10/10

- “Was it clear how to input values?”

- Average score 6/10

- Found it confusing which component they were inputting values for

- “How useful were the tips on the home screen when drawing your circuit?”

- Average score 7/10

- Found example drawing of circuit most helpful

- “Were the headers of the page useful when asked to complete a task?”

- Average score 9/10

- “Do you think adding more tips and headers would make it more clear?”

- Average score 7/10

- “How clear were the schematics of all the circuits displayed?”

- Average score 9/10

- “How easy was it to recognize what you needed to redraw with your circuit if it wasn’t an option?”

- Average score 7/10

- Average survey score: 7.85/10

- Working on implementing a clearer way to input values for components
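
The overall survey figure can be checked against the seven per-question averages listed above. This assumes it is the unweighted mean of those averages; it may instead have been computed from the raw responses.

```python
# Per-question average scores, in the order the questions are listed
question_averages = [10, 6, 7, 9, 7, 9, 7]

overall = sum(question_averages) / len(question_averages)
print(f"{overall:.3f}")  # 7.857
```

This gives about 7.857, in line with the reported 7.85.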