What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours):

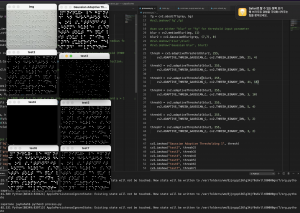

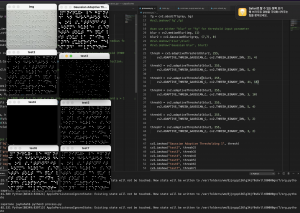

This week, I put the finishing touches on the current pre-processing filters that will be used to train our ML model. For thresholding, I investigated Otsu thresholding, median thresholding, and Gaussian adaptive thresholding across a range of threshold parameters. The thresholded image is then eroded, which shrinks the existing dots to remove small noise, and dilated, which expands the remaining dots to fill gaps and form fuller circles. Because of this, I tweaked the thresholding parameters multiple times, fed each result through the erosion and dilation steps, and visually compared the outputs to select the best pre-processing result. For now, Gaussian adaptive thresholding with its parameters set to 21 and 4 gives the most promising preliminary result. The image below shows the different thresholding parameters and their corresponding results.

(Please zoom in with Ctrl + / Ctrl – to view the images here and below.)

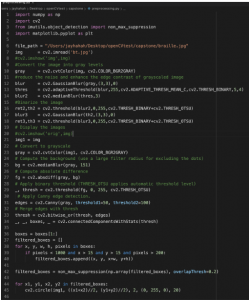

Similarly, Canny edge detection, erosion, and dilation were each tested with various parameters to reach reasonable pre-processing results. Below are the code and the corresponding comparison image, which also includes the original braille image (img) and the final processed images (final1, final2).

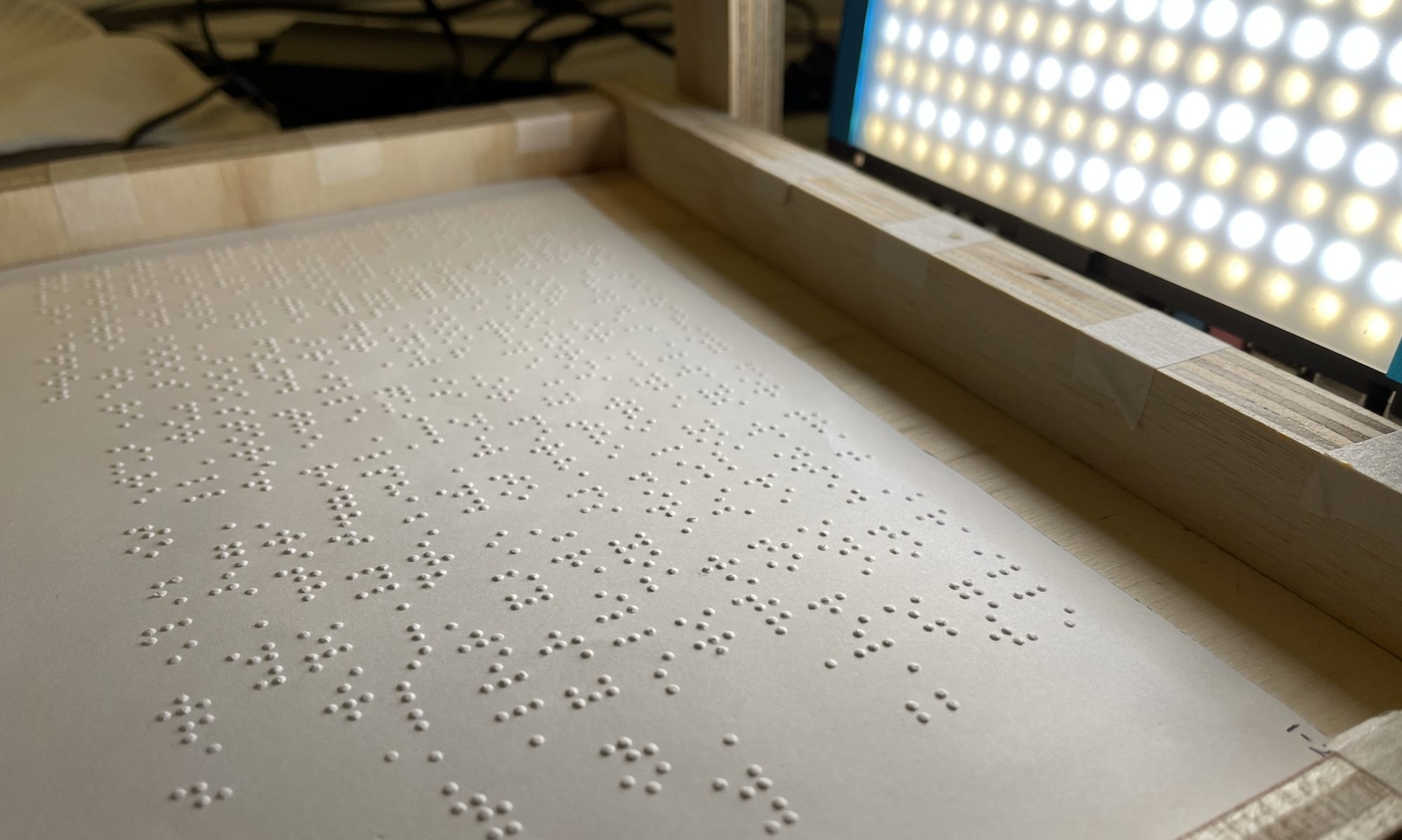

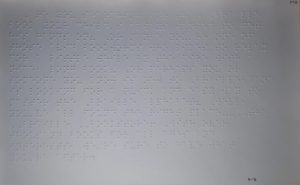

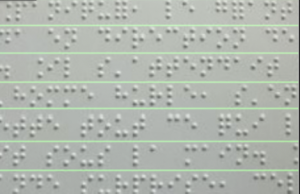

Furthermore, the camera was integrated this week. Because resolution and lighting vary between captures, the masking filters will need to be tuned accordingly so that they keep producing reasonable pre-processing results. Below are the initially captured images with various color contrasts.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?:

Progress is on schedule. The upcoming week will focus primarily on finishing the vertical and horizontal segmentation, which will lead into the final cropping.

What deliverables do you hope to complete in the next week?:

I hope to refine and finish the current horizontal segmentation, complete the remaining vertical segmentation, and move on to cropping.