This week was Interim Demo week. I spent part of it bootstrapping an integrated demo of all our individual parts, which was fairly simple because the stages of our pipeline are decoupled and run in parallel. As part of this task, I built a wrapper class that runs the classifier I trained on AWS over a directory of files and returns its predictions. The mxnet docs have luckily been restored since last week, which made this task substantially less confusing.
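As a rough illustration of what such a wrapper can look like, here is a minimal sketch. It is not the actual class from our repo: the names `DirectoryPredictor` and `predict_fn`, and the default file extensions, are hypothetical, and the classifier is passed in as a plain callable so that the sketch does not depend on any particular mxnet API.

```python
from pathlib import Path
from typing import Callable, Dict, Iterable


class DirectoryPredictor:
    """Runs a single-file classifier over every matching file in a directory."""

    def __init__(
        self,
        predict_fn: Callable[[Path], str],
        extensions: Iterable[str] = (".png", ".jpg"),
    ) -> None:
        # predict_fn is a hypothetical stand-in for the trained classifier:
        # it takes the path of one file and returns the predicted label.
        self.predict_fn = predict_fn
        # Extensions are compared case-insensitively.
        self.extensions = {ext.lower() for ext in extensions}

    def predict_directory(self, directory) -> Dict[str, str]:
        """Return a mapping of file name to predicted label."""
        directory = Path(directory)
        if not directory.is_dir():
            raise NotADirectoryError(str(directory))
        results: Dict[str, str] = {}
        # Sort so that files are processed in a reproducible order.
        for path in sorted(directory.iterdir()):
            if path.is_file() and path.suffix.lower() in self.extensions:
                results[path.name] = self.predict_fn(path)
        return results
```

Keeping the model behind a callable means the same wrapper can be reused if the underlying framework changes later; only the function passed in as `predict_fn` has to be swapped.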

While the resulting software worked well on my local Ubuntu system, it was quite difficult to get all the dependencies working on the Jetson Nano, given that it is a legacy device with limited support from NVIDIA. Specifically, the Jetson’s hardware platform and older OS meant that package managers like pip rarely offered pre-built wheels for a quick and easy install. As a result, libraries such as mxnet had to be built locally, which took around a day given the Jetson Nano’s limited computing power. The alternative would have been to cross-compile the package on a more powerful computer, but I had trouble getting the provided Dockerfiles for this to work. There are still quite a few problems with the hardware that I will have to troubleshoot in the coming weeks.

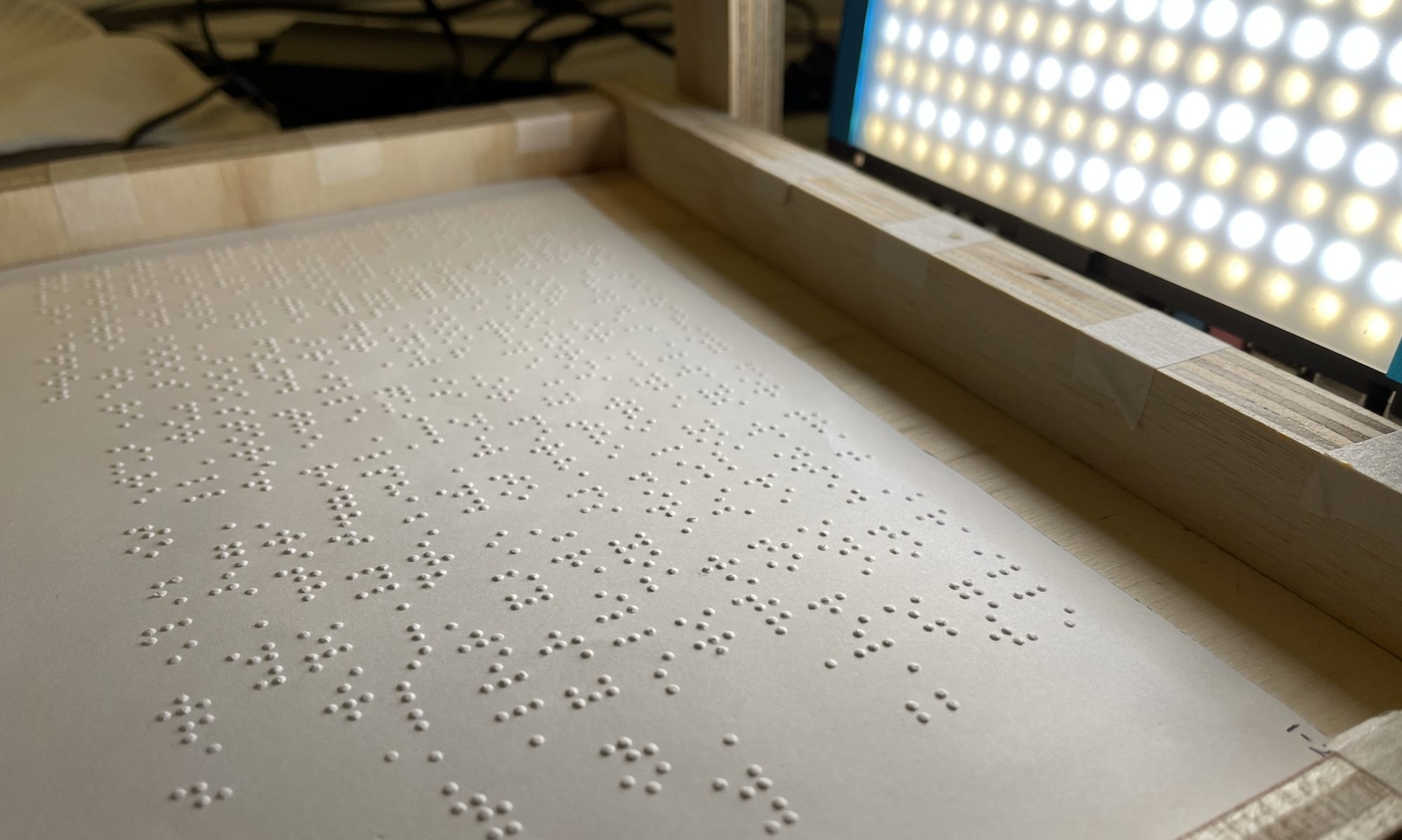

This week I also used Jay’s pre-processing pipeline to create a second dataset for training my model. Next week, I hope to continue iterating on the existing model on AWS to make it more accurate and reliable for our use case. Furthermore, while per-character inference on the Jetson is fairly fast at around 0.1s, processing a word character by character can add up to significant latency. As a result, I will be working on converting the mxnet model to TensorRT, which can optimize the model for the Nano’s GPU and run batched inference in parallel. This should also remove some of the difficulty of working with mxnet.
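To make the latency concern concrete, here is a small sketch of the arithmetic and of the batching idea. Only the 0.1s per-character figure comes from my measurements above; the helper names, the batch size, and the `batch_predict_fn` callable are hypothetical, and the sketch does not call TensorRT or mxnet at all.

```python
from typing import Callable, List, Sequence

PER_CHAR_LATENCY_S = 0.1  # approximate per-character inference time on the Jetson


def sequential_latency(num_chars: int, per_char_s: float = PER_CHAR_LATENCY_S) -> float:
    """Estimated latency when each character is classified one at a time."""
    return num_chars * per_char_s


def predict_in_batches(
    items: Sequence,
    batch_predict_fn: Callable[[Sequence], List[str]],
    batch_size: int = 8,
) -> List[str]:
    """Classify items in chunks so the model is invoked once per batch.

    batch_predict_fn is a hypothetical stand-in for a batched model call:
    it takes a sequence of inputs and returns one label per input.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    predictions: List[str] = []
    for start in range(0, len(items), batch_size):
        predictions.extend(batch_predict_fn(items[start:start + batch_size]))
    return predictions
```

At roughly 0.1s per character, an eight-character word costs about 0.8s when processed sequentially. With batching, the same word needs a single model call when `batch_size` is 8, so the total latency depends on how well the batched call scales, which I have not yet measured.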