This post contains a link to our final video and report

Team_A2_Pareek_Savkur_Tayal_final Capstone_Design_Report-2-compressed

Team A2: PARROT (Parallel Asynchronous Robots, Robustly Organizing Trucks)

Carnegie Mellon ECE Capstone, Fall 2022: Prithu Pareek, Omkar Savkur, Saral Tayal

For the final push on our project, I worked with the team to clear the last items off our checklist and make the system as robust as possible. Specifically, Omkar and I tweaked the electromagnet circuit so that it de-magnetizes more effectively during drop-offs (using resistors with a flyback diode). We also added bus capacitance on the regulator output to absorb the inrush current spike on the rising edge when the electromagnet is enabled, and we improved the visualizer by color-coding each robot's path to make it more usable.
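Why the series resistor helps can be sketched with a simple model: when the driver switch opens, the coil current freewheels through the flyback diode and resistor, and a larger loop resistance makes that current collapse faster (so the magnet lets go sooner), at the cost of a higher voltage spike on the switch. This is a minimal sketch under stated assumptions: a low-side switch, the diode modelled as a fixed 0.7 V drop, and made-up component values. The function names are hypothetical and not from our firmware.

```python
import math

def release_time(inductance_h, coil_ohm, supply_v, diode_vf=0.7, series_ohm=0.0):
    """Seconds for the coil current to reach zero after the low-side switch opens.

    Models the freewheel loop as L*di/dt = -(R_coil + R_series)*i - V_f,
    treating the flyback diode as a constant forward drop V_f.
    """
    r_total = coil_ohm + series_ohm
    i0 = supply_v / coil_ohm        # steady-state holding current
    tau = inductance_h / r_total    # loop time constant L/R
    # Solving i(t) = 0 for the model above gives t = tau * ln(1 + i0 * R / V_f).
    return tau * math.log(1.0 + i0 * r_total / diode_vf)

def switch_peak_voltage(supply_v, coil_ohm, diode_vf=0.7, series_ohm=0.0):
    """Peak voltage across the low-side switch at the instant it opens."""
    i0 = supply_v / coil_ohm
    return supply_v + diode_vf + i0 * series_ohm

# Hypothetical values: 20 mH, 12 ohm coil on a 12 V rail, optional 47 ohm series resistor.
for r_s in (0.0, 47.0):
    t = release_time(0.020, 12.0, 12.0, series_ohm=r_s)
    v = switch_peak_voltage(12.0, 12.0, series_ohm=r_s)
    print(f"R_series={r_s:>4.0f} ohm: release {t * 1e3:.2f} ms, switch peak {v:.1f} V")
```

With these placeholder values the resistor cuts the release time by roughly a factor of three, but the switch now sees close to 60 V instead of about 13 V, so the transistor's voltage rating has to cover that.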

I also worked on minor fixes with the rest of the team, such as edge-case handling for the robots, and filmed videos of our final demos in case we run into issues during the presentations.

This week I focused on tying up all the final loose ends: charging all the robots and fixing robot issues such as weak wheels and dying voltage regulators. I also improved the performance of the sense-plan-act loop by optimizing the computer-vision visualizer.

This week I spent my time helping our robot system work robustly with multiple robots. Specifically, I focused on making better, larger laminated goal drop-off markers, scaling the visualizer to work with 4 fiducials, and getting the robots to reverse after a drop-off.

We’re almost at the validation phase and ready to collect metrics on the robots’ pickup and drop-off performance against our design goals and quantitative metrics.

(Disclaimer: This was a quieter week for capstone progress because several of my other classes had major deadlines around this time.)

This week I focused on improving the reliability of pallet pickup, especially the weight transfer and the robot's poor traction after it picks up a pallet. Specifically, I learned how to use the waterjet in TechSpark and cut a number of prototype pallet pieces for us to try picking up. We intend to integrate these tomorrow (Sunday) morning.

I also worked on making the codebase friendly to parallel robots, since a couple of the functions were written to handle only one robot and we are now scaling to multiple bots.

Since the computer vision is essentially done and stable, I focused my efforts this week on various smaller odds and ends to move the group's progress forward.

Some of the things I worked on were adding safety features around the velocity control and scaling the velocities appropriately when they overshot the thresholds.
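One common way to do this kind of velocity scaling on a differential-drive robot is to scale both wheel commands by the same factor, which keeps the commanded turning curvature intact instead of distorting it the way per-wheel clipping would. This is a sketch only: `limit_wheel_speeds`, the units, and the stop-on-NaN rule are illustrative assumptions rather than our actual controller code.

```python
import math

def limit_wheel_speeds(v_left, v_right, v_max):
    """Return wheel speeds scaled so neither exceeds v_max in magnitude.

    Hypothetical helper. Both wheels are scaled by the same factor (rather than
    clipped independently) so the ratio v_left / v_right, and hence the turning
    curvature, is preserved. Non-finite commands are treated as a fault and
    stop the robot.
    """
    if v_max <= 0:
        raise ValueError("v_max must be positive")
    if not (math.isfinite(v_left) and math.isfinite(v_right)):
        return 0.0, 0.0
    peak = max(abs(v_left), abs(v_right))
    if peak <= v_max:
        return v_left, v_right
    scale = v_max / peak
    return v_left * scale, v_right * scale

print(limit_wheel_speeds(300.0, 150.0, 200.0))
```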

I also helped manufacture 2 pallets for use in our testing.

I also helped debug the cubic-spline generation that smooths out the path and makes it easier for the robot to follow.
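For reference, here is a minimal natural cubic spline smoother in pure Python, assuming waypoints are (x, y) tuples in millimetres and that each axis is splined against cumulative chord length. The function names and sampling density are hypothetical; a library routine such as SciPy's `CubicSpline` with `bc_type='natural'` performs the same kind of fit.

```python
import math

def natural_spline_second_derivs(t, y):
    """Second derivatives M_i of the natural cubic spline through (t_i, y_i).

    Natural end conditions fix M_0 = M_{n-1} = 0; the interior values solve a
    tridiagonal system, handled here with the Thomas algorithm.
    """
    n = len(t)
    m = [0.0] * n
    if n < 3:
        return m  # two points: the spline is just the straight line
    h = [t[i + 1] - t[i] for i in range(n - 1)]
    a, b, c, d = [0.0] * n, [0.0] * n, [0.0] * n, [0.0] * n
    for i in range(1, n - 1):
        a[i], b[i], c[i] = h[i - 1], 2.0 * (h[i - 1] + h[i]), h[i]
        d[i] = 6.0 * ((y[i + 1] - y[i]) / h[i] - (y[i] - y[i - 1]) / h[i - 1])
    for i in range(2, n - 1):          # forward sweep
        w = a[i] / b[i - 1]
        b[i] -= w * c[i - 1]
        d[i] -= w * d[i - 1]
    m[n - 2] = d[n - 2] / b[n - 2]     # back substitution
    for i in range(n - 3, 0, -1):
        m[i] = (d[i] - c[i] * m[i + 1]) / b[i]
    return m

def eval_spline(t, y, m, q):
    """Evaluate the spline (knots t, values y, second derivatives m) at q."""
    i = 0
    while i < len(t) - 2 and q > t[i + 1]:
        i += 1
    h = t[i + 1] - t[i]
    u, v = t[i + 1] - q, q - t[i]
    return ((m[i] * u ** 3 + m[i + 1] * v ** 3) / (6.0 * h)
            + (y[i] / h - m[i] * h / 6.0) * u
            + (y[i + 1] / h - m[i + 1] * h / 6.0) * v)

def smooth_path(waypoints, samples_per_segment=10):
    """Resample (x, y) waypoints along x(s), y(s) splines over chord length s.

    Consecutive waypoints must be distinct (a zero-length segment divides by zero).
    """
    s = [0.0]
    for (x0, y0), (x1, y1) in zip(waypoints, waypoints[1:]):
        s.append(s[-1] + math.hypot(x1 - x0, y1 - y0))
    xs = [p[0] for p in waypoints]
    ys = [p[1] for p in waypoints]
    mx = natural_spline_second_derivs(s, xs)
    my = natural_spline_second_derivs(s, ys)
    total = (len(waypoints) - 1) * samples_per_segment
    return [(eval_spline(s, xs, mx, s[-1] * k / total),
             eval_spline(s, ys, my, s[-1] * k / total)) for k in range(total + 1)]

# Hypothetical waypoints (mm) from a path planner.
path = smooth_path([(0, 0), (100, 0), (200, 100), (300, 100)])
```

Parameterizing by chord length rather than by waypoint index keeps the sample spacing roughly even when waypoints are unevenly spaced, which tends to make the path easier for a tracking controller to follow.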

This week I spent a lot of time making the computer vision algorithm robust to varying lighting conditions and to blocked LEDs used for localization. In parallel, I brought up a fiducial-based robot localization system that seems to be far more reliable than the LED-based approach. Since we now have 2 working approaches, we may also be able to perform some sort of sensor fusion on them.
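If we do fuse the two localizers, the simplest starting point would be an inverse-variance weighted average per frame, falling back to whichever detector produced a result. The sketch below assumes each localizer reports a hypothetical `(x, y, theta, variance)` tuple with one shared variance for all components; a Kalman filter would be the more complete option.

```python
import math

def fuse_estimates(led, fiducial):
    """Fuse two hypothetical (x, y, theta, variance) pose estimates.

    Uses inverse-variance weighting; either argument may be None when that
    detector failed this frame, in which case the other is returned as-is.
    """
    if led is None and fiducial is None:
        return None
    if led is None:
        return fiducial
    if fiducial is None:
        return led
    (x1, y1, t1, v1), (x2, y2, t2, v2) = led, fiducial
    w1, w2 = 1.0 / v1, 1.0 / v2
    x = (w1 * x1 + w2 * x2) / (w1 + w2)
    y = (w1 * y1 + w2 * y2) / (w1 + w2)
    # Average headings on the unit circle so 359 deg and 1 deg fuse to 0 deg, not 180 deg.
    theta = math.atan2(w1 * math.sin(t1) + w2 * math.sin(t2),
                       w1 * math.cos(t1) + w2 * math.cos(t2))
    return (x, y, theta, 1.0 / (w1 + w2))
```

The less noisy detector (smaller variance) pulls the fused pose toward itself, and the fused variance is always smaller than either input's.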

Additionally, we met as a team to perform integration and managed to sort out a lot of bugs in the interface between the computer vision and the rest of the robot system.

This week, I worked on making the computer vision LED-based robot detection more robust.

As a quick recap, the computer vision localization works by applying an image mask to the captured image to isolate the LEDs belonging to each robot. After this, the algorithm applies the inverse affine transform, scaling, etc.
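The mask-then-transform step can be sketched in pure Python as below, assuming the frame is a list of rows of (R, G, B) tuples and that the affine transform (or its inverse) is already known as a 2x3 matrix. The thresholds and function names are illustrative assumptions, not our localizer's actual API; with OpenCV the same idea is usually expressed with `cv2.inRange` for the mask and `cv2.moments` for the centroid.

```python
def led_centroid(image, target_rgb, tol=40, min_brightness=200):
    """(col, row) centroid of pixels near target_rgb, or None if none match.

    Hypothetical helper. image is a list of rows, each row a list of (R, G, B)
    tuples in 0..255. A pixel passes the mask if it is bright and every
    channel is within tol of the target colour.
    """
    sum_c = sum_r = count = 0
    for r, row in enumerate(image):
        for c, (red, green, blue) in enumerate(row):
            bright = max(red, green, blue) >= min_brightness
            close = all(abs(p - q) <= tol for p, q in zip((red, green, blue), target_rgb))
            if bright and close:
                sum_c += c
                sum_r += r
                count += 1
    if count == 0:
        return None
    return (sum_c / count, sum_r / count)

def apply_affine(affine, pt):
    """Map an image point through a 2x3 affine matrix [[a, b, tx], [c, d, ty]]."""
    (a, b, tx), (c, d, ty) = affine
    x, y = pt
    return (a * x + b * y + tx, c * x + d * y + ty)
```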

However, due to manufacturing inaccuracies in our LEDs, changing ambient lighting, camera inaccuracies, off-axis image shift, etc., using a hardcoded LUT to decide which color belongs to which robot is simply not robust and has been giving us a lot of issues.

As such, I refactored the computer vision algorithms to run an unsupervised, centroid-based clustering algorithm (k-means) at runtime to figure out which LED is which. This adds practically no extra latency or compute overhead at runtime, since classifying each LED is just a distance calculation to the centroid of each cluster.
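A minimal sketch of centroid-based clustering plus the nearest-centroid lookup, in pure Python, might look like this. The function names, the farthest-point seeding, and the sample colours are assumptions for illustration, not our actual code; OpenCV also ships a `cv2.kmeans` routine.

```python
def _dist2(p, q):
    """Squared Euclidean distance between two colour tuples."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def nearest(p, centroids):
    """Index of the closest centroid: the only work needed per LED at runtime."""
    return min(range(len(centroids)), key=lambda i: _dist2(p, centroids[i]))

def kmeans(points, k, iters=20):
    """Cluster colour tuples into k groups and return the k centroids (hypothetical helper)."""
    # Farthest-point seeding: deterministic, and keeps seeds in different colour blobs.
    centroids = [tuple(points[0])]
    while len(centroids) < k:
        centroids.append(tuple(max(points, key=lambda p: min(_dist2(p, c) for c in centroids))))
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for p in points:
            groups[nearest(p, centroids)].append(p)
        # Move each centroid to the mean of its group; keep it if the group is empty.
        updated = [tuple(sum(ch) / len(g) for ch in zip(*g)) if g else c
                   for g, c in zip(groups, centroids)]
        if updated == centroids:
            break  # assignments have converged
        centroids = updated
    return centroids

# Made-up detected LED colours (RGB).
samples = [(250, 120, 20), (245, 128, 25), (255, 115, 18),   # orange-ish
           (20, 60, 250), (25, 70, 240), (15, 55, 255),       # blue-ish
           (30, 230, 40), (35, 225, 50), (28, 235, 45)]       # green-ish
cents = kmeans(samples, 3)
labels = [nearest(p, cents) for p in samples]
```

Clustering only says which LEDs belong together; one option for attaching robot identities is to match each centroid to the nearest nominal colour from the old LUT.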

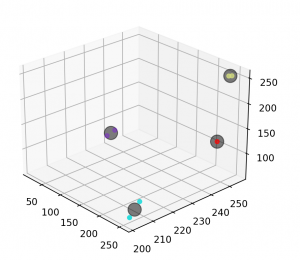

The image above visualizes 4 robot colors (orange, 2 types of blue, and green), along with the clustering centroids and the detected pixels. As you can see, two LEDs of the same color often show enough noise and discrepancy in detection that clustering is definitely the right approach to this problem.

This week I focused on refactoring the Computer Vision Localizer to bring it from a ‘demo’ state to a clean implementation, as outlined in our interface document. This involved restructuring the entire codebase, removing a lot of hard-coded variables, and exposing the right functions to the rest of the application.

I also created 8 distinct images for the robots to display and calibrated them for the algorithm via a LUT, to account for errors in the camera and the NeoPixels not being perfectly color-accurate.

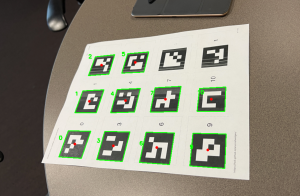

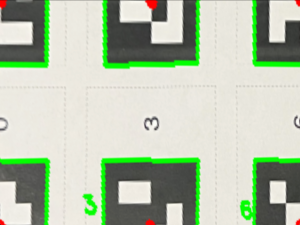

I also worked on making the computer vision code work with the fiducials. The fiducials are QR-code pieces that a human places at the edges of the sandbox (table). Based on the fiducial locations, I wrote an algorithm that detects which fiducials are in which order, applies a homography to the sandbox, and scales it so that each pixel is 1 mm wide. This allows us to place the camera anywhere, at any angle, and get a consistent transformation of the field for localization, path planning, and the error controller. It also allows us to detect the pallets.

Attached below is an example. Pic 1 is a sample view from a camera (the fiducials will be cut out in reality), and Pic 2 is the transformed and scaled image, using fiducials [1, 7, 0, 6] as the corner points.
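The fiducial-to-sandbox mapping can be sketched as a four-point homography solved directly (the standard 8-unknown linear system with the bottom-right entry fixed to 1). This pure-Python sketch assumes the detector already gives a pixel position per fiducial ID, that the corner IDs are listed clockwise from top-left, and that the sandbox size in millimetres is known, so that 1 output pixel = 1 mm. The function names are hypothetical; in OpenCV the equivalent calls are `cv2.getPerspectiveTransform` and `cv2.warpPerspective`.

```python
def solve(A, b):
    """Solve A x = b (A is n x n) by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[piv][col]) < 1e-12:
            raise ValueError("degenerate corners (three or more collinear?)")
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x

def homography(src, dst):
    """3x3 homography mapping 4 src points onto 4 dst points (h33 fixed to 1)."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y]); b.append(u)
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y]); b.append(v)
    h = solve(A, b)
    return [h[0:3], h[3:6], h[6:8] + [1.0]]

def warp_point(H, pt):
    """Apply H to one point, including the perspective divide."""
    x, y = pt
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return ((H[0][0] * x + H[0][1] * y + H[0][2]) / w,
            (H[1][0] * x + H[1][1] * y + H[1][2]) / w)

def sandbox_homography(detections, corner_ids, width_mm, height_mm):
    """Hypothetical helper. detections: {fiducial_id: (px, py)}; corner_ids
    ordered top-left, top-right, bottom-right, bottom-left (assumed order)."""
    src = [detections[i] for i in corner_ids]
    dst = [(0, 0), (width_mm, 0), (width_mm, height_mm), (0, height_mm)]
    return homography(src, dst)

# Made-up fiducial centres (pixels) and a made-up 1200 x 800 mm sandbox.
dets = {1: (100.0, 80.0), 7: (520.0, 95.0), 0: (560.0, 400.0), 6: (60.0, 380.0)}
H = sandbox_homography(dets, [1, 7, 0, 6], 1200, 800)
```

Any robot or pallet pixel can then be passed through `warp_point(H, ...)` to get field coordinates in millimetres, regardless of where the camera is mounted.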

This week I worked on making the computer vision algorithm more robust to one or more of the robot's LED pixels being blocked. This is quite useful, since someone moving a hand over the playing field or an odd camera angle could throw off the computer vision algorithm's localization.

Additionally, I worked on the design presentation with the rest of the team and helped solidify parts of our software architecture. I have also started building a visualizer for our demo, which will show exactly where the robots are in the field and what paths they are following. This will be an invaluable tool for debugging system issues later in the project.

Lastly, tomorrow (Sunday), Omkar and I will be working on the closed-loop controller to make the robot motions more accurate.
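One way to stay localized when some LEDs are covered is to fit a 2D rigid transform (rotation plus translation) from the robot's known LED layout to whichever LEDs are still visible; two identified LEDs are enough to pin down both position and heading. This least-squares sketch assumes a hypothetical four-LED layout with a 30 mm radius and that each visible LED has already been identified (for example by its colour); it is not necessarily how our detector handles occlusion.

```python
import math

# Hypothetical LED layout in the robot's own frame (mm): LED id -> (x, y).
LED_LAYOUT = {0: (30.0, 0.0), 1: (0.0, 30.0), 2: (-30.0, 0.0), 3: (0.0, -30.0)}

def estimate_pose(observed, layout=LED_LAYOUT):
    """Least-squares 2D rigid fit using only the visible LEDs.

    observed: {led_id: (x, y)} in field coordinates; blocked LEDs are simply absent.
    Returns (x, y, theta) of the robot origin, or None if fewer than 2 are visible.
    """
    ids = [i for i in observed if i in layout]
    if len(ids) < 2:
        return None
    n = len(ids)
    px = sum(layout[i][0] for i in ids) / n
    py = sum(layout[i][1] for i in ids) / n
    qx = sum(observed[i][0] for i in ids) / n
    qy = sum(observed[i][1] for i in ids) / n
    s_dot = s_cross = 0.0
    for i in ids:
        ax, ay = layout[i][0] - px, layout[i][1] - py        # centred model point
        bx, by = observed[i][0] - qx, observed[i][1] - qy    # centred observed point
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)  # best-fit rotation angle
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated layout centroid onto the observed centroid.
    return (qx - (c * px - s * py), qy - (s * px + c * py), theta)

# Robot at (500, 300) mm facing 90 deg, with LEDs 2 and 3 covered by a hand.
pose = estimate_pose({0: (500.0, 330.0), 1: (470.0, 300.0)})
```

With all LEDs visible the fit averages out detection noise; with only two it is exact but noisier, so a frame with fewer than two identified LEDs is best skipped.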