This week, I worked on implementing the initial setup phase and off-center screen alignment detection for the nose, and on updating the web application for the behavioral interview page. The initial setup phase is similar to the one for the eye contact portion: the (X, Y) coordinates of the nose are stored in arrays for the first 10 seconds. Then the average of the coordinates is taken, which gives us the coordinates that serve as the frame of reference for what is “center.” For the off-center screen alignment detection, we check whether the nose coordinates in the current video frame are within range of the frame-of-reference coordinates. If they are not, we alert the user with a pop-up message box to align their face.

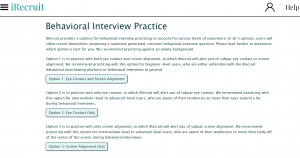
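To make the two steps concrete, here is a minimal sketch of the calibration and the range check. The function names and the 40-pixel tolerance are assumptions for illustration only; the actual range used in iRecruit and the way nose coordinates are extracted from each frame are not shown here.

```python
from statistics import mean


def calibrate(samples):
    """Average the (x, y) nose coordinates collected during the setup phase.

    `samples` is a list of (x, y) tuples, e.g. one per frame over the
    first 10 seconds. The result is the "center" frame of reference.
    """
    xs = [x for x, _ in samples]
    ys = [y for _, y in samples]
    return mean(xs), mean(ys)


def is_off_center(current, reference, tolerance=40):
    """Return True if the nose is outside the allowed range.

    `tolerance` is a hypothetical value in pixels, applied to each axis
    separately; tune it to the camera resolution.
    """
    dx = abs(current[0] - reference[0])
    dy = abs(current[1] - reference[1])
    return dx > tolerance or dy > tolerance


# Example: three setup samples, then two live frames.
reference = calibrate([(100, 200), (110, 210), (90, 190)])
needs_alert = is_off_center((150, 200), reference)    # far to the right
still_centered = is_off_center((120, 210), reference)  # within range
```

In a live loop, `is_off_center` returning `True` is the point at which the pop-up alert would be shown.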

One change we made this week was to split the facial detection portion into three different options. Thinking about it from the user's perspective, we wanted to account for different levels of experience with behavioral interviewing. The first option is for beginner-level users, who are unfamiliar with the iRecruit behavioral interviewing platform or with behavioral interviews in general. It allows users to practice both eye contact and screen alignment, so iRecruit provides real-time feedback on both aspects. The second and third options are for intermediate-level to advanced-level users, who are familiar with behavioral interviewing and know what they would like to improve. The second option allows users to practice only eye contact, and the third option allows users to practice only screen alignment. We thought this would be useful for a user who knows their strengths and only wants feedback on one of the interview tactics. I separated these three options into three different code files (facial_detection.py, eye_contact.py, and screen_alignment.py).
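One way to picture how the three options relate is as a mapping from the chosen option to the checks that give feedback. This is only an illustrative sketch: the `PracticeMode` enum and `enabled_checks` function are hypothetical names, and the project itself keeps the options in three separate files rather than in one dispatcher.

```python
from enum import Enum


class PracticeMode(Enum):
    """Hypothetical names for the three options; values are the source files."""
    BOTH = "facial_detection.py"
    EYE_CONTACT_ONLY = "eye_contact.py"
    SCREEN_ALIGNMENT_ONLY = "screen_alignment.py"


def enabled_checks(mode):
    """Return the set of feedback checks that are active for a given option."""
    if mode is PracticeMode.BOTH:
        return {"eye_contact", "screen_alignment"}
    if mode is PracticeMode.EYE_CONTACT_ONLY:
        return {"eye_contact"}
    return {"screen_alignment"}
```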

I was able to update the web application for the behavioral interview page (see image below) to make the interface more detailed and user-friendly. The page gives an overview and describes the various options available. I was able to learn more about Django, HTML, and CSS from this, which was very helpful! I believe that we are making good progress with the facial detection part. Next week, I plan on working on the initial setup phase and off-center screen alignment detection for the mouth. This will probably wrap up the main technical implementation for the facial landmark detection portion. I also plan on updating the user interface for the dashboard and technical interview pages on the web application.