Week 10 Update

This week, we were away for Thanksgiving and did not get much done on our project. We had planned to take this week off for break, so this does not put us behind schedule.

Our to-do list for the next two weeks is pretty intense, but we have a plan to get everything done. Our first priority is making sure the camera decoding setup works on the second Raspberry Pi, so we are hoping to get the stand for the Pi camera printed and completed early this week. After that, we will work through the decoding setup.

Encoding/Decoding:

- Get the pattern decoded in a way that's easy to process with CSV

- Cipher

Decoding Setup:

- Set up the camera at a fixed height & adjust the focus perfectly

- Set it up on a loop to send images to CV

- Have the image in a fixed position

- Play around with camera

- Which side is up?

- Color recognition

Encoding Setup:

- Make it faster

Camera situation:

- Test CV on the Raspberry Pi

- Have the encoding rig for the camera made/cut ASAP

- Have it set up on a loop

Week 9 Update

Summary

This week, we tested the Pi Camera with the encoded message and found that its resolution is high enough; we just need to get it to focus. We also found several "to do" items for improving our decoding pipeline. In addition, we worked on finishing the decoder by integrating the CV with the decoding algorithm.

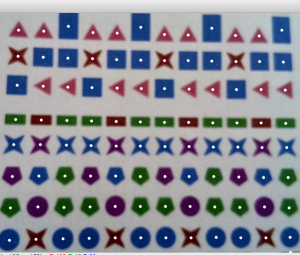

Decoder – Snigdha & Shivani

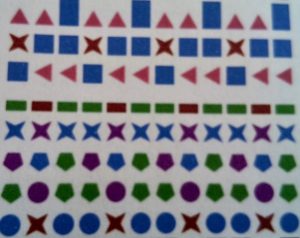

This week we worked on finishing the decoder. We worked closely to tweak the CV and the encoding pattern bit by bit to make sure the shapes were being detected properly. From there, Snigdha updated the decoder to work with the modified CSV format and made sure it could accurately decode the message. Snigdha also modified the encoding pattern to better fit the 4×6 paper when printed.

Pi Camera Tests – Shivani & Caroline

This week, we ran some tests on the Raspberry Pi camera setup together. Shivani tested recognition with OpenCV, and Caroline controlled the camera and printer.

Findings

- The Pi Camera's resolution is more than high enough

- It can easily see all 32 characters at their current small size

- Everything fits onto the card size that we wanted

To Do

- The camera saves large images, so we need to resize, crop, and do some light image processing

- Figure out ordering and alignment

- Determine exact distances and measurements for photos

- Make prototype setup for photo taking so we can start using it for decoding

- Add an ending line to determine which side is up

- The last row of characters gets slightly cut off for some reason

- Test color recognition and find range
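The ordering, alignment and "which side is up" items above could be approached roughly as shown below. This is a minimal sketch, not our CV code. It assumes each detected symbol has already been reduced to a centroid and a label. The `Detection` class, the `row_tol` pixel tolerance and the `"END"` label for the ending line are all hypothetical.

The sketch works in three steps:

- Symbols are grouped into rows by their y coordinate.
- Each row is sorted left to right by x.
- If the ending line shows up in the first row, the card is treated as upside down and the reading order is reversed.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    x: float    # centroid x, in pixels
    y: float    # centroid y, in pixels
    label: str  # hypothetical symbol label, e.g. "cyan-circle-filled" or "END"


def reading_order(detections, row_tol=20.0):
    """Group detections into rows by y (within row_tol pixels), then sort each row left to right."""
    rows = []
    for det in sorted(detections, key=lambda d: d.y):
        # Compare against the first (topmost) symbol of the current row.
        if rows and abs(det.y - rows[-1][0].y) <= row_tol:
            rows[-1].append(det)
        else:
            rows.append([det])
    return [sorted(row, key=lambda d: d.x) for row in rows]


def orient(rows, end_label="END"):
    """If the ending line is in the first row, the card is upside down:
    reverse the row order and each row (a 180-degree rotation of the reading order)."""
    if rows and any(d.label == end_label for d in rows[0]):
        return [list(reversed(row)) for row in reversed(rows)]
    return rows
```

The `row_tol` value would need to be tuned to the symbol spacing once the camera height is fixed.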

Week 8 Update

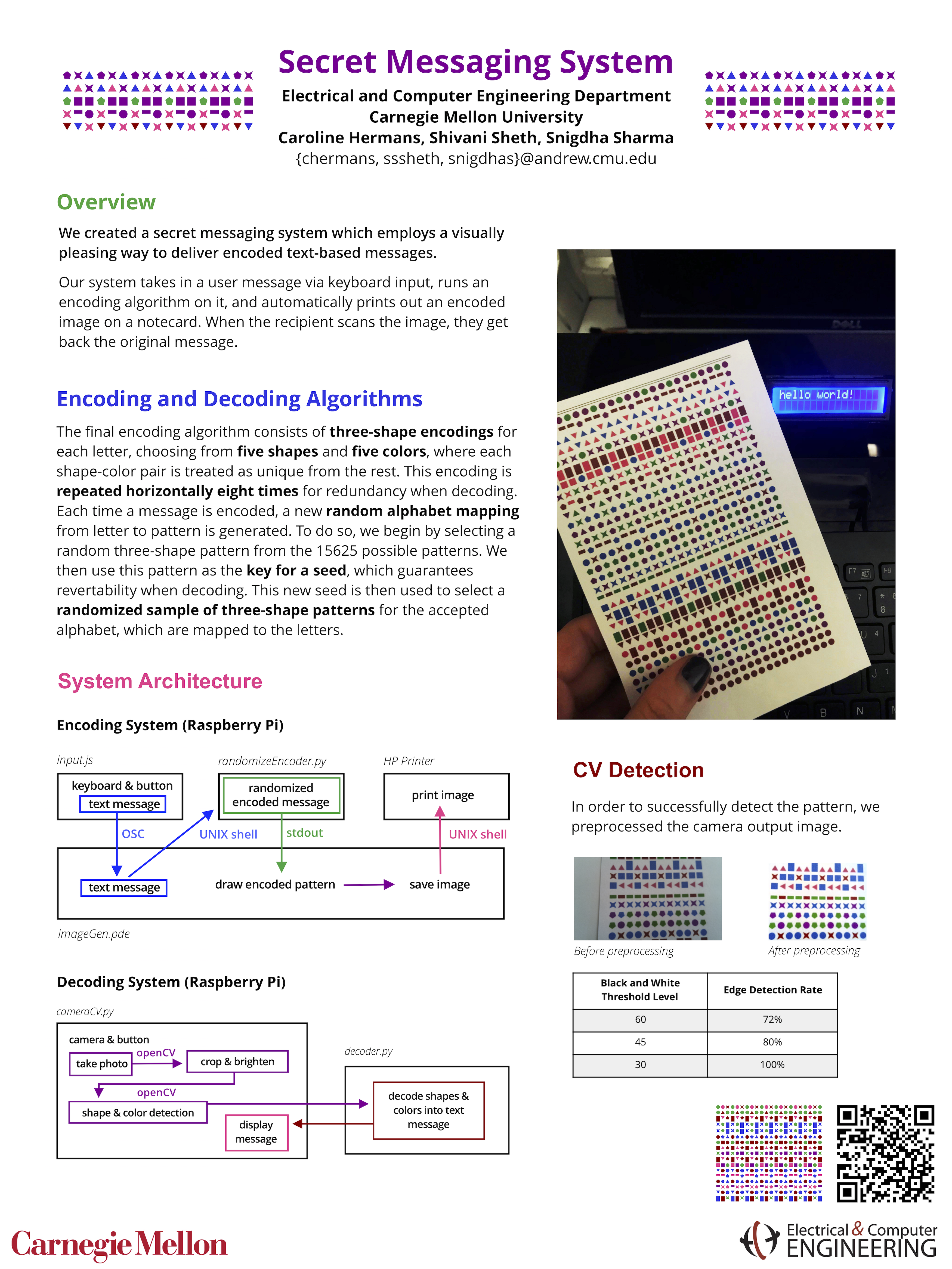

This week, we focused heavily on preparing for our midpoint demo. Caroline and Snigdha worked to integrate the first half of the system, covering both the hardware and software components. We ported our encoding script to Java and can now enter a message on the Raspberry Pi and print out the encoded message directly. During the latter half of the week, we focused on catching up on the CV side. We worked to make sure the CV output was in the form needed to decode the message properly. We also worked on setting up the second Raspberry Pi and installing OpenCV and other necessary software on it.

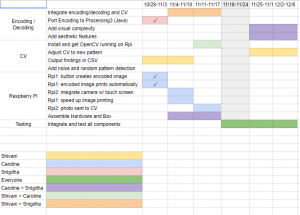

Below is our updated Gantt chart for the midpoint demo.

Caroline

Snigdha

This week, I worked on making sure the encoding algorithm we created could run continuously on the first Raspberry Pi from the command line. To do this, we had to decide between using JavaScript with web sockets and porting our encoding to Java. In the interest of time, and to avoid unnecessary complexity, Caroline and I decided that switching to Java was the most effective approach, since Processing with Java can be run from the command line. After Caroline and I were able to print the image as described above, I worked with Shivani to sync up on the CV and decoder parts. We worked together to tweak the output CSV to make the decoding process smoother. Using that, I modified the decoder to parse the CSV properly as it is read in and use it to decode the message. During the coming week, I'll keep working on this to get the decoder to output the decoded message correctly.

Shivani

Week 7 Update

Overall, we focused this week on preparing for the midpoint demo by trying to integrate separate parts into a more functional project. Because of external factors, we were unable to work on the CV this week, so we focused on integrating the encoding end. We have the encoding system set up so that when someone types a message in and hits a button, it automatically prints out the encoded image. To do this, we rewrote the encoding system in Java so that we could use the Processing desktop app, which integrates with the command line. Unfortunately, because of the types of communication we're using, it's really slow right now (~20 seconds from entering the text to printing). We have plans to make it much faster and more polished in the coming week.

a full 20+ seconds… yikes

Caroline

Encoding System

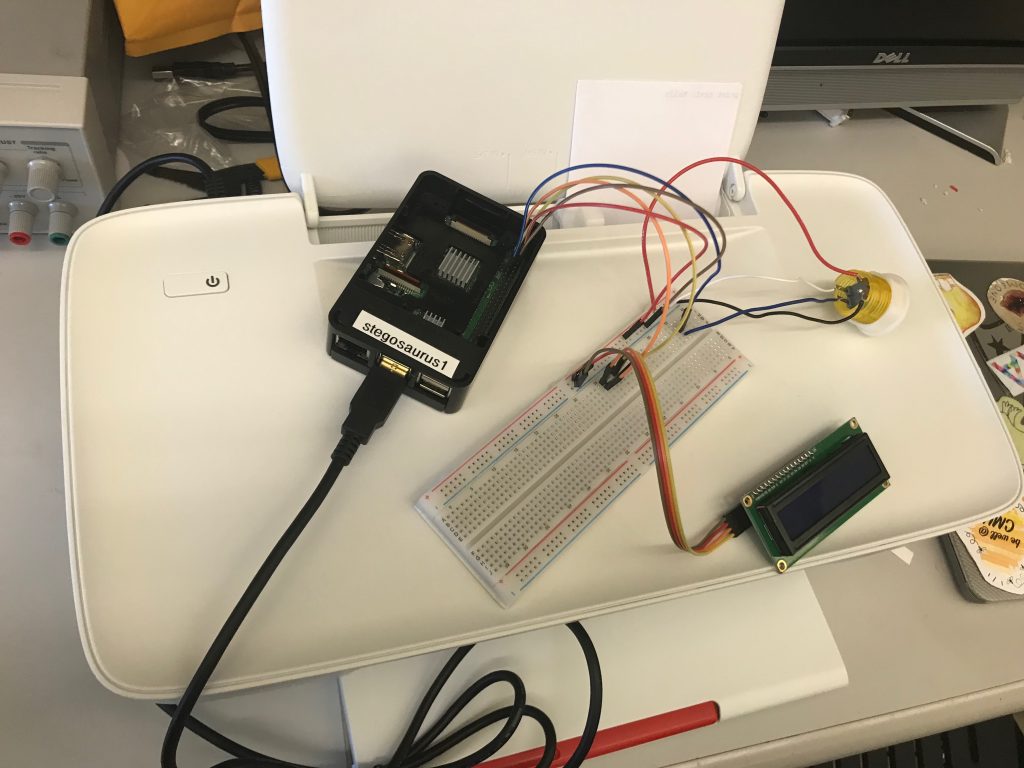

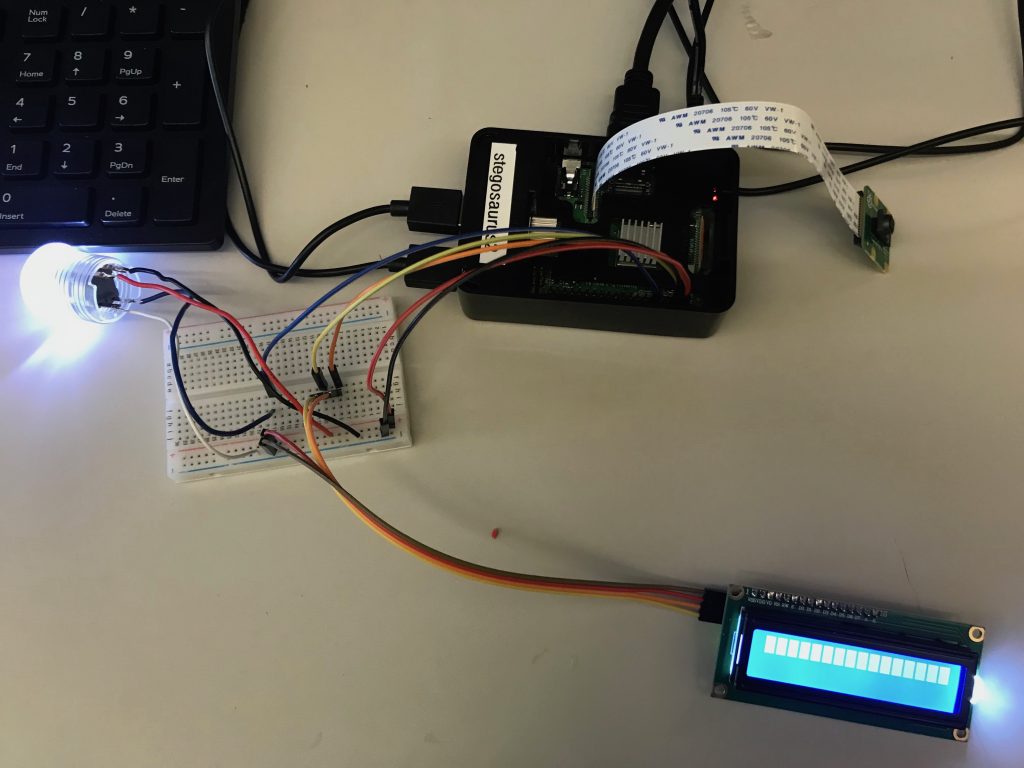

Last week and this week, I set up the first Raspberry Pi with an LCD screen, a keyboard, a button, and a printer. The hardware system is now automated: the user types a message into the Pi, sees it on the LCD screen, and hits the button, and the message prints out automatically.

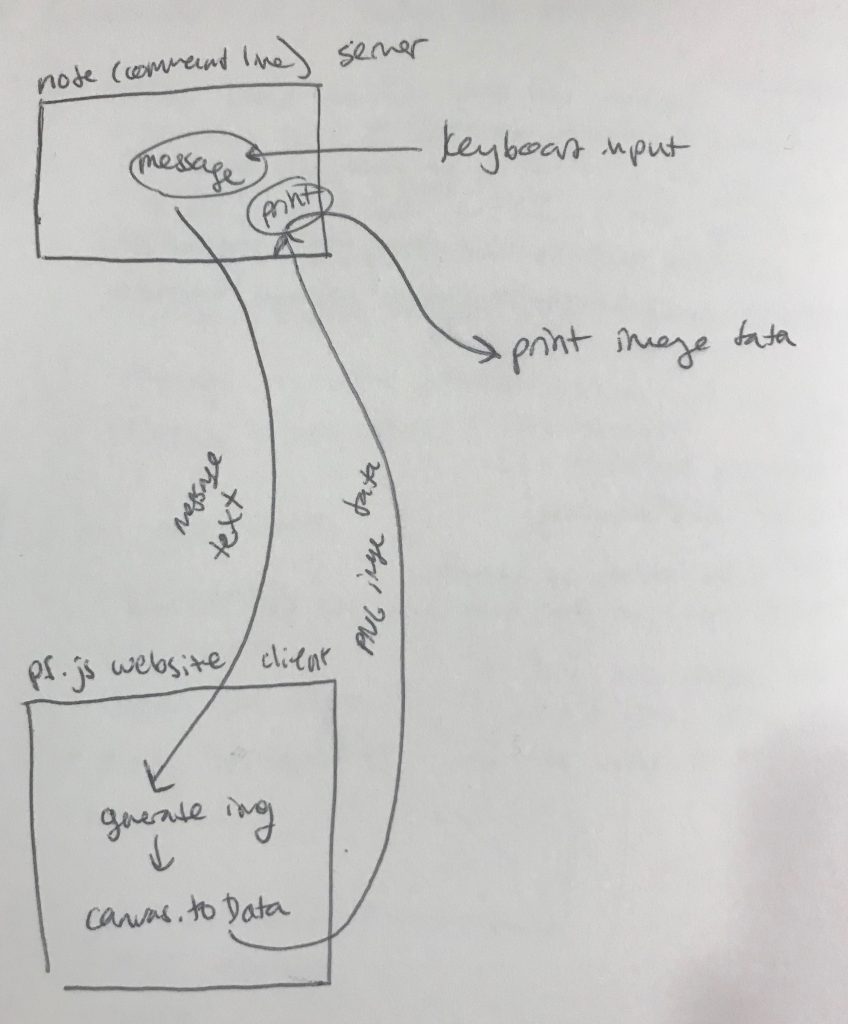

Because Snigdha's encoding system is written in p5.js for the web, we originally needed to communicate between the browser and a Node server in order to actually print out the image on the canvas. We figured that we needed to use web sockets to communicate back and forth between p5.js and Node. This came with several more problems involving client-side web programming. Between installing browserify and other packages to try to communicate via a web server, it took a big push to get things up and running here. The system I came up with is:

(1) user inputs message (string) via keyboard, which is received by local node server.

(2) local node server broadcasts message via socket.io.

(3) client-side p5.js app hears the message, and updates the image.

(4) p5.js app converts canvas to data image, and sends the data back (string) via socket.io.

(5) local node server receives PNG data, and prints out encoded image.

However, this would have required a lot of wrestling with obnoxious web programming. We decided instead to use Processing, which has command-line functionality available since it's written in Java. Snigdha reimplemented her code in Processing (Java). I wrote code to automatically call the printer from within Processing, and to automatically call Processing from JavaScript, which is what our GPIO system is still written in. This worked: we were able to print automatically, end to end, from keyboard input by pushing a button! Major milestone!

But alas, there is a problem. Averaged over four measurements, it took around 20 seconds to print the image after the button was pressed. This is pretty bad. It takes so long because launching a Processing sketch from the command line is slow. So even though my code is optimized to print quickly, the actual rendering of the image takes a very long time because of the graphical environment we used.

Right now, our main priority is getting everything functional. However, I would really like to spend a few days getting this to run much faster, and I have three ideas for how to do it. I want the printer to be called almost instantly after the message is entered; right now, that is the longest wait.

(1) Do the awful web server thing. It would be frustrating to get working, but web socket communication is a lot faster than calling Processing code from the command line.

(2) Use something like OSC (Open Sound Control) to let JavaScript and Processing communicate live without relaunching the app every time, though I'm not sure yet how that would work. I think this is the option I'm going to go for.

(3) Implement all of the hardware in Processing. It would require me to write my own I2C library for the LCD display, but I think it could work.

Decoding System

I also set up the second Raspberry Pi with a Pi Camera, an LCD screen, and a button. I wrote Python code that takes a photo with the Pi Camera whenever someone presses the button. The next step is to install OpenCV on the Raspberry Pi and use it to automatically process the image that is taken. We're also planning to add a digital touch screen so that participants can see the camera feed before they take a photo.

Snigdha

This week, I worked on modifying the encoding file so that it can run on the Raspberry Pi, which makes it easier to integrate with the hardware. This involves changing how input is handled so that we can read input from the hardware. It also involves making sure the file can run continuously instead of relying on a person to run it each time. This is still in progress. I also looked at using socket.io to get the input from the hardware into the generateImage file.

Shivani

Week 6 Update

This week's main goal was to continue integrating the different parts of our project. Shivani and Snigdha integrated the image scanning and processing with the decoding algorithm, and Caroline got the keyboard and printer integrated with the Raspberry Pis. We also started brainstorming different ways to make our pattern more complex and aesthetically pleasing.

Caroline

This week I integrated more of our end-to-end sender system on the first Raspberry Pi. Now, typing a message into a keyboard and hitting the button prints out the message automatically. There are two big things I am planning to get done this coming week: (1) hooking up the Raspberry Pi camera to a button on the second Pi, and (2) working to integrate the automatic printing with Snigdha’s encoding code. The biggest challenge here is that the encoding is happening in p5.js, which is browser-based, and does not have an easy way to interact with the command line for automatic printing. My starting point here will be using web sockets to communicate the keyboard input to the encoding algorithm, and then somehow sending the image to the printer, either by saving the image and running command line code, or by hosting the image on a local server and having some other command line code reach out and print it.

Snigdha

This week, I worked with Shivani and Caroline to figure out how to further beautify our encryption. More specifically, we looked at ways to modify the encryption so it looks more visually pleasing and complicated than rows of shapes. We also looked at other ways to encrypt the message before displaying it, or to alter the visuals to further obfuscate the message. These topics need a bit more research before we can begin implementing them. Additionally, I continued working with Shivani to integrate the CV with the decryption. I also talked with Caroline about the encryption and how to integrate it with the hardware.

Shivani

This week, I worked with Snigdha on connecting the computer vision and decoding parts together. I did a lot of testing to ensure that the order in which the image was scanned was correct and that the colors could be scanned easily. During class on Wednesday, I started looking into getting OpenCV set up on the other Raspberry Pi and porting our scanning mechanism there. For next week, I plan on working with Caroline to continue the setup process for the Raspberry Pi. I will also do more testing with that camera to make sure that everything can be scanned.

Week 5 Update

This week’s main goals were to integrate image scanning with OpenCV, to work on our decoding algorithm, and to get the Raspberry Pi encoding system working. Shivani and Snigdha worked together to get the CV to output data that could easily be processed by the decoding algorithm, which we also developed this week.

Caroline

This week, I set up the first Raspberry Pi with WiFi, git, and JavaScript libraries. I also installed CUPS to get the Raspberry Pi to work with the HP printer we got. However, I did not realize that I needed to order a printer cable, so now we are waiting on that. I also got I2C communication working with the LCD screen, and I have the Pi running our image encoding system, as well as a button for input. For next week, my goal is to get the system running end-to-end with the button, the screen, the keyboard, and the printer.
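Once the printer cable arrives, printing from a script should come down to handing the image file to CUPS. Below is a minimal Python sketch. It assumes the standard CUPS `lp` command is installed and uses a hypothetical printer name. The command is built separately so it can be checked without a printer attached.

```python
import shutil
import subprocess


def build_print_command(image_path, printer=None, fit_to_page=True):
    """Assemble an `lp` command line; `printer` is a CUPS destination name (hypothetical here)."""
    cmd = ["lp"]
    if printer:
        cmd += ["-d", printer]          # choose the destination printer
    if fit_to_page:
        cmd += ["-o", "fit-to-page"]    # scale the image to the paper size
    cmd.append(image_path)
    return cmd


def print_image(image_path, printer=None):
    """Send the encoded image to the printer, failing loudly if CUPS is missing."""
    if shutil.which("lp") is None:
        raise RuntimeError("CUPS 'lp' command not found")
    subprocess.run(build_print_command(image_path, printer), check=True)
```

Passing the arguments as a list, rather than one shell string, avoids quoting problems if a file name ever contains spaces.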

Snigdha

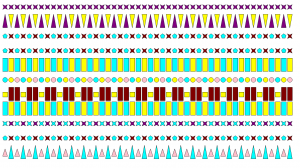

This week, I worked on adding randomization and encryption to our encoding algorithm. I did this by varying the shapes selected for a character. For example, a pattern that requires a four-edged shape could randomly pick between squares, rectangles, and diamonds. I also added an encryption algorithm that relies on mapping the alphabet to a QWERTY pattern to shuffle around the letters of the message. I also spent time this week writing a decoder that takes in the results from the CV detection in CSV format and processes them to determine the input message. This still needs more integration testing with the CV, but it works with manual unit tests. I also discussed a couple of alternative encoding ideas with Shivani. One is encoding the data in an existing image without a direct one-to-one mapping, taking advantage of the existing attributes of various images. We will look into this idea more after preparing for the midpoint demo.
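The two ideas in this paragraph can be sketched in Python. This is an illustration under assumptions, not Snigdha's code. We read "mapping the alphabet to a QWERTY pattern" as a simple substitution cipher along the keyboard rows (A becomes Q, B becomes W, and so on). The shape families in `VARIANTS` are hypothetical.

Decryption is just the inverse table. The decoder only needs a shape's edge count, not which variant was drawn. A fixed substitution like this hides the message from a casual reader, but it can be broken with letter-frequency analysis.

```python
import random
import string

# Assumed reading of the cipher: replace each letter with the key at the same
# position in QWERTY order (A->Q, B->W, C->E, ...).
PLAIN = string.ascii_uppercase
QWERTY = "QWERTYUIOPASDFGHJKLZXCVBNM"
ENCRYPT = str.maketrans(PLAIN, QWERTY)
DECRYPT = str.maketrans(QWERTY, PLAIN)

# Hypothetical shape families: the decoder only cares about the edge count,
# so the encoder may draw any member of the family.
VARIANTS = {
    0: ("circle", "ellipse"),
    3: ("triangle",),
    4: ("square", "rectangle", "diamond"),
}
EDGES_OF = {shape: edges for edges, shapes in VARIANTS.items() for shape in shapes}


def encrypt(message):
    """Apply the substitution; characters outside A-Z (e.g. spaces) pass through."""
    return message.upper().translate(ENCRYPT)


def decrypt(ciphertext):
    """Undo the substitution with the inverse table."""
    return ciphertext.translate(DECRYPT)


def pick_shape(edges, rng=random):
    """Randomly choose one drawable shape with the required number of edges."""
    return rng.choice(VARIANTS[edges])
```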

Shivani

Week 4 Update

This week's main goal was to integrate the OpenCV detection with the decoding algorithm. We worked on refining the CV to lower the processing time and let it detect multiple elements in parallel so it can send the data more quickly. We also discussed a way to reduce overhead and duplicated code across the decoding, encoding, and detection parts by using a common dictionary. For the coming week, we plan on integrating the image scanning with OpenCV, getting the Raspberry Pi set up with a printer and keyboard, and writing the decoding algorithm.
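Below is a sketch of the shared-dictionary idea. It assumes a hypothetical `codebook` module that the encoder, detector, and decoder would all import. The shape list and the filled flag are placeholders; the colors are the five we detect.

The point is that the decoding table is derived from the encoding table rather than maintained separately. That way, the two can never drift apart.

```python
from itertools import product

# Hypothetical shared codebook: the single table the encoder, detector, and
# decoder would all import instead of keeping their own copies.
SHAPES = ("circle", "triangle", "square")  # placeholder shape set
COLORS = ("maroon", "purple", "yellow", "cyan", "green")
ALPHABET = "ABCDEFGHIJKLMNOPQRSTUVWXYZ "

# Every (shape, color, filled) combination is a distinct symbol: 3 * 5 * 2 = 30.
SYMBOLS = list(product(SHAPES, COLORS, (True, False)))
if len(SYMBOLS) < len(ALPHABET):
    raise ValueError("not enough distinct symbols for the alphabet")

ENCODE = dict(zip(ALPHABET, SYMBOLS))
# Derived, never hand-edited, so it always stays the inverse of ENCODE.
DECODE = {symbol: char for char, symbol in ENCODE.items()}


def encode(message):
    """Map each character of the message to its symbol tuple."""
    return [ENCODE[c] for c in message.upper()]


def decode(symbols):
    """Map symbol tuples back to characters."""
    return "".join(DECODE[s] for s in symbols)
```

With this layout, `decode(encode("Hello World"))` gives back `"HELLO WORLD"`.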

Shivani

This week, after getting feedback from the presentation on Monday, I ran metrics on the CV for a few different patterns to benchmark our progress. I then refined some of the CV to reduce the computation time, by removing the image generation at each step and running the different detections in parallel. It currently takes 1.7 seconds to finish processing "Hello World," which is a good place for us and leaves time for printing and other UI features. In addition, I combined all of the different outputs of our CV detection (color, shape, filled/unfilled, order) into a 2D list to export. I met with Snigdha, and we decided that the best way to transfer the data to the decoding part of our project was a CSV file that she can parse. This upcoming week, I am going to work on exporting the data to a CSV file and handling some ordering edge cases that have come up.
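The pipeline described above (independent detectors run in parallel, merged into a 2D list, exported as CSV) might look roughly like this. The three `detect_*` functions are hypothetical stand-ins for the real OpenCV detectors, and the CSV columns are an assumed layout.

Threads only give real parallelism when the heavy work runs outside Python's GIL, as native OpenCV calls generally do. Otherwise, `ProcessPoolExecutor` is the usual drop-in alternative.

```python
import csv
import io
from concurrent.futures import ThreadPoolExecutor


# Hypothetical stand-ins for the real detectors: each takes the detected
# regions and returns one value per region, in the same order.
def detect_colors(regions):
    return [r["color"] for r in regions]


def detect_shapes(regions):
    return [r["shape"] for r in regions]


def detect_fill(regions):
    return [r["filled"] for r in regions]


def analyze(regions):
    """Run the detectors concurrently and merge them into a 2D list, one row per symbol."""
    with ThreadPoolExecutor() as pool:
        futures = [pool.submit(f, regions) for f in (detect_colors, detect_shapes, detect_fill)]
        colors, shapes, fills = (f.result() for f in futures)
    return [[i, c, s, int(filled)] for i, (c, s, filled) in enumerate(zip(colors, shapes, fills))]


def to_csv(rows):
    """Serialize the 2D list with a header row (assumed column names)."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["order", "color", "shape", "filled"])
    writer.writerows(rows)
    return buf.getvalue()
```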

Caroline

This week I was away visiting graduate schools and didn't have the opportunity to get much done. However, I am looking forward to starting the hardware next week. My goal for next week is to hook up Snigdha's image generation on a Raspberry Pi with a button and a printer, so that hitting the button prints out an image. This will require hooking up her JavaScript to save an image rather than draw on an HTML canvas. Then the saved image can be used to call the printer directly from JavaScript or the command line.

Snigdha

This week, I worked with Shivani to figure out an efficient way to combine the results from the CV algorithm with the decoding algorithm. We decided against doing all the decoding processing in the CV file. Instead, we agreed to export the information in a CSV that could be read in and decoded on the Raspberry Pi. With this system in place, I will spend the next week writing decoding functions. I also spoke with her about how well our most recent encoded pattern can be detected. I will be refining it further by changing the encoding pattern from six shapes to three and adding a filled/unfilled feature to the shapes. Lastly, I worked with the team on the Design Document due this Sunday.
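On the decoding side, reading the CSV could look like the sketch below. It assumes a hypothetical layout with one row per symbol and columns `order`, `color`, `shape`, `filled`. It also assumes a lookup table from `(shape, color, filled)` to a character. The real pattern uses several shapes per letter, so this is a simplification.

Rows are re-sorted by `order`, so the decoder does not depend on the CSV being written in reading order. Unknown symbols become `?` instead of crashing the decode.

```python
import csv
import io


def decode_csv(csv_text, lookup):
    """Decode a CSV of detected symbols into a message.

    Assumes (hypothetically) columns order, color, shape, filled and one
    symbol per character; `lookup` maps (shape, color, filled) -> character.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = sorted(reader, key=lambda r: int(r["order"]))
    chars = []
    for r in rows:
        key = (r["shape"], r["color"], r["filled"] == "1")
        chars.append(lookup.get(key, "?"))  # "?" marks a symbol the CV misread
    return "".join(chars)
```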

Week 3 Update

Summary

This week's main goals were to work on the OpenCV detection and develop key component detection, such as shape and color. We worked on a more robust encoding algorithm and on generating the encoded image by computer, to allow for further refinement based on the OpenCV results. We also spent some time looking into what scanning the image and processing it into a CV-readable format would look like. For next week, we are planning on refining our encoding/decoding algorithm and integrating it with CV. We also plan on looking at how to get OpenCV onto the iOS app and whether to scan using a smartphone camera or another Raspberry Pi.

Updated Gantt Chart

Shivani

This week, I made progress on detecting more detailed shapes and images. I worked on color detection for different lighting conditions for maroon, purple, yellow, cyan, and green. In addition, I created a filter to determine if a shape is filled or not. I also refined the initial shape detection from last week so it detects the symbols in a directed order. For this upcoming week, I’ll be working with Snigdha and Caroline to come up with a format to store all of the information about the pattern and finalize everything we need to scan for.
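One common way to make color detection tolerant of lighting is to classify in HSV space, because hue changes much less than raw RGB values when a color gets brighter or darker. Below is a minimal sketch using Python's standard `colorsys` module, with the five colors listed above. Maroon, being a dark red, sits near hue 0.

The hue centers and the saturation/value cutoffs are hypothetical starting points. They would need tuning against real photos.

```python
import colorsys

# Hypothetical hue centers in degrees; real values would come from test photos.
HUE_CENTERS = {"maroon": 0, "yellow": 60, "green": 120, "cyan": 180, "purple": 280}


def classify_color(r, g, b, min_sat=0.25, min_val=0.15):
    """Classify an 8-bit RGB sample by nearest hue; return None for gray or very dark pixels."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    if s < min_sat or v < min_val:
        return None  # too gray or too dark to trust
    hue = h * 360

    def circular_distance(center):
        # Hue wraps around, so 350 degrees is close to 0 degrees.
        d = abs(hue - center) % 360
        return min(d, 360 - d)

    return min(HUE_CENTERS, key=lambda name: circular_distance(HUE_CENTERS[name]))
```

For example, a dim maroon sample such as `(64, 0, 0)` and a brighter one such as `(128, 0, 0)` both map to `"maroon"`, since only their value channel differs.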

Caroline

This week I got some OpenCV demos running on iOS and started working on creating our "scanner" app. However, we realized that we may not actually want to use iOS for our scanner; we might want a second Raspberry Pi to scan the pattern back instead. Next week I will be traveling, but after that I will be working to integrate printing on the Raspberry Pi. We decided that we'd definitely like to print the pattern out, and now it's a question of whether we'll also use a Raspberry Pi with a camera for the scanning.

Snigdha

This week, I worked on the encoding/decoding algorithm to generate a visual encoding that is less rudimentary and more visually pleasing. The algorithm incorporates 4 shapes, 4 colors, and sets of 6 shapes to encode each letter. In addition, the algorithm now includes repetition for more accurate decoding. For next week, in addition to working on the design document, I'll be working with Shivani to further refine this algorithm and connect the encoding part to the CV.
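The exact repetition scheme isn't spelled out here. The simplest version is a repetition code: each symbol is drawn several times, and the decoder takes a majority vote. That way, a single misdetected shape in a group does not change the result. Below is a generic sketch; the repeat count `n=3` is an assumption.

```python
from collections import Counter


def repeat_encode(symbols, n=3):
    """Repeat each symbol n times (a simple repetition code)."""
    return [s for s in symbols for _ in range(n)]


def majority_decode(received, n=3):
    """Split the received symbols into groups of n and keep the most common symbol in each."""
    if len(received) % n:
        raise ValueError("received length is not a multiple of n")
    decoded = []
    for i in range(0, len(received), n):
        group = received[i:i + n]
        decoded.append(Counter(group).most_common(1)[0][0])
    return decoded
```

With `n=3`, any single error per group is corrected. Two errors in the same group may be outvoted, which is the trade-off against the extra space each letter takes on the card.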

Week 2 Update

Summary

This week, we were scheduled to further develop the OpenCV detection, start key component detection, finish up the encoding/decoding algorithm, and start developing the iOS app. We were set back a little by travel and illness and were not able to coordinate a meeting or completely finish our tasks. For next week, we are planning on getting shape and color detection working in OpenCV, finalizing our encoding/decoding algorithm, and playing around with the iOS app.

Shivani

I was out this week because I had strep throat. I plan on making up my work this week and putting in extra hours to further develop the key component recognition and build upon the color detection.

Caroline

This week I focused on exploring the integration of OpenCV into our iOS app. I made a simple project in Xcode and got OpenCV compiling within an iOS app context. Next week, I plan on continuing with the iOS OpenCV integration and getting a simple front-end OpenCV camera app running on a phone. I'd also like to get started on setting up the Raspberry Pi and printing to the printer.

Snigdha

I was out this week attending the Grace Hopper Conference. In order to make up the lost time, I plan on working extra this week to modify the program that generates the encoded message to be more visually pleasing and complex. In order to do so, I will be switching from using Python’s Tkinter to Processing, which allows for better granularity and customization of the shapes.