This week we have all been working on bringing our product up to where we hope it will be for our midsemester demo. We have run into a few issues with some of the technologies, but the project is moving forward after these minor hiccups. For instance, the Leap Motion controller has difficulty detecting hands when they are close to a reflective surface. We hope to solve this by covering the work area with a light-absorbing material, and we will be purchasing and testing Duvetyne for this purpose. We are also putting together our presentation, designing the physical frame, and creating the preliminary CV pipeline. We’ve placed some orders for parts and have been able to borrow others from the department. So far things seem to be on a good path.

Aneek’s Status Update for 2/22

This week I mainly focused on testing the Leap Motion controller in various conditions and exploring the SDKs. It turns out that the company behind the controller has pivoted since I last used the controller and the new SDKs are focused on VR game development purposes, so I installed the original library (last released in 2017) to see if it would still work. It requires Python 2.7, but I was able to get a simple hand and gesture tracker up and running to explore the characteristics of its tracking. I measured that it has an effective range of about 22″ when facing upward, but we found some issues when we flipped it over to track hands while facing downwards. Some more research revealed that the tracking is done through IR LEDs and cameras, so when the controller was oriented downward, the reflection of the IR light off the surface below the hands threw off the software and led to issues. I found a tool from Ultraleap (the company behind the controller) that allowed me to see the actual image view of what the cameras were picking up, and was able to confirm that it was the reflection of the IR light washing out the image that was likely causing this. I worked with Connor to do some research on materials that would reflect less IR light and found a few options, including Duvetyne and Acktar Light Absorbent Foil. Duvetyne was the most affordable one and still promised significant IR absorption, so we are planning on purchasing a sheet of it to lay underneath the frame to assist the downward-facing Leap Motion’s hand-tracking.

I also worked on the system/software architecture and made some diagrams for the design review slides. Because I didn’t want the old Leap Motion SDK to force all three of us to write in Python 2.7, I came up with a simple client-server communication protocol so that the rest of the codebase can be written in Python 3 and use sockets to communicate with the hand-tracking code. Since we have strict latency requirements, the latency added by the sockets needs to stay low even though the connection is completely local, so I will keep measuring it and look for ways to minimize the amount of data that needs to be communicated.
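To give a rough idea of how the local socket overhead could be measured, here is a minimal sketch. It is not our actual protocol: the newline-delimited JSON messages, the `seq` and `tip` fields, and the function names `serve_once` and `measure_round_trips` are all made up for illustration, and both ends run in Python 3 here, whereas the real hand-tracking side would be the Python 2.7 process.

```python
import json
import socket
import threading
import time

HOST = "127.0.0.1"  # assumption: both processes run on the same machine


def serve_once(server_sock):
    """Stand-in for the hand-tracking process: answer each request line
    with a fake fingertip position (hypothetical message format)."""
    conn, _ = server_sock.accept()
    with conn, conn.makefile("rb") as reader:
        for line in reader:
            request = json.loads(line)
            reply = {"seq": request["seq"], "tip": [0.0, 0.0, 0.0]}
            conn.sendall(json.dumps(reply).encode() + b"\n")


def measure_round_trips(n=200):
    """Return a list of n round-trip times in milliseconds."""
    server_sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server_sock.bind((HOST, 0))  # port 0 lets the OS pick a free port
    server_sock.listen(1)
    port = server_sock.getsockname()[1]
    worker = threading.Thread(target=serve_once, args=(server_sock,), daemon=True)
    worker.start()

    times_ms = []
    with socket.create_connection((HOST, port)) as client:
        # Disable Nagle's algorithm so small messages are sent immediately.
        client.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
        with client.makefile("rb") as reader:
            for seq in range(n):
                start = time.perf_counter()
                client.sendall(json.dumps({"seq": seq}).encode() + b"\n")
                reply = json.loads(reader.readline())
                times_ms.append((time.perf_counter() - start) * 1000.0)
                if reply["seq"] != seq:
                    raise RuntimeError("reply out of order")
    worker.join(timeout=1.0)
    server_sock.close()
    return times_ms


if __name__ == "__main__":
    samples = sorted(measure_round_trips())
    print("median round trip (ms):", samples[len(samples) // 2])
```

Keeping each message to a single short line is one way to limit how much data crosses the socket per frame. The numbers will depend on the machine, so they would need to be measured on the actual demo hardware.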

Connor’s Status Update for 2/22

This week, I made a decision on which webcam we should use and I worked on designing the physical frame for our project. Earlier in the week, I ran some tests and it was clear that the pixel count was very important. We chose the Logitech C920 because it is a 15 megapixel camera. As for the frame, I finalized a working prototype.

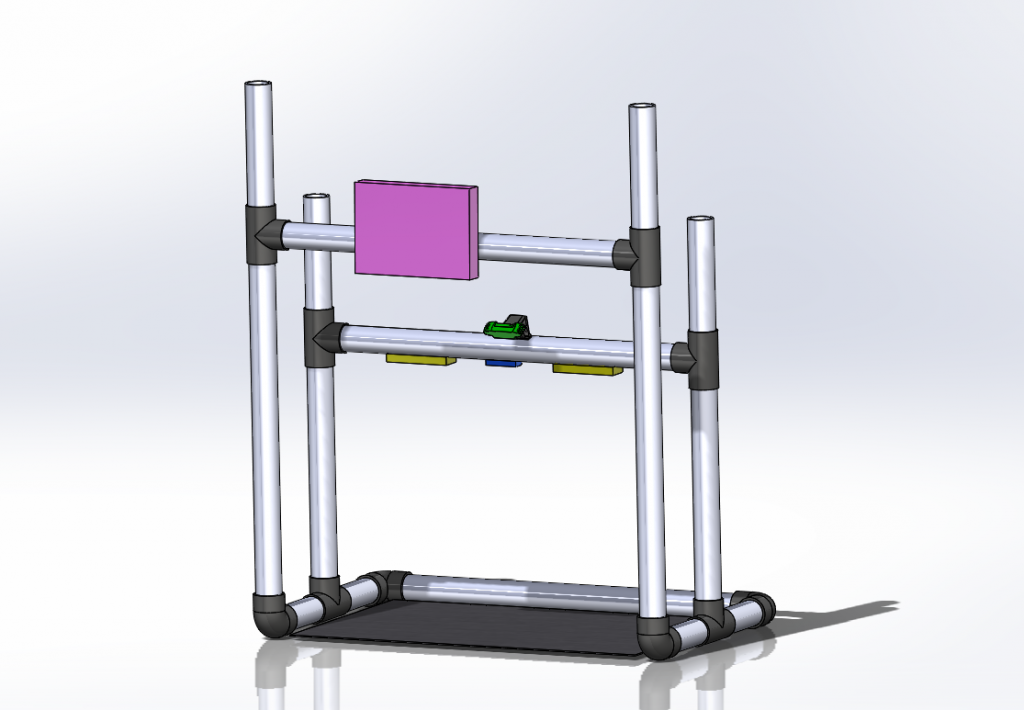

The frame’s purpose is to hold the projector, the webcam, and the Leap Motion controller at the best height and position above the puzzle. In class, I took measurements to ensure that the projector could cover the entire puzzle and work area. Then I mocked up some designs in SolidWorks. The SolidWorks render shows how our frame will likely end up.

The structure is made entirely out of PVC pipes. For reference, the tallest vertical pipe is 4 ft tall. The horizontal pipes are attached to the vertical pipe by “slip tee” joints so that we can adjust the heights of the components if need be. The smaller components will be mounted with Velcro, while the projector will be screwed in. The platform on the bottom will be covered with a fabric like Duvetyne that is able to absorb a lot of light.

Andrew’s Status Update for 2/22

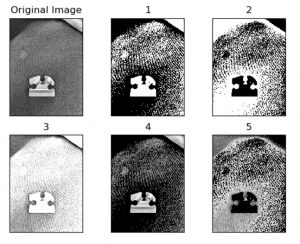

This week has been pretty good! I’ve been playing around with a bunch of different ways of thresholding a puzzle piece, both individually and in combination. Each method seems to have its strengths, but combining most of them with Otsu’s method seems to generate helpful results. These should be solid enough to extract which blob is the actual piece, and the next step is to try to match the piece to where it should fall on the image itself. I’ve also been playing around with the CLAHE (Contrast Limited Adaptive Histogram Equalization) method in order to normalize lighting conditions in the images, as we foresee lighting being a potential problem while the user is moving around, depending on how glossy the puzzle ends up being. (We may have to use some light-diffusing material like tissue paper to physically create more even lighting on the pieces.)
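For anyone curious what Otsu’s method actually does, here is a small pure-Python sketch of the idea: it picks the gray level that best separates the pixels into two classes by maximizing the between-class variance. This is only an illustration on a flattened list of 8-bit values, not our pipeline code. In practice OpenCV’s `cv2.threshold` with the `cv2.THRESH_OTSU` flag does this for us, and the function names `otsu_threshold` and `binarize` are made up for this example.

```python
def otsu_threshold(pixels):
    """Return the 8-bit threshold t that maximizes between-class variance.

    `pixels` is a flat list of ints in 0..255 (a flattened grayscale image).
    Pixels <= t form one class and pixels > t form the other.
    """
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels at or below the candidate threshold
    sum0 = 0  # sum of intensities at or below the candidate threshold
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue  # no pixels in the lower class yet
        w1 = total - w0
        if w1 == 0:
            break  # every pixel is in the lower class; nothing left to split
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        between_var = w0 * w1 * (mean0 - mean1) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t


def binarize(pixels):
    """Return (threshold, mask) where mask is 255 for pixels above the threshold."""
    t = otsu_threshold(pixels)
    return t, [255 if p > t else 0 for p in pixels]
```

For example, `binarize([10, 12, 11, 200, 205, 198])` separates the three dark values from the three bright ones. The sketch does not cover the CLAHE step or the combinations with other thresholding methods.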

Team Status Update for 2/15

This week, we took some time to do our assigned reading and write-ups on The Pentium Chronicles. We also drafted a more complete system architecture and dove into the software architecture as well. We continued to research camera options and started working with OpenCV to do some performance tests.

Connor’s Status Update for 2/15

This week I continued to research which materials we should buy. I created a table listing the projectors that we are considering along with their specifications and prices. We are now planning on using the Epson PowerLite 1776W, which is offered by the ECE lending desk. However, there could be an issue, as it is not designed to be used pointing down. As for the frame, I believe PVC pipes will be the sturdiest and most efficient option. Next week, I will test out our secondary projector option so we have a backup plan. I will also begin designing the frame and ordering the materials for it.

Andrew’s Status Update for 2/15

Happy Valentine’s Day!

This week I’ve been working on setting up OpenCV and going through its documentation for the parts we intend to use for this project. I was also able to get the projector we intend to use, and we did some preliminary testing to ensure that it would be powerful enough for our needs. Finally, I have been refining how our algorithm will work in order to optimize responsiveness and make our project feel more fluid to use.

Aneek’s Status Update for 2/15

This week I mainly worked on two things. First, I started reading the documentation on the Leap Motion SDK and its gesture detection. We are planning on using the controller in an inverted setup (facing down), and I discovered that this may cause some challenges in detecting hand orientation. Fortunately, that shouldn’t interfere with finger and tap detection, but we will need to run some tests once the device is in hand. Second, I drafted our system and software architecture and started breaking down the software into individual libraries that we can then divide up and work on. I also recorded our ideas on the initialization and the responsive animations.
