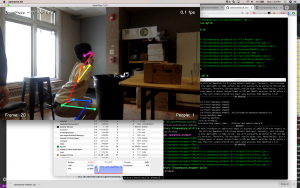

Since my last status report, I have made significant progress. One of the first things I realized was that I no longer wanted to use AWS Kinesis Video Streams. Instead, I now have a script running on the Raspberry Pi that captures an image every x seconds and sends it to a server on our EC2 instance, which hosts the OpenPose library.
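As a rough illustration of the capture-and-send loop described above, here is a minimal sketch. It is not the actual script: the endpoint URL, the interval value, and the function names `build_request` and `run_capture_loop` are all hypothetical, and the camera and network calls are passed in as plain callables so the loop itself stays independent of any particular camera library.

```python
import time
import urllib.request
from typing import Callable, Optional

# Hypothetical values: the real endpoint and interval are not given in this report.
SERVER_URL = "http://example.invalid:8000/frame"
INTERVAL_SECONDS = 5.0  # the "x" in "every x seconds"


def build_request(jpeg_bytes: bytes, url: str = SERVER_URL) -> urllib.request.Request:
    """Wrap one JPEG frame in an HTTP POST request."""
    return urllib.request.Request(
        url,
        data=jpeg_bytes,
        headers={"Content-Type": "image/jpeg"},
        method="POST",
    )


def run_capture_loop(
    capture: Callable[[], bytes],
    send: Callable[[urllib.request.Request], object],
    interval: float = INTERVAL_SECONDS,
    max_frames: Optional[int] = None,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Capture a frame, send it, wait, and repeat.

    `capture` returns JPEG bytes from the camera and `send` delivers the
    request (for example urllib.request.urlopen). Returns the number of
    frames sent. With max_frames=None the loop runs until interrupted.
    """
    sent = 0
    while max_frames is None or sent < max_frames:
        send(build_request(capture()))
        sent += 1
        # Only wait if another frame is going to be captured.
        if max_frames is None or sent < max_frames:
            sleep(interval)
    return sent
```

On the Pi, `capture` would wrap whatever camera interface is in use and `send` could simply be `urllib.request.urlopen`. Keeping those two pieces injectable makes it easy to try the loop on a laptop with a fixed test image before deploying it.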

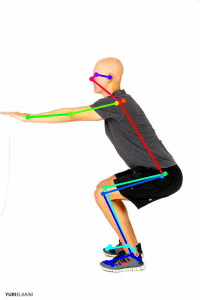

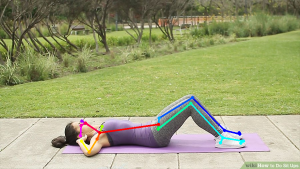

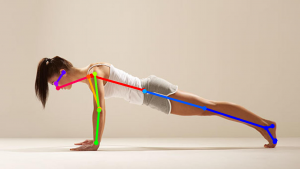

To make the OpenPose server robust, I used Docker to build an image that contains the library and a Python server that I wrote to handle requests. This server takes an image from the Raspberry Pi, runs it through OpenPose, and hands the result off to Nakul’s backend server as a JSON file containing information on the user’s joints.
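The request flow described above (image in, pose estimation, JSON out) can be sketched roughly as follows. This is an assumption-laden outline rather than the server's actual code: the JSON field names, the `min_confidence` filter, and the functions `joints_to_json` and `handle_frame` are hypothetical. The OpenPose call is represented by an injected `estimate_pose` callable, assumed to return one `(x, y, confidence)` triple per joint, and `forward` stands in for whatever delivers the payload to the backend.

```python
import json
from typing import Callable, Sequence, Tuple

# One detected joint: pixel coordinates plus a confidence score.
Keypoint = Tuple[float, float, float]


def joints_to_json(keypoints: Sequence[Keypoint], min_confidence: float = 0.0) -> str:
    """Serialize joints to JSON, dropping those below min_confidence.

    The field names ("joints", "index", "x", "y", "confidence") are a
    hypothetical schema, not the format the backend actually expects.
    """
    joints = [
        {"index": i, "x": x, "y": y, "confidence": c}
        for i, (x, y, c) in enumerate(keypoints)
        if c >= min_confidence
    ]
    return json.dumps({"joints": joints})


def handle_frame(
    jpeg_bytes: bytes,
    estimate_pose: Callable[[bytes], Sequence[Keypoint]],
    forward: Callable[[str], object],
    min_confidence: float = 0.0,
) -> str:
    """Run one frame through pose estimation and forward the JSON result."""
    keypoints = estimate_pose(jpeg_bytes)  # stand-in for the OpenPose call
    payload = joints_to_json(keypoints, min_confidence)
    forward(payload)  # stand-in for the hand-off to the backend server
    return payload
```

Passing the pose estimator and the forwarding step in as arguments means the handler can be exercised with stub functions outside the Docker image, where OpenPose is not installed.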

I also had the server upload the OpenPose output images (a picture of the user with highlighted joints) to an S3 bucket, and I wrote a simple webpage that displays this image as it updates.
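One way the upload step could look is sketched below. It assumes the server overwrites a single fixed object key so the webpage can keep reloading the same URL; the report does not say this is how it works. The key name `latest.jpg` and the function `upload_latest` are hypothetical. The function expects a boto3 S3 client and uses its `put_object` call.

```python
from typing import Any

# Hypothetical object key: overwriting one key lets a page poll a fixed URL.
LATEST_KEY = "latest.jpg"


def upload_latest(s3_client: Any, bucket: str, jpeg_bytes: bytes, key: str = LATEST_KEY) -> str:
    """Upload the newest annotated frame, replacing the previous one.

    `s3_client` is expected to be a boto3 S3 client. CacheControl is set to
    "no-cache" so browsers revalidate the image instead of showing a stale copy.
    """
    s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=jpeg_bytes,
        ContentType="image/jpeg",
        CacheControl="no-cache",
    )
    return key
```

In use, the client would come from `boto3.client("s3")`, and the bucket name would be whichever bucket the webpage reads from.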

Next, I want to take the output of Cooper’s workout classifier and display it on the webpage next to the image of the user. This will give us a good idea of how well the classifier identifies which workout the user is doing.

This recent stretch of work was very productive and I am confident that we will deliver a successful and interesting final product.