This Week

User Interface Testing [1][2][7]

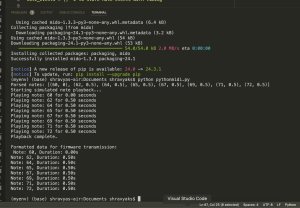

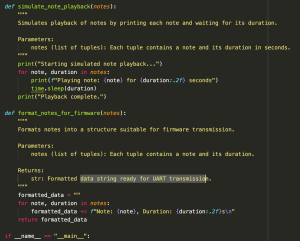

This week, I began by coding a testing program for the user interface. Since the eye-tracking is not ready to be integrated with the application yet, I programmed the backend to respond to commands from the keyboard. With this code, I was able to test the progress bars and the backend logic that checks for consistent command requests.

To pick a command with this program, the user has to press the corresponding key five times in a row. Pressing any other key in between resets the progress bar.
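The selection rule above can be sketched as a small counter that is independent of the GUI. This is only an illustration, not the code from my application: the class name `CommandSelector`, its method names, and the choice to let the interrupting key start its own streak at one press are all assumptions.

```python
class CommandSelector:
    """Confirm a command only after the same key is pressed N times in a row."""

    def __init__(self, required_presses=5):
        self.required_presses = required_presses
        self.current_key = None  # key whose streak is being counted
        self.count = 0           # consecutive presses of current_key

    def press(self, key):
        """Record one key press; return the key once it is confirmed, else None."""
        if key != self.current_key:
            # A different key interrupts the streak, so progress starts over.
            # Assumption: the new key begins its own streak with this press.
            self.current_key = key
            self.count = 0
        self.count += 1
        if self.count >= self.required_presses:
            # Command confirmed: clear the state for the next selection.
            self.current_key = None
            self.count = 0
            return key
        return None

    @property
    def progress(self):
        """Fraction of the way to confirmation, e.g. for a progress bar."""
        return self.count / self.required_presses
```

In a Tkinter program, something like `root.bind("<Key>", lambda event: selector.press(event.keysym))` could feed key presses into this object, and `selector.progress` could drive the progress bar value.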

It will hopefully be a quick task to integrate the eye-tracking with the application, based on the foundation of this code.

Here is a short video demonstrating some basic functionality of the program, including canceling commands: https://drive.google.com/file/d/16NyUo_lRSzgLYIwVOHCEuoKJ6CYjiKip/view?usp=sharing

Secondary UI [3][4][5][6][7]

After that, I also made the integration between the primary and secondary UI smoother by writing a secondary window with the sheet music that updates as necessary.
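A secondary window of this kind might look roughly like the sketch below, which uses a Tkinter `Toplevel` and Pillow's `Image.thumbnail()` as in the references for this section. The class name `SheetMusicWindow`, the `show` method, and the default maximum size are hypothetical and are not taken from my actual code.

```python
class SheetMusicWindow:
    """Secondary window that shows the latest rendering of the sheet music."""

    def __init__(self, root, max_size=(800, 1000)):
        import tkinter as tk  # imported here so the class can be defined headless

        self.max_size = max_size  # assumed display limit in pixels
        self.window = tk.Toplevel(root)
        self.window.title("Sheet Music")
        self.label = tk.Label(self.window)
        self.label.pack()
        self.photo = None  # keep a reference so Tk does not discard the image

    def show(self, image_path):
        """Load the image at image_path, shrink it to fit, and display it."""
        from PIL import Image, ImageTk  # Pillow (third-party)

        with Image.open(image_path) as image:
            # thumbnail() resizes in place and preserves the aspect ratio.
            image.thumbnail(self.max_size)
            self.photo = ImageTk.PhotoImage(image)
        self.label.configure(image=self.photo)
```

Calling `show()` again with a newly rendered image replaces the picture in the existing window, which is one way to get a window that "updates as necessary" without reopening it.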

In the interim, I also added a note in the message box about where the cursor is, since I haven’t yet figured out how to show that on the image of the sheet music.

Pre-Interim Demo

On Wednesday, I demonstrated my current working code to Professor Bain and Joshna, and they had some suggestions on how to improve my implementation:

- Highlighting the note length/pitch on the UI after it’s been selected.

- Electronically playing the note while the user is looking at it (for real-time feedback, which makes the program more accessible for those without perfect pitch).

- Setting up a file-getting window within the program for more accessible use.

I implemented the first suggestion in my code [3][4][6], and attempted to implement the second, but ran into some issues with the Python sound libraries. I will continue working on that issue.
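As a starting point for the note-playing suggestion, the sketch below converts a MIDI note number to a frequency with the standard equal-temperament formula (A4 = MIDI note 69 = 440 Hz) and writes a short sine tone to a WAV file using only the standard library. It does not solve the playback problem itself: actually playing the file still needs a sound library or a platform-specific call, which is not shown. The function names and default parameters are assumptions for illustration.

```python
import math
import struct
import wave


def midi_to_frequency(note_number):
    """Convert a MIDI note number to Hz (equal temperament, A4 = 69 = 440 Hz)."""
    return 440.0 * 2 ** ((note_number - 69) / 12)


def write_tone(path, note_number, seconds=0.5, sample_rate=44100, volume=0.5):
    """Write a mono 16-bit sine tone for the given MIDI note to a WAV file.

    Returns the number of audio frames written.
    """
    frequency = midi_to_frequency(note_number)
    frame_count = int(seconds * sample_rate)
    frames = bytearray()
    for i in range(frame_count):
        sample = volume * math.sin(2 * math.pi * frequency * i / sample_rate)
        # Scale to the signed 16-bit range and pack as little-endian.
        frames += struct.pack("<h", int(sample * 32767))
    with wave.open(path, "wb") as wav_file:
        wav_file.setnchannels(1)       # mono
        wav_file.setsampwidth(2)       # 2 bytes = 16-bit samples
        wav_file.setframerate(sample_rate)
        wav_file.writeframes(bytes(frames))
    return frame_count
```

For example, `write_tone("c4.wav", 60)` would produce half a second of middle C. Generating the tone ahead of time keeps the synthesis separate from whichever playback mechanism ends up working.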

I also decided to leave the third idea for later because it requires a larger block of programming, but it remains important because it will improve the accessibility of the system.

(With the new updates to the code, the system currently looks slightly different from the video above, but is mostly the same.)

Eye-Tracking

On Thursday, Peter and I also met with Magesh Kannan to discuss the use of eye-tracking in our project. He suggested we use the MediaPipe library to implement eye-tracking, so Peter is working on that. Magesh offered his help if we need to optimize our eye-tracking later, so we will reach out to him if necessary.

Testing

There are no quantitative requirements that involve only my subsystem; the latency requirements, for example, involve both my application and the eye-tracking, so I will have to wait until the subsystems are all integrated to start those tests.

However, I have been doing more qualitative testing as I’ve been writing the program. I’ve tested various sequences of key presses to view the output of the system and these tests have revealed several gaps in my program’s design. For example, I realized after running a larger MIDI file from the internet through my program that I had not created the logic to handle more than one page of sheet music. My testing has also revealed some bugs having to do with rests and chords that I am still working on.

Another thing I have been considering in my testing is accessibility. Although our official testing won’t happen until after integrating with the eye-tracking, I have been attempting to make my application as accessible as possible during design so that testing doesn’t uncover any major problems. Right now, the accessibility issue I need to work on next is opening files from within the program, because using an external file dialog requires a mouse click.
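One possible first step toward an in-program file picker is to list the available MIDI files so the interface can present them as selectable options instead of opening an external dialog. The sketch below is an assumption about how that could work, not something I have implemented: the function name `list_midi_files` and the choice of `.mid` and `.midi` extensions are hypothetical.

```python
from pathlib import Path


def list_midi_files(directory):
    """Return the MIDI files directly inside directory, sorted by name."""
    return sorted(
        (
            p
            for p in Path(directory).iterdir()
            # Skip folders and anything that is not a MIDI file.
            if p.is_file() and p.suffix.lower() in {".mid", ".midi"}
        ),
        key=lambda p: p.name.lower(),  # case-insensitive ordering
    )
```

The resulting list could then be shown as commands in the existing UI, so that choosing a file uses the same selection mechanism as every other command.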

Next Week

The main task for next week is the interim demo. On Sunday, I will continue working to prepare my subsystem (the application) for the demo, and then on Monday and Wednesday during class, we will present our project together.

After that, my main tasks will be to continue integrating my application with Shravya’s and Peter’s work and to start testing the system, which will be a large undertaking.

There are also some bug fixes and further implementation I need to keep working on, such as the issues with rests and chords in the MIDI file and displaying error messages.

References

[1] tkinter.ttk – Tk themed widgets. Python documentation. (n.d.). https://docs.python.org/3.13/library/tkinter.ttk.html

[2] Keyboard Events. Tkinter Examples. (n.d.). https://tkinterexamples.com/events/keyboard/

[3] Overview. Pillow Documentation. (n.d.). https://pillow.readthedocs.io/en/stable/

[4] Python PIL | Image.thumbnail() Method. GeeksforGeeks. (2019, July 19). https://www.geeksforgeeks.org/python-pil-image-thumbnail-method/

[5] Tkinter Toplevel. Python Tutorial. (n.d.). https://www.pythontutorial.net/tkinter/tkinter-toplevel/

[6] Python tkinter GUI dynamically changes images. php. (2024, Feb 9). https://www.php.cn/faq/671894.html

[7] Shipman, J.W. (2013, Dec 31). Tkinter 8.5 reference: a GUI for Python. tkdocs. https://tkdocs.com/shipman/tkinter.pdf

I also identified some errors with the logic having to do with the rest-notes in the composition, but I wasn’t able to identify what the issue was after some debugging, so I will have to continue working on that next week.
