What did you personally accomplish this week on the project?

This week, I had some other responsibilities to attend to, so this report won’t be as long as last week’s (which may be a relief, depending on the reader).

In general, my main focus this week was attempting to implement the inference/memory optimizations discussed in previous meetings. These had two goals:

1. Reducing the number of inferences performed, by skipping inference on empty/unchanged rooms

2. Not saving irrelevant images, and capping the number of images that can be stored, so that they do not occupy all of the memory on our Raspberry Pi/server

Here were the steps taken to achieve these goals:

- Recorded a “sample trace”

- To test algorithms for the goals above, we needed a “realistic” test trace to run them on. Unlike our ML model training data, which aims to capture as many diverse scenarios as possible, this trace should consist of many consecutive, similar-looking images, to test our algorithms’ ability to remove irrelevant images.

- To capture a “sample trace”, I simply set up a Raspberry Pi camera in my room and configured it to take and save an image every 5 seconds over the course of ~3-4 hours. This time can be broken up into four “periods”:

- “active”

- This is the time right after I set up the camera. In general, I tried to move things around my room for a few minutes to simulate a period of activity.

- “empty”

- At this point, I left my room to get dinner at Tepper. Nothing else was happening in the room during this time, so we should not need to run inference on anything in this period.

- “eating”

- For this period, I had brought my food back to my room and was eating it at my desk. This should be an interesting section to monitor because I was not moving around much, but there was still a person in the room. As such, naively “deleting every picture that does not have a person in it” from the database would not be enough: every image in this period contains a person, even though most of them are nearly identical.

- “desk work”

- For this period, I was doing work at my desk. This period will be interesting to optimize because there are probably relatively large pixel differences between photos, as my monitors kept flashing between light and dark mode.

- “active”
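The capture setup above boils down to a fixed-interval loop. The sketch below is illustrative rather than the script actually used: it assumes a hypothetical `capture(path)` callable that saves one frame (on the Pi it could wrap whatever camera library is in use), and the `frame_00000.jpg` naming is an arbitrary choice. It sleeps until the next scheduled tick so that time spent capturing does not accumulate as drift over a multi-hour trace.

```python
import time
from pathlib import Path
from typing import Callable, List


def record_trace(
    capture: Callable[[Path], None],  # hypothetical: saves one frame to `path`
    out_dir: Path,
    interval_s: float = 5.0,
    duration_s: float = 3 * 60 * 60,  # ~3 hours -> 2160 frames at 5 s
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> List[Path]:
    """Capture one frame every `interval_s` seconds for `duration_s` seconds.

    The caller is responsible for creating `out_dir`.
    """
    n_frames = int(duration_s // interval_s)
    start = clock()
    paths: List[Path] = []
    for i in range(n_frames):
        # Zero-padded index so that sorting file names preserves capture order.
        path = out_dir / f"frame_{i:05d}.jpg"
        capture(path)
        paths.append(path)
        # Sleep until the next scheduled tick rather than a flat interval_s,
        # so time spent inside capture() does not accumulate as drift.
        delay = start + (i + 1) * interval_s - clock()
        if delay > 0:
            sleep(delay)
    return paths
```

Injecting `clock` and `sleep` is only there so the loop can be exercised without waiting hours or touching a camera.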

- Tried different “pixel-difference” optimizations

- Now that I had recorded my photos, I tested different ways to try to remove useless images.

- SSIM Index

- This is a method of calculating image difference that can easily be written in Python with a single library call. Rather than comparing raw pixel values, it compares local luminance, contrast, and structure statistics (means, variances, and covariances) computed over small windows of the two images.

- On my laptop, this method took longer to execute per pair of images than a single YOLO inference. Since my goal is to save computing power, this was a non-starter.
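To make the SSIM description above concrete, here is a simplified, single-window version of the SSIM formula in NumPy. This is a sketch, not what the library call computes: implementations such as scikit-image’s `skimage.metrics.structural_similarity` evaluate the same statistics over many small sliding windows and average the results, which is likely part of why SSIM costs so much more than a plain pixel difference.

```python
import numpy as np


def global_ssim(x: np.ndarray, y: np.ndarray, data_range: float = 255.0) -> float:
    """SSIM evaluated over the whole image as a single window (simplified).

    Returns 1.0 for identical images; lower values mean less similar.
    """
    if x.shape != y.shape:
        raise ValueError(f"shape mismatch: {x.shape} vs {y.shape}")
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    # Stabilizing constants, using the usual K1 = 0.01, K2 = 0.03 defaults.
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    # Luminance, contrast, and structure terms combined into the standard SSIM ratio.
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)
```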

- Pixel Difference

- This was calculated by summing the absolute differences between corresponding pixels in the two images. It was substantially faster than YOLO inference.

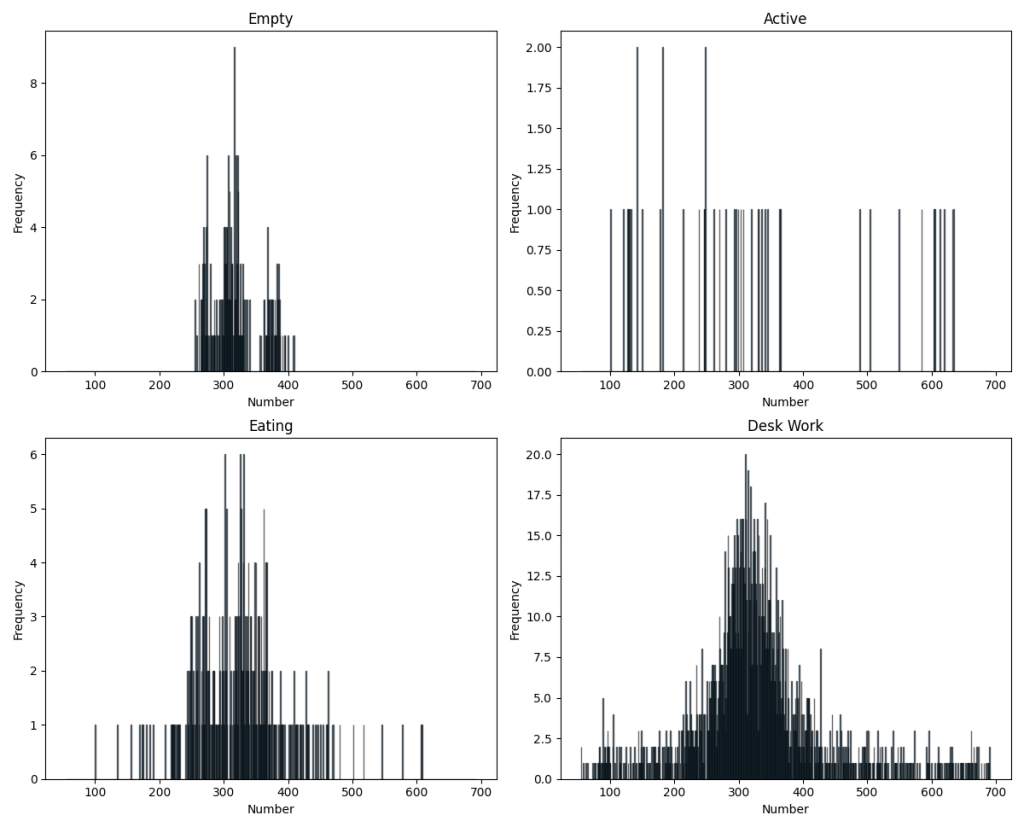

- Here are some charts showcasing the frequency of certain difference values for each of the “periods”:

- This seems useful for finding a threshold, but is still a bit noisy.
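A minimal NumPy sketch of the summed absolute pixel difference described above. It assumes frames are loaded as `uint8` arrays (typical for decoded JPEGs); subtracting `uint8` arrays directly wraps around instead of going negative, so the frames are widened before subtracting.

```python
import numpy as np


def abs_pixel_difference(a: np.ndarray, b: np.ndarray) -> int:
    """Sum of |a - b| over every pixel (and channel) of two equally sized images."""
    if a.shape != b.shape:
        raise ValueError(f"shape mismatch: {a.shape} vs {b.shape}")
    # Widen first: with uint8 inputs, 10 - 20 would wrap around to 246.
    diff = a.astype(np.int32) - b.astype(np.int32)
    # Accumulate in int64 so large, high-resolution frames cannot overflow.
    return int(np.abs(diff).sum(dtype=np.int64))
```

Because this is a raw sum, any threshold on it scales with the camera resolution; dividing by the number of pixels (a mean absolute difference) would make the threshold resolution-independent.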

- MSE (Mean Squared Error)

- This metric is calculated by averaging the squared per-pixel differences between the two images. It ran at a speed comparable to the plain pixel difference.

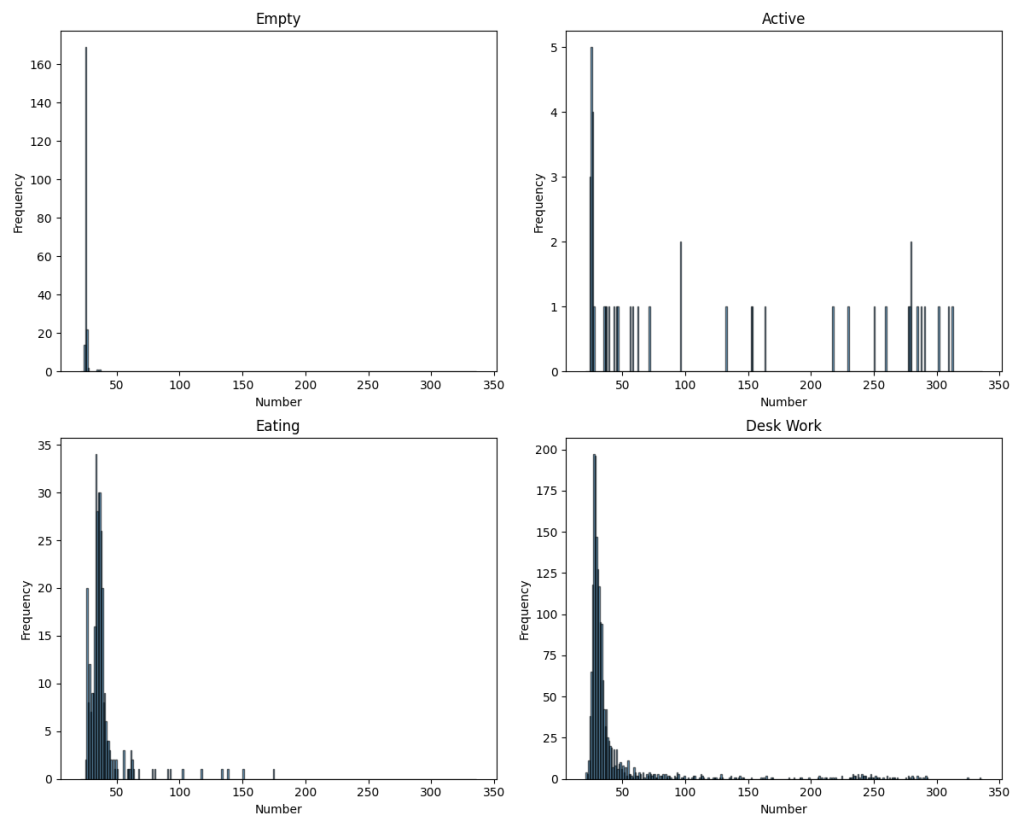

- Here are some charts showcasing the frequency of certain difference values for each of the “periods”:

- This metric seemed to provide a better idea of what threshold value would be effective.
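A NumPy sketch of MSE with a tiny worked comparison. Squaring means a few strongly changed pixels (such as a moved object) count for more than many small, spread-out changes (such as sensor noise), which is one plausible reason, not a verified one, why the MSE histograms separated the periods more cleanly.

```python
import numpy as np


def mse(a: np.ndarray, b: np.ndarray) -> float:
    """Mean of the squared per-pixel differences between two equally sized images."""
    if a.shape != b.shape:
        raise ValueError(f"shape mismatch: {a.shape} vs {b.shape}")
    diff = a.astype(np.float64) - b.astype(np.float64)
    return float(np.mean(diff ** 2))


# Two changes with the same summed absolute difference (8) but different MSE.
base = np.zeros((2, 2), dtype=np.uint8)
one_big_change = base.copy()
one_big_change[0, 0] = 8                         # one pixel changes a lot
spread_out = np.full((2, 2), 2, dtype=np.uint8)  # every pixel changes a little

print(mse(base, one_big_change), mse(base, spread_out))  # 16.0 4.0
```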

- In the end, I settled on 30 as a good MSE threshold for this trace. Here are the numbers of images that would be sent for inference (accepted) vs. deleted (removed) for each of the “periods”:

- empty: accepted 2, removed 207

- active: accepted 31, removed 13

- eating: accepted 267, removed 49

- desk_work: accepted 1008, removed 786

- This optimization has already been integrated into the server
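The thresholding step can be sketched as a streaming filter. The report does not say which frame each new image is compared against, so this sketch assumes the most recently *accepted* frame (so a slow drift eventually triggers an inference instead of slipping past frame-to-frame comparisons), and it assumes an MSE strictly greater than 30 counts as a change. The helper at the bottom only turns the reported counts into removal rates.

```python
from typing import Dict, Iterable, Iterator, Optional, Tuple

import numpy as np

MSE_THRESHOLD = 30.0  # chosen from this one sample trace; likely needs retuning elsewhere


def mse(a: np.ndarray, b: np.ndarray) -> float:
    diff = a.astype(np.float64) - b.astype(np.float64)
    return float(np.mean(diff ** 2))


def select_for_inference(
    frames: Iterable[np.ndarray], threshold: float = MSE_THRESHOLD
) -> Iterator[Tuple[int, bool]]:
    """Yield (frame_index, accepted) pairs; accepted frames go to inference."""
    reference: Optional[np.ndarray] = None  # last accepted frame (assumption)
    for i, frame in enumerate(frames):
        accepted = reference is None or mse(frame, reference) > threshold
        if accepted:
            reference = frame
        yield i, accepted


# Accepted/removed counts per period, as reported for the sample trace.
REPORTED: Dict[str, Tuple[int, int]] = {
    "empty": (2, 207),
    "active": (31, 13),
    "eating": (267, 49),
    "desk_work": (1008, 786),
}


def removed_fraction(accepted: int, removed: int) -> float:
    return removed / (accepted + removed)


total_accepted = sum(a for a, _ in REPORTED.values())
total_removed = sum(r for _, r in REPORTED.values())
```

With those counts, 1,055 of the 2,363 frames (about 44.6%) would never reach YOLO: the empty period drops about 99.0% of its frames, desk work about 43.8%, active about 29.5%, and eating about 15.5%.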


- Began working on “detection-based” optimizations

- I also began working on optimizations to decide which image should be deleted first if the server’s storage fills up.

- However, I still need to do a bit more work before I have properly presentable results.

- Additionally, the database will need changes to support removing images.
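The detection-based policy is still in progress, so the following only sketches the bookkeeping such a policy would need: a store capped at a hypothetical `capacity` that, when full, evicts the image with the lowest score, breaking ties by evicting the oldest. `BoundedImageStore` and its `priority` value are placeholders for whatever the detection-based optimization ends up producing (for example, how many objects were detected); the returned IDs are what would then be deleted from disk and from the database.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import List


@dataclass(order=True)
class _Entry:
    priority: float                      # lower = evicted sooner (hypothetical score)
    seq: int                             # insertion order, breaks ties oldest-first
    image_id: str = field(compare=False)


class BoundedImageStore:
    """Keeps at most `capacity` images; evicts the lowest-priority (then oldest) first."""

    def __init__(self, capacity: int):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.capacity = capacity
        self._heap: List[_Entry] = []
        self._counter = itertools.count()

    def add(self, image_id: str, priority: float) -> List[str]:
        """Insert an image and return the IDs evicted to stay within capacity."""
        heapq.heappush(self._heap, _Entry(priority, next(self._counter), image_id))
        evicted: List[str] = []
        while len(self._heap) > self.capacity:
            evicted.append(heapq.heappop(self._heap).image_id)
        return evicted

    def __len__(self) -> int:
        return len(self._heap)
```

Breaking ties by insertion order means that if every image receives the same score, this reduces to plain oldest-first (FIFO) eviction.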

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I believe my progress is still on schedule. I still have some work to do, as discussed below, but I believe it can be completed by the final presentation/report deadlines.

What deliverables do you hope to complete in the next week?

My primary objectives are listed below, but what I actually work on is still open to discussion.

- Finish adding authentication to the server

- I would like to add the ability to log out and delete users.

- Continue creating optimizations to bound images stored in the database

- Work with teammates to add the necessary database functionality to add this feature to the webserver.

- As a proof of concept, try to get the server running on the cloud

I have left out some features mentioned in previous reports, such as the microphone frontend, as I believe one of my teammates may take it over.

Now that you have some portions of your project built, and entering into the verification and validation phase of your project, provide a comprehensive update on what tests you have run or are planning to run. In particular, how will you analyze the anticipated measured results to verify your contribution to the project meets the engineering design requirements or the use case requirements?

Tests I have run:

Verification tests:

- My verification tests have mostly taken the form of demonstrating the state of our project to others and letting them use it. So far, this seems to have been enough to keep the project working well. I don’t think we will end up with a proper “continuous integration testing” system of any kind, as it would be overkill for a basic demonstration.

Validation tests:

- Latency tests

- I have made “sample traces” to measure how long each relevant endpoint of our webserver takes to respond. So far, all results have fallen within the guidelines from our initial report.

- Throughput tests

- As discussed in last week’s report, I have made tests that measure the throughput of the Raspberry Pis, to better understand their capabilities.

- Pixel-difference threshold tests

- As discussed in this report, I have run tests to choose threshold values for the mechanisms we will be using to reduce unnecessary work.
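A minimal sketch of the kind of harness a latency test like this could use, assuming each endpoint call is wrapped in a zero-argument callable (for example, a function that sends one HTTP request to the server). `measure_latency` and its output fields are illustrative names. It reports percentiles rather than only the mean, since a few slow requests can hide behind a good average.

```python
import statistics
import time
from typing import Callable, Dict


def measure_latency(call: Callable[[], object], n: int = 100) -> Dict[str, float]:
    """Time `n` sequential calls and summarize the latencies in milliseconds."""
    if n < 2:
        raise ValueError("need at least two samples for percentiles")
    samples_ms = []
    for _ in range(n):
        t0 = time.perf_counter()
        call()
        samples_ms.append((time.perf_counter() - t0) * 1000.0)
    # 99 cut points: index 49 is the median, index 94 the 95th percentile.
    # "inclusive" keeps every percentile within the observed min..max range.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {
        "mean_ms": statistics.fmean(samples_ms),
        "p50_ms": cuts[49],
        "p95_ms": cuts[94],
        "max_ms": max(samples_ms),
    }
```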

Note: Many of the constraints listed in our design presentation are deliberately loose, so that they remain feasible at scale or in the cloud. Since we have moved to a more local approach, our latencies are significantly shorter by comparison.

Tests I hope to run:

- Image deletion end-to-end test

- I hope to eventually test image deletion in a “realistic scenario”: move some objects around in a room, then leave, and check that the system behaves as intended, deleting images that are not relevant and keeping those with shifted objects.
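Before running that test in a real room, the same scenario could be rehearsed on synthetic frames. The sketch below fabricates a noisy “empty room”, drops a bright block into it to stand in for a moved object, and checks that only the first frame and the frame with the moved object would be kept. It assumes an MSE threshold of 30 measured against the last kept frame; the noise level, frame size, and helper names are arbitrary choices for illustration.

```python
from typing import List

import numpy as np

THRESHOLD = 30.0  # MSE threshold from the sample trace (comparison rule assumed below)


def mse(a: np.ndarray, b: np.ndarray) -> float:
    diff = a.astype(np.float64) - b.astype(np.float64)
    return float(np.mean(diff ** 2))


def decisions(frames: List[np.ndarray], threshold: float = THRESHOLD) -> List[bool]:
    """True = keep/send to inference; each frame is compared with the last kept one."""
    kept: List[bool] = []
    reference = None
    for frame in frames:
        keep = reference is None or mse(frame, reference) > threshold
        if keep:
            reference = frame
        kept.append(keep)
    return kept


def synthetic_scene(seed: int = 0) -> List[np.ndarray]:
    rng = np.random.default_rng(seed)
    background = np.full((64, 64), 100, dtype=np.int16)
    moved = background.copy()
    moved[10:30, 10:30] = 220  # stand-in for an object that was moved

    def noisy(img: np.ndarray) -> np.ndarray:
        # Small per-pixel sensor noise in [-3, 3]; values stay well inside 0..255.
        return (img + rng.integers(-3, 4, size=img.shape)).astype(np.uint8)

    # 3 empty frames, then the object moves, then 3 more frames of the new layout.
    return [noisy(background) for _ in range(3)] + [noisy(moved) for _ in range(4)]


result = decisions(synthetic_scene())
```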
