Summary

This week, our group:

- Attempted to get the NVIDIA Jetson working

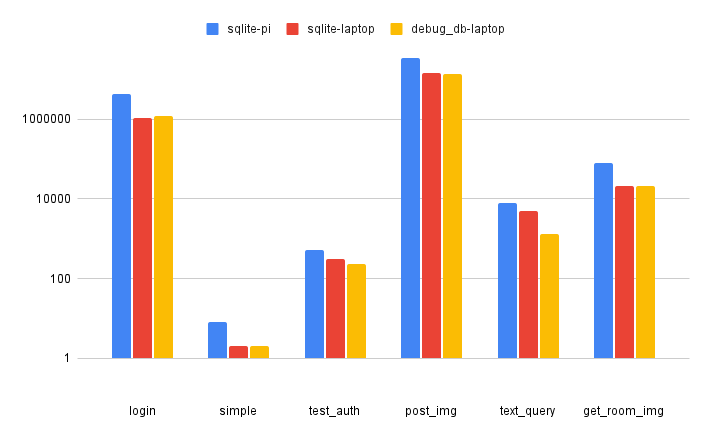

- Fully integrated the SQLite database code into the webserver

- Implemented a performance monitoring framework for the webserver

- Began testing the performance of the webserver

- Finished 3D-printing all files for the proposed RPi case

- Continued improving the ML model's performance

What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

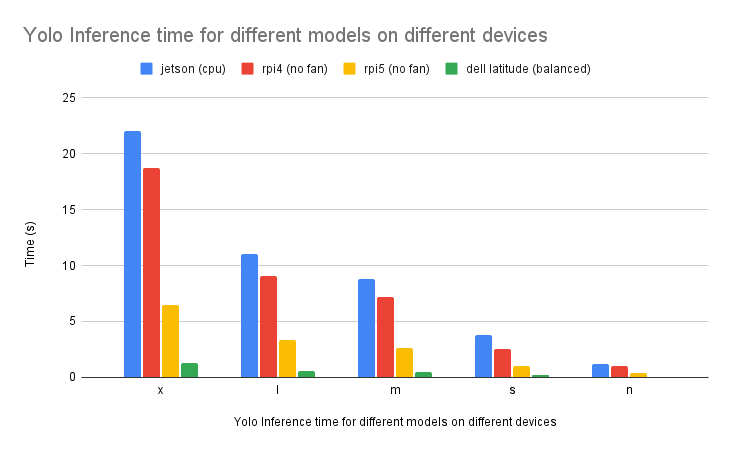

We still face a few significant risks to the project's success. The primary risk remains the performance of the ML object detection model, though we are seeing promising signs: the accuracy of our customized model is now approaching that of stock YOLO. We have also identified a new suite of image-to-text models that could be very useful, either for our usual inference tasks or for dataset creation. Our risk mitigation plan for this remains the same, though we may not need to rely on manual casing as much as we previously mentioned.

A second risk is that we have not yet implemented our microphone frontend or our cloud backend to any significant extent. If this continues, we may have to limit our scope so that we can deliver our preferred features at high quality; our group will need to discuss this.

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

We made a few partial changes to our plans this week.

First, we have essentially ruled out the Jetson as a platform for running the inference server, primarily because of difficulties getting modern software to run on its older hardware.

Second, we added performance monitoring to the system. Our original system spec focused on the functionality the server had to provide, and because monitoring is not something the end user will see, we did not include it. Adding it did, however, require slightly restructuring the webserver to improve observability.

Provide an updated schedule if changes have occurred.

No schedule changes are finalized yet, but we will need to reevaluate our priorities next week. In particular, we want to discuss building better benchmarks for the objectives we must test, as well as creating our final website.

As discussed above, there may also be a few other small initiatives we plan to invest time in.

This is also the place to put some photos of your progress or to brag about a component you got working.

Here are some graphs we were able to capture using our new benchmarking system. For more information on what they mean, see Giancarlo’s weekly status report.
