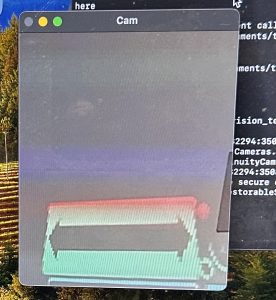

This week, I worked with Caroline on integrating the homography logic into the overall Flutter UI. This required changes to the calibration process and to how images are input. I also added error handling so that the program does not crash during use, for example when more than 4 corners are detected. A problem I am still working on is the light gradient under various lighting conditions. As the light disperses with the angle of the projector, it becomes considerably dimmer and faded. To fix this, I may have to move the overall projection closer to the projector. We also standardized the size of the projection space so that the system works reliably in a set space while remaining flexible enough to tune for other spaces.
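As a rough illustration of the corner-count error handling mentioned above, here is a minimal Python sketch. It is not our actual implementation: the names `CalibrationError` and `validate_corners` are hypothetical, and it assumes the detector returns a list of `(x, y)` pixel coordinates with y increasing downward. The idea is to reject any detection that does not have exactly four corners before the homography is computed, so the UI can ask for a recalibration instead of crashing.

```python
class CalibrationError(Exception):
    """Raised when the detected corners cannot define the projection area."""


def validate_corners(corners):
    """Return four (x, y) corners ordered TL, TR, BR, BL, or raise.

    Hypothetical helper: assumes image coordinates where y grows downward.
    """
    if len(corners) != 4:
        raise CalibrationError(
            f"expected 4 corners, detected {len(corners)}; please recalibrate"
        )
    # Split into top and bottom pairs by y, then order each pair by x.
    by_y = sorted(corners, key=lambda p: p[1])
    top = sorted(by_y[:2], key=lambda p: p[0])
    bottom = sorted(by_y[2:], key=lambda p: p[0])
    return [top[0], top[1], bottom[1], bottom[0]]
```

The caller can catch `CalibrationError` and show a message in the UI rather than letting the exception end the program. The simple sort-based ordering assumes the projection area is not rotated far from upright in the camera image.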

This coming week, I will spend much of my time completing the final report and the poster. I will also add wire management and aesthetic improvements to the setup, specifically the camera mount. As needed, I will continue tuning the calibration and the overall system display.