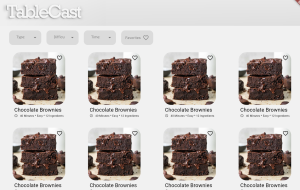

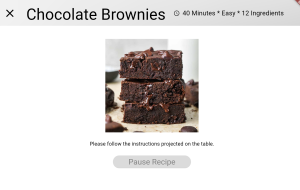

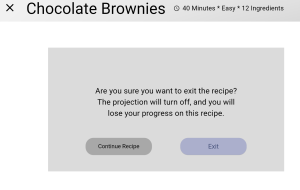

I continued to work on both the web application and the projector interface. Both components are mostly functional but need more work. For example, the web application currently runs on hard-coded data, and I am changing it so that recipe data is loaded dynamically from files on the device. I am also integrating the calibration step into the user flow. For the demo, we assumed that a saved calibration already existed, so I am now giving the user the option to start a new calibration and wait for the backend script to finish.
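As a rough illustration of loading recipe data from files instead of hard-coding it, here is a minimal sketch. It assumes each recipe is stored as its own JSON file in one directory. The function name `load_recipes`, the optional `"name"` field, and the JSON format are all hypothetical and not necessarily what the project uses.

```python
import json
from pathlib import Path


def load_recipes(recipe_dir):
    """Return a dict mapping recipe name to its parsed JSON data.

    Assumption: one recipe per *.json file inside recipe_dir.
    """
    recipes = {}
    for path in sorted(Path(recipe_dir).glob("*.json")):
        try:
            with path.open(encoding="utf-8") as f:
                data = json.load(f)
        except (OSError, json.JSONDecodeError):
            # Skip unreadable or malformed files instead of failing the page.
            continue
        # Fall back to the file name when the recipe has no "name" field.
        if isinstance(data, dict):
            name = data.get("name", path.stem)
        else:
            name = path.stem
        recipes[name] = data
    return recipes
```

Skipping malformed files keeps one bad recipe from breaking the whole list, although a real implementation would probably also log which files were skipped.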

I am on schedule.

This upcoming week, I will install the Wi-Fi card on the AGX and make sure that the network interface is set up properly. I will also try to reduce the latency of the running processes that we noticed during the demo. These are my priorities for the week, and I will work on the rest of the UI if I have time.

Now that you have some portions of your project built and are entering the verification and validation phase of your project, provide a comprehensive update on what tests you have run or are planning to run. In particular, how will you analyze the anticipated measured results to verify that your contribution to the project meets the engineering design requirements or the use case requirements?

One module that I am in charge of is the voice commands module. In the design review, we outlined the tests that I planned to run to verify that the voice commands work. One aspect is the latency of the voice commands. During the demo, I noticed a higher latency than expected, so we will run tests in which we say each command and measure how long it takes for the command to be recognized in the script. It should take only 2-3 seconds to register a command. This test is important because it will show whether the latency comes mainly from the voice command module itself or from its integration with the rest of the system. Another test checks the accuracy of the commands themselves: we will say commands and count how many are correctly identified. We will also repeat this test with people talking in the background to measure the accuracy in that environment.
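The latency and accuracy results could be summarized with something like the sketch below. It assumes each trial is recorded by hand as a tuple of the spoken command, the recognized command (or `None` if nothing was detected), and the measured latency in seconds. The function name `summarize_trials`, the record format, and the example command names are hypothetical. The 3-second threshold is the upper end of the 2-3 second target above.

```python
from statistics import mean

LATENCY_TARGET_S = 3.0  # upper end of the 2-3 second target


def summarize_trials(trials, target_s=LATENCY_TARGET_S):
    """Summarize a list of (spoken, recognized, latency_s) trials.

    recognized is None when no command was detected at all.
    """
    if not trials:
        raise ValueError("no trials recorded")
    # A trial is correct only if the recognized command matches the spoken one.
    correct = sum(1 for spoken, recognized, _ in trials if spoken == recognized)
    # Latency is only meaningful for trials where something was recognized.
    latencies = [lat for _, recognized, lat in trials if recognized is not None]
    return {
        "accuracy": correct / len(trials),
        "mean_latency_s": mean(latencies) if latencies else None,
        "max_latency_s": max(latencies) if latencies else None,
        "within_target": sum(1 for lat in latencies if lat <= target_s),
    }


# Example with made-up numbers, not measured results.
example = [
    ("next", "next", 2.1),
    ("back", "back", 2.9),
    ("pause", None, 0.0),   # missed command
    ("next", "back", 3.4),  # misrecognized command
]
print(summarize_trials(example))
```

Running the same summary once on trials recorded in a quiet room and once on trials recorded with background talking would give directly comparable accuracy numbers for the two environments.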

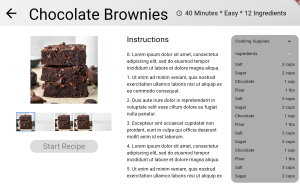

Another module that I am in charge of is the web application. I will test every possible navigation path and make sure that no errors occur as a user moves through the website. I will also test the display on different devices, both on physical hardware and in simulation, to make sure that the spacing and size of the interface components are correct on different screen sizes.

To verify the projector interface, I will make sure that all the required elements are present and function correctly together. I will also check that components that run at the same time, such as the video and the timer, work simultaneously without interfering with each other.