Progress Update:

This week I made progress on gesture recognition using MediaPipe. I had already tested MediaPipe with the web browser demo. This past week I wrote a Python script that runs the gesture recognition model on my laptop camera. The script recognized all of the gestures the pretrained MediaPipe model was trained to recognize.
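For reference, a webcam loop of this kind might look roughly like the sketch below. This is not my exact script. It assumes the `mediapipe` (Tasks API) and `opencv-python` packages and a downloaded `gesture_recognizer.task` model file. The file path, the `summarize` and `main` names, and the two-hand setting are illustrative choices. The MediaPipe and OpenCV imports live inside `main()` so the small `summarize` helper can be reused and tested without a camera.

```python
import time
from typing import List, Tuple


def summarize(result) -> List[Tuple[str, str, float]]:
    """Return (handedness, top gesture, score) for each detected hand.

    `result` is a MediaPipe GestureRecognizerResult: `handedness` and
    `gestures` each hold one list of Category objects per detected hand,
    with the highest-scoring category first.
    """
    hands = []
    for hand_cats, gesture_cats in zip(result.handedness, result.gestures):
        if not hand_cats or not gesture_cats:
            continue  # skip hands with no categories reported
        top = gesture_cats[0]
        hands.append((hand_cats[0].category_name, top.category_name, top.score))
    return hands


def main(model_path: str = "gesture_recognizer.task") -> None:
    """Run the pretrained gesture model on the default camera; press q to quit."""
    # Imported here so summarize() works without OpenCV/MediaPipe installed.
    import cv2
    import mediapipe as mp
    from mediapipe.tasks import python as mp_python
    from mediapipe.tasks.python import vision

    options = vision.GestureRecognizerOptions(
        base_options=mp_python.BaseOptions(model_asset_path=model_path),
        running_mode=vision.RunningMode.VIDEO,  # frames come with timestamps
        num_hands=2,
    )
    cap = cv2.VideoCapture(0)  # 0 = default (laptop) camera
    start, last_ts = time.monotonic(), -1
    with vision.GestureRecognizer.create_from_options(options) as recognizer:
        while cap.isOpened():
            ok, frame = cap.read()
            if not ok:
                break
            frame = cv2.flip(frame, 1)  # mirror horizontally, like a selfie view
            rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)  # OpenCV frames are BGR
            image = mp.Image(image_format=mp.ImageFormat.SRGB, data=rgb)
            # VIDEO mode needs increasing timestamps in milliseconds.
            ts = max(int((time.monotonic() - start) * 1000), last_ts + 1)
            last_ts = ts
            result = recognizer.recognize_for_video(image, ts)
            for hand, gesture, score in summarize(result):
                print(f"{hand}: {gesture} ({score:.2f})")
            cv2.imshow("gestures", frame)
            if cv2.waitKey(1) & 0xFF == ord("q"):
                break
    cap.release()
    cv2.destroyAllWindows()
```

Calling `main()` should print one line per detected hand with its top gesture label and score. The handedness label is what would separate a right-hand Open Palm from a left-hand one. MediaPipe's hand-tracking documentation describes handedness as assuming a mirrored, selfie-style image, which is why this sketch flips each frame. That assumption is worth verifying against the current docs.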

https://drive.google.com/file/d/1Xvm71s50BpO0O9d-hPm9-XWkQNBlrgQR/view?usp=share_link

The video above demonstrates MediaPipe running on my laptop. The angle of the gesture matters a great deal. For example, Thumbs Down was hard to recognize because of poor wrist position and angle.

We decided the program will need the following gestures:

- Open Palm (right hand)

- Open Palm (left hand)

- Swipe (left to right)

- Swipe (right to left)

The first two gestures are already part of the model's pretrained set. For the Swipe gestures, I learned how to access the 21 hand landmarks and their properties, such as their x, y, and z coordinates. This was initially difficult because the relevant documentation was hard to find. Since a swipe is a translation along the x-axis, I plan to detect one by calculating the change in x-coordinate over a set of frames. The sign of that change gives the swipe's direction.
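As a rough sketch of that plan, the class below keeps the last few x-coordinates of one landmark. It reports a swipe when the net change across the window exceeds a threshold. Several details are assumptions to tune:

- The tracked landmark is the wrist, which is index 0 of the 21.
- The window is 10 frames.
- The threshold is 0.3 in MediaPipe's normalized image coordinates, where x runs from 0 at the left edge to 1 at the right.

`SwipeDetector` and `wrist_x` are hypothetical names. Directions are reported in image coordinates, so they match the user's own left and right only if the frame is mirrored before recognition.

```python
from collections import deque
from typing import Deque, Optional


def wrist_x(result) -> Optional[float]:
    """x of the wrist (landmark 0) of the first detected hand, or None.

    `result.hand_landmarks` holds one list of 21 normalized landmarks per
    hand, as in MediaPipe's GestureRecognizerResult.
    """
    if not result.hand_landmarks:
        return None
    return result.hand_landmarks[0][0].x


class SwipeDetector:
    """Flags a swipe when x moves at least `min_dx` across `window` frames."""

    def __init__(self, window: int = 10, min_dx: float = 0.3) -> None:
        self.min_dx = min_dx
        self.xs: Deque[float] = deque(maxlen=window)  # oldest x drops off

    def update(self, x: Optional[float]) -> Optional[str]:
        """Feed one frame's x (None if no hand); return a swipe label or None."""
        if x is None:
            self.xs.clear()  # hand lost: start the history over
            return None
        self.xs.append(x)
        if len(self.xs) < self.xs.maxlen:
            return None  # not enough frames of history yet
        dx = self.xs[-1] - self.xs[0]  # net horizontal motion over the window
        if abs(dx) < self.min_dx:
            return None
        self.xs.clear()  # avoid reporting the same motion twice
        return "left_to_right" if dx > 0 else "right_to_left"
```

In a webcam loop, `detector.update(wrist_x(result))` would be called once per frame. Comparing only the first and last samples keeps the logic simple but ignores the path in between. A sturdier version might also check that the y-coordinate stays roughly constant, so diagonal or vertical motions are not counted as swipes.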

https://drive.google.com/file/d/15Q_YZcS0Vv8EEd6kOf7mQT8irsR37j3Y/view?usp=share_link

The video above shows the right-to-left Swipe gesture.

Schedule Status:

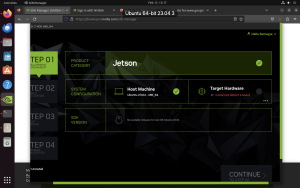

I am on schedule for gesture recognition and tracking. However, I am behind on flashing the AGX because I still have not been able to get an external PC, and communication with Cylab has been slow. I plan to talk to the professor next week to find a quick solution. I am not too concerned for now, since much of my MediaPipe testing can be done on my laptop.

Next Week Plans:

- Complete Swipe Gesture Recognition

- Research algorithms for object tracking

- Start implementing at least one algorithm for object tracking

- Get a PC for flashing the AGX, then flash it