- This week, I spent a lot of time finalizing the slides for the final project presentation, as well as helping my teammate Doreen prepare for her presentation.

- In the previous week, I finished the essential hardware components of the project, so I moved over to the software side to help Surafel implement parts of the software. The team found a new Python library called facial_recognition that seems to have much higher accuracy than our current code. Surafel worked on initial testing of the library, and I worked on adding code to check users in and save their face if they were not already in the system. I also implemented checkout and the deletion of a user's face after checkout.

- Afterwards, I worked on the CSS of the web application, cleaning it up for a smoother-looking final interface.

- I am slightly ahead of schedule on my own personal work on the hardware components, but I am now pivoting to help with software. I would like to start wrapping up the entire project within a day or two, but I am not sure it's possible because we might need 3-4 days to finalize the software. The base functionality of the project is finished, and I think most of what we are doing now is extra (such as a possible buzzer on the hardware side, or user check-in/check-out logs with manual checkout on the software side). At this point, a lot of work will have to be put in to make sure it gets done on time!

- Next week I hope to clean up the entire project, finish the final deliverables such as the video and poster, and be done with the semester (and my time at CMU!)
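As a rough illustration of the check-in/check-out flow described in this report (save a face on check-in if it is new, delete it on checkout), here is a minimal Python sketch. It assumes faces have already been turned into fixed-length numeric encodings by the recognition library and matches them by Euclidean distance; the `FaceRegistry` class, its methods, and the 0.6 threshold are hypothetical choices for illustration, not our actual web app code.

```python
import math
from itertools import count

# Assumed maximum Euclidean distance for two encodings to count as the same person.
MATCH_THRESHOLD = 0.6


class FaceRegistry:
    """Hypothetical in-memory store of checked-in users' face encodings."""

    def __init__(self, threshold=MATCH_THRESHOLD):
        self.threshold = threshold
        self._faces = {}          # user_id -> face encoding (list of floats)
        self._next_id = count(1)

    def _closest(self, encoding):
        """Return (user_id, distance) of the nearest stored face, or (None, inf)."""
        best_id, best_dist = None, math.inf
        for user_id, stored in self._faces.items():
            dist = math.dist(stored, encoding)
            if dist < best_dist:
                best_id, best_dist = user_id, dist
        return best_id, best_dist

    def check_in(self, encoding):
        """Return (user_id, is_new); save the face only if no stored face matches."""
        user_id, dist = self._closest(encoding)
        if dist <= self.threshold:
            return user_id, False
        new_id = next(self._next_id)
        self._faces[new_id] = list(encoding)
        return new_id, True

    def check_out(self, encoding):
        """Delete the matching user's face and return their id, or None if no match."""
        user_id, dist = self._closest(encoding)
        if dist > self.threshold:
            return None
        del self._faces[user_id]
        return user_id
```

Deleting the encoding at checkout keeps the stored set limited to people who currently have items on the rack, which also reduces the number of candidates a new face can be confused with.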

Team Status Report for 4/27/24

- Currently, we still have to finish polishing the web application and the facial recognition part of the project. The basic functionality of the web app (showing a camera feed and running the facial recognition system) is working pretty well. We still have to finalize features such as displaying the check-in and check-out logs, along with manual checkout (in case the system cannot detect a checked-in user). The other risk is facial recognition. In our testing over the last 2 days, the system was highly effective at distinguishing between people in a diverse group, but when the testing set contained many very similar faces, it failed to tell them apart. The new facial recognition library we are using is definitely much better than the old one, though. We will try our best to iron out these issues before the final deliverables.

- The only real change we made this week is switching to a new facial recognition library, which drastically improves the accuracy and performance of the facial recognition part of the project. This change was necessary because the old facial recognition code was not accurate enough to meet our metrics. The change did not incur any costs, except perhaps time.

- There is no change to our schedule at this time (and there can’t really be because it is the last week).

UNIT TESTING:

Item Stand Integrity Tests:

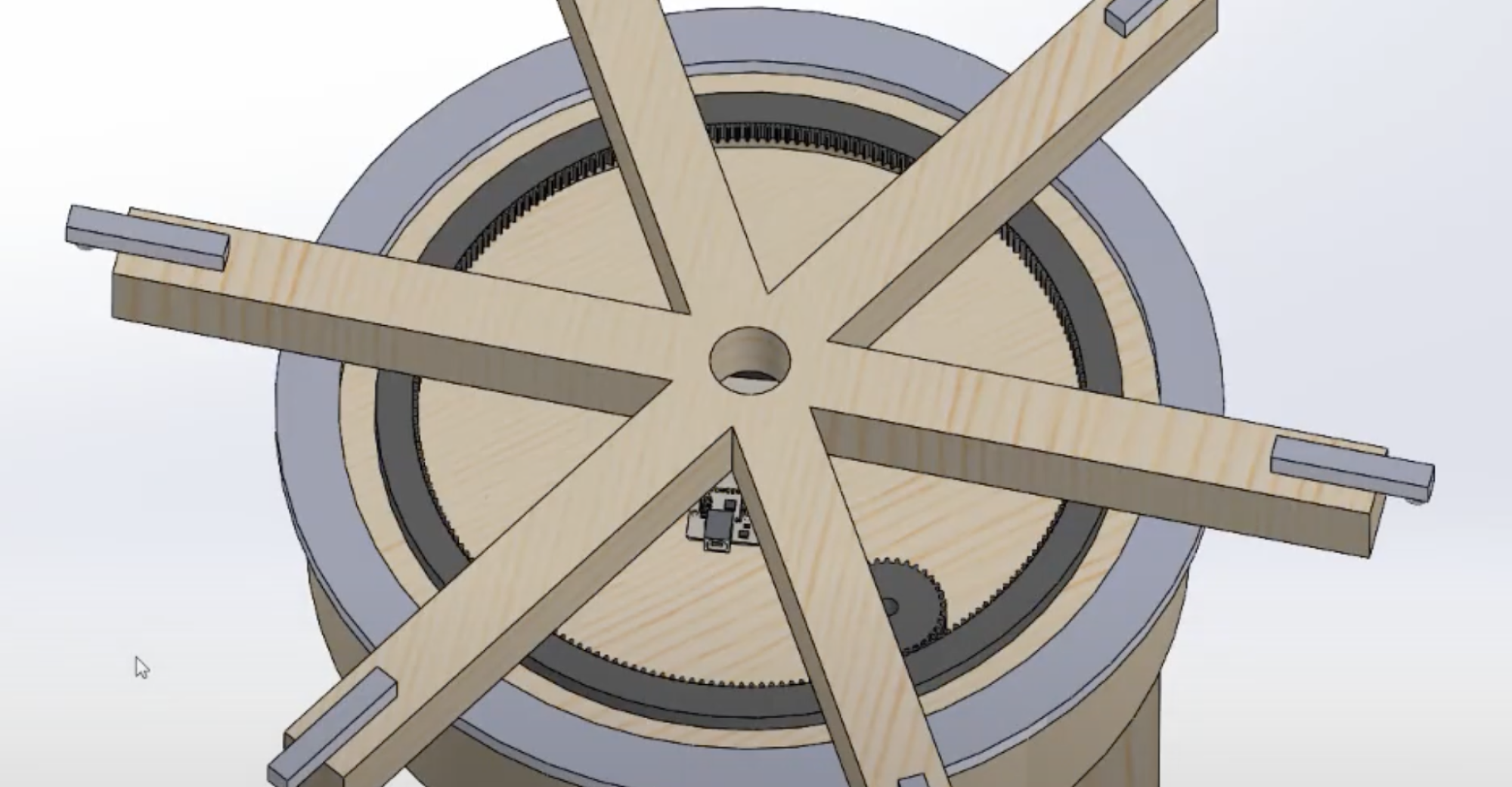

- Hook Robustness: Placing 20 pounds on each of the 6 hooks at a time and making one full rotation

- Rack Imbalance: Placing 60 pounds on 3 hooks on one side of the rack and making a full rotation

- Max Weight: Gradually placing more and more weight on the rotating rack until the maximum weight of 120 pounds is reached

- RESULTS: From these tests, we determined that our item stand was robust enough for our use cases. Although some of the wood and electronic components flexed (as expected), the stand still held up well. The hooks handled repeated deposit and removal of items, the rack did not tip over from imbalance, and even with the maximum weight of 120 pounds, the rack rotated continuously.

Integration Tests:

- Item Placement/Removal: Placing and removing items from load cells and measuring the time it takes for the web app to receive information about it.

- User Position Finding: Checking users in and out and measuring the time it takes for the rack to rotate to the target position.

- RESULTS: We found that placing and removing items on the item stand was detected and propagated throughout the system very quickly, well within our design requirement, so no design changes were needed there. On the other hand, when we tested the rack's ability to present users with a new position or their check-in position, we found that the time it took far exceeded our design requirement of 1 second. We had not accounted for the significant time it takes the motor to rotate safely to the target location, and thus adjusted this design requirement to 7 seconds in the final presentation.
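A timing harness along these lines is one way to run the two integration tests above and compare the results against the requirements (1 second for item detection, and the revised 7 seconds for rotation). It is a Python sketch: `trigger` and `wait_for_result` are hypothetical hooks into the system, not functions from our code.

```python
import time

# Design requirements from this report, in seconds.
ITEM_DETECTION_LIMIT_S = 1.0   # item placed/removed -> web app notified
ROTATION_LIMIT_S = 7.0         # check-in/out -> rack at target position (revised)


def measure_latency(trigger, wait_for_result):
    """Seconds elapsed from calling trigger() until wait_for_result() returns."""
    start = time.perf_counter()
    trigger()            # e.g. place an item, or check a user in
    wait_for_result()    # e.g. block until the web app or rack reports back
    return time.perf_counter() - start


def summarize(samples_s, limit_s):
    """Return (worst-case latency, whether every sample met the limit)."""
    worst = max(samples_s)
    return worst, worst <= limit_s
```

Reporting the worst case rather than the average matches how a user experiences the requirement: one slow rotation is noticeable even if most are fast.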

Facial Recognition Tests:

- Distance Test: Standing at various distances from the camera and checking whether the facial recognition system starts recognizing faces.

- Face Recognition Time Test: Once close enough to the camera, timing how long it takes for the face to be identified as new or already in the system.

- Accuracy Test: Checking in and checking out various faces while measuring whether the system accurately maps users to their stored faces.

- RESULTS: Spoiler: we switched to a new facial recognition library, which was a big improvement over the old one. That said, our old algorithm with the SVM classifier was adequate at recognizing people at the required distance of 0.5 meters and within the required time of 5 seconds. Accuracy, though, took a hit. On very diverse facial datasets, our old model reached 95% accuracy during pure software testing. While this is good on paper, our integration tests found that in real life it was simply wrong a significant fraction of the time, sometimes up to 20%. Based on this testing data, we decided to switch to a new model using the facial_recognition Python library, which reportedly has a 99% accuracy rate. We recently conducted extensive testing and found that its accuracy rate was well above 90-95% on diverse facial data. It still has some issues when everyone checked into the system looks very similar, but we believe this might be unavoidable, and thus we want to build in extra safeguards such as manual checkout in our web application (still a work in progress).
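One possible shape for a safeguard against look-alike faces, sketched in Python under assumed thresholds: accept a match only if it is close enough and clearly closer than the runner-up, and otherwise return no match so the web app can fall back to manual checkout. The `identify` function, `MATCH_THRESHOLD`, and `MIN_MARGIN` are hypothetical, not part of our code or of the facial recognition library.

```python
import math

# Hypothetical thresholds: a match must be within MATCH_THRESHOLD, and it must
# beat the second-closest stored face by at least MIN_MARGIN to be trusted.
MATCH_THRESHOLD = 0.6
MIN_MARGIN = 0.1


def identify(encoding, stored):
    """Return the matching user id, or None if there is no clear match.

    `stored` maps user ids to face encodings (sequences of floats). A None
    result is where the web app would fall back to manual checkout.
    """
    ranked = sorted(
        ((math.dist(enc, encoding), uid) for uid, enc in stored.items()),
        key=lambda pair: pair[0],
    )
    if not ranked or ranked[0][0] > MATCH_THRESHOLD:
        return None          # nobody is close enough
    if len(ranked) > 1 and ranked[1][0] - ranked[0][0] < MIN_MARGIN:
        return None          # two stored faces are almost equally close
    return ranked[0][1]
```

The trade-off is that a larger margin sends more users to manual checkout but makes it less likely that one person receives another person's items.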

Doreen’s Status Report for 4/27/24

- This week I mainly worked on the final presentation and end-to-end testing. I worked with Surafel to complete speed and distance tests to ensure that only users within 0.5 meters of the camera would be recognized and that they would be recognized within 5 seconds. This involved running various tests at different distances from the camera and fine-tuning the facial recognition algorithm whenever results were unexpected. To test the entire system, I asked several volunteers to check items in and out on the rack and ensured that the rack successfully displayed their items upon checkout. Lastly, I prepared for the final presentation, since I would be the speaker. I spent some time practicing delivering the presentation, making sure I was well informed about all components of our project and that the design trade-offs and testing procedures were clearly explained.

- My progress is on schedule as I have completed all the parts of the project initially assigned to me, including unit and integration testing.

- Next week, I hope to work with the other members of the team to wrap up the project. I will mainly work on completing the facial recognition system and the web application. If there is time, I will also work on implementing the bonus feature, the buzzer alert, along with tests to ensure that this feature works as intended with the rest of the system.
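The reports do not say how the 0.5-meter limit in the distance tests is enforced. One common approach, sketched here in Python purely as an assumption, estimates distance from the width of the detected face box using the pinhole-camera relation distance = focal length × real width / pixel width. The average face width and focal length are placeholder values that would need calibration for the actual camera, and all function names are hypothetical.

```python
# Placeholder values; a real setup would calibrate FOCAL_LENGTH_PX by
# photographing a face at a known distance (see calibrate_focal_length).
AVERAGE_FACE_WIDTH_M = 0.15
FOCAL_LENGTH_PX = 600.0
MAX_DISTANCE_M = 0.5


def estimate_distance_m(face_width_px, focal_length_px=FOCAL_LENGTH_PX,
                        face_width_m=AVERAGE_FACE_WIDTH_M):
    """Pinhole-camera estimate: distance = focal_length * real_width / pixel_width."""
    if face_width_px <= 0:
        raise ValueError("face width in pixels must be positive")
    return focal_length_px * face_width_m / face_width_px


def in_range(face_width_px):
    """True if the detected face is close enough to be recognized."""
    return estimate_distance_m(face_width_px) <= MAX_DISTANCE_M


def calibrate_focal_length(face_width_px, known_distance_m,
                           face_width_m=AVERAGE_FACE_WIDTH_M):
    """Solve the same relation for focal length from one reference measurement."""
    return face_width_px * known_distance_m / face_width_m
```

With these placeholder numbers, a face box wider than 180 pixels counts as within 0.5 meters. Because real faces vary in width, the estimate is approximate, which is one reason a distance test with real people is still needed.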

Surafel’s Status Report for 4/27/24

- This week I worked with my teammates to finalize the software components and testing for the final presentation and beyond. We found a new face recognition library that has much higher accuracy with some smaller trade-offs, and it is great for what we need. On top of the new face recognition library, I worked with Ryan to get a large portion of the web app done. While Ryan worked on all of the CSS, I worked on the backend and client-side JS code for the different functionalities of our web app (logs, allowing for transitions, manual checkout, and User model saving). For testing, I worked with Doreen to get the final software tests done for the final presentation (I also obtained testing data with the new face recognition library, which wasn't available during the presentation but is needed for the final deliverables later on).

- I am currently on schedule and on track to finish with the rest of the team.

- Next week I plan on working extensively with both Ryan and Doreen to get the final deliverables done and ready for the final showcase!

Doreen’s Status Report for 4/20/24

- These past 2 weeks, I mainly focused on testing. This involved testing the integrity of the item stand, determining whether our system met our timing requirements, and doing end-to-end testing with the entire system (including the item stand and facial recognition). For the item stand integrity tests, I wanted to ensure that the stand could withstand a large amount of weight. Each hook should be able to hold up to 20 pounds, and the Nema 34 motor should be able to rotate the maximum expected weight of 120 pounds. In addition, I tested whether our system could quickly recognize users and rotate to a specific user's position within 5 seconds. I worked with my team on adjusting the motor speed and acceleration, as well as looking more into the blocking behavior of our wireless transmission. We ensured that the motor rotated at a speed that is safe for users and that the entire check-in and check-out process happened relatively quickly. I also helped my team members test the facial recognition system, helping to develop new approaches and implementations to bring the accuracy closer to our target of 95%. Other than testing, I worked on adding code to detect if an attacker attempts to steal user belongings from the item stand. This involved introducing a buzzer and writing code to alert users if anything is removed from the stand outside of a check-out process. The buzzer implementation does not work yet, but I hope to test it further in the coming weeks.

- My progress is slightly behind. This week, after we introduced the buzzer to our system, the system unfortunately broke. As a result, we had to spend time determining which component led to the failure. With my team members, I determined that the wireless transceivers were not working, so we had to get new ones. The additional time spent debugging this issue and waiting for new parts delayed our end-to-end testing, leaving us less time to tune our algorithms. I plan to do more testing next week to prepare for the final demo, ensuring that our facial recognition system can accurately detect new faces and that our item stand can correctly find good positions for users to place their items.

- I plan to spend some time next week to further test the system and try to satisfy any design requirements that were not met. This involves doing more end-to-end testing and improving the speed at which users can complete the check-in and check-out processes. I also plan to re-introduce the buzzer code so that we can detect if attackers have stolen items from the stand.

- As I’ve debugged the project, I found it necessary to learn more about facial recognition. I have had to look into the differences between SVM and Euclidean distance classifiers, and I’ve learned about ways to normalize faces. In terms of hardware, I’ve had the opportunity to learn how to calibrate load cells and use wireless transmission to communicate between two Arduinos. I have also written more Arduino code, which improved my skills in this area. In terms of debugging, I have learned to unit test individual components to locate which parts of the system led to a failure. I have also relied on online tutorials and forums to learn new approaches to solving problems with both the hardware and software components of our system, like the load cells, motor, and facial recognition.
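The buzzer logic described in this report (alert if anything is removed from the stand while no checkout is in progress) can be sketched as a small state machine. On the real stand this would run on the Arduino in C++; it is shown in Python to match the other sketches here, and the `TheftMonitor` class, its methods, and the 0.5-pound noise threshold are hypothetical.

```python
# Hypothetical tuning value: ignore weight drops smaller than this
# (load cell noise, an item swaying on its hook).
REMOVAL_THRESHOLD_LB = 0.5


class TheftMonitor:
    """Flags weight removed from a hook while no checkout is in progress."""

    def __init__(self, num_hooks=6):
        self.baseline = [0.0] * num_hooks   # last accepted weight per hook
        self.checkout_active = False

    def record_checkin(self, current_weights):
        # A legitimate check-in: the new weights become the expected state.
        self.baseline = list(current_weights)

    def start_checkout(self):
        self.checkout_active = True

    def finish_checkout(self, current_weights):
        # The user's items are gone; accept the lighter weights as the baseline.
        self.baseline = list(current_weights)
        self.checkout_active = False

    def should_alarm(self, current_weights):
        """True if any hook lost weight outside of a checkout."""
        if self.checkout_active:
            return False
        return any(base - now > REMOVAL_THRESHOLD_LB
                   for base, now in zip(self.baseline, current_weights))
```

The main loop would call `should_alarm` on every load cell reading and sound the buzzer and red LED when it returns True.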

Team Status Report for 4/20/24

- There are a couple of significant risks that could jeopardize the success of the project. One risk is the stability of our hardware components. Through extended testing, we discovered that our NRF24L01 wireless transceivers were not reliable 100% of the time. Most of the time, transmission from the web app side of the system to the hardware side worked, but at other times the signal did not go through, which is definitely not ideal when the system relies on the transceivers as the bridge between our 2 major subsystems.

- As a contingency plan, we purchased extra NRF24L01 transceivers that can be swapped in if something goes wrong with the system. As a last resort, our web app keeps track of item stand transactions, which allows the customer to easily locate user items (essentially a normal coat check system).

- The second risk is facial recognition. This is also another very important component to our system. Without very high accuracy facial recognition, the system could prove to be frustrating to users who may not be able to retrieve their items easily. It is also a very hard thing to perfect and there is not much time left.

- As a contingency plan for the facial recognition system, we can allow the user to enter a name or identifier in place of scanning their face. Any other kind of identification method could work.

- Through our end-to-end testing, we discovered that some of our design requirements were not realistic. For example, we initially required that once a user’s face was matched, the stand should display the user’s position on the rack with an LED within 1 second. This did not account for the time it would take for the motor to rotate from its current position to the user’s position after they had checked in. Therefore, we changed our requirement to 7 seconds to allow the motor to rotate to the target position.

- Overall, there is not much change to the schedule.

Ryan’s Status Report for 4/20/24

- The past 2 weeks, I have been finalizing the hardware for the item stand and working on the final presentation. I have also been conducting some end to end testing.

- One main challenge I faced was our system breaking after we added a new buzzer. We decided to add a new feature by adding a buzzer and writing code that would make the buzzer sound and our red LED flash when an unauthorized change of weight was detected. Upon adding this functionality, though, our wireless transceivers stopped working, essentially halting the functionality and progress of our project. Doreen and I spent several long days attempting to fix this problem and eventually managed to restore the item stand to a previous state. Unfortunately, we do not believe we have the time to implement this buzzer feature without risking breaking our system again.

- This week, I worked on the final presentation and running some testing on the hardware. I conducted some item stand integrity tests, which consisted of adding weights to each of the load cells, adding weights on the item stand to make it imbalanced, and adding many weights to test the max weight bearing capacity of the item stand.

- Currently, I believe I am right on schedule. There were some hiccups in the past 2 weeks but I believe the preallocated slack time made up for that. I plan to continue testing, but start to focus my work on helping improve the software portion of the project.

- Next week, I hope to finish testing and wrapping up the project, as well as start on the final report.

WHAT I HAVE LEARNED THIS SEMESTER:

- This semester, I learned a lot about every aspect of the project I am doing, especially on the hardware end. Even in the construction of the item stand, I learned how to use a jigsaw, hammer and chisel, a sander, various drills, and other wood shop tools. It was also my first time assembling a large wood item and spray painting. I learned most of these things through online searching and lessons provided by some wood shop employees.

- On the electronics side, I learned a lot about the various components that went into the system. I learned about load cells, their calibrations and how they measure, I learned about how to use an Arduino microcontroller, I learned how to use wireless transceivers, I learned how to choose the right stepper motor for my project and which components are enough to power it, and more. Most of the knowledge I got to use these components came from the many tutorials I found online.

- Lastly, I dabbled in a bit of software. Before this class, the last time I touched Arduino code was in 18-100, which I took in my first semester at CMU (talk about full circle). I also spent a lot of time with various Arduino libraries, which I learned about through their documentation and other online resources. I learned a bit about how facial recognition works and what algorithms go into it from talking to Surafel.

Surafel’s Status Report for 4/20/24

- This week, I focused on getting the web app to communicate with the project’s other components and on testing the facial recognition system to see whether it meets our design requirements. Once I got facial recognition working in the web app, I added the code that allows it to communicate with the rack and complete the process. Some issues with the wireless transceivers postponed testing of the web app, but that testing will happen next week. On top of testing the design requirements, I compared the SVM classifier implementation we had previously against the current Euclidean distance implementation to see which performed better. Testing shows that the SVM classifier produces higher accuracy, so I want to put it back into the main recognition implementation.

- With the issues around the transceivers, Carnival, and increased coursework in other classes I have fallen behind, but plan to use the upcoming week to get fully back on track.

- Next week I want to make sure the web app works well with the rest of the project and iron out any bugs that may come about from that, and fully complete the testing for the facial recognition system. I also want to reintroduce the SVM classifier back into the recognition system, which produces higher accuracies as seen from testing.

- My part of the project involved facial recognition, so I had to familiarize myself with not only OpenCV but with computer vision as a whole. These topics and technologies were completely new to me coming into this project so I had to learn them to implement this part of the project. To learn these new tools and technologies, I mostly read articles and watched videos that described what was going on under the hood of all of the OpenCV methods I used, and I looked at simple examples of what I wanted to do with the recognition system and used that knowledge to help implement the facial recognition system.

Team Status Report for 4/6/2024

RISKS AND MITIGATION

After testing most of our components and the entire system this week, we identified 2 big risks that could jeopardize the success of the project. One risk is wireless communication. In our system, we use NRF24L01 wireless transceivers to communicate wirelessly between our facial recognition system and the item stand. Sometimes the communication is inconsistent: data can be sent from one transceiver but not received by the other. This may be because we are toggling both transceivers between receive and transmit states. We are planning on testing this more next week. Currently, we insert a long delay between toggling the receive and transmit states so that the component has enough time to adapt.
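Besides fixed delays, a common mitigation for dropped messages is an explicit acknowledgment with a timeout and a bounded number of retries. Below is a Python sketch of that pattern; `send` and `receive_ack` are hypothetical callables standing in for whichever side of the link does the sending, and the retry count and timeouts are assumed values, not ones we have measured.

```python
import time


def send_with_ack(send, receive_ack, message, retries=3, ack_timeout_s=0.5,
                  poll_interval_s=0.01):
    """Send `message` and wait for an acknowledgment, retrying on timeout.

    `send(message)` transmits; `receive_ack()` must not block and returns True
    once the other side has confirmed receipt. Returns the attempt number that
    succeeded, or raises TimeoutError after `retries` attempts.
    """
    for attempt in range(1, retries + 1):
        send(message)
        deadline = time.monotonic() + ack_timeout_s
        while time.monotonic() < deadline:
            if receive_ack():
                return attempt
            time.sleep(poll_interval_s)   # poll instead of blocking forever
    raise TimeoutError(f"no acknowledgment after {retries} attempts")
```

On the web app side, opening the port with pyserial's `timeout` argument (for example `serial.Serial(port, 9600, timeout=0.1)`) makes `readline()` return after the timeout instead of blocking indefinitely, which is what a non-blocking `receive_ack` needs. The receiver should also tolerate duplicates, since a lost acknowledgment causes the same message to be sent twice.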

The second risk is the accuracy of the facial recognition system. The facial recognition system is not as accurate as we would like at this moment in time. This is a big deal in our system because inaccurate face detection could result in the wrong user receiving their items. We plan to continue testing and refining the algorithm, especially since the team is wrapping up on the hardware component of the project.

DESIGN CHANGES

From the stand integrity part of our design requirements, we stated that each load cell should be able to hold 25 pounds. However, we have realized that the load cells we purchased can only detect up to 22 pounds. Additionally, when doing end-to-end testing this past week with items of various weights, the heaviest item placed on the rack was only 10-15 pounds. As a result, we are decreasing the maximum weight for each load cell from 25 pounds to 20 pounds. This means that the maximum total weight that can ever be on the item stand at once is 120 pounds (6 hooks × 20 pounds). This modification does not incur any costs; it is simply more realistic given the weights of the items we expect users to place on the rack. For the other design requirements (detecting that an item has been added or removed within 1 second, 95% accuracy for facial recognition, etc.), we will do further testing and make the necessary modifications to attempt to satisfy them.
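The revised limits reduce to simple arithmetic: 6 hooks at 20 pounds each gives 120 pounds, and 20 pounds stays under the 22-pound load cell range. Below is a Python sketch of a check that a planned test loading stays within them; `check_loads` and the constant names are hypothetical.

```python
# Limits from the revised stand-integrity requirement.
NUM_HOOKS = 6
MAX_HOOK_LOAD_LB = 20
MAX_TOTAL_LOAD_LB = NUM_HOOKS * MAX_HOOK_LOAD_LB   # 120 lb
LOAD_CELL_CAPACITY_LB = 22                         # range of the purchased load cells

# The new per-hook limit must fit within what a load cell can measure.
assert MAX_HOOK_LOAD_LB <= LOAD_CELL_CAPACITY_LB


def check_loads(hook_loads_lb):
    """Return a list of problems with a per-hook loading plan (empty if OK)."""
    problems = []
    if len(hook_loads_lb) != NUM_HOOKS:
        problems.append(f"expected {NUM_HOOKS} hooks, got {len(hook_loads_lb)}")
    for i, load in enumerate(hook_loads_lb):
        if load > MAX_HOOK_LOAD_LB:
            problems.append(f"hook {i} carries {load} lb (> {MAX_HOOK_LOAD_LB} lb)")
    total = sum(hook_loads_lb)
    if total > MAX_TOTAL_LOAD_LB:
        problems.append(f"total {total} lb exceeds {MAX_TOTAL_LOAD_LB} lb")
    return problems
```

For example, the imbalance test (60 pounds on 3 hooks on one side) passes this check, while the original 25-pound requirement would fail it.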

SCHEDULE

The schedule remains the same. However, we are using some of our slack time to fix unforeseen issues and ensure that our use case and design requirements are met. Apart from these minor modifications, all members have completed their required tasks, and what remains is further testing.

VERIFICATION/VALIDATION

As a team, we plan to combine all the components into one system before running our tests. For facial recognition, we mostly want to implement the tests we described in the design proposal. We will test with users standing at various distances from the camera, with the ideal result being that users can only interact with the system within 0.5 meters. We also want to maintain accuracy, so we plan to test our facial recognition accuracy with at least 20 different faces, with an ideal result of at least 95% accuracy. To test the integration between our hardware and software components, we plan to run tests that model real-life scenarios, in addition to the specific tests we listed in our design proposal. For example, we can have several people check in and out of the system in various orders to determine whether the system accurately keeps track of user belongings. For the item stand, we will use various weights and check whether the item stand is damaged or can still rotate. We will also introduce weight imbalance by putting a very heavy weight on one side and rotating the item stand. Lastly, we want to time how long the process of checking in or checking out takes. We want to ensure that the motor can rotate to the correct position and that updates between the facial recognition system and the item stand do not take long. This will mean modifying our code to shorten certain delays or increasing the acceleration/speed of the motor.
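For the accuracy test, the pass/fail criterion (at least 20 different faces, at least 95% accuracy) can be computed directly from the recorded trials. A minimal Python sketch, assuming each trial is logged as an (expected user, predicted user) pair; the function names are hypothetical.

```python
TARGET_ACCURACY = 0.95
MIN_FACES = 20


def accuracy(trials):
    """Fraction of (expected_user, predicted_user) pairs that match."""
    if not trials:
        raise ValueError("no trials recorded")
    correct = sum(1 for expected, predicted in trials if expected == predicted)
    return correct / len(trials)


def meets_requirement(trials):
    """True if at least MIN_FACES distinct people were tested and accuracy >= target."""
    distinct_faces = len({expected for expected, _ in trials})
    return distinct_faces >= MIN_FACES and accuracy(trials) >= TARGET_ACCURACY
```

With exactly 20 trials, a single misidentification already brings accuracy down to the 95% threshold, so running several check-in/check-out cycles per person gives a more reliable estimate.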

Doreen’s Status Report for 4/6/24

- This week, I mainly worked on preparing for the interim demo. This involved doing some end-to-end testing with the hardware components and the facial recognition system. There were issues with wireless transmission between the Python code for the facial recognition and the Arduino code, so I worked with my team members to fix those issues. We realized that the readline function we used would block on many trials, so we had to deduce where the problem was and how to fix it. We considered various solutions like flushing the input and output buffers, but ultimately realized we had to add delays in various places in our programs to ensure that the subsystems stayed in sync. In addition to this integration work, I tested the Nema 34 motor and helped write code to control the motor during a check-in or check-out process. This involved spending time in the TechSpark wood shop to carve a hole in the rack for the motor and mounting the motor sturdily to the rack using brackets and nails. Additionally, I worked on code to control the motor, allowing it to rotate to a user’s position on the rack.

- My progress is slightly behind schedule. I have tested various components of our system, including the load cells, motors, and wireless transmission. However, the complete system has not yet been thoroughly tested as a whole with people outside of our group. In addition, the LEDs that indicate to users whether they have successfully placed their items on the rack have not been permanently installed, so a bit more time in the wood shop is required. To catch up, I will need to add the LEDs and do further testing with volunteers to ensure that the system works as a whole.

- Next week, I will work on adding the LEDs to the rack. I will also work on doing end-to-end testing. Further details are provided above.

- Although our system works mostly as intended, I would like to further optimize the delays in the check-in and check-out process. The delays added for transmitting information mean that our timing requirement (taking less than 5 seconds for a user to be recognized) is not met. As a result, I would like to find the smallest delays that allow message transmission without blocking behavior. Secondly, I would like to test whether each hook on the rack can withstand the maximum weight (20-25 pounds) previously set in our use case requirements. This will involve placing 20-pound backpacks on our rack and seeing whether the motor can successfully rotate that much weight. Similarly, I would like to ensure that even with weight imbalances, the motor can successfully rotate the items on the rack. I will do this by placing a large weight (20 pounds) on one side and nothing on the other. I will also check in 6 times to fill the entire rack, then remove all items on one side, to ensure that the motor successfully rotates the weight and that the threads on the gears are not affected.
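Finding the smallest delay that still avoids blocking is a search problem. If we assume that any delay longer than a working one also works, a binary search over delay values needs only about 10 trial runs for a 0-1000 ms range. A Python sketch; `transmits_ok` is a hypothetical trial function, and because the failures are intermittent, each trial should repeat many exchanges and the result should get a safety margin.

```python
def smallest_working_delay(transmits_ok, low_ms=0, high_ms=1000):
    """Binary-search the smallest delay (ms) for which transmits_ok(delay) is True.

    Assumes monotonicity: if a delay works, every longer delay also works.
    transmits_ok is a hypothetical trial that runs several check-in/check-out
    exchanges at the given delay and reports whether none of them blocked.
    """
    if not transmits_ok(high_ms):
        raise ValueError("upper bound does not work; raise high_ms")
    while low_ms < high_ms:
        mid = (low_ms + high_ms) // 2
        if transmits_ok(mid):
            high_ms = mid        # mid works: the answer is mid or smaller
        else:
            low_ms = mid + 1     # mid fails: the answer is larger than mid
    return high_ms
```

If the trial results are noisy (a delay that usually works occasionally fails), the monotonicity assumption breaks, so it is safer to confirm the returned value with a longer run before adopting it.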