Team Status Report

What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

We believe that mounting the Jetson components could cause some unforeseen issues. We need to design hardware to hold the Jetson camera, and we may need to create another mount for the Jetson computer. The challenge is to create a mounting system that is stable yet does not inhibit access to any part of the computer or the camera. In addition, the computer mount must not cause the Jetson to overheat; this constrains the mount design, since the heatsink must stay exposed to the air and have good airflow.

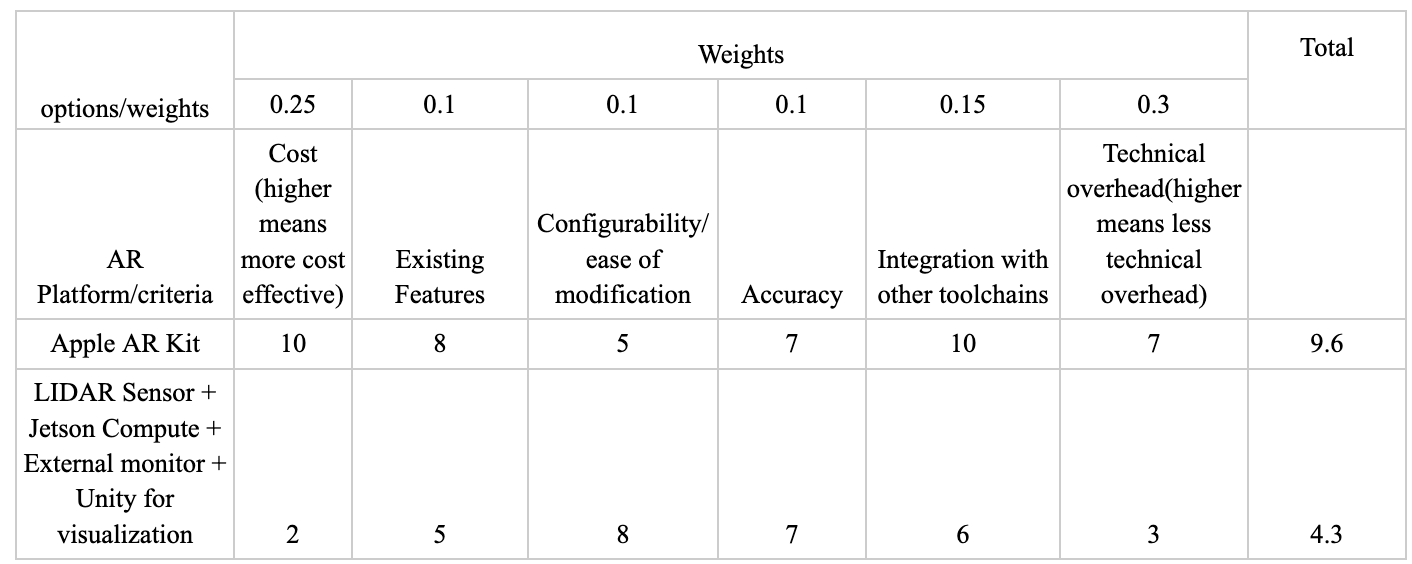

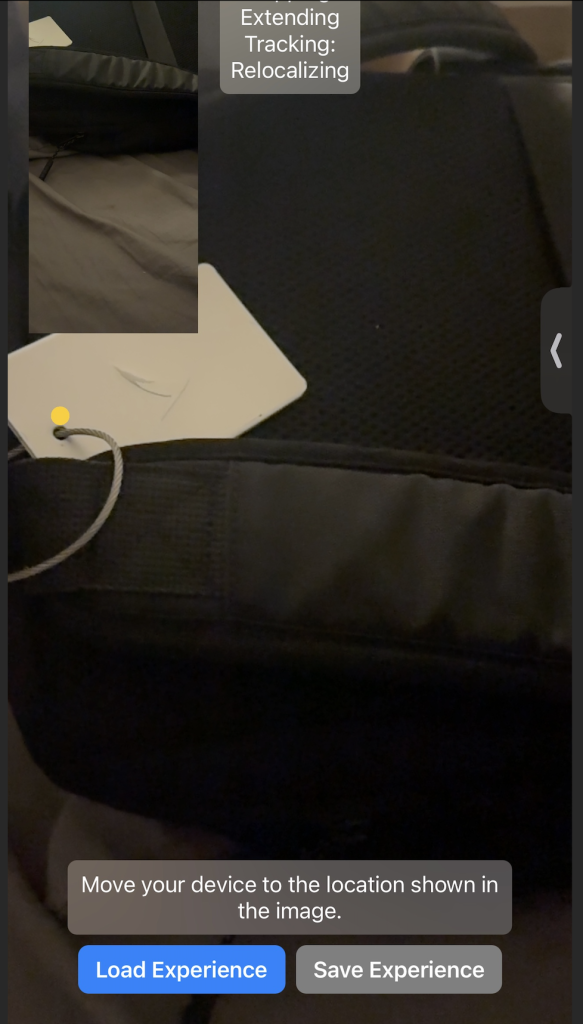

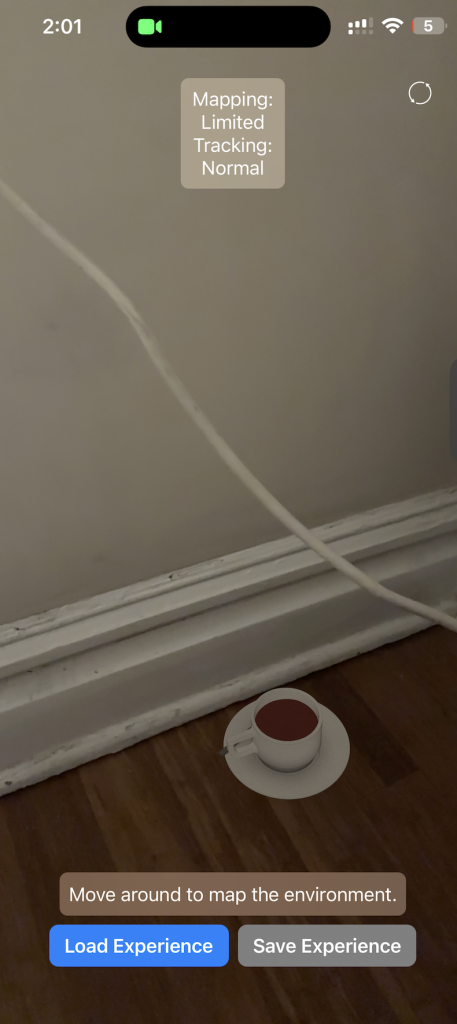

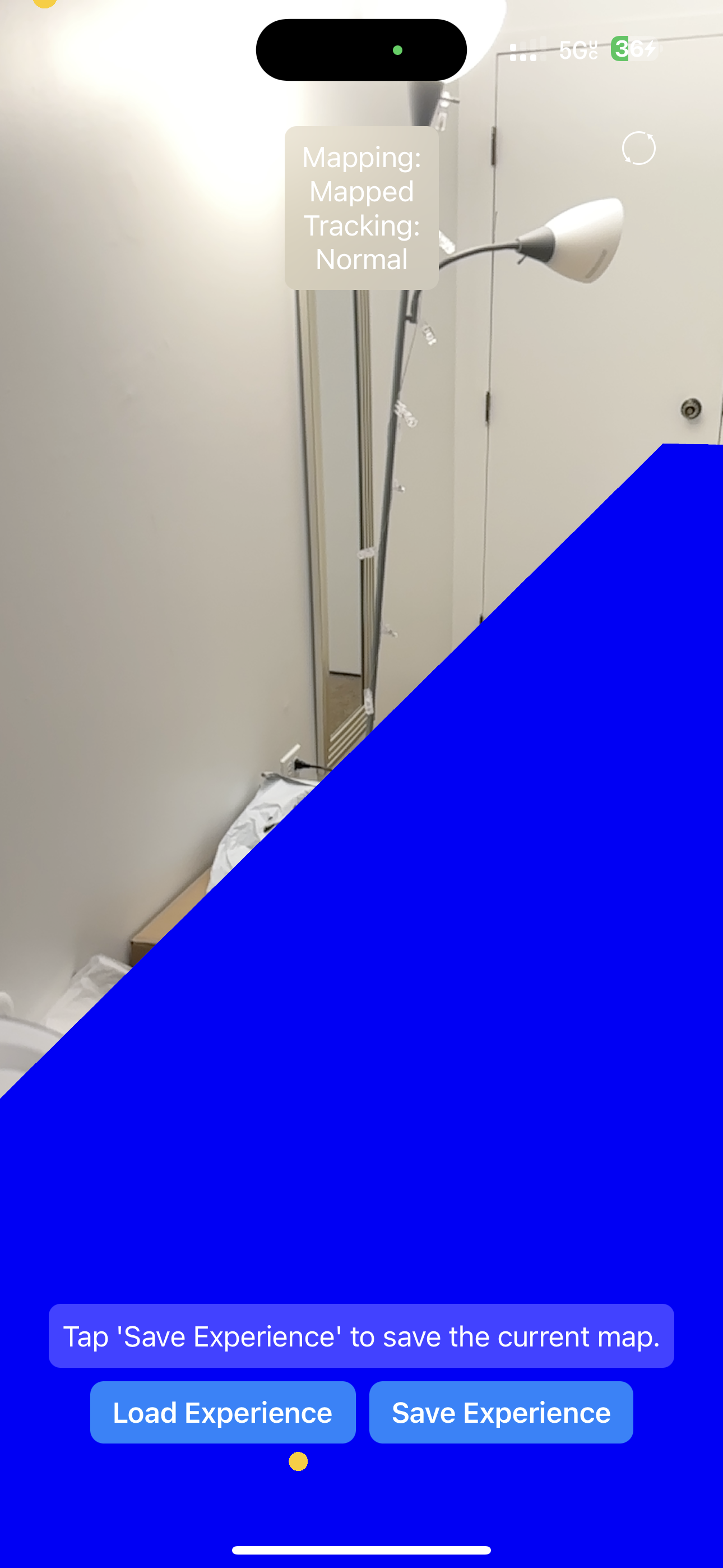

Our proofs of concept have covered a good portion of the feature set that we need, but a key feature that has not been accomplished yet is projecting a texture onto a surface and modifying that texture. We have made progress with mapping, and the next step this coming week is to work on projecting a texture onto the floor plane and to explore how we can modify the texture as we move. To mitigate the risk posed by the complexity of this task, we have researched several candidate approaches that we can experiment with to find the one that best fits our needs. Apple provides two AR APIs, SceneKit and RealityKit, and both support projecting textures. We could create a shader, modify the texture in real time, or create another scene node to occlude the existing texture on the plane. This will be a key action item going forward.
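To make the "modify the texture in real time" option more concrete, here is a minimal sketch of one way the data behind such a texture could be tracked. It is an illustration only, not our implementation: it is written in Python rather than Swift, it does not call SceneKit or RealityKit, and every name in it (`make_mask`, `mark_cleaned`, `coverage`, `texels_per_m`) is hypothetical. It assumes the floor plane is a rectangle measured in meters and that the vacuum's position is already known in the plane's local coordinates.

```python
# Hypothetical sketch: a boolean "coverage mask" for a rectangular floor plane.
# Each cell (texel) records whether the vacuum has passed over that patch.
# In an AR app, a mask like this could drive the texture shown on the plane.

def make_mask(width_m, depth_m, texels_per_m):
    """Create an all-False mask covering a width_m x depth_m plane."""
    cols = max(1, round(width_m * texels_per_m))
    rows = max(1, round(depth_m * texels_per_m))
    return [[False] * cols for _ in range(rows)]


def mark_cleaned(mask, x_m, z_m, radius_m, texels_per_m):
    """Mark every texel whose center lies within radius_m of (x_m, z_m).

    Coordinates are in meters, measured from one corner of the plane.
    Returns the number of texels that changed from False to True.
    """
    changed = 0
    for r, row in enumerate(mask):
        for c, already_clean in enumerate(row):
            if already_clean:
                continue
            # Center of this texel in plane-local meters.
            cx = (c + 0.5) / texels_per_m
            cz = (r + 0.5) / texels_per_m
            if (cx - x_m) ** 2 + (cz - z_m) ** 2 <= radius_m ** 2:
                row[c] = True
                changed += 1
    return changed


def coverage(mask):
    """Return the fraction of texels marked as cleaned (0.0 to 1.0)."""
    total = sum(len(row) for row in mask)
    return sum(cell for row in mask for cell in row) / total
```

In this sketch, each new position estimate would trigger a call to `mark_cleaned`, and the texture would only need to be redrawn when the call reports that at least one texel changed. The brute-force loop over all texels is chosen for clarity; a real implementation would likely restrict the loop to the bounding box around the vacuum head.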

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

We had not previously considered mounting the Jetson computer at a higher point on the vacuum. This alteration will incur an extra cost to cover the extension cable for the Jetson camera. In addition, we previously planned to use only one of the active illumination light components that we purchased, but we have reconsidered and now plan to use two. This will not incur any additional cost, as we purchased two units to start.

Schedule

The schedule has not changed from prior weeks. Our subtasks remain assigned as follows: Erin on dirt detection, Harshul and Nathalie on augmented reality plane mapping & tracking. We are going to sync on progress and reassign tasks in the coming weeks.

ABET Considerations

Part A was written by Harshul, Part B was written by Nathalie, and Part C was written by Erin.

Part A: Global Factors

Some key global factors that we are considering are human-centric design and technology penetration. To make this app accessible to the broadest customer base, it is important to avoid unnecessary complexity, ensure that the app is intuitive to users, and leverage built-in APIs for accessibility across different language modalities. With respect to technology penetration, we are keenly aware that AR and XR systems are still in the early stages of the product adoption curve. Truly immersive AR solutions like headsets are costly and nowhere near as ubiquitous as smartphones. Because Apple holds significant market share, we felt that targeting its devices would allow greater access to and penetration of our app, given the much lower barrier to entry. Additionally, because our application is designed to use Bluetooth and on-device capabilities, its functionality will not be constrained if it is deployed in rural regions with inconsistent or reduced wireless connectivity.

Part B: Cultural Factors

When accounting for cultural factors, it’s important to consider what cleanliness means in different cultures. There are different customs and traditions associated with cleaning, and people vacuum at different times of day and with different frequency. For example, based on statistics from Electrolux, Koreans vacuum most frequently, while Brazilians and Portuguese people spend the longest time vacuuming. Further, we are assuming that our users already have access to a vacuum and an outlet, which might not necessarily be the case.

Similarly, different cultures have different standards for cleanliness and often keep different decor elements on their floors, which changes the augmented reality mappings in ways that we might not be able to anticipate. Our use case already limits most of these scenarios by specifying simplicity, but ultimately we still want to think about designing products for the practical world.

While our product’s design and use case don’t directly deal with religious symbolism or iconography, we must be considerate of the fact that cleanliness has religious significance in certain cultures. It’s worth being mindful of that in any gamification features that we add, to ensure that we are not being insensitive.

Part C: Environmental Factors

Our project takes environmental factors into account, as we are creating only an additive product. All the components that we are building can be integrated into an existing vacuum design and will not produce a considerable amount of waste. We had initially intended to create an active illumination module using LEDs, but we decided to forgo this idea, as creating a good, vision-safe illumination method would cost raw material: we would have to cycle through multiple iterations of the product, and the final solution might not be as safe as an existing one. As such, we settled on an already manufactured LED. We also recently discussed a method to mount the Jetson and its corresponding components to the vacuum module. One option that we are heavily exploring is a 3D-printed solution. We can opt to use recycled filament, which would be more environmentally friendly than some of the other raw material sources. Moreover, our project as a whole aims to help the user get a faster, better clean. It does not negatively affect any other environmental factors, and the charge needed to power our system is negligible compared to what a typical college student consumes on a daily basis.
