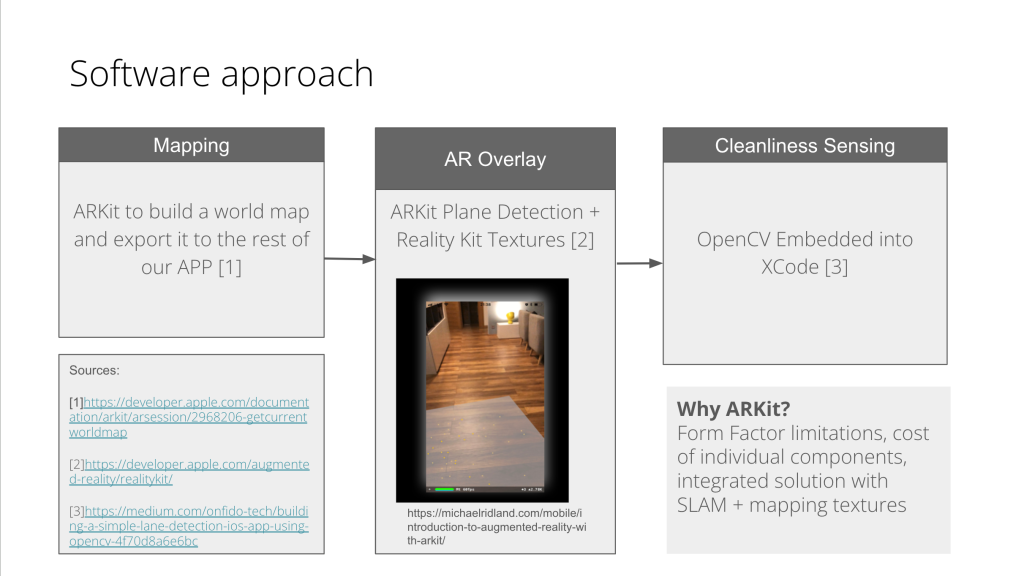

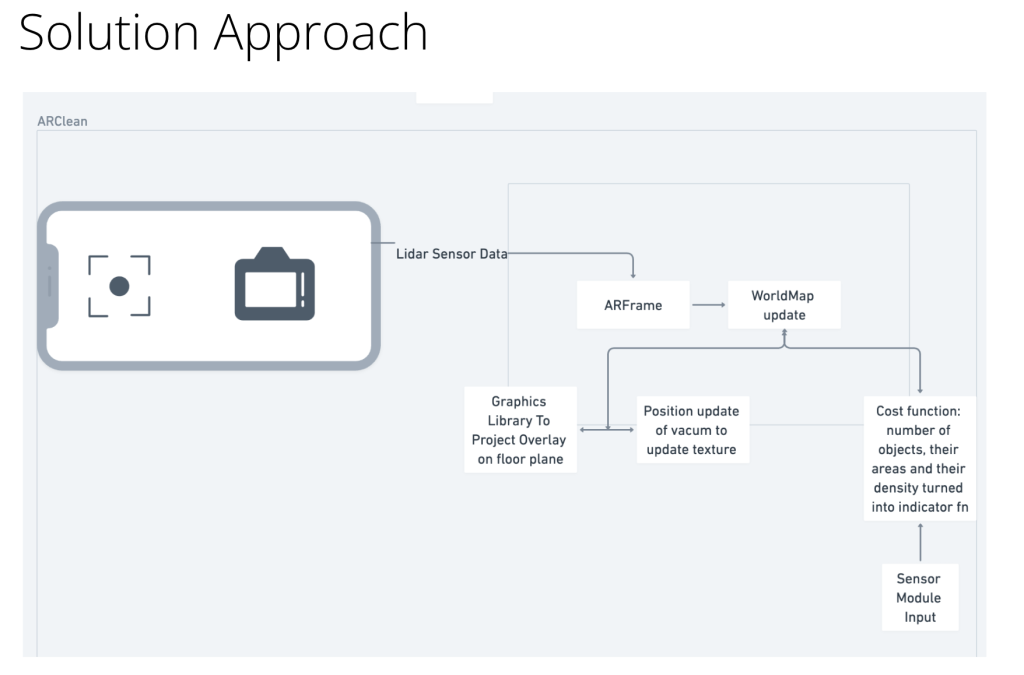

This week I worked on fleshing out the technical requirements based on our initial use case requirements. I researched the accuracy of ARKit and how it would affect our mapping and coverage use case requirements (resources listed below).

- https://www.ncbi.nlm.nih.gov/pmc/articles/PMC10181530/

- https://ieeexplore.ieee.org/abstract/document/10003046

- https://forums.developer.apple.com/forums/thread/724152

I changed the dirt detection requirement to measure particle size instead of a percentage, so that it accurately describes the kind of dirt we are detecting. The earlier wording, dirt on “15% of the visible area”, was not descriptive enough of the metric we actually want to quantify. From there (and using our experimental CV object detection tests), I decided that we should detect dirt particles larger than 1 mm within 10 cm of the camera. I also did calculations for our new use case requirement on battery life, specifically that our vacuum should run for 4 hours without recharging. In terms of battery capacity, this translates to 16,000 mAh in 10 W mode or 8,000 mAh in 5 W mode, with the goal of supporting multiple vacuuming sessions on one charge. Sources include:

- https://forums.developer.nvidia.com/t/current-consumption-of-jetson-nano/83750

- https://www.sciencedirect.com/topics/engineering/battery-capacity

- https://www.youtube.com/watch?v=B4afWen1CsY
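
As a rough sketch of this kind of capacity estimate, the snippet below converts a power draw and runtime into milliamp-hours. The 5 V supply voltage and the 2x headroom factor are illustrative assumptions, not measured values; the function name and parameters are hypothetical as well. Other voltage or margin choices would give different capacities.

```python
def required_capacity_mah(power_w, hours, voltage_v=5.0, headroom=1.0):
    """Estimate the battery capacity (mAh) needed to supply `power_w` watts
    for `hours` hours at a nominal `voltage_v`, scaled by a `headroom` factor."""
    if voltage_v <= 0:
        raise ValueError("voltage_v must be positive")
    current_a = power_w / voltage_v  # I = P / V
    # amps * hours = amp-hours; * 1000 converts to milliamp-hours
    return current_a * hours * 1000.0 * headroom


# Assumed values: 5 V supply and 2x headroom (both illustrative).
for mode_w in (10, 5):
    capacity = required_capacity_mah(mode_w, hours=4, voltage_v=5.0, headroom=2.0)
    print(f"{mode_w} W mode: {capacity:.0f} mAh")
```

Under those assumptions the sketch yields 16,000 mAh for 10 W mode and 8,000 mAh for 5 W mode. Setting `headroom=1.0` gives the bare minimum for a single 4-hour run.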

I also made sure to connect Xcode to my phone so that I would be able to test my code locally.

Next steps include running dummy floor mappings on static rooms and on pictures of rooms. I have no prior exposure to Swift, Xcode, or ARKit, so I need to become familiar with them before I can start tracking floor coverage and figuring out the limits of ARKit as a tool. I want to be able to test local programs on my phone and experiment with the phone’s LiDAR sensor. I think I need to work through tutorials on Swift and Xcode before starting development, which I anticipate taking a lot of time. Another task I need to focus on is preventing false positives in the current object detection algorithm, or developing a threshold that is good enough that slight creases on the surface are not picked up as noise that affects the final result.
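
One possible shape for such a threshold is sketched below. It is not our current algorithm. It converts a detection's pixel size to millimetres with the pinhole camera relation, drops anything under the 1 mm requirement, and rejects long, thin shapes that look more like creases than particles. The focal length in pixels, the aspect ratio cutoff, and all function names are hypothetical placeholders that would need to be calibrated against real test images.

```python
def physical_size_mm(size_px, distance_mm, focal_length_px):
    """Pinhole-camera estimate: real size = pixel size * distance / focal length."""
    return size_px * distance_mm / focal_length_px


def keep_detection(width_px, height_px, distance_mm=100.0, focal_length_px=1000.0,
                   min_size_mm=1.0, max_aspect_ratio=5.0):
    """Return True if a bounding box plausibly corresponds to a dirt particle.

    distance_mm defaults to 100 (the 10 cm requirement); focal_length_px and
    max_aspect_ratio are assumed values for illustration only.
    """
    short_px, long_px = sorted((width_px, height_px))
    if short_px <= 0:
        return False
    if physical_size_mm(long_px, distance_mm, focal_length_px) < min_size_mm:
        return False  # smaller than the minimum particle size
    if long_px / short_px > max_aspect_ratio:
        return False  # long and thin: more likely a crease than a particle
    return True


# Hypothetical bounding boxes as (width_px, height_px).
detections = [(20, 18), (4, 3), (200, 6)]
kept = [d for d in detections if keep_detection(*d)]
print(kept)
```

With these placeholder values, only the first box survives: the second is below 1 mm and the third is too elongated.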

We are on track, but we need to make sure that we can articulate our ideas in the design report and figure out how the technologies work, because they are still relatively unfamiliar to us.