Risks

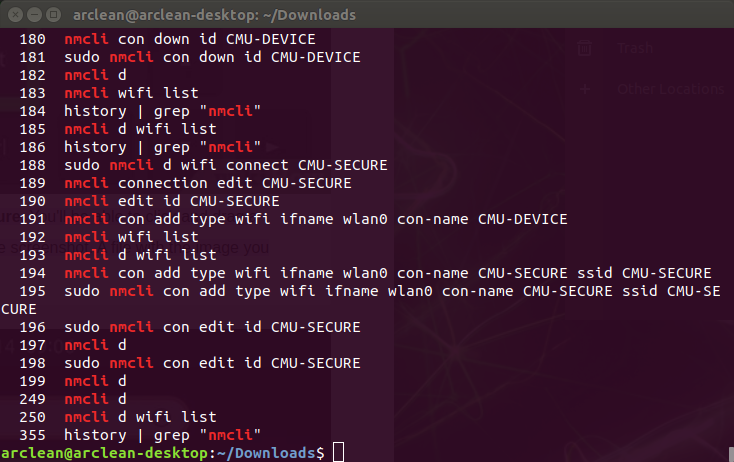

The largest risk our group currently faces is that Erin is dealing with a number of issues with the Jetson. This blocks the entire end-to-end system: we cannot demonstrate whether dirt tracking in the AR application works properly while the Jetson subsystem is offline. Without functioning dirt detection and a BLE connection, the AR system does not have the data it needs to determine whether the flooring is clean or dirty, and we have no way of validating whether the transformation of 3D data points on the AR side is accurate. Moreover, Erin is investigating whether it is even possible to speed up the Bluetooth data transmission. At present, an async sleep call of around ten seconds appears to be necessary to preserve functionality. This, along with the BLE data transmission limit, may force us to readjust our use-case requirements.
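To make the BLE transmission limit concrete, here is a minimal sketch of how a message could be split into packet-sized chunks and reassembled on the receiving side. It is not our actual Jetson code. It assumes the default ATT MTU of 23 bytes (20 usable bytes per packet); a larger MTU may be negotiated in practice. The two-byte framing header, the function names `chunk_payload` and `reassemble`, and the example message are all hypothetical.

```python
from typing import List

# ASSUMPTION: default ATT MTU of 23 bytes minus a 3-byte ATT header leaves
# 20 usable bytes per packet. The negotiated MTU may be larger in practice.
ATT_PAYLOAD_BYTES = 20
# HYPOTHETICAL framing: 1-byte chunk index followed by 1-byte total chunk count.
HEADER_BYTES = 2


def chunk_payload(payload: bytes, max_bytes: int = ATT_PAYLOAD_BYTES) -> List[bytes]:
    """Split a payload into framed chunks that each fit in one packet."""
    body_size = max_bytes - HEADER_BYTES
    if body_size <= 0:
        raise ValueError("max_bytes must exceed the header size")
    # An empty payload still produces one (empty) chunk.
    bodies = [payload[i:i + body_size] for i in range(0, len(payload), body_size)] or [b""]
    if len(bodies) > 255:
        raise ValueError("payload too large for a 1-byte chunk count")
    return [bytes([index, len(bodies)]) + body for index, body in enumerate(bodies)]


def reassemble(chunks: List[bytes]) -> bytes:
    """Rebuild the payload from framed chunks, in whatever order they arrived."""
    if not chunks:
        raise ValueError("no chunks received")
    ordered = sorted(chunks, key=lambda chunk: chunk[0])
    expected = ordered[0][1]
    if len(ordered) != expected:
        raise ValueError("missing chunks")
    return b"".join(chunk[HEADER_BYTES:] for chunk in ordered)


if __name__ == "__main__":
    # Hypothetical dirt-detection message; the real message format may differ.
    message = b'{"t": 1712345678.25, "dirty": true}'
    packets = chunk_payload(message)
    print(len(packets), "packets,", max(len(p) for p in packets), "bytes max")
    print(reassemble(packets) == message)
```

Under these assumptions, even a short status message needs more than one packet, which is why the per-packet limit and the transmission delay together constrain how much data we can send per detection.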

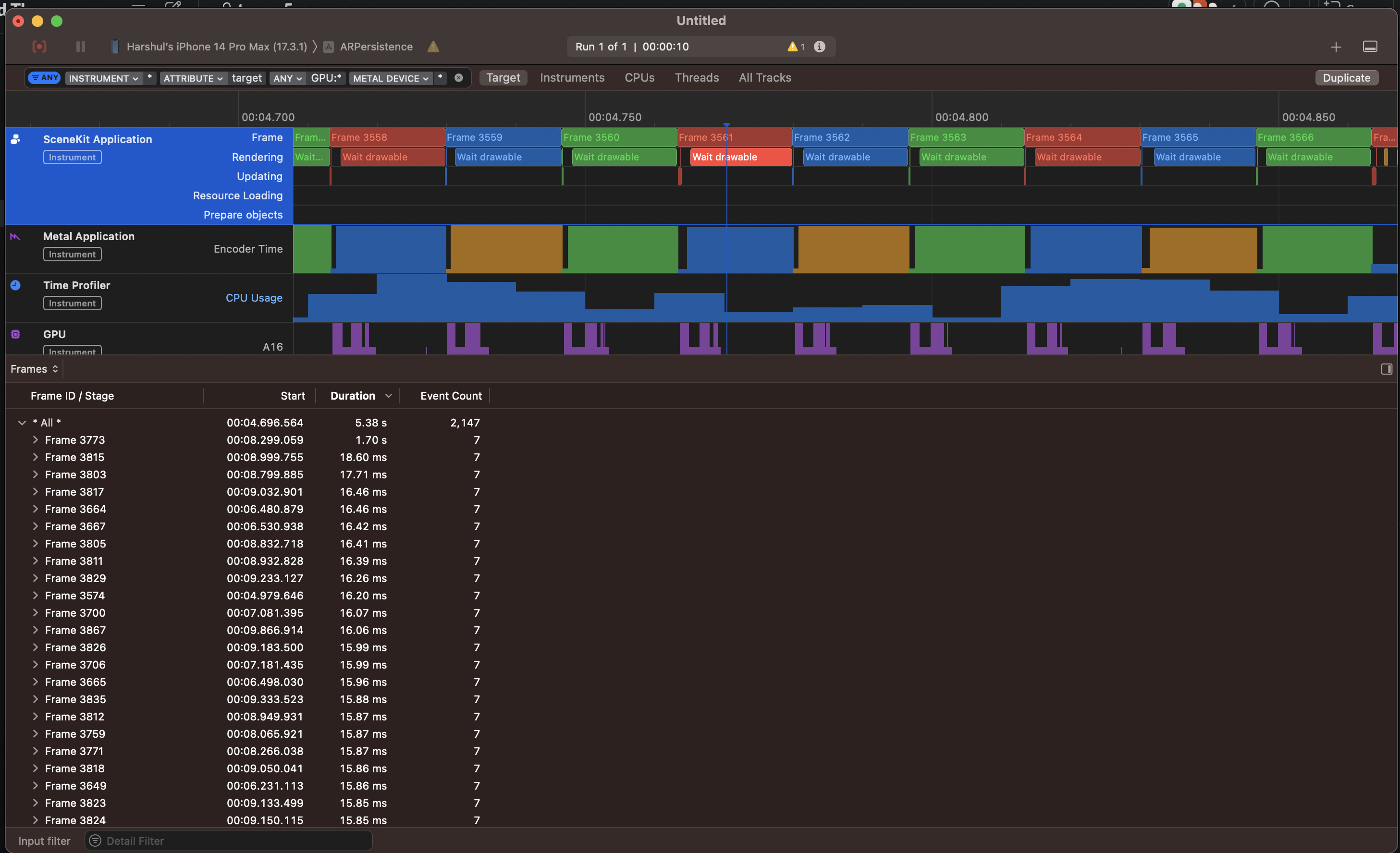

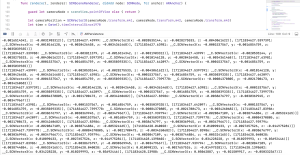

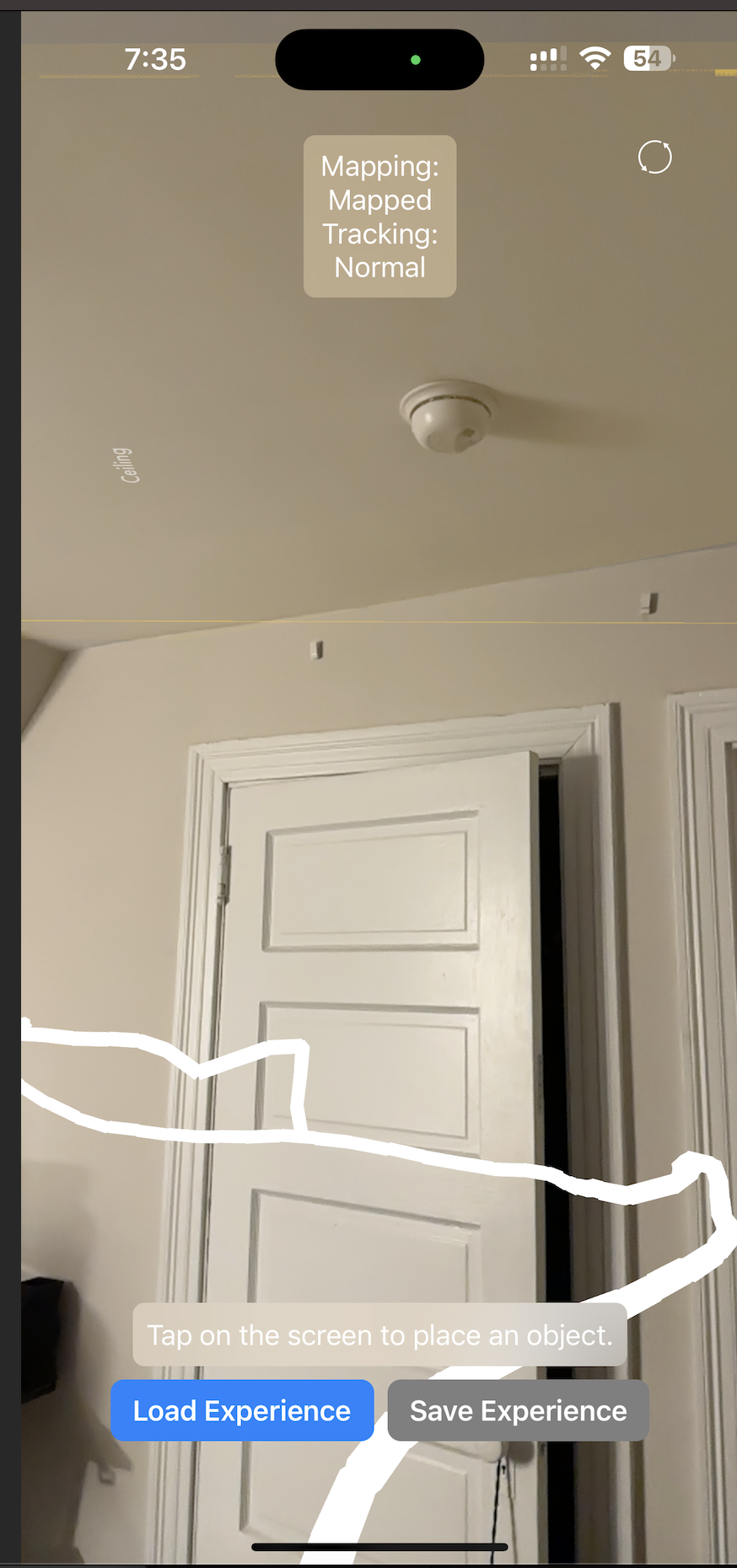

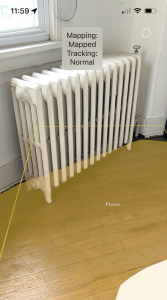

Nathalie and Harshul have been working on tracking the vacuum head in the AR space and on getting the coordinate transformation correct. While the coordinate transformation depends on the Jetson subsystem (as mentioned above), the vacuum head tracking does not, and we have made significant progress on that front.

Nathalie has also been working on mounting the phone to the physical vacuum. We purchased a phone stand rather than designing our own mounting system, which saved us time. However, the range of angles that the stand can accommodate may not be enough for the iPhone to get a satisfactory read. We plan to test this more extensively so we can determine the best orientation and mounting process for the iPhone.

System Validation:

Building on our design report and incorporating Professor Kim’s feedback, we outlined the end-to-end validation test that he would like to see from our system. Below is the test plan for formalizing and carrying out this test.

The goal is to have every subsystem operational and to test connectivity and integration between the subsystems.

Subsystems:

- Bluetooth (Jetson)

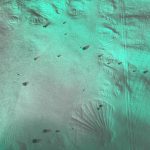

- Dirt Detection (Jetson)

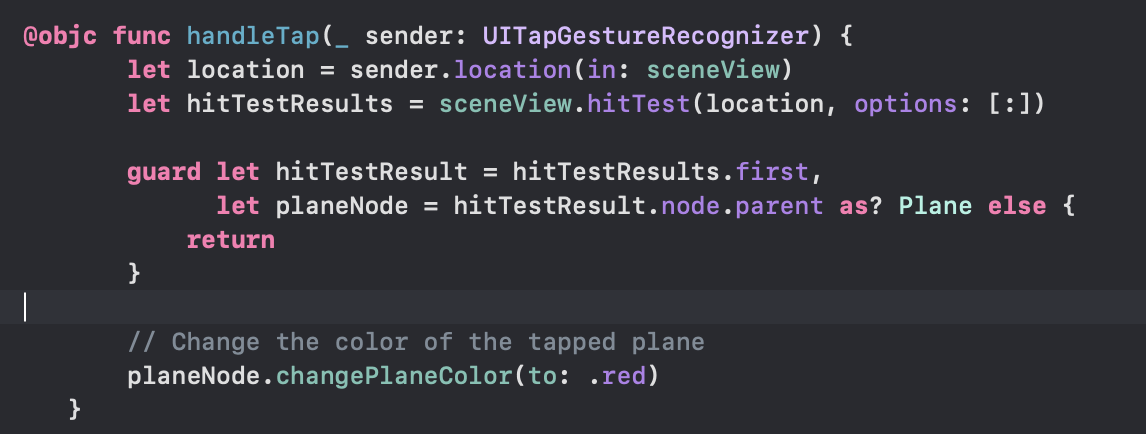

- Plane Projection + Image Detection (ARKit)

- Plane Detection + Ui

- Bluetooth (ARkit)

- Time:position queue (ARKit)

With the room mapped in an initial mapping pass and the plane frozen, place the phone into the mount and start drawing to track the vacuum position. Verify that the image is detected, that the drawn line is behind the vacuum, and that the queue is being populated with time:position points. (Tests: 3, 4, 5, 6)

Place a large, visible object behind the vacuum head in view of the active illumination devices and the Jetson camera. Verify that the dirt detection script categorizes the image as “dirty”, and proceed to validate that this message is sent from the Jetson to the working iPhone device. Additionally, validate that the iPhone has received the intended message from the Jetson, and then proceed to verify that the AR application highlights the proper portion of flooring (containing the large object). (Tests: 1, 2)
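For the final highlighting step, the AR side needs to turn a "dirty" message from the Jetson into a floor position. One possible approach, sketched below, is to look up the entry in the time:position queue whose timestamp is closest to the detection time. This is an illustration rather than our implementation: the function name `nearest_position`, the `max_gap_s` tolerance, and the sample values are hypothetical, and the sketch assumes that the Jetson and iPhone timestamps are on a comparable clock.

```python
from bisect import bisect_left
from typing import List, Optional, Tuple

Position = Tuple[float, float, float]
QueueEntry = Tuple[float, Position]  # (timestamp in seconds, position in AR space)


def nearest_position(
    queue: List[QueueEntry],
    timestamp: float,
    max_gap_s: float = 0.5,  # HYPOTHETICAL tolerance, not a measured value
) -> Optional[Position]:
    """Return the queued position closest in time to `timestamp`.

    `queue` must be sorted by ascending timestamp. Returns None if the queue
    is empty or the closest entry is more than `max_gap_s` seconds away.
    """
    if not queue:
        return None
    times = [entry_time for entry_time, _ in queue]
    i = bisect_left(times, timestamp)
    # Only the neighbors on either side of the insertion point can be closest.
    candidates = queue[max(i - 1, 0):i + 1]
    best_time, best_position = min(candidates, key=lambda entry: abs(entry[0] - timestamp))
    if abs(best_time - timestamp) > max_gap_s:
        return None
    return best_position


if __name__ == "__main__":
    # Made-up sample data for illustration only.
    queue = [
        (10.0, (0.0, 0.0, 0.0)),
        (10.5, (0.1, 0.0, 0.0)),
        (11.0, (0.2, 0.0, 0.0)),
    ]
    print(nearest_position(queue, 10.6))
    print(nearest_position(queue, 20.0))
```

Rejecting matches that are too far apart in time keeps a stale or delayed message from highlighting the wrong patch of floor, which matters given the transmission delay described under Risks.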

Schedule

Our schedule has been unexpectedly delayed by the Jetson malfunctions this week, which have hindered progress on dirt detection. Erin has been especially involved with this, and we hope to have it reliably resolved soon so that she can instead focus on reducing the latency of the Bluetooth communication. Nathalie and Harshul have been making steady progress on the AR front, but it is crucial for each of our subsystems to have polished functionality so that we can make more integration progress, especially with the hardware. We are mounting the Jetson this week or over the weekend to measure the constant translational difference, so that we can add it to our code and run accompanying tests to ensure maximal precision. One challenge has been our differing free times during the day; since we are working on integration testing between subsystems, it is important that we all meet together with the hardware components. To mitigate this, we have set aside blocks of time on our calendars for specific integration tests.
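As a rough sketch of how the measured translational difference could be applied once we have it, the snippet below shifts a tracked floor position by a fixed offset. It assumes the offset is measured in the vacuum's own frame (forward and left) and therefore has to be rotated by the vacuum's heading before being added. The offset value, the 2D floor-plane simplification, and the name `camera_floor_point` are placeholders, not measurements or code from our project.

```python
import math
from typing import Tuple

# HYPOTHETICAL offset in meters from the tracked point to the center of the
# Jetson camera's view, in the vacuum's frame: (forward, left).
# The real value will come from measuring the mounted hardware.
CAMERA_OFFSET_M = (-0.30, 0.0)


def camera_floor_point(
    tracked_xy: Tuple[float, float],
    heading_rad: float,
    offset: Tuple[float, float] = CAMERA_OFFSET_M,
) -> Tuple[float, float]:
    """Shift a tracked floor position by a constant offset in the vacuum frame.

    `heading_rad` is the vacuum's heading on the floor plane, measured
    counterclockwise from the world x-axis.
    """
    forward, left = offset
    cos_h, sin_h = math.cos(heading_rad), math.sin(heading_rad)
    # Rotate the vacuum-frame offset into world coordinates, then translate.
    world_dx = forward * cos_h - left * sin_h
    world_dy = forward * sin_h + left * cos_h
    return (tracked_xy[0] + world_dx, tracked_xy[1] + world_dy)


if __name__ == "__main__":
    # Vacuum at (1.0, 2.0) facing along the world x-axis.
    print(camera_floor_point((1.0, 2.0), 0.0))
```

If the offset turns out to depend on the phone's mounting angle as well, a single constant translation will not be enough and the transform would need a rotation term measured from the mount.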