Ifeanyi’s Status Report for 04/27/2024

This week, I worked on localization accuracy, localization smoothing, and orientation estimation and smoothing. Additionally, I worked with the team on testing in various settings around campus. For localization accuracy, I made a filter for the estimated position that doesn’t allow it to change at a rate faster than a maximum speed of 2 m/s. That is, if we are estimated to be somewhere and, one second later, the system says we are now 5 m away, we reject this data point. This greatly increased the accuracy and stability of the localization. For orientation, I programmed a check for how far we’ve moved since our last “sample point”. When this distance reaches a threshold (2 m), we collect another sample point. Then, we estimate our angle of movement to be the angle of the vector from our previous sample point to the current one. To smooth the orientation reading even further, we used the gyroscope to rotate the user on the display in between sample point updates. Finally, to smooth the localization readings a bit, I added a velocity estimator that times the position estimates to calculate a predicted velocity, which we can then use to move the user on the map in between location updates.
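A minimal Python sketch of the heading logic described above, assuming planar map coordinates in meters, angles measured counter-clockwise from the +x axis, and a gyroscope yaw rate in rad/s with the same sign convention. The names `HeadingEstimator`, `update_position`, `update_gyro`, and `wrap_angle` are hypothetical; this is not the actual tag code.

```python
import math
from typing import Optional, Tuple


def wrap_angle(rad: float) -> float:
    """Normalize an angle to the range (-pi, pi]."""
    return math.atan2(math.sin(rad), math.cos(rad))


class HeadingEstimator:
    def __init__(self, sample_distance_m: float = 2.0):
        self.sample_distance_m = sample_distance_m  # 2 m threshold from the text
        self.last_sample: Optional[Tuple[float, float]] = None
        self.heading_rad: Optional[float] = None  # None until the first estimate

    def update_position(self, x: float, y: float) -> None:
        """Feed a (smoothed) UWB position; take a new sample point every 2 m."""
        if self.last_sample is None:
            self.last_sample = (x, y)
            return
        dx = x - self.last_sample[0]
        dy = y - self.last_sample[1]
        if math.hypot(dx, dy) >= self.sample_distance_m:
            # Heading = direction of the vector from the previous sample point
            # to the current one.
            self.heading_rad = wrap_angle(math.atan2(dy, dx))
            self.last_sample = (x, y)

    def update_gyro(self, yaw_rate_rad_s: float, dt_s: float) -> None:
        """Between sample points, rotate the displayed heading using the gyro."""
        if self.heading_rad is not None:
            self.heading_rad = wrap_angle(self.heading_rad + yaw_rate_rad_s * dt_s)
```

Because small position jitter never reaches the 2 m threshold, it cannot swing the heading; the gyroscope only fills in rotation until the next sample point resets the estimate.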

My progress is on schedule, as I was scheduled to revamp the orientation estimation this week, as well as to generally test and fix anything that was found to be wrong or could perform better (faster or more accurately).

Next week, I plan to complete the final testing with the team, to make sure everything works, especially in the conditions that we expect for the demo.

Weelie’s Status Report for 4/27/2024

- What did you personally accomplish this week on the project?

Last week, I mainly worked on two things.

First, I prepared for the final presentation. I spent all of last weekend and Monday preparing it.

Second, I continued to work on optimizing the localization algorithms. I added a filter that checks the walking speed: if the detected walking speed is unreasonably fast, the filter blocks the location update. I also helped Ifeanyi incorporate rotation into the localization system, so our system should now be able to generate proper rotation.
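The speed check can be sketched as follows, together with the velocity-based smoothing described in Ifeanyi's report above. This is an illustrative Python sketch, not the code in our repository; `SpeedGatedTracker`, its methods, and the choice to compare each fix against the last *accepted* fix are assumptions.

```python
import math


class SpeedGatedTracker:
    """Reject fixes that imply walking faster than max_speed_m_s, and keep a
    velocity estimate for moving the user between location updates."""

    def __init__(self, max_speed_m_s=2.0):
        self.max_speed_m_s = max_speed_m_s
        self.last = None           # (t, x, y) of the last accepted fix
        self.velocity = (0.0, 0.0)

    def update(self, t, x, y):
        """Return True if the fix at time t (seconds) is accepted."""
        if self.last is None:
            self.last = (t, x, y)
            return True
        t0, x0, y0 = self.last
        dt = t - t0
        if dt <= 0:
            return False           # duplicate or out-of-order timestamp
        vx, vy = (x - x0) / dt, (y - y0) / dt
        if math.hypot(vx, vy) > self.max_speed_m_s:
            return False           # implausible jump: drop this fix
        self.last = (t, x, y)
        self.velocity = (vx, vy)
        return True

    def predict(self, t):
        """Extrapolate from the last accepted fix using the velocity estimate."""
        if self.last is None:
            return None
        t0, x0, y0 = self.last
        vx, vy = self.velocity
        return (x0 + vx * (t - t0), y0 + vy * (t - t0))
```

Because rejected fixes do not move the reference point, the elapsed time keeps growing, so a genuine move is eventually accepted once its implied speed falls below 2 m/s.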

Besides the above changes, I worked with the whole team to set up the system in a large area similar to the gym, and we made sure that the anchors’ signals covered the entire area.

Github Repo: https://github.com/weelieguo/18500-dw1001

- Is your progress on schedule or behind?

Based on the schedule, we should be in the final testing stage, so we are on schedule.

- What deliverables do you hope to complete in the next week?

This week, we will make our poster and do more testing to make sure that our system is stable on the final demo day.

Team Status Report for 04/27/2024

Significant Risks

This week we successfully integrated the IMU with the rest of the localization system, so we can constantly display the user’s rotation along with their position. We also tested the localization system in a larger area somewhat similar to the space in the Wiegand Gym, to make sure the tag could read its distance to each of the anchors. The testing was mostly positive: although the tag-to-anchor range is approximately 34 meters (connectivity beyond this range is still possible, though not consistent enough for our localization system), in practice the connection becomes somewhat inconsistent beyond around 25 meters. The tag firmware attempts to switch to a different anchor whenever packets are dropped between the tag and an anchor, and this may happen more often at ranges greater than 25 meters, which can make the anchor connections less stable. However, this testing does show that placing anchors more densely improves the stability of the connections in the network. In conclusion, this week we resolved the outstanding deliverables we had promised to implement as part of our project.

Changes to System Design

We incorporated the IMU into our localization system this week to display the rotation/heading of the user on our map. This was largely successful; hence there were no system design changes required.

Schedule

This next week our team will be primarily working on the final deliverables for the project. We also aim to do some further testing (optimistically in the Wiegand Gym itself if we can enter at a time where the basketball activity on the courts is not especially high). We will also work on our presentation for demo day.

Progress:

The video below displays the capabilities of the authentication system, with the user being directed to create a new account, as well as being able to edit some aspects of their account and sign out using a profile page.

This Week’s Prompt:

Unit tests:

- Range of the anchors and tag. This was tested to ensure that the maximum communication range is large enough that installing the system would not be excessively expensive. Our target goal was 25 meters. We measured the furthest distance in a hallway at which our tag could still read distances from an anchor and found it to be around 34 meters. This met our goal, so we did not need to change our anchor placement strategy.

- Distance measurement accuracy between an anchor and a tag. How far does the measured anchor-to-tag distance deviate from the true distance? Our target goal was 0.23 meters (this number is the largest distance error that, in a typical 25×2 meter hallway, keeps the multilateration localization error within 1 meter). The observed distance error, averaged over 10 readings, was 0.15 meters, which passes the test. These numbers suggest the localization system is theoretically capable of highly accurate localization.

- Localization Accuracy of the localization system. We compared the average predicted position from the localization system with the actual position we were standing at in a hallway and found that the average misprediction distance was approximately 0.2 meters (one measurement taken every 2 meters over a 20-meter stretch of hallway). We also compared trilateration and multilateration algorithms on localization accuracy. Overall, both are competitive. However, because the DWM1001 distance readings sometimes fluctuate inside a hallway, we found that multilateration can improve accuracy by approximately 0.1 meters. These numbers meet our goal of <1 meter accuracy and are sufficient for accurately tracking a student.

- Localization Precision: We want to ensure the user’s predicted position does not sporadically jump around on the screen. We stood in one location and measured the frequency and distance of the jumps we saw in the localization system. We noticed maximum fluctuations of approximately 2.1 meters, which was larger than our 0.5 meter goal. Because of this, we designed a filter for the final position estimate that uses the change in estimated position over time to calculate our velocity, and rejects data points that would imply a velocity higher than a set maximum (which we specified to be 2 m/s).

- Heading Accuracy: We want to make sure the user’s estimated orientation matches their actual orientation. To test this, we walked along the hallway, turning around, before stopping facing parallel to the hallway, and then compared the actual heading with the heading shown in the browser, taking the difference between the two angles. Over 15 trials, the average difference was 20 degrees, which meets our goal of 20 degrees.

- Battery life of tag: We tested the battery life of the tag by running the Raspberry Pi with the localization system running constantly. The battery lasts for at least 10 hours, which beats our goal of 4 hours.

- Position Update Latency: We measured the latency of our multilateration and Nelder-Mead algorithm and found that it averages 20 ms, well under the 500 ms goal, so the algorithm is fast enough to support a high update frequency.

- Distance update frequency: We measured the frequency at which the tag can get values from anchors and found it to be 10.1 Hz. This shows our localization system can easily provide frequent updates of the user’s position (as long as other factors are not bottlenecking the process).

- Tag to Webapp Latency: We found that the latency of communication between the user’s Raspberry Pi and the server was on average around 225 ms. However, even this best-case figure relied on the server processing many packets at once (which in some cases it could not keep up with), so we switched to using WebSockets, which decreased our average latency to 67 ms. Because our navigation system does not require extremely low latency to avoid disorienting the user, we find this is fast enough to provide timely updates. In particular, we found that this latency lets the rotation updates from the RPi display fluidly.

- Total Latency: We measured the total latency between user movement (e.g. walking past a door) and that movement being shown on the web application, and found an average delay of around 0.84 seconds. We believe this is sufficient to prevent a user from getting lost.

- Navigation Algorithm: We benchmarked the speed of the navigation algorithm and found that it produces results in an average of 125 ms, which is fast enough to provide the user with instructions while they are using the application.

- User Experience: We asked 4 of our potential clients (students who were unfamiliar with a building) to use our application to navigate to a specific room, then fill out a quick survey. We used their qualitative feedback to improve some aspects of the web server’s user interface. The users’ quantitative rating of the clarity and helpfulness of the directions was 4.5/5.0.
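When averaging heading errors as in the heading accuracy test above, a plain subtraction can overstate the error near the 0°/360° boundary (350° vs. 10° is a 20° error, not 340°). Below is a small Python sketch of a wraparound-aware comparison; the function names are hypothetical, and this is not a claim about exactly how our reported numbers were computed.

```python
def angle_error_deg(estimated_deg, actual_deg):
    """Absolute difference between two headings in degrees, with wraparound."""
    diff = (estimated_deg - actual_deg) % 360.0  # in [0, 360)
    return min(diff, 360.0 - diff)               # shorter way around the circle


def mean_angle_error_deg(pairs):
    """Average absolute heading error over (estimated, actual) trial pairs."""
    errors = [angle_error_deg(est, act) for est, act in pairs]
    return sum(errors) / len(errors)
```

For example, `mean_angle_error_deg([(350, 10), (90, 80)])` averages errors of 20° and 10°.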

Jeff’s Status Report for 04/27/2024

What did I do this week?

I spent this week assisting my team by working on the final presentation and collecting data whilst testing various facets of the verification/validation portion of the project. I also spent time developing user authentication and profiles for the web application portion of the project.

I worked with my team to collect material for the final presentation, doing some additional testing on Sunday to get more solid numbers on metrics regarding localization accuracy and total system latency by testing the system in the hallways of ReH 2 and 3. I also worked on capturing a short video demo to include in the demo presentation.

After the final presentations, I spent time addressing some of the ethical concerns that arose from our ethics readings and discussions earlier in the semester. In particular, I wanted to improve the security and privacy of the application by introducing user authentication and profiles. Django conveniently has authentication built into its framework; however, I needed to read through some of the documentation to understand exactly what I needed to implement. For example, I learned that Django has prebuilt forms and views specifically for handling logging in and creating accounts. I also had the option of writing my own HTML page to display the login form, so that its design conforms to the rest of the website.

Then, I tinkered with some of the capabilities of the “account registration form”, having a custom form inherit from the built-in form so that I could easily create a database model associating additional information with the default Django User model (such as the person’s name or the tag ID they were using). I created a view for handling new account creation, in which I added the capability of returning errors to the browser in case the user entered something incorrectly. The video below displays the capabilities of the authentication system, with the user being directed to create a new account, as well as being able to edit some aspects of their account and sign out using a profile page.

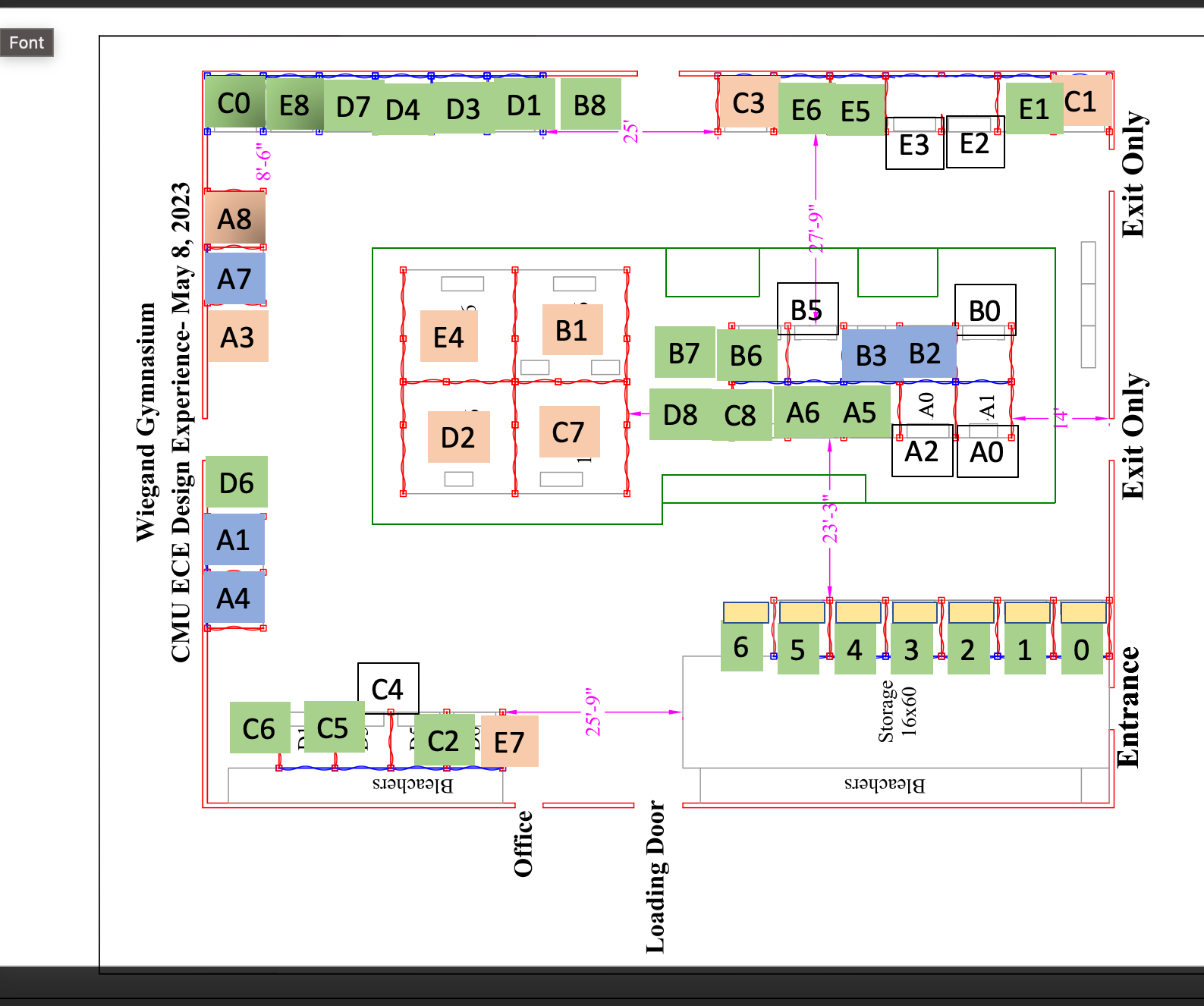

Near the end of the week, I met again with my team to test the rotation capabilities of the device, as well as to test the localization system in a larger, open area that somewhat resembles the Wiegand Gym (in this case, the Engineering Quad). Additionally, I worked on mapping out the Wiegand Gym, creating several graphs of areas in which the navigation system could operate. These images are shown below (with the assumed available navigation paths shown in green, representing “hallways”). I had previously measured the dimensions of the gym; the former image involves a ~30×15 meter walkable path, and the latter involves a ~40×30 meter walkable path.

Is my progress on schedule?

From the progress with the user authentication system and the progress with mapping out the Wiegand Gym, my progress aligns with what I have planned to do this week.

Next week’s deliverables:

Next week I will be working on the final deliverables for the project to round out the semester. I will also be doing further testing with my team to ensure that both the navigation and localization systems are ready for demo day. Additionally, there are some refinements I can still implement before demo day. One is limiting how frequently the browser can send new navigation GET requests to the server. Currently, the browser’s JS can spam the server with GET requests with no delay; I want to enforce a minimum delay of ~3 seconds between requests.
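The planned minimum delay is essentially a throttle. The real check would live in the browser’s JavaScript, but to keep all examples in one language the logic is sketched here in Python. `RequestThrottle` and `try_acquire` are hypothetical names, and the injectable clock exists only to make the behavior easy to test.

```python
import time


class RequestThrottle:
    """Allow at most one navigation request per `min_interval_s` seconds."""

    def __init__(self, min_interval_s=3.0, clock=time.monotonic):
        self.min_interval_s = min_interval_s
        self.clock = clock          # injectable so the logic can be tested
        self._last_sent = None      # time of the last request let through

    def try_acquire(self):
        """Return True if a request may be sent now, and record it."""
        now = self.clock()
        if self._last_sent is not None and now - self._last_sent < self.min_interval_s:
            return False            # too soon: skip this request
        self._last_sent = now
        return True
```

In this sketch a request that arrives too soon is simply dropped; a variant could instead schedule one trailing request so that the most recent reroute is not lost.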

Ifeanyi’s Status Report for 04/20/2024

These past two weeks, I mainly worked on using the IMU to integrate the position of the tag device, so that this position estimate and the one given by the anchors could form a complementary filter and hopefully give us far more accurate localization results. This was to work by calibrating the device at rest to measure the gravity vector, then rotating this gravity vector as the gyroscope measured angular velocity. With a constantly updated gravity vector, we can subtract it from the acceleration measured by the accelerometer to obtain the non-gravity acceleration of the tag device, which we can then double integrate to get the relative position. However, after a lot of work, and after trying many different measurement, filtering, calibration, and integration methods, I was unable to find a way to integrate the user’s position in any remotely accurate fashion. The main issue lay with the gyroscope, which produced small errors in the gravity vector rotation. Even these small errors meant gravity wasn’t always being subtracted properly, leading to lots of spurious acceleration that built up a huge position error very quickly. Additionally, I helped the rest of the team do quite a bit of testing and benchmarking of the system ahead of the final presentations.
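A back-of-the-envelope estimate shows why small gyroscope errors are so damaging. Assume (for illustration only, not a measured value) that the tracked gravity vector is tilted by a constant small angle θ from the true one and that the tag starts at rest. Then roughly g sin θ of gravity is left over after subtraction, and double integration turns that constant bias into a position error that grows quadratically in time:

```latex
\begin{aligned}
a_{\mathrm{err}} &\approx g \sin\theta \\
v_{\mathrm{err}}(t) &= \int_0^t a_{\mathrm{err}}\,d\tau = a_{\mathrm{err}}\,t \\
p_{\mathrm{err}}(t) &= \int_0^t v_{\mathrm{err}}(\tau)\,d\tau = \tfrac{1}{2}\,a_{\mathrm{err}}\,t^2 \\
p_{\mathrm{err}}(10\,\mathrm{s}) &\approx \tfrac{1}{2}\,(9.81\,\mathrm{m/s^2})(\sin 1^\circ)(10\,\mathrm{s})^2 \approx 8.6\,\mathrm{m}
\end{aligned}
```

So even a 1° tilt error would, on its own, produce several meters of drift within ten seconds.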

According to the schedule, my progress is on track, since all work on the tag device (my main slice of the project) is done, and we are now solely in the testing phase, trying out the device and tweaking what we can for higher accuracy or greater responsiveness where possible.

In this coming week, I plan to just work with the rest of the team to continue this testing, and continue implementing whatever tweaks we can find to make to help the system work as well as possible for the final demo.

Also, to learn new things that will help with the project, I usually look for the quickest way to “get my hands dirty” with the particular technology or algorithm, whether it be an online demo or some sort of starter code. I run that code over and over again, playing around with it and tweaking different things to see how the technology or algorithm reacts to get an intuitive understanding of how this thing really works and how to control it.

Team Status Report for 04/20/2024

Significant Risks

This week we worked on developing the localization system on the Raspberry Pi 4. Through this process, we fixed a major issue with the distance acquisition speed on the tag device (improving the rate of distance acquisition from anchors from approximately 1 Hz to 10.1 Hz), resulting in significantly faster update speeds.

We spent time this week focusing on large-scale testing on the third floor of ReH. The ~62 meter long hallways in this building are significantly longer than the 26 meter hallways of HH A level where we ran some of our previous tests, and are a closer approximation of what we might find when demoing in a larger environment such as the Wiegand Gym (approximately 32×40 meters). This testing revealed that the firmware of the DWM1001 tag devices supports communicating with at most 4 anchors at a time. This in itself is not a problem, because multilateration using 4 anchor distances seems to give relatively accurate results. However, we found that the tag device seems to have difficulty swapping between the anchors it communicates with. From the documentation, it appears that it is supposed to choose anchors based on their “quadrant” (e.g. connecting to the nearest anchors in each quadrant). Our tag device sometimes had difficulty finding the closest anchors, preferring to “stick” to a set of anchors that are still in range, yet further away. Our main goal for the following week is to address this issue.

We have begun floating several ideas. One is to configure the initial locations of the anchors, which could help the tag better identify which anchors are available for it to connect to at a given time. Another is to implement strategies for resetting the connections between the anchors and the tag, forcing the tag device to reconnect with the closest anchors in its range.

Changes to System Design

This week we resolved the issue of the tag device not updating its position frequently enough (from 1 Hz to 10.1 Hz, as described earlier). Hence, we have chosen to remove the IMU position estimation we had earlier planned to include. With our higher update frequency, we find it is no longer necessary to rely on an IMU to adjust the user’s position, as the “ground truth” from the UWB localization system is updated more frequently.

Schedule

This next week we will be working on improving the localization system so that it adapts better to regions with more than 4 anchors. This is expected to be a team effort, as it takes considerable effort to map out buildings, set up the anchors in the correct positions, and do further configuration of the tag and web server. We also hope to continue working on the required final deliverables, such as the poster.

Progress

The following is a video of the localization system and navigation system working in tandem on HH A level, with the localization system running on the Raspberry Pi. The localization system runs at a higher frequency than before, and the navigation system is now able to provide directions, as well as estimates of the remaining distance and travel time.

The following is another video of a “simulated” version of the navigation system working, displaying more details including the system providing new directions to the user.

Jeff’s Status Report for 04/20/2024

What did I do this week?

I spent this week focused on developing the navigation system and testing its basic functionality, as well as working with my team to test the localization system over larger areas.

For the navigation system, I built on top of my progress in the previous week to work on displaying instructions and expected time of arrival for the users to view. I created a mock user interface with the header displaying the direction, as well as a footer displaying the time remaining and the total distance left to travel. I added a button in the footer to go between the different modes (navigation vs. searching for a location) and then worked on developing the CSS to improve the graphical interface.

Then, I worked on improving the efficiency of the navigation system by offloading as much work as possible to the frontend, so that it would not spam the server with new navigation requests. This involved synchronizing the “path” drawn by the navigation system with either the x or y position of the user: if the user walks in a direction parallel to the current path segment, the path “grows” or “shrinks” to compensate, continuing to show a visual indicator of where the user should go. Then, if the user deviates too far from the path, the frontend calls the navigation algorithm in the backend for updated directions to reroute the user.
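The path synchronization and reroute check can be sketched in Python (the real logic runs in the browser’s JavaScript). This assumes axis-aligned path segments in map meters; `Segment`, `sync_segment`, and the 1.5 m `max_offset_m` reroute threshold are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """Axis-aligned path segment from (x0, y0) to (x1, y1), in meters."""
    x0: float
    y0: float
    x1: float
    y1: float

    @property
    def horizontal(self) -> bool:
        return self.y0 == self.y1


def sync_segment(seg, ux, uy, max_offset_m=1.5):
    """Move the segment's start to follow the user along the segment's axis.

    Returns (updated_segment, needs_reroute). The drawn path shrinks as the
    user approaches the end point and grows if they walk away from it.
    """
    if seg.horizontal:
        offset = abs(uy - seg.y0)                    # sideways distance
        updated = Segment(ux, seg.y0, seg.x1, seg.y1)
    else:
        offset = abs(ux - seg.x0)
        updated = Segment(seg.x0, uy, seg.x1, seg.y1)
    # Too far off the hallway line: ask the backend for a new route.
    return updated, offset > max_offset_m
```

Only the coordinate along the segment is tracked; the perpendicular offset is used solely to decide when a server round trip is worth making.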

Finally, all that was left was to update the backend to provide directions to the user (whether they should turn left, turn right, or have arrived at their destination). This was done by parsing the line segments generated by my A* algorithm to determine which direction they should turn in with respect to the Cartesian plane. I also needed to add fields to the database model describing the scale of the image, which was calculated from the actual measurements I had previously taken along with the size of the walkable area in the image.
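One common way to turn consecutive line segments into left/right instructions is the sign of the 2-D cross product of successive direction vectors. The sketch below assumes waypoints as (x, y) tuples in a Cartesian frame with +y pointing up; `turn_direction` and `directions` are hypothetical names, and this is not necessarily how our backend does it.

```python
def turn_direction(a, b, c, eps=1e-9):
    """Classify the turn at waypoint b when walking a -> b -> c.

    Uses the z-component of the cross product of (b - a) and (c - b).
    Assumes +y points up; in image coordinates (+y down), left and right swap.
    """
    cross = (b[0] - a[0]) * (c[1] - b[1]) - (b[1] - a[1]) * (c[0] - b[0])
    if cross > eps:
        return "left"
    if cross < -eps:
        return "right"
    return "straight"


def directions(path):
    """Turn instructions for a polyline of waypoints, ending with 'arrive'."""
    steps = [turn_direction(a, b, c) for a, b, c in zip(path, path[1:], path[2:])]
    return [s for s in steps if s != "straight"] + ["arrive"]
```

For example, walking east, then north, then east again, `directions([(0, 0), (2, 0), (2, 3), (5, 3)])` yields a left turn followed by a right turn and then arrival.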

I was able to test the navigation system by using a script to send user locations to the web server. This allowed me to verify that the client successfully aligns the user’s position with the path and correctly provides directions to the user. We were also able to briefly test the system on Hamerschlag A level.

The attached video shows the navigation system functioning while using our Raspberry Pi.

I met with my team several times to do further testing of our localization system in Roberts Hall and Hamerschlag A. I assisted by measuring the dimensions of the areas in ReH 3 that the user would be traversing, then updating my server with the hallway so that my team could use it for testing.

Is my progress on schedule?

In the past two weeks, I was able to finish the rest of the navigation system (barring any bugs or user interface improvements to be found in further testing). This indicates that I am on track with everything promised on the Gantt chart schedules.

Next week’s deliverables:

This next week I will be testing the localization system with my team to ensure we can create an accurate testing environment representative of the Wiegand Gym. Then, I would like to test the navigation system.

This week’s prompt:

I have taken 17-437 (web application development) before, so I have a working knowledge of Django. However, prior to this project, I had never worked with WebSockets. To achieve low latency between the tag and the user’s browser, WebSockets were necessary (decreasing latency from >200 ms to around 67 ms). I had to learn how to integrate WebSockets into my Django project using Django Channels, as well as how to set up the Redis server that serves the requests. Most of the knowledge acquisition relied on reading the Django Channels documentation, which had several useful examples to help me get started on creating a channel layer for the application. Then, I needed to learn how to configure a Redis server on the EC2 instance to host the channel layer. The Channels documentation again proved to be useful guidance for deciding what was necessary. I also found some StackOverflow forum posts that gave me a better idea of what the various configuration settings I was playing with could do.
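For reference, a Redis-backed channel layer is typically configured in the Django settings along these lines. This assumes the `channels` and `channels_redis` packages and a Redis server listening on the default port 6379 on the same EC2 instance; the host, port, and the `myproject` module name are placeholders, not our actual configuration.

```python
# settings.py (excerpt). Assumes Django Channels with the channels_redis backend.
# "myproject" is a placeholder for the actual Django project module.
ASGI_APPLICATION = "myproject.asgi.application"

CHANNEL_LAYERS = {
    "default": {
        # Redis-backed channel layer so WebSocket consumers can exchange messages.
        "BACKEND": "channels_redis.core.RedisChannelLayer",
        "CONFIG": {
            # Redis on the same EC2 instance, default port (placeholder values).
            "hosts": [("127.0.0.1", 6379)],
        },
    },
}
```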

Weelie’s Status Report for 4/20/2024

- What did you personally accomplish this week on the project?

Last week, I worked on optimizing the localization algorithm and distance measurement methods to reduce lag.

First, I tested on the Raspberry Pi to check performance. I found that even though the algorithms are much faster, the lag on the Raspberry Pi was still very obvious. The more important cause of the lag turned out to be the tag’s built-in stationary detection, which blocked measurements even when walking at a normal speed. I changed the detection threshold, and now it works smoothly on the Raspberry Pi. Another optimization I made was related to PySerial: the Raspberry Pi was consuming the PySerial input buffer too slowly. I changed the code so that the Raspberry Pi reads only the latest input line instead of reading the oldest line in the buffer like a FIFO queue.
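The “read only the latest line” change can be sketched as below. `LatestLineReader` is a hypothetical helper, not our actual code; it relies only on the `in_waiting` property and `read(size)` method that pySerial’s `serial.Serial` provides, so it can also be exercised with a stand-in object. It assumes the tag prints newline-terminated ASCII lines.

```python
class LatestLineReader:
    """Drain everything waiting on a serial port and keep only the newest line."""

    def __init__(self, port, newline=b"\n"):
        self.port = port          # e.g. a serial.Serial instance
        self.newline = newline
        self._partial = b""       # bytes after the last newline, kept for next call

    def latest(self):
        """Return the most recent complete line (decoded, stripped), or None."""
        waiting = self.port.in_waiting
        if waiting:
            self._partial += self.port.read(waiting)
        # Everything before the final newline is complete; the tail may be partial.
        *complete, self._partial = self._partial.split(self.newline)
        for raw in reversed(complete):  # newest complete line first
            line = raw.strip()
            if line:
                return line.decode("ascii", errors="replace")
        return None
```

Older complete lines are discarded on purpose, so the distances handed to the localization code are never more than one read behind; a trailing partial line is kept and completed on the next call.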

I also contributed to the Final Presentation slides and related testing. After doing the general testing listed on the slides with my teammates, I tested the whole system at a larger scale (all of Roberts Hall floor B). I found that as the tag gets closer to an area where it must switch between anchors, it becomes unstable.

Github Repo: https://github.com/weelieguo/18500-dw1001

- Is your progress on schedule or behind?

Based on the schedule, I should still be working on optimizing and testing the localization system, so I am on schedule.

- What deliverables do you hope to complete in the next week?

This week, we found that the localization system becomes unstable when it enters areas where it needs to switch between multiple anchors. I will try to resolve this problem over the next week.

- What did I learn during the process?

I learned how to use Embedded Studio to write and debug code for the DWM1001. I learned to consult the forum and the user manual (development kit) to find useful information. On the forum, I can read other users’ posts on a variety of topics. In our case, the Qorvo forum is where I gained information, such as how to do multithreading with the DWM1001.

Ifeanyi’s Status Report for 04/06/24

This week, I worked on tweaking various aspects of the project for optimal performance ahead of the interim demo. After the demo, I worked on integrating IMU data into the localization process, as well as coming up with several other measures for increasing the speed and accuracy of our localization over the coming weeks. Our former IMU could measure acceleration in all 3 dimensions but rotation about only 2 of the 3 axes. Without the last axis of rotation, we cannot subtract gravity from the acceleration readings and therefore cannot integrate our position from a known starting point, something that would be very useful as a form of sensor fusion with our UWB localization. This week I ordered a new IMU (which measures all 3 axes of rotation), soldered it up, and coded it to perform this exact location integration.

With these developments, I am currently on schedule, as I am now in the final loop of testing and implementing changes to improve the accuracy and speed seen in our test results.

Next week, I plan to test this new location integration with the new IMU. I also plan to implement a hand-written, gradient descent-based multilateration algorithm, which should be significantly faster than the existing TensorFlow version. Additionally, I plan to make this gradient descent progressive, so that it can refine its guess over multiple “frames” and even adjust its estimate when only receiving readings from 2 anchors. This should make the system more robust to signals from individual anchors periodically “dropping out”.
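A minimal sketch of 2-D gradient-descent multilateration with a warm start, minimizing the sum of squared range residuals. The learning rate, iteration count, and the name `multilaterate_gd` are assumptions for illustration, not tuned values from our system. Passing the previous frame’s estimate as `guess` is what lets the estimate be refined across frames; with only 2 anchors the two range circles generally meet at two mirror-image points, and the warm start decides which one the descent settles on.

```python
import math


def multilaterate_gd(anchors, distances, guess, lr=0.5, iters=200):
    """Minimize sum_i (||p - a_i|| - d_i)^2 over p = (x, y) by gradient descent.

    anchors: list of (x, y) anchor positions in meters.
    distances: measured tag-to-anchor ranges in meters (same order).
    guess: starting point, e.g. the previous frame's estimate.
    """
    x, y = guess
    n = len(anchors)
    if n == 0:
        return x, y
    for _ in range(iters):
        gx = gy = 0.0
        for (ax, ay), d in zip(anchors, distances):
            dx, dy = x - ax, y - ay
            r = math.hypot(dx, dy)
            if r < 1e-9:
                continue  # gradient undefined exactly at an anchor
            residual = r - d
            # d/dp (r - d)^2 = 2 (r - d) (p - a) / r
            gx += 2.0 * residual * dx / r
            gy += 2.0 * residual * dy / r
        # Averaging over anchors keeps the step size stable for 2 to 4 anchors.
        x -= lr * gx / n
        y -= lr * gy / n
    return x, y
```

Per frame, one would typically call this with the previous estimate and a small `iters`, so each new set of readings nudges the estimate rather than solving from scratch.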