Significant Risks

This week we spent a significant amount of time testing the localization system for our interim demo. We were able to demonstrate the system’s accuracy, but its update frequency when running on the Raspberry Pi is insufficient. Going forward, one of our main goals is to continue testing on the Raspberry Pi and to increase the update frequency. We have already made several attempts to refactor the code to replace our PyTorch-based gradient descent with alternatives, and have seen some performance improvements. However, these gains may have come at the cost of accuracy, so further optimization is needed. We would also like to try offloading some of the computation onto the webserver to see whether it is powerful enough to handle part of the workload.
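This report does not describe the localization model, so the following is only a hedged illustration of what a non-iterative alternative to gradient descent can look like. It assumes, hypothetically, that the localizer estimates a 2D position from range measurements to fixed anchors at known coordinates. Under that assumption, subtracting the first range equation from the others gives a linear system that `numpy.linalg.lstsq` solves in closed form with no iteration. The name `trilaterate` and the anchor setup are illustrative, not part of our codebase.

```python
import numpy as np


def trilaterate(anchors: np.ndarray, distances: np.ndarray) -> np.ndarray:
    """Closed-form 2D position estimate from ranges to known anchors.

    Hypothetical model: `anchors` is an (n, 2) array of anchor coordinates
    (n >= 3, not all collinear) and `distances` holds the range to each one.
    """
    x0, y0 = anchors[0]
    d0 = distances[0]
    # (x - xi)^2 + (y - yi)^2 = di^2, minus the same equation for anchor 0,
    # gives one linear equation in (x, y) per remaining anchor i.
    A = 2.0 * (anchors[1:] - anchors[0])
    b = (d0**2 - distances[1:] ** 2
         + np.sum(anchors[1:] ** 2, axis=1) - (x0**2 + y0**2))
    # Least squares copes with more than three anchors and noisy ranges.
    position, *_ = np.linalg.lstsq(A, b, rcond=None)
    return position
```

Each update then costs one small least-squares solve instead of many gradient steps. The linearization also weights noisy ranges differently than a direct nonlinear fit does, which is one way a speedup like this can trade away accuracy.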

Changes to System Design

Originally, we assumed that an accelerometer and gyroscope would be sufficient for determining the user’s orientation, but Ifeanyi’s testing showed that adding a magnetometer would make it more accurate. We therefore acquired a new IMU that also includes a magnetometer. We hope this chip will provide more useful readings than our previous one, improving both the IMU orientation estimate and any location prediction that relies on the IMU. The rest of the system design is unchanged.
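As a hedged sketch of why the magnetometer helps: a gyroscope only measures rotation rate, so integrated yaw drifts over time, while a magnetometer gives an absolute heading once the accelerometer’s gravity reading is used to correct for tilt. The function below implements the standard tilt-compensated compass equations (as in NXP/Freescale application note AN4248). It assumes body axes with x forward, y right, and z down, an accelerometer that reads +1 g on z when lying flat, already-calibrated magnetometer readings, and no declination correction. Our new IMU’s axis conventions may differ, so the signs would need to be checked against its datasheet.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def tilt_compensated_heading(accel: Vec3, mag: Vec3) -> float:
    """Heading in degrees clockwise from magnetic north, in [0, 360).

    Assumes x-forward, y-right, z-down body axes, an accelerometer that
    reads (0, 0, +1 g) when flat, and hard/soft-iron-calibrated mag data.
    """
    ax, ay, az = accel
    mx, my, mz = mag
    roll = math.atan2(ay, az)
    # The denominator equals sqrt(ay^2 + az^2) >= 0, so pitch stays in [-90, 90] degrees.
    pitch = math.atan2(-ax, ay * math.sin(roll) + az * math.cos(roll))
    # De-rotate the magnetic field vector into the horizontal plane.
    by = mz * math.sin(roll) - my * math.cos(roll)
    bx = (mx * math.cos(pitch)
          + my * math.sin(pitch) * math.sin(roll)
          + mz * math.sin(pitch) * math.cos(roll))
    return math.degrees(math.atan2(by, bx)) % 360.0
```

With the device flat and pointing north, the field lies along +x and the heading is 0; rolling the device does not change the result, which is exactly what a plain two-axis compass gets wrong.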

Schedule

This week we worked on setting up the interim demo and on further improvements to the localization system, and we started on the navigation system. Next week, Weelie will work on improving the navigation system, doing more testing on the RPi, and increasing the frequency of position updates. Ifeanyi will work on the RPi, programming the IMU to obtain rotation data. Jeff will continue working on the navigation system so that it can provide the user with navigation directions.

Progress

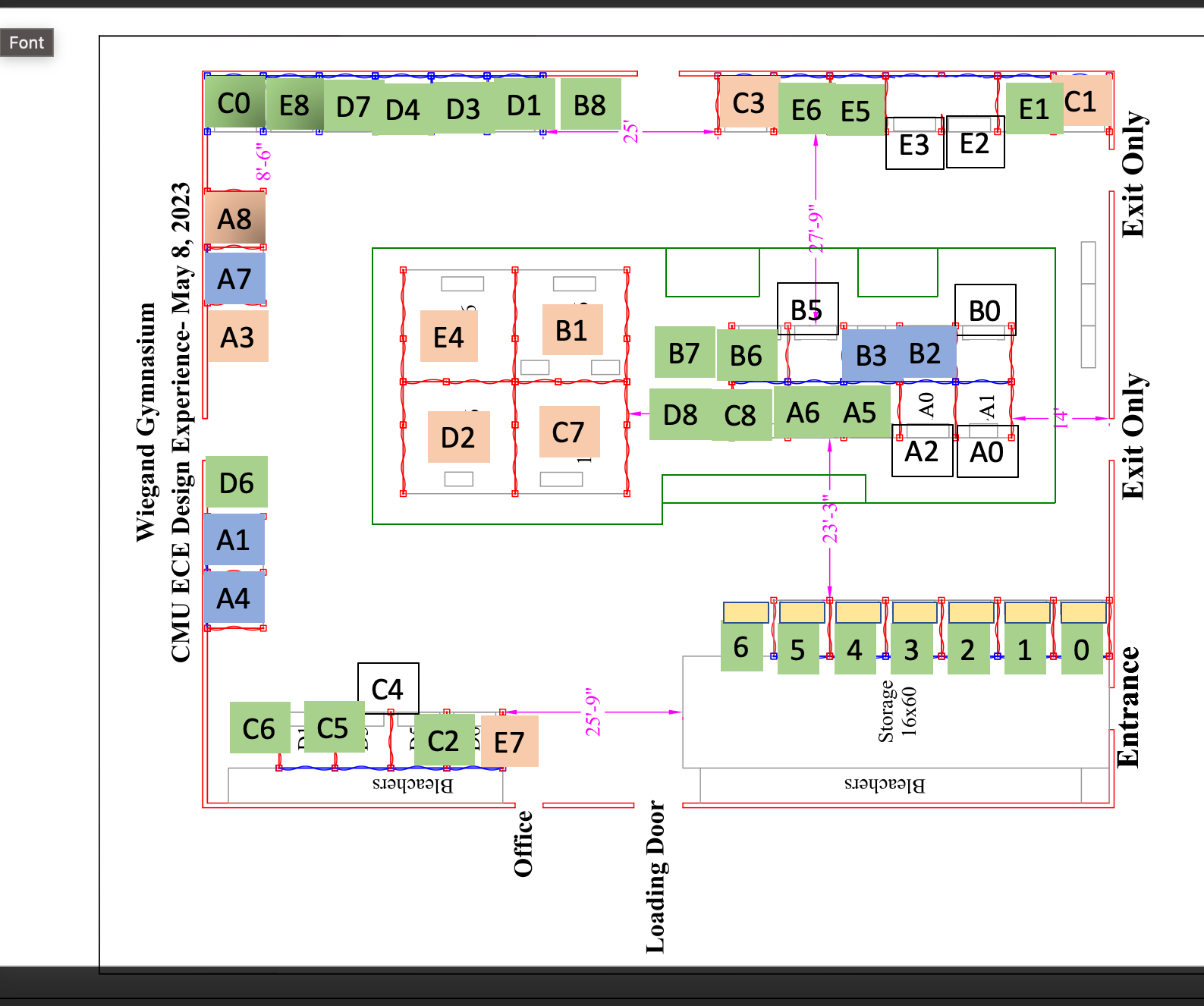

Here is a video of the initial portion of our navigation system. In this demo, the webserver runs on my local machine while a script sends in “dummy data” for the user’s position. Entering a destination room in the search bar sends an asynchronous request to the server, which responds with the path the user should take; that path is then drawn on the user’s map.
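For anyone reproducing the demo, here is a minimal sketch of a dummy-position script; it is not the actual script used in the video. It interpolates points along a list of hallway waypoints and POSTs each one as JSON at a fixed rate. The endpoint URL, the JSON field names (`x`, `y`, `t`), and both function names are hypothetical and would need to match whatever route our webserver actually exposes.

```python
import json
import math
import time
import urllib.request
from typing import Iterator, List, Tuple

Point = Tuple[float, float]


def dummy_positions(waypoints: List[Point], step: float) -> Iterator[Point]:
    """Yield points roughly `step` apart along straight segments between waypoints."""
    for (x0, y0), (x1, y1) in zip(waypoints, waypoints[1:]):
        n = max(1, int(math.hypot(x1 - x0, y1 - y0) // step))
        for i in range(n):
            t = i / n
            yield (x0 + t * (x1 - x0), y0 + t * (y1 - y0))
    yield waypoints[-1]  # finish exactly on the last waypoint


def send_positions(url: str, waypoints: List[Point],
                   step: float = 0.5, hz: float = 2.0) -> None:
    """POST each dummy position to a (hypothetical) webserver endpoint at `hz`."""
    for x, y in dummy_positions(waypoints, step):
        body = json.dumps({"x": x, "y": y, "t": time.time()}).encode()
        req = urllib.request.Request(
            url, data=body, headers={"Content-Type": "application/json"}, method="POST"
        )
        with urllib.request.urlopen(req) as resp:
            resp.read()
        time.sleep(1.0 / hz)
```

A call such as `send_positions("http://localhost:5000/position", [(0, 0), (10, 0), (10, 5)])` (hypothetical URL and coordinates) would walk the simulated user down one hallway and around a corner.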

Video Link

Validation

We need to run several validation tests to ensure that the system functions correctly and satisfies our clients’ use case requirements.

Firstly, we need to ensure that the localization system is accurate and provides timely updates. We want the localization error to be within one meter on average inside a building. We will measure this by standing at known positions in a hallway and computing the distance between the position reported by the localization system and the user’s actual position. This accuracy should be sufficient to keep the user from getting lost in the building, and it also reduces fluctuations in the user’s displayed position.
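A hedged sketch of how the hallway measurements could be scored: each trial records the reported position and the true position in a shared 2D map frame (assumed here to be in meters), and the per-trial error is the Euclidean distance between them. The function names are illustrative.

```python
import math
from statistics import mean
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def localization_errors_m(estimated: Sequence[Point], actual: Sequence[Point]) -> List[float]:
    """Euclidean error in meters between each reported and true position."""
    if len(estimated) != len(actual):
        raise ValueError("each estimate needs a matching ground-truth point")
    return [math.dist(e, a) for e, a in zip(estimated, actual)]


def passes_accuracy_target(estimated: Sequence[Point], actual: Sequence[Point],
                           target_m: float = 1.0) -> bool:
    """True if the mean error is within target_m (one meter in our requirements)."""
    return mean(localization_errors_m(estimated, actual)) <= target_m
```

Keeping the per-trial errors, not just the mean, also shows whether a single bad spot in the hallway is dragging the average up.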

Additionally, we want position updates to happen smoothly at a frequency greater than 2 Hz, without too much delay between the user’s physical movements and the corresponding updates on the server. These tests will require more coordination among all team members, because the results depend on how long both the localization system and the user front end take to run their algorithms. As long as we can record accurate timestamps for the start and end of each communication, we should be able to obtain these measurements.

We also need to make sure that the lag is less than 1 second. We will verify this by marking two points, a start point and an end point, and walking from the start point to the end point. After we reach the end point, our position on the map should update accordingly within 1 second. We will repeat this test at different walking speeds to cover different situations.
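Once the timestamps are logged, the frequency and lag checks reduce to simple arithmetic. The helpers below are a hedged sketch: they assume all timestamps are in seconds and come from synchronized clocks (for example, both devices running NTP), and the function names are our own illustration. Reporting the largest gap alongside the average rate is useful because an average above 2 Hz can still hide a multi-second stall.

```python
from typing import List, Sequence, Tuple


def update_rate_hz(timestamps: Sequence[float]) -> float:
    """Average rate of position updates, given their arrival times in seconds."""
    if len(timestamps) < 2:
        raise ValueError("need at least two updates to measure a rate")
    return (len(timestamps) - 1) / (timestamps[-1] - timestamps[0])


def max_gap_s(timestamps: Sequence[float]) -> float:
    """Longest interval between consecutive updates (a stall detector)."""
    return max(b - a for a, b in zip(timestamps, timestamps[1:]))


def latencies_s(events: Sequence[Tuple[float, float]]) -> List[float]:
    """Lag for each (time the user reached a point, time the map showed it) pair."""
    return [shown - reached for reached, shown in events]


def meets_targets(timestamps: Sequence[float],
                  events: Sequence[Tuple[float, float]],
                  min_rate_hz: float = 2.0,
                  max_lag_s: float = 1.0) -> bool:
    """True if the average rate exceeds min_rate_hz and every lag is below max_lag_s."""
    return (update_rate_hz(timestamps) > min_rate_hz
            and max(latencies_s(events)) < max_lag_s)
```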

Furthermore, we need to test our navigation algorithms. We can do this by benchmarking them on example graphs with known shortest paths and checking that our solution recommends the same paths; the function should always return the optimal path. Additionally, if the nodes of our graph consist only of walkable space on our map (that is, no walls or other fixtures), then every returned path is guaranteed to be walkable by the user.
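A minimal sketch of such a benchmark, assuming (hypothetically) that the walkable map is stored as a 4-connected grid in which `#` marks walls and every other cell is walkable. It uses A* with a Manhattan-distance heuristic, which never overestimates the remaining cost for unit-cost moves and therefore returns a shortest path. The grid encoding and the name `astar` are illustrative, not our actual map format.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def astar(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path from start to goal, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        # Manhattan distance: admissible and consistent for unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap: List[Tuple[int, int, Cell]] = [(h(start), 0, start)]
    came_from: Dict[Cell, Cell] = {}
    g_cost: Dict[Cell, int] = {start: 0}
    while open_heap:
        _, g, cur = heapq.heappop(open_heap)
        if cur == goal:
            # Walk parent links back to the start, then reverse.
            path = [cur]
            while cur in came_from:
                cur = came_from[cur]
                path.append(cur)
            return path[::-1]
        if g > g_cost[cur]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cur
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            # Only walkable cells become nodes, so paths never cross walls.
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                ng = g + 1
                if ng < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = ng
                    came_from[nxt] = cur
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None
```

For example, on the grid `["....", ".##.", "...."]` the shortest route from `(1, 0)` to `(1, 3)` must go around the wall in 5 moves, so a benchmark can assert that the returned path has exactly 6 cells, starts and ends at the right cells, and touches no `#`.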

Lastly, we need to validate our directions and the user experience. To do this, we will test our app several times with different starting locations and destinations to ensure that the real-time directions always match the path recommended by A*. For the user experience, we will survey a few participants to see whether the app fulfills its use case statement: given only the purpose of the product, can a new user pick up the device and navigate successfully to their destination? We will also ask them about the quality of the directions and the user experience to identify possible improvements to the interface.
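One way to automate the directions check is to compress the A* path into turn-by-turn steps and then replay those steps from the start, confirming that they retrace exactly the same cells. This sketch assumes, hypothetically, a 4-connected grid path of `(row, col)` cells with rows increasing downward, as on a screen map; our real direction format may differ, and the step labels are illustrative.

```python
from typing import List, Tuple

Cell = Tuple[int, int]
Step = Tuple[str, int]


def path_to_directions(path: List[Cell]) -> List[Step]:
    """Compress a grid path into ('straight', n), ('left', 0), ('right', 0), ('u-turn', 0)."""
    steps: List[Step] = []
    prev = None
    for (r0, c0), (r1, c1) in zip(path, path[1:]):
        d = (r1 - r0, c1 - c0)
        if prev is not None and d != prev:
            # Cross product in (row-down, col-right) coordinates: > 0 means a left turn.
            cross = prev[0] * d[1] - prev[1] * d[0]
            if cross > 0:
                steps.append(("left", 0))
            elif cross < 0:
                steps.append(("right", 0))
            else:
                steps.append(("u-turn", 0))
        if steps and steps[-1][0] == "straight" and d == prev:
            steps[-1] = ("straight", steps[-1][1] + 1)
        else:
            steps.append(("straight", 1))
        prev = d
    return steps


def replay(start: Cell, heading: Tuple[int, int], steps: List[Step]) -> List[Cell]:
    """Follow directions from start, initially facing `heading`; return visited cells."""
    (r, c), (dr, dc) = start, heading
    cells = [start]
    for kind, n in steps:
        if kind == "left":
            dr, dc = -dc, dr
        elif kind == "right":
            dr, dc = dc, -dr
        elif kind == "u-turn":
            dr, dc = -dr, -dc
        else:
            for _ in range(n):
                r, c = r + dr, c + dc
                cells.append((r, c))
    return cells
```

If `replay(path[0], first_heading, path_to_directions(path))` does not reproduce `path`, the directions shown to the user have drifted from the route A* recommended.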