What did I do this week?

I spent this week focused on developing the navigation system and testing its basic functionality, as well as working with my team to test the localization system over larger areas.

For the navigation system, I built on my progress from the previous week to display instructions and the expected time of arrival to users. I created a mock user interface with a header displaying the direction and a footer displaying the time remaining and the total distance left to travel. I added a button in the footer to switch between the two modes (navigation vs. searching for a location) and then worked on the CSS to improve the graphical interface.

Then, I worked on the frontend to improve the efficiency of the navigation system by offloading as much work as possible to it, so that it would not spam the server with new navigation requests. This involved synchronizing the “path” drawn by the navigation system with either the x or y position of the user. If the user walks in a direction parallel to the current path direction, the path will either “grow” or “shrink” to compensate, so that it continues to show a visual indicator of where the user should go. If the user deviates too far from the path, the frontend calls the navigation algorithm in the backend for updated directions to reroute the user.
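The grow/shrink and reroute decision can be sketched roughly as follows. This is only an illustration of the logic in Python, not the actual frontend code, which runs in the browser. The `Segment` class, the `track_on_segment` function, and the `max_offset` threshold are hypothetical names, and the sketch assumes every path segment is axis-aligned.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """Axis-aligned path segment in map coordinates (hypothetical type)."""
    x1: float
    y1: float
    x2: float
    y2: float

    @property
    def horizontal(self) -> bool:
        return self.y1 == self.y2


def track_on_segment(seg, x, y, max_offset=1.0):
    """Snap the user onto the segment and decide whether to reroute.

    Returns (start, needs_reroute). `start` is the point on the segment
    closest to the user; drawing the path from `start` to the segment end
    makes it grow or shrink as the user walks. `needs_reroute` is True when
    the user has drifted more than `max_offset` away from the segment.
    """
    if seg.horizontal:
        offset = abs(y - seg.y1)              # sideways drift
        lo, hi = sorted((seg.x1, seg.x2))
        along = min(max(x, lo), hi)           # clamp to the segment
        start = (along, seg.y1)
    else:
        offset = abs(x - seg.x1)
        lo, hi = sorted((seg.y1, seg.y2))
        along = min(max(y, lo), hi)
        start = (seg.x1, along)
    return start, offset > max_offset
```

Only when `needs_reroute` comes back `True` would the client need to ask the backend for a new path; every other position update is handled locally.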

Finally, all that was left was to update the backend to provide directions to the user (whether they should turn left, turn right, or have arrived at their destination). This was done by parsing the line segments generated by my A* algorithm to determine which direction the user should turn in with respect to the Cartesian plane. I also needed to add fields to the database model describing the scale of the image, which was calculated from the actual measurements I had previously taken along with the size of the walkable area on the image.
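One common way to get a turn direction from consecutive line segments is the sign of their 2D cross product; the sketch below uses that technique, which may differ from the exact parsing in my backend. The function name `turn_instructions` and the `meters_per_pixel` parameter are hypothetical, and the sketch assumes the y axis points up. For image coordinates, where y points down, "left" and "right" swap.

```python
import math


def turn_instructions(points, meters_per_pixel):
    """Turn a list of (x, y) waypoints into (action, distance_m) steps.

    Each step means: walk `distance_m` meters along the current segment,
    then perform `action` ("left", "right", "straight", or "arrive").
    """
    steps = []
    for i in range(len(points) - 1):
        (x0, y0), (x1, y1) = points[i], points[i + 1]
        # Pixel length of the segment, converted with the stored map scale.
        distance_m = math.hypot(x1 - x0, y1 - y0) * meters_per_pixel
        if i + 2 == len(points):
            action = "arrive"                 # last segment ends at the goal
        else:
            x2, y2 = points[i + 2]
            # z component of the cross product of this segment and the next.
            cross = (x1 - x0) * (y2 - y1) - (y1 - y0) * (x2 - x1)
            if cross > 0:
                action = "left"
            elif cross < 0:
                action = "right"
            else:
                action = "straight"
        steps.append((action, distance_m))
    return steps
```

For example, `turn_instructions([(0, 0), (10, 0), (10, 10)], 0.5)` describes walking 5 m, turning left, and walking another 5 m to arrive.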

I was able to test the navigation system by using a script to provide the user's location to the web server. This allowed me to verify that the client successfully aligned the user’s position with the path and correctly provided directions to the user. We were also able to briefly test the system on the Hamerschlag A level.
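A location-feeding script of this kind could generate its positions along the lines of the sketch below. This is not my actual test script: `simulate_walk` and its parameters are hypothetical, the waypoint list is assumed to be non-empty, and how each position is delivered to the web server is deliberately left out.

```python
import math


def simulate_walk(waypoints, step):
    """Yield (x, y) positions spaced at most `step` apart along a polyline."""
    for (x0, y0), (x1, y1) in zip(waypoints, waypoints[1:]):
        length = math.hypot(x1 - x0, y1 - y0)
        n = max(1, math.ceil(length / step))  # number of sub-steps
        for k in range(n):
            t = k / n                         # fraction along the segment
            yield (x0 + t * (x1 - x0), y0 + t * (y1 - y0))
    yield waypoints[-1]                       # finish exactly on the goal
```

Each yielded position would then be sent to the server in place of a real tag reading, for example once per fixed time interval.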

The attached video shows the navigation system functioning while using our Raspberry Pi.

Video Link

I met with my team several times to do further testing of our localization system in Roberts Hall and Hamerschlag A. I assisted by measuring the dimensions of the areas in ReH 3 that the user would be traversing, then updating my server with the hallway so that my team would be able to use it for testing.

Is my progress on schedule?

In the past two weeks, I was able to finish the rest of the navigation system (barring any bugs or user interface improvements found in further testing). This means I am on track with everything promised on the Gantt chart schedule.

Next week’s deliverables:

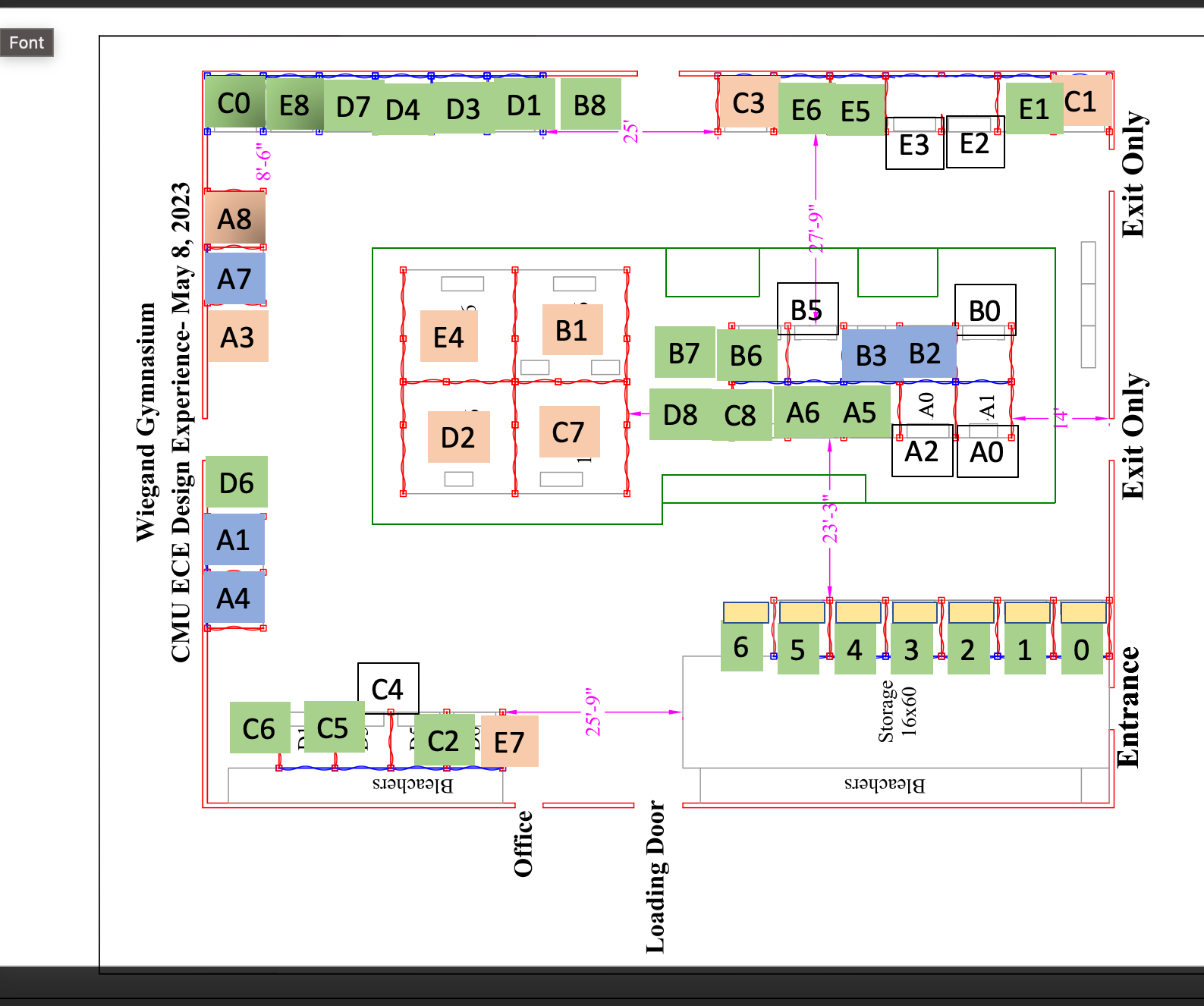

This next week, I will be testing the localization system with my team to ensure we can create an accurate testing environment representative of something similar to Wiegand Gym. Then, I would like to test the navigation system.

This week’s prompt:

I have taken 17-437 (web application development) before, so I have a working knowledge of Django. However, prior to this project, I had never worked with WebSockets. To achieve low latency between the tag and the user’s browser, WebSockets were necessary (decreasing latency from >200 ms to around 67 ms). I had to learn how to integrate Django Websockets with my project, as well as how to set up the Redis server that serves the requests. Most of the knowledge acquisition relied on reading the documentation for Django Websockets, which had several useful examples to help me get started on creating a channel layer for the application. Then, I needed to learn how to configure a Redis server on the EC2 instance to host the Channels layer. The Django Websockets documentation again proved useful as guidance for deciding what was necessary. Finally, I turned to forum posts on Stack Overflow as needed to get a better idea of what the various configuration settings I was experimenting with could do.
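For reference, a Redis-backed channel layer is typically declared in `settings.py` along these lines. This is a minimal sketch that assumes the Django Channels package with the `channels_redis` backend; the host and port are placeholder values for a Redis server running on the same machine, not the project's real settings.

```python
# settings.py fragment (assumes the channels and channels_redis packages)
CHANNEL_LAYERS = {
    "default": {
        # Channel layer backend provided by the channels_redis package.
        "BACKEND": "channels_redis.core.RedisChannelLayer",
        "CONFIG": {
            # Placeholder address: Redis on the same host, default port.
            "hosts": [("127.0.0.1", 6379)],
        },
    },
}
```

With a layer like this in place, WebSocket consumers can pass position updates to one another through Redis instead of the browser polling the server.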