The following courses informed the design principles used in our project:

- 18-290: Fourier Transforms, Frequency-Domain vs. Time-Domain Signals, Signal-to-Noise Ratio.

- 15-112: Object Oriented Programming.

- 17-437: Web Applications.

This week I focused mainly on finding software to help us develop the frequency processor. Last week I found that MATLAB's pitch function would be a useful reference to test our own frequency processor against. Given an audio signal, the function returns the frequencies it detects in that signal, in the order they occur. We can therefore compare its output with ours to determine how accurate our frequency processor is.

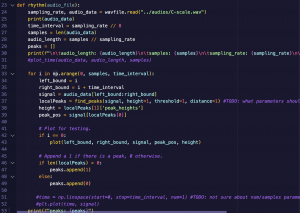
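The comparison against a reference pitch track could be sketched roughly as follows. This is only an illustration under several assumptions that we have not settled yet: both tracks are already aligned frame by frame, unvoiced frames are stored as 0 Hz, and an estimate counts as correct when it lies within 50 cents (half a semitone) of the reference. The names `cents_error` and `pitch_accuracy` are hypothetical helpers, not part of any library.

```python
import math


def cents_error(f_est, f_ref):
    """Signed distance from f_ref to f_est in cents (100 cents = 1 semitone)."""
    return 1200.0 * math.log2(f_est / f_ref)


def pitch_accuracy(estimated, reference, tolerance_cents=50.0):
    """Fraction of reference-voiced frames whose estimate is within the tolerance.

    Assumes both lists hold one frequency in Hz per frame, aligned in time,
    with 0 marking frames where no pitch was detected.
    """
    if len(estimated) != len(reference):
        raise ValueError("both tracks must have the same number of frames")

    # Only score frames where the reference actually reports a pitch.
    voiced = [(e, r) for e, r in zip(estimated, reference) if r > 0]
    if not voiced:
        return 0.0

    hits = sum(
        1
        for e, r in voiced
        if e > 0 and abs(cents_error(e, r)) <= tolerance_cents
    )
    return hits / len(voiced)
```

With a reference track from the pitch function and a track from our own processor, `pitch_accuracy(ours, reference)` gives a single number between 0 and 1 that we could follow from week to week. Measuring the error in cents rather than in Hz keeps the tolerance the same musical size for low and high notes.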

The problem is that we need to write this code in Python, since we are building our web app with Django. At first, I tried a public library called CREPE. However, the library was very hard to install, and I did not succeed. I spent two days trying, but CREPE requires TensorFlow, which caused too much trouble for me as well as for my teammates.

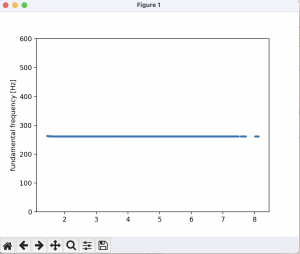

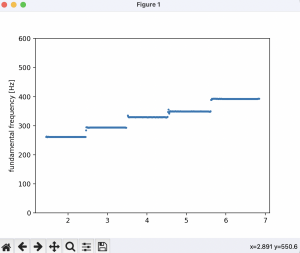

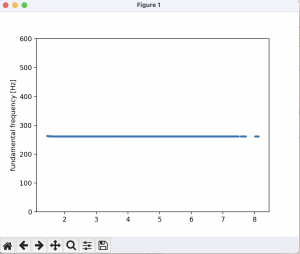

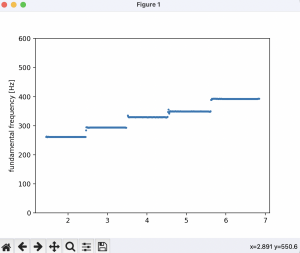

I decided to look for other libraries and found one called Parselmouth. It was easy to install and also provides a pitch function that determines the pitches in an audio file. It appeared to detect the frequencies correctly in a couple of files we had, so we will use it to test our frequency processor.
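A minimal sketch of how a pitch track can be pulled out of an audio file with Parselmouth is shown here. It assumes the library was installed with `pip install praat-parselmouth` and uses the default analysis settings of `to_pitch()`, which may not be the settings we end up with. The helper names `voiced_frames` and `extract_pitch` and the file name in the usage comment are hypothetical.

```python
def voiced_frames(times, freqs):
    """Keep only frames with a detected pitch.

    Parselmouth reports frames without a detected pitch as 0 Hz.
    """
    return [(t, f) for t, f in zip(times, freqs) if f > 0]


def extract_pitch(wav_path):
    """Return (time in s, frequency in Hz) pairs for the voiced frames of a file."""
    # Imported inside the function so voiced_frames can be used on its own.
    import parselmouth

    sound = parselmouth.Sound(wav_path)
    pitch = sound.to_pitch()  # default analysis settings (an assumption)
    return voiced_frames(pitch.xs(), pitch.selected_array["frequency"])


# Example usage (hypothetical file name):
# for t, f in extract_pitch("c_note.wav"):
#     print(f"{t:.2f} s  {f:.1f} Hz")
```

Dropping the 0 Hz frames keeps silence between notes from being counted as wrong frequencies when the result is compared against our own frequency processor.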

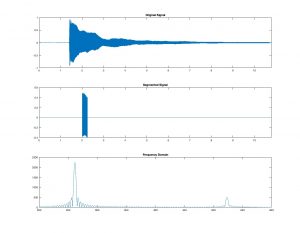

These are the outputs of a C-note and a C-scale:

I have also been working with my teammates on laying out the content for the Design Review and on setting up the database for our Django application.

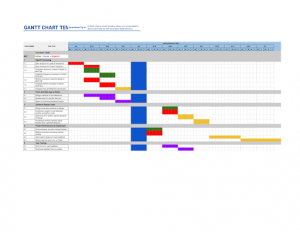

I would say my progress is on schedule. We have made some modifications to our Gantt chart. This upcoming week I will focus on researching how to build the rhythm processor and on preparing to give the Design Review presentation.