What did you personally accomplish this week on the project?

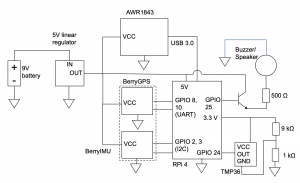

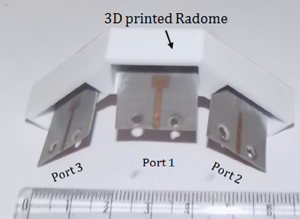

I met with Professor Swarun Kumar and students at CyLab the week before break to discuss and test a 120 GHz radar. Although angular and range resolution improved, the effective range for detecting humans dropped to about a meter, which is well below our use case requirements. A day later, Matthew O’Toole responded that a DCA1000EVM (the green board) was available for our use, so we are switching back to the AWR1843. With the team, I also helped generate labels of the target location in range-azimuth space for the neural network and contributed to the design report.

I set up real-time data streaming directly from the AWR1843 without the green board by reading its serial output. With this setup I collected a small range-azimuth and range-Doppler dataset of me moving at different ranges and azimuths. My motion shows up clearly in the range-Doppler plot, even when I am partially obscured by 1 cm-wide metal bars spaced 5 cm apart, but it makes very little difference in the range-azimuth plot, which only plots zero-Doppler returns. A visualization of part of the dataset is shown below:

However, the data available without the green board is not sufficient for our purposes due to:

- Very low Doppler resolution compared to similar studies in the literature

- No localization of Doppler-shifted returns, only single points for detected objects

- No way to separate the Doppler shifts of returns at the same range but different azimuths
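To put the first limitation in numbers, the sketch below applies the standard FMCW relations: velocity resolution is λ / (2 · N · T_c) and maximum unambiguous velocity is λ / (4 · T_c), where N is the number of chirps per frame and T_c is the chirp repetition period. The 77 GHz carrier, 100 µs chirp period, and chirp counts are illustrative assumptions, not our measured configuration, and the function names are hypothetical.

```python
C = 299_792_458.0  # speed of light in m/s


def velocity_resolution(carrier_hz, num_chirps, chirp_period_s):
    """Smallest resolvable radial-velocity difference, in m/s."""
    wavelength = C / carrier_hz
    return wavelength / (2.0 * num_chirps * chirp_period_s)


def max_unambiguous_velocity(carrier_hz, chirp_period_s):
    """Largest radial speed measurable without Doppler aliasing, in m/s."""
    wavelength = C / carrier_hz
    return wavelength / (4.0 * chirp_period_s)


if __name__ == "__main__":
    carrier_hz = 77e9        # assumed carrier frequency
    chirp_period_s = 100e-6  # assumed chirp repetition period
    for num_chirps in (16, 128):  # assumed chirps per frame
        res = velocity_resolution(carrier_hz, num_chirps, chirp_period_s)
        print(f"{num_chirps:4d} chirps: {res:.3f} m/s per Doppler bin")
    v_max = max_unambiguous_velocity(carrier_hz, chirp_period_s)
    print(f"max unambiguous velocity: {v_max:.2f} m/s")
```

Under these assumptions, going from 16 to 128 chirps per frame makes each Doppler bin eight times narrower, which is the kind of gain we would expect from processing more chirps per frame ourselves.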

Now that we can use the green board, we will collect 3D range-azimuth-Doppler maps, which mitigate these issues and allow us to use a 3D-CNN architecture as originally intended. This avoids the significant information loss we incurred the week before, when we had to reconstruct them from only the range-Doppler and range-azimuth maps, which were the only radar data available in the dataset.
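As a rough illustration of how a range-azimuth-Doppler cube can be formed from raw ADC samples, here is a minimal NumPy sketch that applies one FFT per axis. It assumes the samples are already arranged as a complex array of shape (chirps, receive antennas, samples per chirp) with half-wavelength antenna spacing. Unpacking the capture files and forming the TDM-MIMO virtual array are left out, and all function and parameter names are hypothetical.

```python
import numpy as np


def range_azimuth_doppler_cube(adc, num_angle_bins=64):
    """Return a magnitude cube indexed as [range, azimuth, doppler].

    adc: complex array of shape (num_chirps, num_rx, num_samples).
    """
    num_chirps, _, num_samples = adc.shape

    # Range FFT along the fast-time (samples) axis, with a Hann window.
    range_fft = np.fft.fft(adc * np.hanning(num_samples), axis=2)

    # Doppler FFT along the slow-time (chirps) axis; shift zero Doppler to the center.
    doppler_window = np.hanning(num_chirps)[:, None, None]
    doppler_fft = np.fft.fftshift(
        np.fft.fft(range_fft * doppler_window, axis=0), axes=0
    )

    # Angle FFT across antennas, zero-padded; shift boresight to the center.
    angle_fft = np.fft.fftshift(
        np.fft.fft(doppler_fft, n=num_angle_bins, axis=1), axes=1
    )

    # Reorder (doppler, azimuth, range) -> (range, azimuth, doppler).
    return np.abs(angle_fft).transpose(2, 1, 0)


def simulate_target(num_chirps, num_rx, num_samples,
                    range_bin, doppler_bin, sin_theta):
    """Synthetic single-target data cube, used only to exercise the sketch."""
    n = np.arange(num_samples)               # fast-time sample index
    m = np.arange(num_chirps)[:, None, None]  # chirp index
    k = np.arange(num_rx)[None, :, None]      # antenna index
    phase = 2 * np.pi * (range_bin * n / num_samples
                         + doppler_bin * m / num_chirps
                         + 0.5 * sin_theta * k)
    return np.exp(1j * phase)


if __name__ == "__main__":
    adc = simulate_target(32, 8, 64, range_bin=10, doppler_bin=4, sin_theta=0.25)
    cube = range_azimuth_doppler_cube(adc)
    print(cube.shape)
    print(np.unravel_index(np.argmax(cube), cube.shape))
```

In this layout, `cube[:, :, num_chirps // 2]` is the zero-Doppler range-azimuth slice, comparable to the range-azimuth plot described earlier, while the remaining Doppler bins keep moving returns separated by both range and azimuth.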

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Progress is slightly behind because we did not know whether the green board would be available, which led to extra work characterizing and comparing two different modules. With the green board, real-time raw data is available, so I can iterate more quickly and catch up.

What deliverables do you hope to complete in the next week?

- Set up the AWR1843 with the green board and collect higher-resolution 3D data

- Test the latency of streaming data from all the sensors over Wi-Fi and adjust data rates accordingly

- Obtain a Part 107 drone license