This week, I worked on getting the Jetson to send instructions (in the form of opcodes) to the iRobot to make it move. To do this, we publish linear and angular velocity messages to the command velocity ROS topic, and a driver converts these velocity commands into opcodes that tell the iRobot how to move. I forgot to take a video, but this will be demoed on Monday. The next step is creating the path planning algorithm, so that the command velocity messages are published by the path planner (making the robot autonomous) rather than by keyboard teleoperation as they are now.
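Below is a minimal sketch of the conversion step. It makes a few assumptions. The velocity commands are taken to arrive on the conventional `/cmd_vel` topic as `geometry_msgs/Twist` messages, where only `linear.x` (m/s) and `angular.z` (rad/s) matter for a differential-drive base. The output uses the Create 2 Open Interface "Drive Direct" command: opcode 145, followed by the right and then left wheel velocities as signed 16-bit big-endian mm/s, each limited to ±500. The 235 mm wheel separation is an assumed value to verify against the manual. The function names are hypothetical, and this is not necessarily how our driver is written.

```python
import struct

DRIVE_DIRECT = 145       # Create 2 OI "Drive Direct" opcode
MAX_WHEEL_MM_S = 500     # OI limit for each wheel velocity (mm/s)
WHEEL_BASE_MM = 235.0    # assumed Create 2 wheel separation; verify on your robot


def _clamp(x):
    """Round to an integer and clamp to the wheel velocity range the OI accepts."""
    return max(-MAX_WHEEL_MM_S, min(MAX_WHEEL_MM_S, int(round(x))))


def twist_to_wheel_speeds(linear_m_s, angular_rad_s, wheel_base_mm=WHEEL_BASE_MM):
    """Differential-drive kinematics: (v, omega) -> (right, left) wheel speeds in mm/s.

    Positive angular velocity is counterclockwise (a left turn), so the
    right wheel must spin faster than the left.
    """
    v_mm_s = linear_m_s * 1000.0
    half_track = wheel_base_mm / 2.0
    right = v_mm_s + angular_rad_s * half_track
    left = v_mm_s - angular_rad_s * half_track
    return _clamp(right), _clamp(left)


def drive_direct_packet(linear_m_s, angular_rad_s):
    """Build the bytes for one Drive Direct command from a Twist-style command."""
    right, left = twist_to_wheel_speeds(linear_m_s, angular_rad_s)
    # Opcode byte, then right and left velocities as signed 16-bit big-endian values.
    return struct.pack(">Bhh", DRIVE_DIRECT, right, left)
```

In a ROS node, a subscriber callback would pass `msg.linear.x` and `msg.angular.z` to `drive_direct_packet()` and write the result to the robot's serial port. This sketch uses Drive Direct rather than Drive (velocity plus turning radius) because (v, ω) maps directly onto wheel velocities, with no special cases for straight-line motion or turning in place.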

Jai Madisetty’s Status Report for 4/2

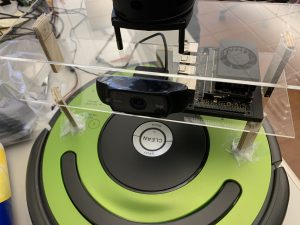

This week, our main goal was to get a working demo ready for the coming week. My job for this week was constructing the mounting for all the devices on the iRobot. We decided we needed 2 levels of mounting, since the LIDAR would need its own level since it operates in a 360 degree space. Thus, I set the LIDAR on the very top and then set the NVIDIA Jetson Xavier and the webcam on the 2nd level. Due to delays in ordering standoffs and the battery pack, we have a very barebones structure of the mounting. Here’s a picture:

Once the standoffs arrive, we will modify the laser-cut acrylic to include screw holes so that the mounting is sturdy and secure. This week, I plan to get path planning working with CV and object recognition.

Keshav Sangam’s Status Report for 3/27/2022

This week I worked on SLAM for the robot. On ROS Melodic, I installed the Hector SLAM package. There are a few problems with testing its efficacy; notably, its map building assumes that the LIDAR is held at a constant height while moving through the world. The next step is to build a mount on the Roomba for all the components so that we can actually test Hector SLAM. On top of this, I have looked into IMUs as a potential option for sensor fusion for localization. By feeding the iRobot’s wheel encoders and a 6-DoF IMU into a fusion algorithm such as an Extended Kalman Filter (EKF) or Unscented Kalman Filter (UKF), we could potentially improve the robot’s localization accuracy substantially. However, bumps or dips in the driving surface may cause localization errors to propagate through the SLAM algorithm because of the constant-height assumption mentioned above. Once the mount is working, we will measure the current localization accuracy to decide whether the (E/U)KF+IMU is worth it.
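To illustrate the predict/update structure behind this kind of fusion, here is a deliberately simplified sketch. It is a one-state (heading-only) linear Kalman filter, not the full EKF/UKF described above. The yaw rate from the IMU's gyroscope drives the prediction, and a heading computed from the wheel encoders serves as the measurement. That pairing, the class name, and the noise values are all assumptions for illustration. A real setup would track position and velocities as well.

```python
import math


def wrap_angle(angle):
    """Wrap an angle in radians to (-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


class HeadingKalmanFilter:
    """Hypothetical one-state Kalman filter on robot heading (radians).

    predict(): integrate a yaw rate (assumed here to come from the IMU gyro).
    update():  correct with a heading measurement (assumed here to come from
               wheel-encoder odometry).
    """

    def __init__(self, theta0=0.0, var0=1.0, process_var=0.01, meas_var=0.05):
        self.theta = wrap_angle(theta0)
        self.var = var0
        self.process_var = process_var  # uncertainty added per predict step (gyro noise/drift)
        self.meas_var = meas_var        # variance of the heading measurement (wheel slip, etc.)

    def predict(self, yaw_rate, dt):
        # Dead-reckon the heading forward; uncertainty grows.
        self.theta = wrap_angle(self.theta + yaw_rate * dt)
        self.var += self.process_var

    def update(self, heading_meas):
        # Wrap the innovation so that 179 deg vs -179 deg is a 2 deg error, not 358 deg.
        innovation = wrap_angle(heading_meas - self.theta)
        gain = self.var / (self.var + self.meas_var)
        self.theta = wrap_angle(self.theta + gain * innovation)
        self.var = (1.0 - gain) * self.var  # uncertainty shrinks after each measurement
        return gain
```

The gain shows the trade-off the filter makes. When the prediction is uncertain relative to the measurement (large `var`), the estimate moves further toward the measurement. After each update, the variance shrinks.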

Raymond Xiao’s status report for 03/27/22

This week, I helped set up more packages on the NVIDIA Jetson. We are currently using ROS Melodic and are working on installing OpenCV and Python so that we can use the pycreate2 module (https://github.com/MomsFriendlyRobotCompany/pycreate2) on the Jetson. We have been testing Python code on our local machines and it has been working well, but the final system will need to run on the Jetson, which has a different OS and dependencies. We ran into some issues installing everything (we specifically need OpenCV 4.2.0) and are currently working on fixing them. OpenCV 4.2.0 is required since it contains the ArUco module; ArUco markers will be used to stand in for humans. We are using this form of identification since it is fast, rotation invariant, and provides camera pose estimation as well. I think I am on track with my work. For next week, I plan to help finish installing everything we need on the Jetson so that we have a stable environment and can start software development in earnest.

Team Status Report for 3/19

As a team, we feel like we have made good progress this past week (since the iRobot Create 2 arrived). We were able to upload simple code to the iRobot and have it move forwards and backwards with some rotation as well. We were also able to get SLAM uploaded and working well. Here’s a video:

This coming week, we plan on getting the Kinect to interface with the NVIDIA Jetson Xavier. We have decided to use the Kinect for object recognition instead of Bluetooth beacons for path planning. We aim to have this set up and verified by the end of next week.

Jai Madisetty’s Status Report for 3/19

This week, our main goal was to get all three main components interfacing with each other (iRobot Create 2, LIDAR sensor, and NVIDIA Jetson Xavier). My main focus was working with Keshav to get SLAM working on the NVIDIA Jetson Xavier. We were able to get the LIDAR sensor interfacing with the Jetson, and we then used the Jetson with the LIDAR to create a partial map of one of the lab benches. We still have to do more testing, but it appears to be working properly. Scheduling-wise, I may be a bit behind, as I should be close to done with path planning by now. This week, I plan to work with the Kinect to get path planning working with CV and object recognition.

Raymond Xiao’s status report for 03/19/2022

This week, I focused on reading the iRobot Create 2 specification manual, which gives more information on how to program the robot. I also looked more into a GitHub repository that has a package specifically for interfacing with the Create 2 over USB (link: https://pypi.org/project/pycreate2/). I wrote some test code using this library on my local machine and managed to get the robot to move in a simple sequence. For example, to move the robot forward, a Drive command with four data bytes is sent: the first two bytes specify the velocity, and the last two specify the turning radius. To drive straight forward at 100 mm/s, send 100 as the two velocity bytes and then 0x7FFF (a special value meaning "straight") as the two radius bytes. In terms of scheduling, I think I am on track. For this upcoming week, I plan to write code that can also interact with the Roomba’s sensors, since that will be necessary for other parts of the design (such as path planning).
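The byte layout described above can be sketched as follows. This assumes the Create 2 Open Interface values: Start is opcode 128, Safe mode is opcode 131, and Drive is opcode 137. Drive takes a signed 16-bit big-endian velocity in [-500, 500] mm/s followed by a signed 16-bit big-endian radius in [-2000, 2000] mm, with 0x7FFF reserved for "drive straight". The helper name `drive_packet` is hypothetical and not part of pycreate2.

```python
import struct

START = 128        # OI Start opcode (must be sent before other commands)
SAFE = 131         # OI Safe mode opcode
DRIVE = 137        # OI Drive opcode: velocity + turning radius
STRAIGHT = 0x7FFF  # special radius value meaning "drive straight"


def drive_packet(velocity_mm_s, radius_mm=STRAIGHT):
    """Build a Drive command: opcode, then velocity and radius as signed 16-bit big-endian."""
    if not -500 <= velocity_mm_s <= 500:
        raise ValueError("velocity must be in [-500, 500] mm/s")
    if radius_mm != STRAIGHT and not -2000 <= radius_mm <= 2000:
        raise ValueError("radius must be in [-2000, 2000] mm or STRAIGHT")
    return struct.pack(">Bhh", DRIVE, velocity_mm_s, radius_mm)


# Wake the robot, enter Safe mode, then drive straight forward at 100 mm/s.
sequence = bytes([START, SAFE]) + drive_packet(100)
```

Sending `drive_packet(0)` stops the wheels. These bytes would then be written to the robot's serial port, for example with pyserial at the Create 2's default 115200 baud. pyserial is a third-party package and is not used in the sketch itself.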

Jai Madisetty’s Status Report for 2/26

This week, we mainly worked to finalize some design specs and confirm which components we do and do not need. We’ve decided to use CV to detect targets placed throughout our test environment. We scratched our idea of using sensors to detect Bluetooth beacons, as we are no longer dealing with smoke-filled environments.

In addition, when trying to interface the iRobot with the Jetson, the iRobot gave us an error signal and did not power on. As a result, we ordered a newer version of the iRobot Create 2, and we’re waiting on this before we get started with the programming.

Keshav Sangam’s status update for 2/26/2022

This week was spent preparing for the design presentation, figuring out the LIDAR, and starting ROS tutorials. As the presenter, I created a small script for myself to make sure I covered the essential information on Wednesday (see here). Raymond, Jai, and I installed ROS on the Jetson and got the LIDAR working in the ROS visualizer. Once again, videos are not allowed to be uploaded to this website but the design presentation has a GIF showing the LIDAR in action. We also started looking at ROS tutorials to better understand the infrastructure that ROS provides. Finally, we began writing our design report throughout the week. Hopefully we get the feedback from the design presentation soon, so we can incorporate necessary changes and update the report in time for the submission.

Jai Madisetty’s Status Report for 2/19

This week, I mainly worked with Keshav to set up the ROS environment so we can get started with the coding next week. I’ve continued to look into ROS and getting the fundamentals down. I’ve mostly been watching YouTube videos and I also found a 5-day tutorial I aim to complete by the end of this week (https://www.theconstructsim.com/robotigniteacademy_learnros/ros-courses-library/ros-python-course/).

Keshav and I were not able to confirm that we were getting data from LIDAR due to some technical difficulties (explained in his post); however, we aim to get the LIDAR interfacing with the Jetson and Roomba by the end of next week. Things have been moving pretty slowly due to a change of plans in our design (fully scratching the mmWave technology); however, we’ve finalized our design plans and will definitely get going in the coming weeks.