https://drive.google.com/file/d/16BUmcdUff1Xxrdo6RqtjcvHanefeLMUc/view?usp=sharing

(PDF exceeded the maximum upload size).

ECE Capstone Project, Spring 2022: Jai Madisetty, Keshav Sangam, Raymond Xiao

This week, I worked on setting up the testing environment. Due to size constraints in HH and a lack of materials, we decided to use a 10 × 16 ft environment. We modified the design slightly because the robot's large radius makes it difficult to maneuver through a narrow obstacle course. Additionally, I finished the DWA path planning algorithm, modifying some of the logic in the frontier search portion of our code. All that is left now is integrating and tuning the overall system. Because our robot is autonomous, we need to test the system headlessly, which is quite difficult due to network latency. Our SLAM and path planning algorithms are also not exactly synchronized in real time. As a result, we plan on slowing down the robot's movement so it will be easier to test.
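Frontier search operates on the occupancy grid that SLAM produces: a frontier is the boundary between explored free space and unexplored space. The sketch below is a minimal illustration, not our actual code. It assumes the ROS `nav_msgs/OccupancyGrid` value convention (-1 for unknown, 0 to 100 for occupancy probability) and a hypothetical free-space threshold of 50. `find_frontiers` and its `min_size` parameter are hypothetical names.

```python
from collections import deque

# Assumed ROS OccupancyGrid convention: -1 = unknown, 0..100 = occupancy probability.
UNKNOWN = -1
FREE_THRESHOLD = 50  # hypothetical: cells with 0 <= value < 50 are treated as free


def is_free(value):
    return 0 <= value < FREE_THRESHOLD


def find_frontiers(grid, min_size=1):
    """Return [((row, col) centroid, cluster size), ...] for frontier clusters.

    A frontier cell is a free cell with at least one 4-connected unknown
    neighbour. Frontier cells are grouped into 8-connected clusters, and
    clusters smaller than min_size are discarded.
    """
    rows, cols = len(grid), len(grid[0])

    def neighbours(r, c, diag):
        steps = [(-1, 0), (1, 0), (0, -1), (0, 1)]
        if diag:
            steps += [(-1, -1), (-1, 1), (1, -1), (1, 1)]
        for dr, dc in steps:
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols:
                yield nr, nc

    # Step 1: mark every free cell that touches unknown space.
    frontier = {
        (r, c)
        for r in range(rows)
        for c in range(cols)
        if is_free(grid[r][c])
        and any(grid[nr][nc] == UNKNOWN for nr, nc in neighbours(r, c, diag=False))
    }

    # Step 2: group frontier cells into connected clusters with a BFS.
    results, seen = [], set()
    for start in sorted(frontier):  # sorted for deterministic output order
        if start in seen:
            continue
        cluster, queue = [], deque([start])
        seen.add(start)
        while queue:
            cell = queue.popleft()
            cluster.append(cell)
            for nb in neighbours(*cell, diag=True):
                if nb in frontier and nb not in seen:
                    seen.add(nb)
                    queue.append(nb)
        if len(cluster) >= min_size:
            cy = sum(r for r, _ in cluster) / len(cluster)
            cx = sum(c for _, c in cluster) / len(cluster)
            results.append(((cy, cx), len(cluster)))
    return results
```

In a frontier-based exploration loop, the centroid of a chosen cluster (for example, the largest or the nearest) would typically be passed to the local planner as the next goal; `min_size` filters out single-cell noise along the map edge.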

This week, I worked mainly on coding the path planning software. However, due to a problem integrating a SLAM cartographer with ROS, I was not able to take inputs from the SLAM subsystem into consideration, so I also helped Keshav with the SLAM integration. We did eventually get the cartographer working; however, the localization of the robot within the created map is very inaccurate. We plan on using sensor readings from the robot (odometry and possibly the infrared sensors) to improve the localization. After that, I plan on using SLAM inputs (the frontiers of open areas in the map) in path planning to make it more robust.

The bulk of this week was devoted to integration, mainly with SLAM. We faced many linker errors when trying to integrate the Google Cartographer system with our ROS setup. Fortunately, we did end up getting it working, but it ate into a lot of our time this week. Overall, we have individual subsystems working (ArUco marker detection and path planning); however, we have not yet integrated them into the full system, because integrating Google Cartographer with ROS has been the bottleneck. Thus, it is difficult to say when we can start overall system tests, and there may well be a lot of software tweaking to do once the many parts are integrated. We are planning to come into lab tomorrow to work on integration.

This week, our team mainly worked on housing all of our components on the iRobot so that it can run headlessly. Now that the robot is ready with all of its sensors fixed in place, we can start testing Hector SLAM on the robot itself and even try providing odometry data as an input to Hector SLAM to avoid pose estimation errors (the loop closure problem). After that, we can integrate the path planning algorithm and, after any necessary debugging, start testing with a cardboard-box “room” to map. As a team, we are on track with our work.
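Odometry for the Create 2 would start with dead reckoning from its wheel encoders. The sketch below is a minimal, hypothetical illustration using a standard differential-drive model; it is not our code. The constants (508.8 encoder counts per revolution, 72 mm wheel diameter, 235 mm wheel base) are as we understand them from the iRobot Create 2 Open Interface spec and should be checked against it. `encoder_delta` and `update_pose` are hypothetical helpers, and publishing the result to Hector SLAM is not shown.

```python
import math

# Constants as we understand them from the iRobot Create 2 Open Interface spec;
# verify against the spec before relying on them.
COUNTS_PER_REV = 508.8   # encoder counts per wheel revolution
WHEEL_DIAMETER = 0.072   # m
WHEEL_BASE = 0.235       # m, distance between the two drive wheels
METERS_PER_COUNT = math.pi * WHEEL_DIAMETER / COUNTS_PER_REV


def encoder_delta(prev, curr):
    """Signed difference between two readings of a 16-bit counter that wraps."""
    d = (curr - prev) & 0xFFFF
    return d - 0x10000 if d >= 0x8000 else d


def update_pose(pose, prev_counts, curr_counts):
    """Dead-reckon a new (x, y, theta) from (left, right) encoder counts.

    Hypothetical helper using a standard differential-drive model.
    """
    x, y, th = pose
    s_left = encoder_delta(prev_counts[0], curr_counts[0]) * METERS_PER_COUNT
    s_right = encoder_delta(prev_counts[1], curr_counts[1]) * METERS_PER_COUNT
    ds = (s_left + s_right) / 2.0           # distance travelled by the robot centre
    dth = (s_right - s_left) / WHEEL_BASE   # heading change (counter-clockwise positive)
    # Midpoint integration: advance along the average heading over the interval.
    x += ds * math.cos(th + dth / 2.0)
    y += ds * math.sin(th + dth / 2.0)
    th = math.atan2(math.sin(th + dth), math.cos(th + dth))  # wrap to (-pi, pi]
    return x, y, th
```

Dead reckoning drifts over time (wheel slip, carpet, uneven floors), which is why it would serve as a prior for SLAM's scan matching rather than a replacement for it.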

The bulk of the work this past week was dedicated to housing the devices, since getting the right dimensions and parts took some trial and error. I am currently working on the path planning portion of this project. The task is a lot more time-consuming than I imagined, since there are many inputs to consider when coding the path planning algorithm. Since our robot deals solely with unknown indoor environments, I am implementing the DWA (dynamic window approach) algorithm for path planning. If time permits, I would consider looking into D*, which is similar to A* but is “dynamic” in that edge costs can change while the robot is following the plan, and it repairs the existing plan incrementally instead of replanning from scratch.
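The core of DWA is to sample velocity commands reachable within one control period (the dynamic window), forward-simulate each one, discard those that collide or cannot stop in time, and score the rest. The sketch below is a minimal illustration under stated assumptions: a unicycle motion model, obstacles given as (x, y) points in the same frame as the robot pose, and velocity limits, acceleration limits, robot radius, and score weights that are all hypothetical placeholders rather than values tuned for our robot. `simulate` and `dwa_step` are hypothetical names.

```python
import math

# Hypothetical limits and weights for illustration only; not tuned for our robot.
MAX_V, MAX_W = 0.3, 1.0          # max linear (m/s) and angular (rad/s) velocity
MAX_ACC_V, MAX_ACC_W = 0.5, 2.0  # max linear (m/s^2) and angular (rad/s^2) acceleration
ROBOT_RADIUS = 0.17              # m, hypothetical footprint radius


def simulate(x, y, th, v, w, horizon, dt):
    """Forward-simulate a constant (v, w) command with a unicycle model."""
    traj = []
    for _ in range(int(round(horizon / dt))):
        x += v * math.cos(th) * dt
        y += v * math.sin(th) * dt
        th += w * dt
        traj.append((x, y, th))
    return traj


def dwa_step(pose, vel, goal, obstacles, dt=0.1, horizon=1.5,
             samples=11, weights=(1.0, 0.5, 0.2)):
    """Pick the best (v, w) command for one control period.

    pose = (x, y, theta), vel = current (v, w), goal = (x, y),
    obstacles = list of (x, y) points in the same frame as pose.
    """
    x, y, th = pose
    v0, w0 = vel
    # Dynamic window: velocities reachable within one period, clipped to the limits.
    v_lo, v_hi = max(0.0, v0 - MAX_ACC_V * dt), min(MAX_V, v0 + MAX_ACC_V * dt)
    w_lo, w_hi = max(-MAX_W, w0 - MAX_ACC_W * dt), min(MAX_W, w0 + MAX_ACC_W * dt)

    best, best_score = (0.0, 0.0), -math.inf  # stop if nothing is admissible
    for i in range(samples):
        v = v_lo + (v_hi - v_lo) * i / (samples - 1)
        for j in range(samples):
            w = w_lo + (w_hi - w_lo) * j / (samples - 1)
            traj = simulate(x, y, th, v, w, horizon, dt)
            # Clearance: closest approach of the simulated path to any obstacle.
            clearance = min((math.hypot(px - ox, py - oy)
                             for px, py, _ in traj for ox, oy in obstacles),
                            default=math.inf) - ROBOT_RADIUS
            if clearance <= 0.0:
                continue  # collides: not admissible
            if v > math.sqrt(2.0 * MAX_ACC_V * clearance):
                continue  # cannot brake before reaching the nearest obstacle
            fx, fy, fth = traj[-1]
            # Heading term: 1.0 when the final pose points straight at the goal.
            err = math.atan2(goal[1] - fy, goal[0] - fx) - fth
            heading = 1.0 - abs(math.atan2(math.sin(err), math.cos(err))) / math.pi
            score = (weights[0] * heading
                     + weights[1] * min(clearance, 1.0)
                     + weights[2] * v / MAX_V)
            if score > best_score:
                best, best_score = (v, w), score
    return best
```

Calling `dwa_step` once per control period with the latest pose and the current goal (for example, a frontier centroid) yields the next velocity command. The three weights trade off goal-seeking, obstacle clearance, and speed, and they are exactly the kind of parameters that need tuning on the real robot.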

Given that we only have a week left before final presentations, we are planning on getting path planning fully integrated by Wednesday and then running tests through the weekend.

This week, I focused on preparing proper housing for the different components. We are planning on sticking with the same design as the barebones acrylic and tape design we had previously. We are still waiting on the standoffs so we have not yet started fixing components into place. I predict that CADing will be the most time consuming this week.

While waiting for the standoffs to arrive, we are working on getting our webcam integrated and calibrated with the NVIDIA Jetson. We are also planning on starting the path planning implementation this week. I began researching different algorithms that work and integrate well with ROS.

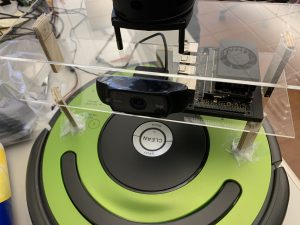

This week, our main goal was to get a working demo ready for the coming week. My job for this week was constructing the mounting for all the devices on the iRobot. We decided we needed 2 levels of mounting, since the LIDAR would need its own level since it operates in a 360 degree space. Thus, I set the LIDAR on the very top and then set the NVIDIA Jetson Xavier and the webcam on the 2nd level. Due to delays in ordering standoffs and the battery pack, we have a very barebones structure of the mounting. Here’s a picture:

Once the standoffs arrive, we will modify the laser-cut acrylic to include screw holes so the mounting will be sturdy and secure. This week, I plan to get path planning working with CV and object recognition.

As a team, we feel we have made good progress this past week (since the iRobot Create 2 arrived). We were able to upload simple code to the iRobot and have it move forward and backward with some rotation. We also got SLAM uploaded and working well. Here’s a video:

This coming week, we plan on getting the Kinect to interface with the NVIDIA Jetson Xavier. We have decided to use the Kinect for object recognition instead of using Bluetooth beacons for path planning. We aim to have this set up and verified by the end of next week as well.

This week, our main goal was to get all three main components (iRobot Create 2, LIDAR sensor, and NVIDIA Jetson Xavier) interfacing with each other. My main focus was working with Keshav to get SLAM running on the NVIDIA Jetson Xavier. We got the LIDAR sensor interfacing with the Jetson and then used the two together to create a partial map of one of the lab benches. We still have to do more testing, but it appears to be working properly. Scheduling-wise, I may be a bit behind, as I should be close to done with path planning by now. This week, I plan to work with the Kinect to get path planning working with CV and object recognition.