In my last update, my main goal was to get Scotty3D to read particle positions from a text file and step through the simulation frames. I fully accomplished this goal this week, and I also gained a good understanding of the scene-loading code in the codebase. I anticipate this will be handy for nice-to-have features if we have the time.

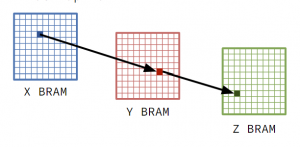

The next major goal for me is to get the FPGA to send the particle position data over UART (serial USB) to Scotty3D and step through the simulation. Once I got the simulation working from the text file, I realized that a real-time demo will be significantly more compelling, since a pre-computed simulation does not give an accurate visual sense of how much the FPGA can accelerate the fluid simulation. To clarify, a real-time demo is not in our MVP, but since Ziyi and Jeremy are still working through build issues, I believe this is the most important task that I can be working on right now.

However, the UART work depends on a working Vitis HLS project, which we do not have yet, so my goal for next week will be to get the Chamfer Distance evaluation script working with the Scotty3D fluid simulation outputs.
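
For reference, here is a minimal sketch of the metric such a script would compute. It assumes the common symmetric form of Chamfer Distance: the mean squared nearest-neighbor distance from each set to the other, summed. Our actual script may use a different variant (unsquared distances, sums instead of means), and the function names here are hypothetical. It is brute force, so it only suits small particle counts.

```python
def nearest_sq_dist(p, cloud):
    """Squared Euclidean distance from point p to its nearest neighbor in cloud."""
    return min(sum((pi - qi) ** 2 for pi, qi in zip(p, q)) for q in cloud)


def chamfer_distance(a, b):
    """Symmetric Chamfer Distance between two point sets (assumed convention:
    mean of squared nearest-neighbor distances in each direction, summed)."""
    if not a or not b:
        raise ValueError("both point sets must be non-empty")
    a_to_b = sum(nearest_sq_dist(p, b) for p in a) / len(a)
    b_to_a = sum(nearest_sq_dist(q, a) for q in b) / len(b)
    return a_to_b + b_to_a


# Toy example: two frames of two particles each (made-up coordinates).
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
simulated = [(0.0, 0.0, 0.0), (1.0, 0.0, 1.0)]
print(chamfer_distance(reference, simulated))  # prints 1.0
```

Identical frames give a distance of 0, so comparing a reference frame against the matching Scotty3D output frame gives a single number that grows as the particle positions drift apart.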

I’ve had a really great two weeks of progress so far. I am a little concerned that build issues are still taking us this long to work through, but since we’ve stripped down Scotty3D to just the core simulation files on the FPGA, I’m feeling OK about us getting the Vitis HLS build completely working in the near future.