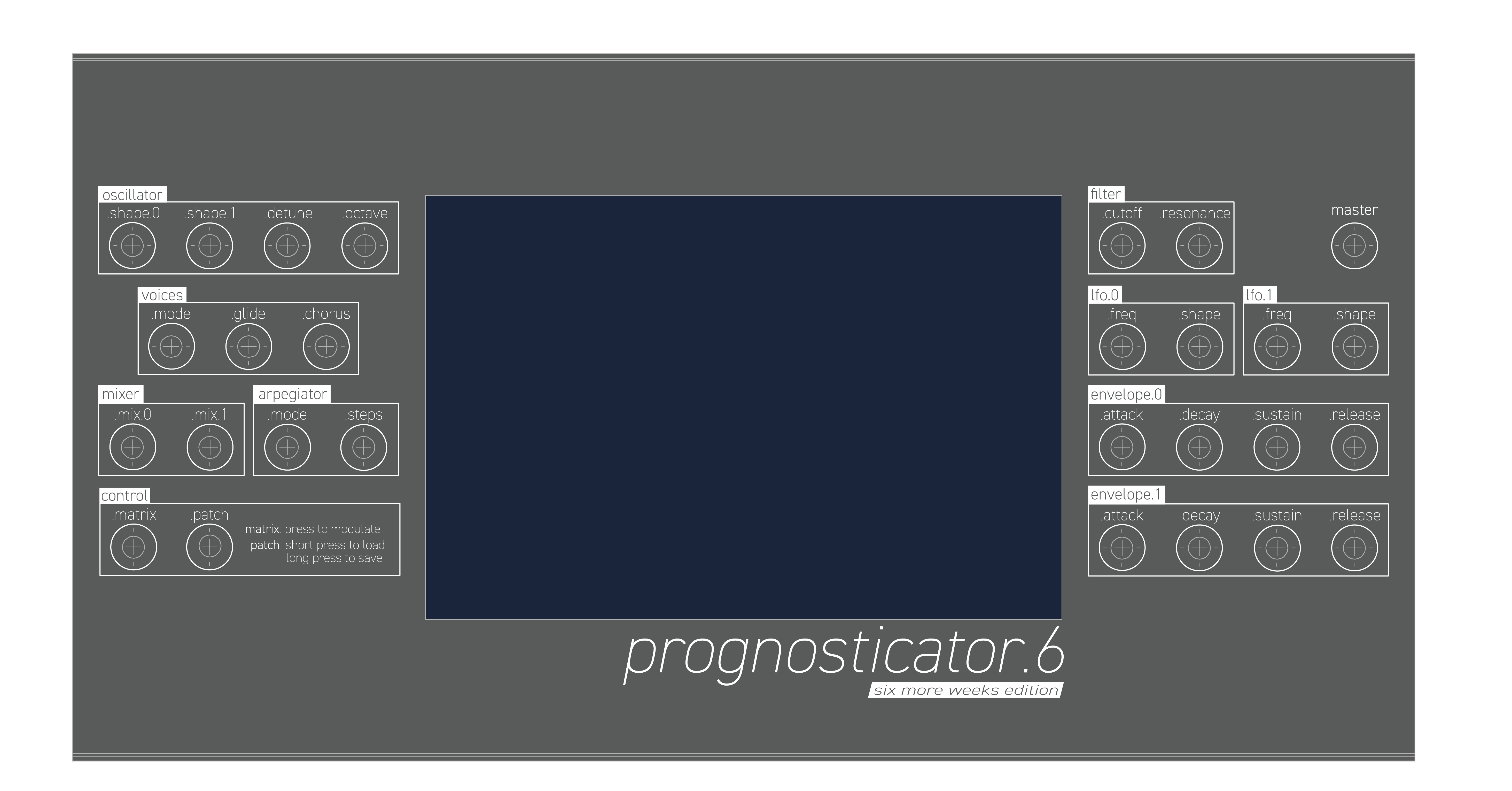

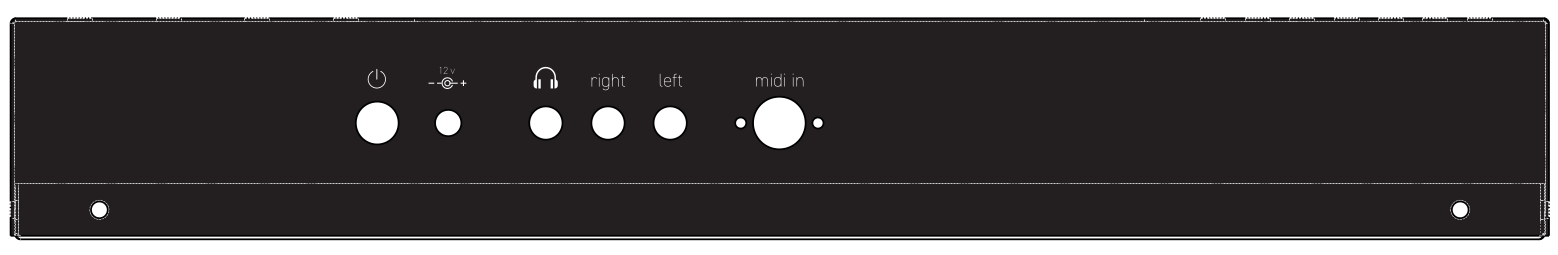

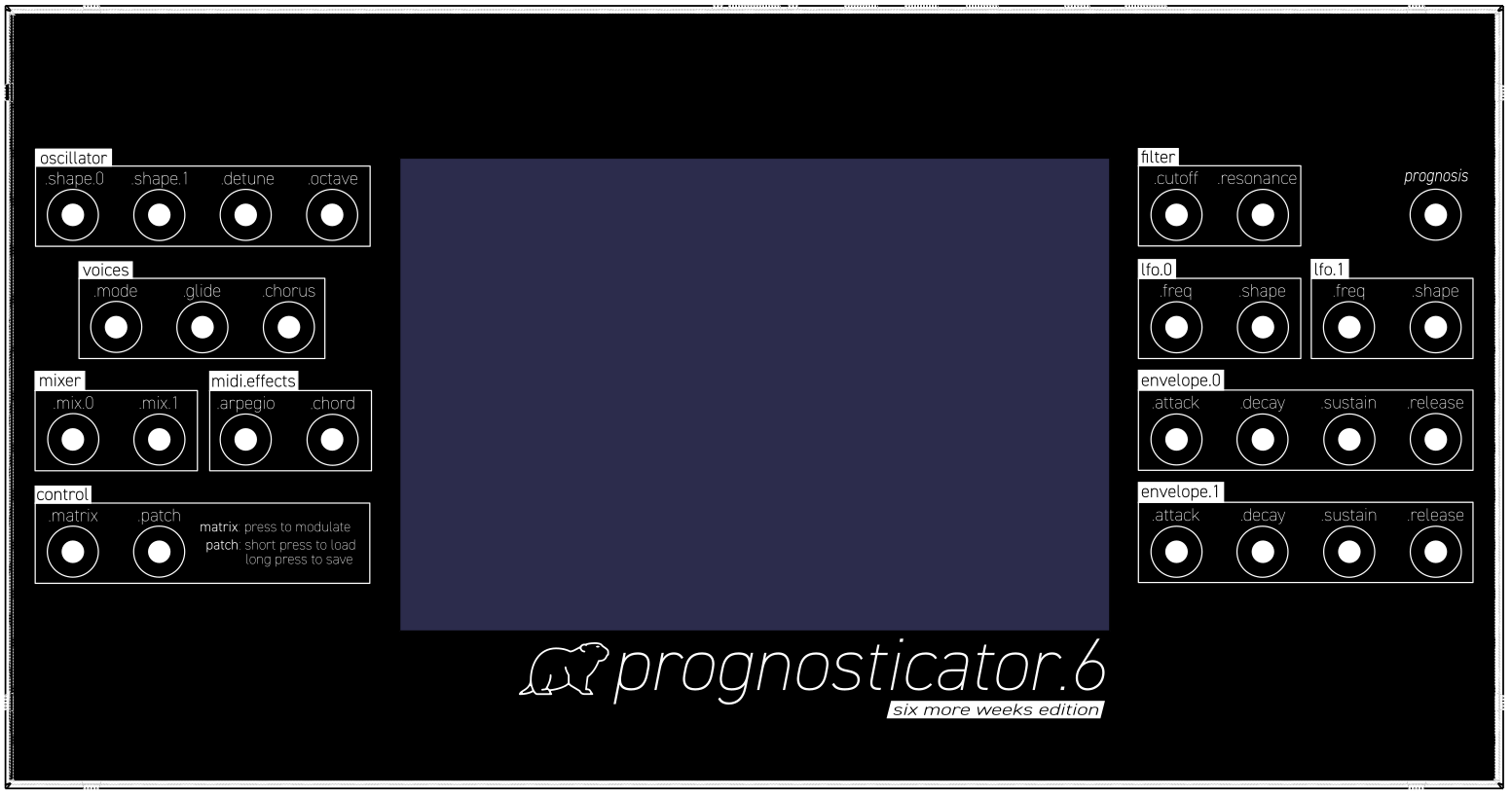

This week I finalized the user interface design for the front panel and began software implementation. That included finalizing the software architecture for the primary computer (Raspberry Pi), the co-processor (STM32), and the interaction protocol between them.

Detailed PDF of the enclosure design: panel_sheetmetal_engraved_textpoly

The design focuses on simplicity and consistency, with an emphasis on sharp corners and circles. It relies on basic shapes that mirror the mechanical design of the enclosure and merge seamlessly with the geometric designs of the actual GUI application.

After production, the cases look like this. They’re black anodized aluminum, so the pattern is simply laser-etched onto them.

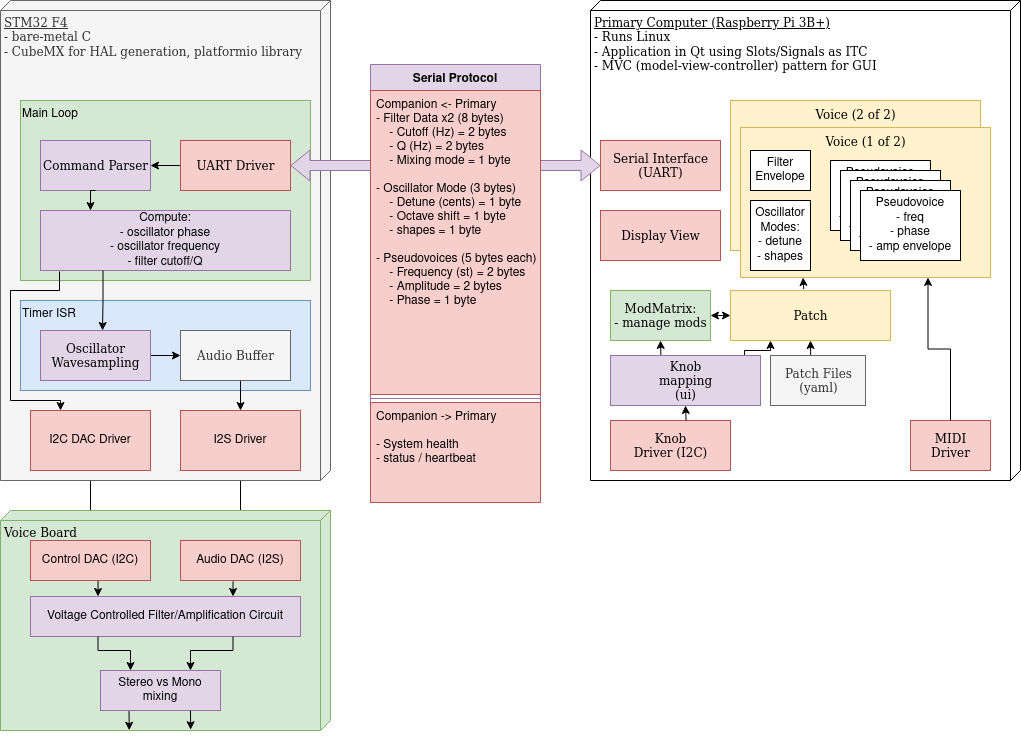

The software architecture is done, and I’m currently implementing the system in C++/Qt. My goal for this week is to produce audio and finish the core software (everything except the GUI itself).

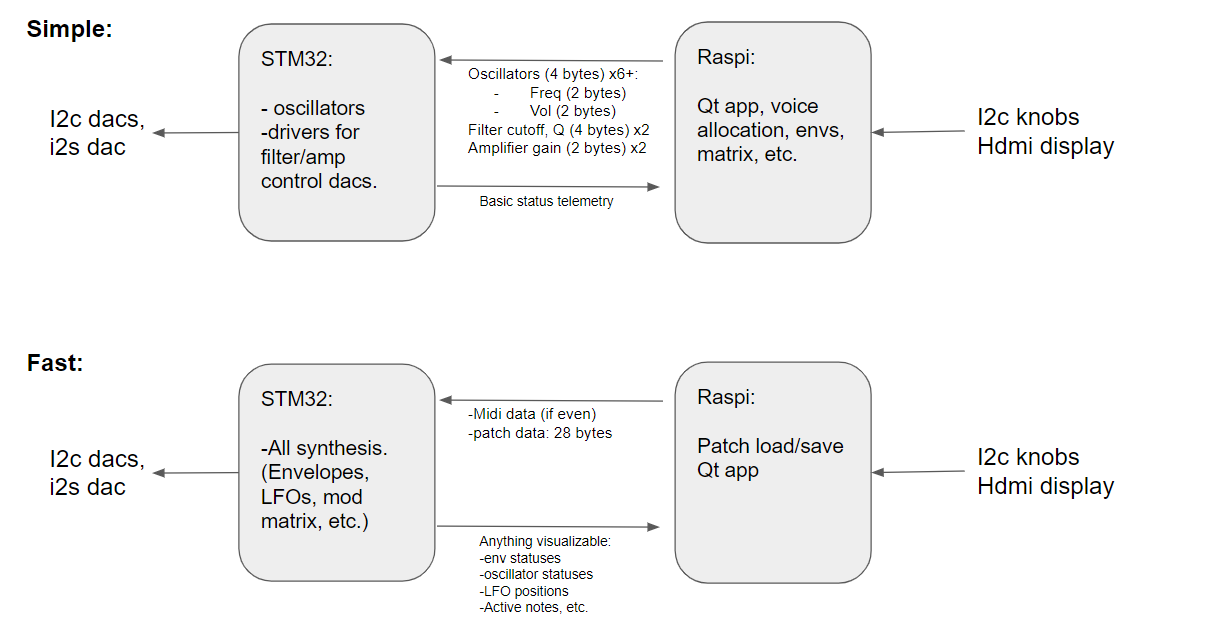

Software architecture:

A significant amount of GUI work remains before this is finished. I’ve been working on the voice/pseudovoice/patch architecture, which is the core of the sound synthesis. Voices control the voice hardware, including the dedicated VCF/VCA circuits (voltage-controlled filters and amplifiers). Pseudovoices are oscillator pairs with amplifier envelopes; they allow multiple notes to be allocated to the same hardware voice, although those notes must share VCF/VCA parameters. The voicing modes described in the design report explain how this process works.
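To make the voice/pseudovoice relationship concrete, here is a minimal C++ sketch of how notes might be allocated. It is not the actual implementation: the names (`VoiceAllocator`, `VoiceParams`, `Slot`), the counts of voices and pseudovoices, and the "first free slot" policy are all assumptions for illustration. The real voicing modes are the ones described in the design report.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical sizes: the real hardware counts are not stated here.
constexpr std::size_t kVoices = 4;
constexpr std::size_t kPseudovoicesPerVoice = 2;

// Filter/amplifier settings shared by every pseudovoice on one hardware voice.
struct VoiceParams {
    float vcfCutoffHz = 1000.0f;
    float vcaLevel = 1.0f;
};

// An oscillator pair with its own amplifier envelope.
struct Pseudovoice {
    bool active = false;
    int midiNote = -1;
    float envelopeLevel = 0.0f;
};

// One hardware voice: a single VCF/VCA path fed by several pseudovoices.
struct Voice {
    VoiceParams params;
    std::array<Pseudovoice, kPseudovoicesPerVoice> pseudovoices;
};

// Where a note landed; voice == -1 means no free pseudovoice was found.
struct Slot {
    int voice;
    int pseudovoice;
};

class VoiceAllocator {
public:
    // Assumed policy: take the first free pseudovoice, filling one hardware
    // voice before moving to the next, so stacked notes share VCF/VCA params.
    Slot noteOn(int midiNote) {
        assert(midiNote >= 0 && midiNote <= 127);
        for (std::size_t v = 0; v < kVoices; ++v) {
            for (std::size_t p = 0; p < kPseudovoicesPerVoice; ++p) {
                Pseudovoice& pv = voices_[v].pseudovoices[p];
                if (!pv.active) {
                    pv.active = true;
                    pv.midiNote = midiNote;
                    pv.envelopeLevel = 0.0f;  // envelope restarts from zero
                    return Slot{static_cast<int>(v), static_cast<int>(p)};
                }
            }
        }
        return Slot{-1, -1};
    }

    // Release the first active pseudovoice playing this note, if any.
    bool noteOff(int midiNote) {
        for (Voice& voice : voices_) {
            for (Pseudovoice& pv : voice.pseudovoices) {
                if (pv.active && pv.midiNote == midiNote) {
                    pv.active = false;
                    pv.midiNote = -1;
                    return true;
                }
            }
        }
        return false;
    }

    const Voice& voice(std::size_t index) const { return voices_[index]; }

private:
    std::array<Voice, kVoices> voices_{};
};
```

With this policy, two consecutive `noteOn` calls land on the same hardware voice and therefore pass through the same filter and amplifier settings, which is the sharing constraint described above. A different voicing mode could spread notes across voices first instead.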

This week, I want to finish all of the primary computer software except for the GUI, and put together the synthesizer.