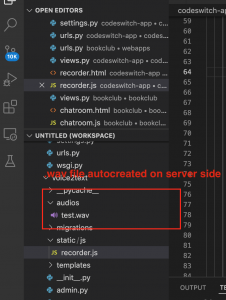

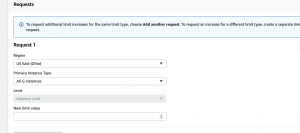

This week I worked on getting AWS configured for the deep learning language model we intend to deploy. I submitted requests for AWS credits and for a limit increase on GPU instance types. We plan to use G-type instances for most development, though we may deploy some P-type instances for especially heavy system-wide training in later stages. I downloaded and set up the latest version of JupyterLab for remote development and was able to SSH into my first instances, which are configured with an AWS Deep Learning AMI. I initially had trouble connecting over SSH, so I spent significant time reconfiguring my AWS security groups and VPC with the correct permissions. I can now access the current servers, and the same configuration should work for any future instances we launch.
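The security group change described above comes down to an inbound rule that allows TCP port 22 from a trusted address. Below is a minimal sketch of such a rule using boto3's `authorize_security_group_ingress`; it is an illustration under stated assumptions, not the exact configuration used here. The function names `ssh_ingress_rule` and `allow_ssh`, the group ID, and the IP address are all hypothetical placeholders.

```python
import ipaddress


def ssh_ingress_rule(my_ip: str, description: str = "SSH from my workstation") -> dict:
    """Build one IpPermissions entry allowing TCP port 22 from a single IPv4 address."""
    # Validate the address and restrict the rule to exactly that host (/32).
    addr = ipaddress.IPv4Address(my_ip)
    return {
        "IpProtocol": "tcp",
        "FromPort": 22,
        "ToPort": 22,
        "IpRanges": [{"CidrIp": f"{addr}/32", "Description": description}],
    }


def allow_ssh(group_id: str, my_ip: str) -> None:
    """Apply the rule to a security group. Requires boto3 and AWS credentials."""
    import boto3  # imported lazily so the rule builder works without boto3 installed

    ec2 = boto3.client("ec2")
    ec2.authorize_security_group_ingress(
        GroupId=group_id,  # placeholder, e.g. the group attached to the instance
        IpPermissions=[ssh_ingress_rule(my_ip)],
    )


if __name__ == "__main__":
    # 203.0.113.7 is a documentation-only address, used here as a placeholder.
    print(ssh_ingress_rule("203.0.113.7"))
```

Restricting the rule to a single `/32` address rather than `0.0.0.0/0` keeps SSH closed to the wider internet. Attaching the same security group to later instances would let them inherit the rule without further changes.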

We are currently ahead of schedule: the official implementation period does not begin for at least another week and a half, and we have already had success with several early development steps. This week I will also focus heavily on making key design decisions and documenting them in detail. I will present these at next week's design presentation.

There are several major things I plan to complete by next week. I'd like to have detailed architectures finalized for several versions of the LID or ASR models, since there are a couple of different formulations I'd like to experiment with. Marco and I also need to finalize the task division we will use for developing the sub-models of the overall system. That will let us document and finalize the datasets we may need to compile or augment for module-level training. By next weekend we should have small development versions of both the LID and ASR models running on remote instances and ready for further training and development.
