This week I focused on researching and planning the deployment of our web app. Three requirements shape our deployment strategy: (1) the architecture must support continuously pushing an ongoing audio recording from the client to the server in chunks; (2) the server must serve our code-switching model as a single instance, loading the model only once at boot time and reusing that one instance for every incoming request; and (3) the server must temporarily store audio files (.webm and .wav) so that they can be fed into the model as inputs.
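To make requirement (1) more concrete, here is a minimal sketch of how the server side could collect streamed chunks per recording session before handing them to the model. The `ChunkBuffer` class, its method names, and the use of a session id are hypothetical and chosen for illustration. Chunks are kept in arrival order and joined only when the recording ends. With browser recorders built on MediaRecorder, later chunks typically lack the container header, so decoding usually works only on the joined stream.

```python
from collections import defaultdict


class ChunkBuffer:
    """Hypothetical helper: collects raw audio chunks per recording session."""

    def __init__(self) -> None:
        # Maps session id -> list of byte chunks, in the order they arrived.
        self._chunks: dict[str, list[bytes]] = defaultdict(list)

    def push(self, session_id: str, chunk: bytes) -> int:
        """Append one chunk and return the total bytes held for the session."""
        self._chunks[session_id].append(chunk)
        return sum(len(c) for c in self._chunks[session_id])

    def finish(self, session_id: str) -> bytes:
        """Remove the session and return all of its chunks joined together."""
        return b"".join(self._chunks.pop(session_id, []))
```

A socket handler would call `push()` on every incoming message and `finish()` when the client signals the end of a recording. The joined bytes can then be written to a temporary .webm file for the model.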

According to my research, there are two ways to deploy an ASR model on an AWS server: as a microservice, or as a Python class that is instantiated once and then used through its methods, such as `.predict()`. After going through tutorials and documentation for both strategies (https://www.youtube.com/watch?v=7vWuoci8nUk and https://medium.com/google-cloud/building-a-client-side-web-app-which-streams-audio-from-a-browser-microphone-to-a-server-part-ii-df20ddb47d4e), I chose the simpler one: deploying the model as a Python class. Its main advantage is simplicity. Deploying a microservice would require packaging the model in a Docker container and using services such as AWS Lambda, on top of deploying the app itself on an AWS EC2 server. The class-based strategy also leaves us free to choose the client-side technology. For example, to continuously push new audio chunks from client to server, I can use either RecordRTC with socket.io in Node.js or WebSockets in Django. The tutorial also showed me how to achieve single-instance service with this strategy. Finally, temporary storage of audio files can be handled by simple file I/O calls wrapped in methods of the model instance.
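The last point, temporary storage through I/O calls wrapped in class methods, could look roughly like the sketch below. It uses only the standard library (`tempfile.mkstemp` and a context manager). The name `temp_audio_file` is hypothetical. Converting .webm to .wav would normally need an external tool such as ffmpeg, which is not shown here.

```python
import os
import tempfile
from contextlib import contextmanager
from typing import Iterator


@contextmanager
def temp_audio_file(data: bytes, suffix: str = ".webm") -> Iterator[str]:
    """Write audio bytes to a temporary file, yield its path, then delete it."""
    fd, path = tempfile.mkstemp(suffix=suffix)
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(data)
        # The caller (e.g. a preprocess() method) reads the file by path.
        yield path
    finally:
        # Clean up even if the model code raised an exception.
        if os.path.exists(path):
            os.remove(path)
```

Usage inside a model method would be `with temp_audio_file(audio_bytes) as path: ...`. This ties each file's lifetime to one request, so files do not accumulate on the server.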

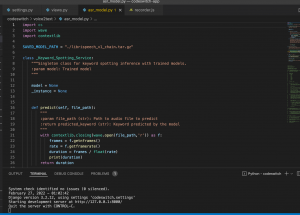

Having settled on a detailed deployment strategy, I began deploying our app. I finished writing the model service class, including the necessary methods such as `predict()` and `preprocess()`, as well as a constructor that guarantees only a single instance of the service is ever created.
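For reference, one common Python pattern for this kind of single-instance service is sketched below. It is an illustration, not the class described above. `ModelService`, the `get()` factory, the `loader` argument, and the peak-normalising `preprocess()` are all assumptions. A plain callable stands in for the real code-switching model. The lock with a double check ensures that concurrent first requests still load the model only once.

```python
import threading
from typing import Callable, Optional

# Stand-in type for the real model: takes audio samples, returns a transcript.
Model = Callable[[list[float]], str]


class ModelService:
    """Illustrative single shared wrapper around an ASR model."""

    _instance: Optional["ModelService"] = None
    _lock = threading.Lock()

    def __init__(self, model: Model) -> None:
        self.model = model

    @classmethod
    def get(cls, loader: Callable[[], Model]) -> "ModelService":
        """Return the shared instance, calling loader() only on first use."""
        if cls._instance is None:
            with cls._lock:
                # Re-check inside the lock so two threads cannot both load.
                if cls._instance is None:
                    cls._instance = cls(loader())
        return cls._instance

    def preprocess(self, samples: list[float]) -> list[float]:
        """Placeholder step: scale samples so the peak magnitude is 1."""
        peak = max((abs(s) for s in samples), default=0.0)
        return [s / peak for s in samples] if peak else list(samples)

    def predict(self, samples: list[float]) -> str:
        """Run preprocessing and then the model on one request's audio."""
        return self.model(self.preprocess(samples))
```

Calling `ModelService.get(load_model)` at server start-up, and again in each request handler, returns the same object every time, so the expensive load happens once.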

Next week, I will continue researching and deploying our app on the AWS server. The goal is to have the deployed app load a pretrained ASR model and send prediction outputs back to the client frontend.