DrawBuddy Poster

Denise’s Status Report for 4/30/2022

This week I worked on integrating the image cropping code with the rest of the codebase and started implementing an SVG writer that outputs the objects on the screen as lines in SVG format. This way, modifications can be sent over sockets more quickly, parsed by our SVG parser, and exported as an SVG file if needed. After our final demo I also began looking into HSL (hue, saturation, lightness) for line detection as opposed to greyscale, since HSL would be more robust against lines of varying widths and lines within shadows. Currently I am looking at an implementation similar to the one described here.
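
To illustrate why HSL might help with shadows, here is a minimal sketch using Python's standard `colorsys` module. It is not the implementation referenced above; the function names, the sample pixel values, and both thresholds are assumptions chosen for illustration, and it assumes coloured or very dark ink on white paper.

```python
import colorsys


def to_hsl(rgb):
    """Convert an 8-bit (r, g, b) pixel to (hue, saturation, lightness) in 0..1."""
    r, g, b = (channel / 255.0 for channel in rgb)
    h, l, s = colorsys.rgb_to_hls(r, g, b)  # colorsys returns H, L, S order
    return h, s, l


def luma(rgb):
    """Greyscale value using the common 0.299/0.587/0.114 channel weights."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def looks_like_ink(rgb, min_saturation=0.3, max_lightness=0.2):
    """Hypothetical rule: ink is either clearly coloured or very dark."""
    _, s, l = to_hsl(rgb)
    return s >= min_saturation or l <= max_lightness


# Hypothetical sample pixels: white paper in shadow and a blue pen stroke.
shadowed_paper = (70, 70, 70)
blue_ink = (40, 60, 200)

# Both pixels have almost the same greyscale value, so one grey threshold
# cannot separate them, while saturation tells them apart.
grey_gap = abs(luma(shadowed_paper) - luma(blue_ink))
paper_is_ink = looks_like_ink(shadowed_paper)
stroke_is_ink = looks_like_ink(blue_ink)
```

In this sketch the two pixels are nearly indistinguishable in greyscale, but only the pen stroke passes the saturation test. Real thresholds would need tuning against captured images.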

Next week I will be working on finishing the final poster, the final report, and the SVG writer. If there is still time available, I will implement HSL line detection as well.

Currently we seem to be on schedule, but there is quite a bit of integration left to be done.

Team Status Report for April 23, 2022

The most significant risk is not being able to get the SVG writer working. In order to integrate the vectorization with the communication, we need to be able to write the components out to an SVG file to send to connected peers, since SVG files send faster than JPEGs and PNGs. This method involves looping through all of the components and writing each one out in the SVG format. We do not project this to be a very high risk, since it is a matter of parsing through information and writing it out as a string in a particular format.
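
A minimal sketch of such a writer is shown below. It assumes each component is a dictionary holding a list of `(x, y)` points and an optional stroke colour; that layout and the names `polyline_to_path` and `write_svg` are hypothetical and do not reflect DrawBuddy's actual data structures.

```python
from xml.sax.saxutils import quoteattr


def polyline_to_path(points):
    """Turn [(x, y), ...] into SVG path data: 'M x0 y0 L x1 y1 ...'."""
    if len(points) < 2:
        raise ValueError("a line needs at least two points")
    first, *rest = points
    parts = [f"M {first[0]} {first[1]}"]
    parts += [f"L {x} {y}" for x, y in rest]
    return " ".join(parts)


def write_svg(components, width, height):
    """Serialize every component as one <path> element inside an <svg> root."""
    lines = [
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{width}" height="{height}">'
    ]
    for component in components:
        d = polyline_to_path(component["points"])
        stroke = component.get("stroke", "black")
        # quoteattr escapes the value and wraps it in quotes.
        lines.append(f"  <path d={quoteattr(d)} stroke={quoteattr(stroke)} fill=\"none\"/>")
    lines.append("</svg>")
    return "\n".join(lines)


# Hypothetical whiteboard contents: one straight line and one two-segment line.
svg_text = write_svg(
    [
        {"points": [(0, 0), (10, 10)]},
        {"points": [(5, 5), (5, 20), (15, 20)], "stroke": "red"},
    ],
    width=100,
    height=100,
)
```

Because the result is a plain string, the same text could be sent over a socket or written to a `.svg` file.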

We did not change our design much, but we did add steps that were unaccounted for in our original design, including cropping the image to remove the background and adding features for moving points individually and for grouping lines together.

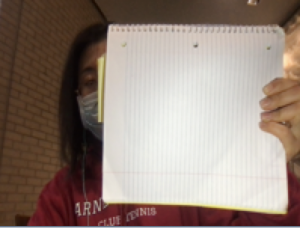

Here is our current schedule.

Denise’s Status Report for April 23, 2022

This week I worked on finishing the image cropping feature so that the background is removed whenever we convert an image to an SVG and background objects are not captured within our diagram. To do so, we find the biggest contour within the image and create a bounding box around it. After that, we scale the bounding box down to remove the hand that’s holding the paper. Currently I’m working on creating a function to write the contents of the diagram back into an SVG file so that new modifications can be sent over the server. I have also worked on collecting results and updating the slides for the final presentation.
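
The bounding-box step can be sketched in plain Python as follows. This is a stand-in for illustration, not the project's OpenCV code: contours are assumed to be lists of `(x, y)` points, the helper names are hypothetical, and the 0.9 scale factor is a guess.

```python
def polygon_area(contour):
    """Absolute area of a closed polygon via the shoelace formula."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(contour, contour[1:] + contour[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def bounding_box(contour):
    """Axis-aligned box (x, y, w, h) enclosing every contour point."""
    xs = [x for x, _ in contour]
    ys = [y for _, y in contour]
    return min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys)


def shrink_box(box, scale):
    """Scale a box about its centre; scale=0.9 keeps 90% of each side."""
    x, y, w, h = box
    new_w, new_h = w * scale, h * scale
    return x + (w - new_w) / 2, y + (h - new_h) / 2, new_w, new_h


def crop_box(contours, scale=0.9):
    """Pick the largest contour, box it, then pull the box edges inward."""
    biggest = max(contours, key=polygon_area)
    return shrink_box(bounding_box(biggest), scale)


# Hypothetical contours: the sheet of paper and a small background speck.
paper = [(10, 10), (110, 10), (110, 210), (10, 210)]
speck = [(0, 0), (4, 0), (4, 4), (0, 4)]
box = crop_box([speck, paper], scale=0.9)
```

Shrinking about the centre trims the same margin from every edge, which is one simple way to cut off fingers that overlap the border of the paper.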

Left: Original image; Right: Cropped image

Currently I am on schedule, and am picking up smaller tasks that were not originally planned.

Denise’s Status Report for April 16

This week I worked on implementing a cropping feature to remove the background of the image captured by the camera so that we only vectorize the diagram. My approach was to greyscale the image, apply another filter to make the image black and white, and apply a morphological erosion and dilation to remove noise. After that we will apply the OpenCV border detection to find the largest contour, fill it, and then use that as a mask to isolate the image.
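
To show what the black-and-white filter and the erosion followed by dilation do, here is a small pure-Python sketch on a nested-list image. It stands in for the OpenCV calls purely for illustration; the threshold of 128, the 3x3 window, and treating out-of-bounds pixels as background are all assumptions.

```python
def to_binary(gray, threshold=128):
    """Binarize a greyscale grid: 1 where the pixel is brighter than threshold."""
    return [[1 if px > threshold else 0 for px in row] for row in gray]


def _neighbourhood(img, r, c):
    """Values in the 3x3 window around (r, c); out-of-bounds cells count as 0."""
    rows, cols = len(img), len(img[0])
    return [
        img[rr][cc] if 0 <= rr < rows and 0 <= cc < cols else 0
        for rr in range(r - 1, r + 2)
        for cc in range(c - 1, c + 2)
    ]


def erode(img):
    """A pixel survives only if its whole 3x3 neighbourhood is set."""
    return [[int(all(_neighbourhood(img, r, c))) for c in range(len(img[0]))]
            for r in range(len(img))]


def dilate(img):
    """A pixel is set if anything in its 3x3 neighbourhood is set."""
    return [[int(any(_neighbourhood(img, r, c))) for c in range(len(img[0]))]
            for r in range(len(img))]


def opening(img):
    """Erosion followed by dilation: removes specks, keeps large regions."""
    return dilate(erode(img))


# Hypothetical 6x8 greyscale image: dark background, a bright 4x4 "paper"
# block, and one bright noise pixel to the right of it.
gray = [[30] * 8 for _ in range(6)]
for r in range(1, 5):
    for c in range(1, 5):
        gray[r][c] = 200
gray[2][6] = 220

binary = to_binary(gray)
opened = opening(binary)
```

After the opening, the isolated bright pixel is gone while the 4x4 block is restored to its original extent, which is the noise-removal effect described above.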

Currently our approach works; however, the mask generated is a little too sensitive to the lighting and to the hand holding up the paper, as shown in the images below. This is likely because we’re using a binary filter to convert the image to black and white, which results in very jagged contours. We hope to remedy this by fitting a bounding box around the contour and then filling the bounding box instead of the contour to generate our mask. This is still a work in progress.

Left: Original photo; Right: generated mask

I am a little behind schedule, but I should be able to wrap this up and get back on track next week. I hope to finish the image cropping and then help Lisa out with the SVG transformations and new SVG file generation.

Denise’s Status Report for 04/09/2022

This week I implemented an SVG parser that parses an SVG file line by line and extracts the endpoints of each path in order to produce straight lines. I then modified this feature to parse through all the points within a path and extract only the points that produce an angle of less than 100 degrees, so that points that form obtuse angles (100 degrees or more) with their neighbors are removed. This method helps reduce the complexity of the shapes by removing points while also enabling us to produce polygons as opposed to just lines.
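
The angle filter can be sketched as below. This is a minimal illustration under stated assumptions rather than the parser itself: the path is assumed to be already parsed into a list of `(x, y)` points, each angle is measured against the point's original neighbors, the two endpoints are always kept, and the function names are hypothetical.

```python
import math


def interior_angle(prev_pt, pt, next_pt):
    """Angle in degrees at pt between the segments to its two neighbours."""
    ax, ay = prev_pt[0] - pt[0], prev_pt[1] - pt[1]
    bx, by = next_pt[0] - pt[0], next_pt[1] - pt[1]
    norm = math.hypot(ax, ay) * math.hypot(bx, by)
    if norm == 0:
        return 180.0  # duplicate point: treat as flat so it is dropped
    cos_theta = max(-1.0, min(1.0, (ax * bx + ay * by) / norm))
    return math.degrees(math.acos(cos_theta))


def keep_corners(points, max_angle=100.0):
    """Keep endpoints plus every interior point whose angle is below max_angle."""
    if len(points) < 3:
        return list(points)
    kept = [points[0]]
    for prev_pt, pt, next_pt in zip(points, points[1:], points[2:]):
        if interior_angle(prev_pt, pt, next_pt) < max_angle:
            kept.append(pt)
    kept.append(points[-1])
    return kept


# Hypothetical hand-drawn path: two wobbly, nearly straight points sit
# between the real corners at (10, 0) and (10, 10).
path = [(0, 0), (5, 0.1), (10, 0), (10, 5), (10, 10), (0, 10)]
simplified = keep_corners(path)
```

On this sample path the two near-collinear points are dropped and the two corners survive, leaving four points that describe three straight edges.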

I also researched different methods of cropping the image captured by the camera so that we only capture the paper and the diagram on it. Currently our approach is to greyscale the image, apply another filter to make it black and white, and apply a morphological erosion and dilation to remove noise. After that we will apply the OpenCV border detection and then use that to crop the background of the image. It is currently a work in progress.

We had to modify our schedule a little bit this week, but we are currently on track. Next week I hope to finish removing the image background and then help Lisa integrate the SVG parser with the code to render the SVG files onto the whiteboard.

Team Status Report

The most significant risk as of now is identifying an efficient method to display the different components of the .svg file on the whiteboard. We need to display the components in such a way that it is easy for a user to edit each component individually. Current methods and our backup plan involve taking each independent object and outputting each one as a separate .png file onto our whiteboard. We could also write our own parser to iterate through the objects of a .svg file and draw each component as its own individual object. More research will need to be done to decide on a method.

The biggest change we may make is implementing line straightening as a feature in our GUI, so that the user can explicitly mark which lines they would like to be straight. This would reduce the complexity of the computer vision algorithm on the preprocessing side and will incur no additional costs.

We are a little behind; however, there have been no changes to our schedule since we are aiming to get back on track by the end of this week.

Denise’s Status Report for 4/02/2022

This week I finished modifying the use-case requirements, abstract, and testing portions of the design report in preparation for our final report. I also integrated the computer vision component with the vectorization portion, such that once the image is captured, it is written as a .jpeg file to DrawBuddy/vectorization/inputs, where it is then fetched by a call to the VTracer API and vectorized as a .svg file in the DrawBuddy/vectorization/results folder.

I also began looking into ways we can display .svg files on our whiteboard directly rather than as .pngs, since this was a problem we did not account for. We had assumed svgUtils had additional functions for displaying .svg files, but currently PyQt looks promising. The remaining work to integrate the rest of our project includes taking the .svg file and outputting it onto our virtual whiteboard.

In terms of identifying straight lines, I discussed with Lisa and we’re thinking of pivoting to performing this on the post-processing side, such that the user can explicitly mark which lines they would like to straighten, as opposed to trying to produce an algorithm that could estimate the user’s preferences.

I am a little behind schedule, since setting up the whiteboard and displaying objects onto it were setbacks that we did not expect. However, this is a minor setback and we should be able to get back on schedule within the next week or so.

Next week we hope to have a fully integrated system for vectorization, such that a user can click our vectorize button and it will take whatever image the user has and output it onto the whiteboard. More work will need to be done to filter the noise from the image and send the images to connected peers.

I also hope to begin working on the translation and resizing features of objects within the image on our whiteboard.

Denise’s Status Report for 3/26/2022

This week I continued updating the design report as per feedback. Specifically, I edited the use case requirements section and specifications to help clarify the use case and user interaction of DrawBuddy. This week I also spent more time considering the ethical impacts of our project and discussed with my group possible ways to make DrawBuddy more secure in order to prevent data leakage and unwanted users from disrupting a session. We will add a kick button to remove unwanted users, as well as encrypted communication, as stretch goals.

I am a little behind, since in order to start on the next section, modifying SVG images, I need to be able to work with the whiteboard interface, which is currently being implemented by Ronald and Lisa.

Ideally, next week I will be able to use the whiteboard and implement the translation and scaling features.