Jonathan’s Status Report for 04/23/2022

This week, I worked with Jean on the final integration of EEG and EMG with the frontend interface. We fixed a couple of integration bugs and tested controlling the user interface with our system. We also collected more data for ML modeling, and I retrained our EEG decision system on the new data. This allowed me to build a better model for triple blinks, which is now integrated as another signal the user can use to control the system. We still need to test the integrated system, so we are a little behind as of Saturday 4/23, but we should be able to finish testing and validation tomorrow.

Team Status Report for 04/23/2022

This week, we collected additional data from more individuals to strengthen our ML models. On Wednesday, we tested EEG together with EMG on one arm, and the control worked. We also tried to integrate all of the EMG and EEG events for testing on 4/23, but were unsuccessful because we had difficulty calibrating the EMG sensors and getting accurate signal readings from them. As a result, we are slightly behind where we would like to be on integration, and we plan to test tomorrow. We also worked on the Final Presentation slides.

Wendy’s Status Report for 04/23/2022

Unfortunately, I tested positive for Covid this week, so I was unable to work until Friday or to meet with my teammates to test and integrate until Saturday. However, I was able to fix the two problems I identified last week. The Pynput bug that caused an ‘a’ to be pressed and displayed whenever an uppercase letter or special character was selected is fixed; keys are now released and displayed correctly regardless of which button is pressed on the keyboard. The other issue I resolved was the lack of a clear mapping from human actions to application events. Cursor mode will be the primary mode users are in, and to switch from cursor mode to keyboard mode, users perform a left wink. To switch between horizontal and vertical controls in both cursor and keyboard mode, users perform a right wink. I also added a right-click option, which is accessed with a triple blink. A table of the mappings is included in this status report.

I also spent some time preparing to deliver our final presentation. I am still on schedule. This week, I plan to add a separate screen that appears when the keyboard application first opens. This screen will be simpler and have only four buttons: cursor, page up, page down, and keyboard. When users want to use the keyboard, they will move the cursor over that button to access the keyboard screen we currently have. Users will also be able to scroll up and down the page. I hope to have this completed and tested so that these features can be shown in the demo as well as in the final video.

| Human Action | Application Event |
|---|---|
| Double blink | Cursor (mouse) mode: left click; keyboard mode: key press |
| Triple blink | Cursor (mouse) mode: right click |
| Left wink | Toggle between cursor (mouse) mode and keyboard mode |
| Right wink | Toggle between horizontal and vertical controls |
| Left EMG | Cursor (mouse) mode: moves the cursor left or down, depending on which toggle is set; keyboard mode: moves the focus key left or down, depending on which toggle is set |
| Right EMG | Cursor (mouse) mode: moves the cursor right or up, depending on which toggle is set; keyboard mode: moves the focus key right or up, depending on which toggle is set |
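As a rough illustration, the mappings in the table above could be encoded as a small mode-aware dispatcher. This is a minimal sketch, not our actual frontend code: it assumes detected actions arrive as strings (`double_blink`, `left_emg`, and so on), that horizontal controls are selected at startup, and it returns a description of each action instead of sending it through Pynput. `ControlState` and `handle_event` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ControlState:
    """Hypothetical UI state: the active mode and the selected EMG axis."""
    mode: str = "cursor"       # "cursor" (mouse) or "keyboard"; cursor is the primary mode
    axis: str = "horizontal"   # "horizontal" or "vertical"; startup value is an assumption


def handle_event(state: ControlState, event: str) -> str:
    """Map one detected human action to an application action, following the table."""
    if event == "left_wink":
        # Toggle between cursor (mouse) mode and keyboard mode.
        state.mode = "keyboard" if state.mode == "cursor" else "cursor"
        return f"switch to {state.mode} mode"
    if event == "right_wink":
        # Toggle between horizontal and vertical controls.
        state.axis = "vertical" if state.axis == "horizontal" else "horizontal"
        return f"switch to {state.axis} controls"
    if event == "double_blink":
        return "left click" if state.mode == "cursor" else "press focused key"
    if event == "triple_blink":
        # Assumption: the table defines no keyboard-mode action for a triple blink.
        return "right click" if state.mode == "cursor" else "ignored"
    if event in ("left_emg", "right_emg"):
        # Left EMG moves left/down and right EMG moves right/up, depending on the axis.
        if state.axis == "horizontal":
            direction = "left" if event == "left_emg" else "right"
        else:
            direction = "down" if event == "left_emg" else "up"
        target = "cursor" if state.mode == "cursor" else "focus key"
        return f"move {target} {direction}"
    return "ignored"


if __name__ == "__main__":
    state = ControlState()
    for e in ["left_emg", "right_wink", "right_emg", "left_wink", "double_blink"]:
        print(handle_event(state, e))
```

Keeping the mode and axis in one state object means each wink only flips a flag, and the blink and EMG handlers read that flag, so the same four physical signals cover both modes.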

Wendy’s Status Report for 04/16/2022

This week, I created a listener class that binds to the EMG events Jean had worked on. This connects the shoulder events to the frontend so the user can perform more actions. I also added functionality to simulate key presses and mouse clicks so that the application can work as a standalone keyboard and mouse for all applications (e.g., Chrome, Notes). There is a small bug involving Pynput's key press and release of uppercase letters and special characters, and I will work on fixing it this week. I changed the keyboard layout so that the ‘Cursor’ button is the focus key when the application first starts, letting the user use the EMG to move the mouse to the area where they would like to start typing. I ran into a slight problem with how to switch the EMG mode from moving the cursor to moving between keys on the keyboard, and I will work on fixing it after consulting with my team this week. For now, I made the mouse click map to a triple blink. There will be an updated mapping of all the actions we plan to include. This is a change from last week's plan to create a Chrome extension, because an extension would require porting the existing Python code to JavaScript. I am currently on track and will be working on the two problems I identified this week.
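A listener that binds EMG events to frontend actions can be sketched as a simple observer. This is an illustrative assumption rather than the class described above: `EMGEventListener`, `bind`, `emit`, and event names such as `left_shoulder` are hypothetical, and handlers are plain callables so the sketch runs without sensors or a display.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[], None]


class EMGEventListener:
    """Hypothetical listener that binds EMG event names to frontend callbacks."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def bind(self, event_name: str, handler: Handler) -> None:
        """Register a callback to run whenever `event_name` is emitted."""
        self._handlers[event_name].append(handler)

    def emit(self, event_name: str) -> int:
        """Dispatch an event from the EMG pipeline; return how many handlers ran."""
        # .get() avoids creating an empty entry for unbound event names.
        handlers = self._handlers.get(event_name, [])
        for handler in handlers:
            handler()
        return len(handlers)


if __name__ == "__main__":
    log: List[str] = []
    listener = EMGEventListener()
    listener.bind("left_shoulder", lambda: log.append("move left"))
    listener.bind("right_shoulder", lambda: log.append("move right"))
    listener.emit("left_shoulder")
    print(log)
```

In the application, a handler could wrap Pynput instead, for example calling `click(Button.left)` on a `pynput.mouse.Controller`, or `press`/`release` on a `pynput.keyboard.Controller`, so the same EMG events drive whichever app has focus. Keeping the listener independent of Pynput also makes it testable without hardware.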

Wendy’s Status Report for 04/09/2022

This week, I mostly completed my research on how to connect the Python application with the OS so it can be accessed from any Mac application. There does not seem to be an easy way to do this, so if I cannot get it working, I will look into building a system that runs locally on the computer rather than at the OS level. I am currently behind schedule because Carnival kept me from working on what I had planned for this week. However, I have already started looking into connecting the EMG events with the keyboard interface, and now that the new sensor has arrived, this should be done early this week.

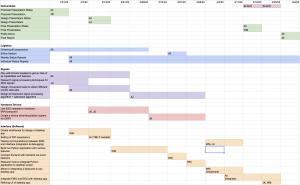

Updated Schedule

We have made some changes to our schedule. This is what we plan to achieve in the last three weeks of this course.

For clearer viewing, our schedule can be found in the spreadsheet under the sheet labeled ‘Gantt.’

Team Status Report for 04/02/2022

This week, our team worked on integrating our whole system with the EMG data. We ran into some bugs and are working to fix them so that the system is ready for the demo next week. There were also some bugs in the EEG processing code, which we fixed, and we cleaned up that code. On the EMG side, we worked with a Bluetooth module that had just arrived and wrote a calibration method. We also mapped user actions to the correct keyboard actions. We discussed the minor problems each of us faced and helped each other think of solutions. One, raised after our weekly meeting, concerned edge cases in signal detection with the sliding-window algorithm. To solve this, we decided to use finite-state machine (FSM) logic to account for all possible cases. On the app interface side, the question was whether, and where, to show a blinking typing bar when the user first opens an application. We discussed this as a team and are ready to implement the next steps. Overall, we are on track and very close to having a product that is ready for the demo.
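To illustrate how FSM logic can handle sliding-window edge cases, such as a single blink that spans several consecutive windows, here is a minimal sketch. It assumes each window has already been reduced to a boolean flag (True when the signal exceeds a blink threshold) and groups blinks separated by at most `max_gap` quiet windows. `count_blink_groups`, `max_gap`, and the three states are hypothetical and not necessarily the states we implemented.

```python
from enum import Enum, auto
from typing import Iterable, List


class State(Enum):
    IDLE = auto()      # no blink group in progress
    IN_BLINK = auto()  # signal currently above the blink threshold
    GAP = auto()       # quiet period that may precede another blink in the group


def count_blink_groups(active: Iterable[bool], max_gap: int) -> List[int]:
    """Return the blink count of each finished group (2 = double, 3 = triple).

    A blink that stays active across consecutive windows is counted once, because
    only the transition into IN_BLINK increments the count.
    """
    state, blinks, gap = State.IDLE, 0, 0
    groups: List[int] = []
    for is_active in active:
        if state is State.IDLE:
            if is_active:
                state, blinks = State.IN_BLINK, 1
        elif state is State.IN_BLINK:
            if not is_active:
                state, gap = State.GAP, 1
        else:  # State.GAP
            if is_active:
                state, blinks = State.IN_BLINK, blinks + 1
            else:
                gap += 1
                if gap > max_gap:
                    # The pause is long enough: close out this group.
                    groups.append(blinks)
                    state, blinks = State.IDLE, 0
    # Close a group that is still open when the stream ends.
    if state is not State.IDLE:
        groups.append(blinks)
    return groups


if __name__ == "__main__":
    stream = [True, True, False, True, False, False, False, False]
    print(count_blink_groups(stream, max_gap=2))
```

For the sample stream, the long first blink is counted once and the pause after the second blink closes the group, so the result is a single double blink, `[2]`.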

Wendy’s Status Report for 04/02/2022

This week, I worked on mapping user events to keyboard actions as we had originally planned (e.g., a left wink maps to Siri activation, and a right wink changes the mode of the arrows from left/right to up/down). I had also planned to move the keyboard from a standalone Python window to something accessible from other laptop applications, such as Notes or Chrome. However, this turned out to be more difficult than I expected, and I was not able to get it working this week. I need to decide how the keyboard should start inserting keys through simulated key presses: whether the user can start typing immediately after opening an application, or whether they must first use the cursor to move to a typable location, double click to get the blinking typing bar started, and then hover over the keyboard to start typing. I have looked into ways to make the keyboard accessible from any laptop application, including using Pynput to simulate mouse clicks and keystrokes. As a result, I am a little behind schedule, but I plan to have the keyboard appearing and working with the user events by next week. For now, though, the current keyboard interface is sufficient for our demo in a couple of days.

Team Status Report for 03/19/2022

This week, we tested several sensor placements to get the EMG sensors to produce meaningful data that could be parsed into left and right shoulder movement events. While there is a clear distinction between a relaxed and a raised shoulder, as evident in the plot in the first image below, the required placement of the EMG sensors differs between users, including two of our group members. We need to keep this in mind moving forward and account for it in our user calibration stage. We also did more work on EEG signal sensing, including creating a test bench for running the models we constructed on live data. This will make it easier to test our models as we collaborate on them. We are each working on our individual tasks, and things are on track. However, because we have not integrated everything yet, we may need to spend extra effort on integration in the upcoming weeks once this week's individual tasks are done.
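Because sensor placement, and therefore signal strength, differs between users, the calibration stage could derive a per-user threshold from short recordings of a relaxed and a raised shoulder. The sketch below assumes raw EMG samples as floats, uses root-mean-square (RMS) amplitude as the feature, and places the threshold midway between the two levels. `rms`, `calibrate_threshold`, and `is_raised` are hypothetical names, and this is not necessarily our calibration method.

```python
import math
from typing import Sequence


def rms(samples: Sequence[float]) -> float:
    """Root-mean-square amplitude of one window of EMG samples."""
    if not samples:
        raise ValueError("window must contain at least one sample")
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def calibrate_threshold(relaxed: Sequence[float], raised: Sequence[float]) -> float:
    """Pick a per-user threshold halfway between relaxed and raised RMS levels."""
    low, high = rms(relaxed), rms(raised)
    if high <= low:
        # A placement that cannot separate the two states should be adjusted.
        raise ValueError("raised-shoulder recording is not stronger than relaxed")
    return (low + high) / 2


def is_raised(window: Sequence[float], threshold: float) -> bool:
    """Classify a new window as a raised shoulder if its RMS exceeds the threshold."""
    return rms(window) > threshold


if __name__ == "__main__":
    threshold = calibrate_threshold([0.1, -0.1, 0.1, -0.1], [1.0, -1.0, 1.0, -1.0])
    print(is_raised([0.8, -0.8], threshold), is_raised([0.2, -0.2], threshold))
```

Recalibrating per user this way absorbs differences in placement and skin contact, at the cost of a short recording step before each session.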