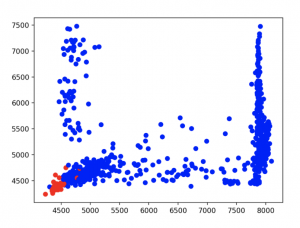

This week I experimented with the EMG sensor to see how different postures and sensor placements affect the muscle activation signal it detects. We will need a calibration stage that measures the baseline of the user's resting muscle before they use the device. This should take a couple of minutes, and the average of those readings will serve as the baseline that helps us figure out when the arm has been raised. The Arduino now sends data to Python properly (via pyFirmata), so we can integrate this data with the program that Jonthan and Wendy have set up, which uses the EMG data to control the keyboard/mouse on the interface.
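To make the calibration idea concrete, here is a minimal sketch of how the resting baseline could be computed and then used to flag activation. It only covers the averaging step; the function names, the sample values, and the "baseline plus `k` standard deviations" threshold rule are my own assumptions for illustration, not something we have settled on.

```python
from statistics import mean, stdev


def calibrate_baseline(resting_samples, k=3.0):
    """Return (baseline, threshold) from resting-state EMG readings.

    The baseline is the plain average of the resting samples.
    The threshold (baseline + k * standard deviation) is an assumed rule,
    not a decided design; k is a hypothetical tuning parameter.
    """
    if len(resting_samples) < 2:
        raise ValueError("need at least two resting samples")
    baseline = mean(resting_samples)
    threshold = baseline + k * stdev(resting_samples)
    return baseline, threshold


def is_active(sample, threshold):
    """True when a reading is above the calibrated threshold."""
    return sample > threshold


# Made-up resting readings on a 0..1 scale, only to exercise the functions.
resting = [0.10, 0.12, 0.11, 0.09, 0.10, 0.11]
baseline, threshold = calibrate_baseline(resting)
```

In practice the resting samples would be collected over the couple of minutes of calibration, and `is_active` would be called on each new reading afterwards.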

I have also started looking into the Bluetooth module that we will use for wireless communication with the EMG sensor, which is the next step in my plan for the EMG part. After that, I will return to what I had paused: helping Jonthan work out the FFT implementation and the feature extraction to improve detection accuracy.
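As a rough picture of what the FFT and feature extraction step might look like, here is a small sketch that runs a radix-2 FFT on one window of samples and pulls out a few spectral features. Everything here is an assumption for illustration: the function names, the choice of features (total power, mean frequency, peak frequency), the 1024 Hz sample rate, and the test tone. A real implementation would more likely use a library FFT.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 FFT; the input length must be a power of two."""
    n = len(x)
    if n == 0:
        raise ValueError("input must not be empty")
    if n == 1:
        return [complex(x[0])]
    if n % 2:
        raise ValueError("length must be a power of two")
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def spectral_features(window, sample_rate_hz):
    """Return hypothetical spectral features for one window of samples."""
    n = len(window)
    offset = sum(window) / n
    centered = [v - offset for v in window]  # remove the DC component
    spectrum = fft(centered)
    half = n // 2
    power = [abs(c) ** 2 for c in spectrum[: half + 1]]
    freqs = [k * sample_rate_hz / n for k in range(half + 1)]
    total = sum(power)
    mean_freq = sum(f * p for f, p in zip(freqs, power)) / total if total else 0.0
    peak_index = max(range(len(power)), key=power.__getitem__)
    return {
        "total_power": total,
        "mean_freq_hz": mean_freq,
        "peak_freq_hz": freqs[peak_index],
    }


# Synthetic 64 Hz tone sampled at an assumed 1024 Hz, 64 samples long.
tone = [math.sin(2 * math.pi * 64 * i / 1024) for i in range(64)]
features = spectral_features(tone, 1024)
```

Features like these could be computed per window and compared against the resting-state values, which may be more robust than thresholding the raw amplitude alone.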