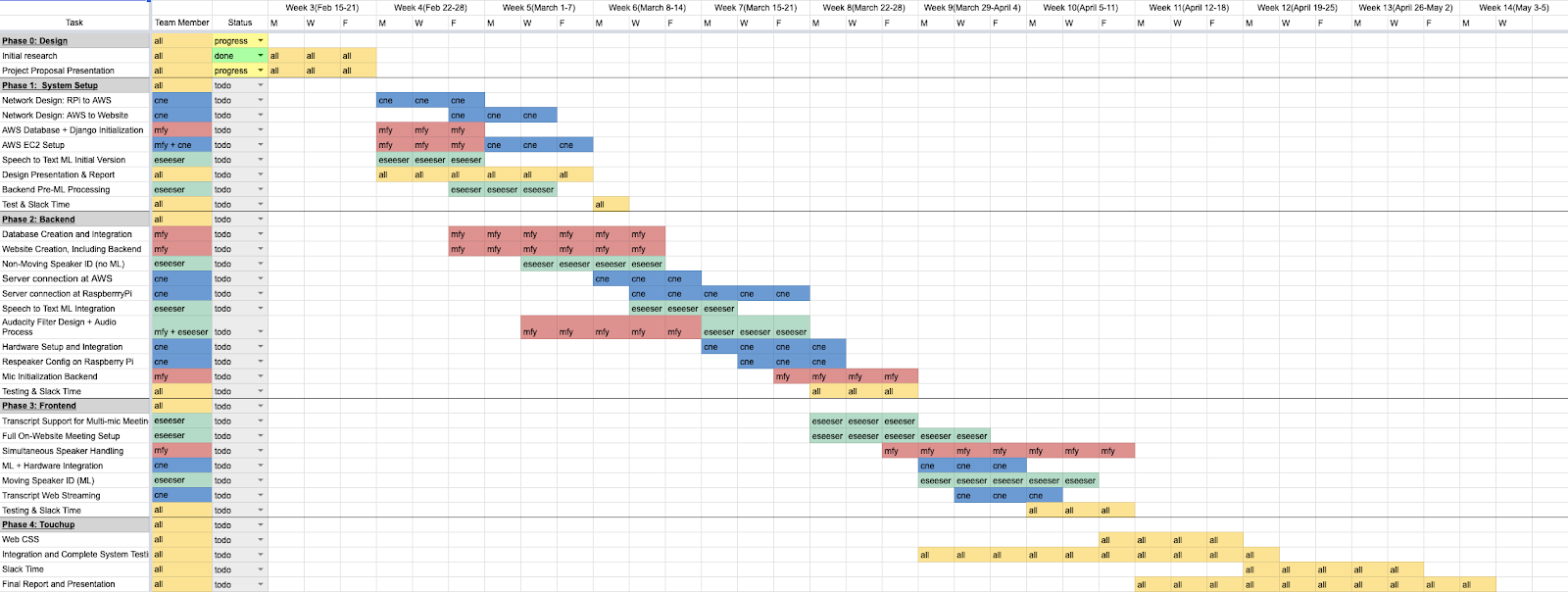

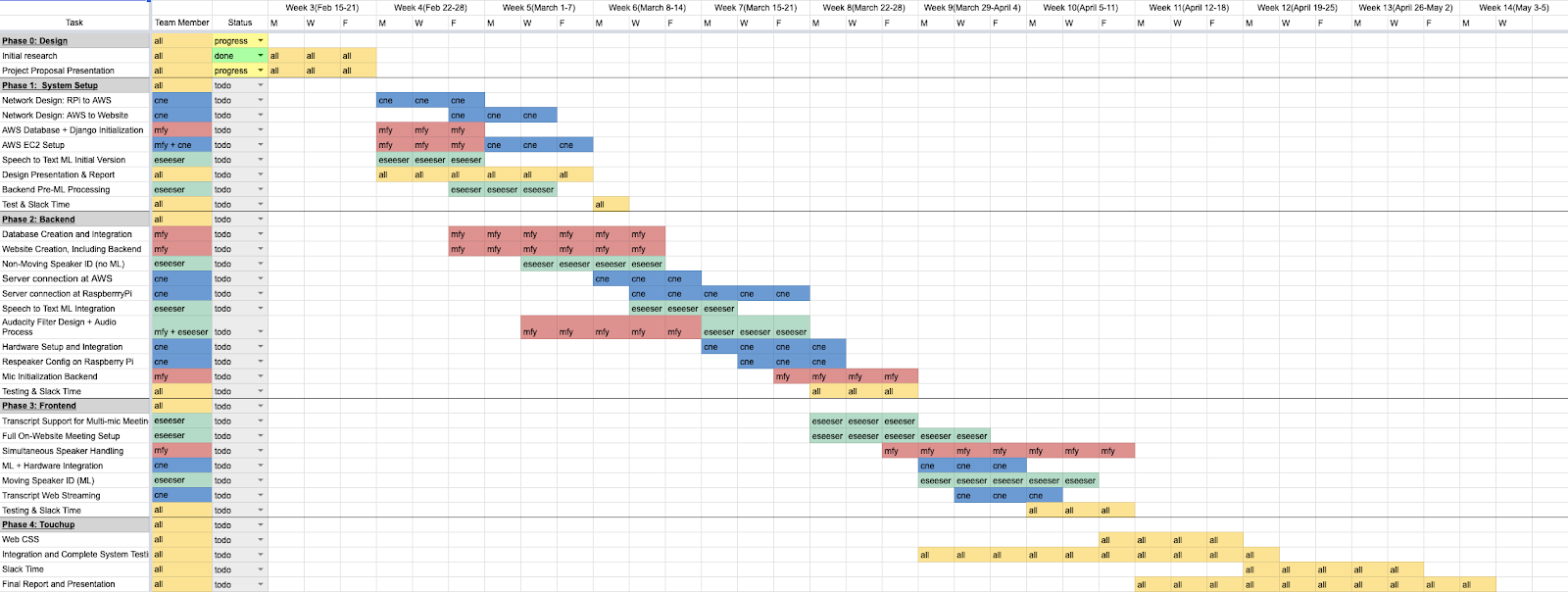

This week our team worked on design and planning to prepare our project proposal. We researched our requirements and technology solutions, divided up the work, made presentation slides, and drew up a schedule in the form of a Gantt chart. A couple of risks arise from this. First, because we don’t yet know how much work some aspects of the project will entail, we may have divided the work unevenly. To manage this, we need to stay flexible and be prepared to change the division of labor if such issues arise. Second, our schedule may be unrealistic and fail to match what actually happens, but this is mitigated by the fact that the schedule is a living document that we will keep updating over time.

Since we were creating our design this week, there is no earlier design to compare it against, but our ideas were solidified and backed up by the research we did. As a result of this research, some of the requirements we outline in our proposal differ from those in our abstract. For example, our abstract specified a mouth-to-ear latency of one second, but after researching voice-over-IP user experience standards, we changed this value to 150 ms.

We’ve just finished drawing up our schedule, which you can find below. We’ll point out how it changes in subsequent weeks.