This week the team members were busily taking care of our individual programming responsibilities. Cambrea was working on networking, Mitchell was working on audio processing and website stuff, and Ellen was working on transcription. We also worked towards completing the design report document that’s due on Wednesday.

The risk we’ve been talking about recently is mismatched interfaces. While we write our separate modules, we have to be aware of what the other members might require from them. We have to discuss the integration of the individual parts and, if we discover that something different is required, we have to be ready to jump in and change the implementation. For example, Ellen made the transcript output a single text file per meeting. However, when Cambrea starts writing the transcript streaming, she might discover that she wants it in a different format; so we just have to recognize that risk and be prepared to modify the code.

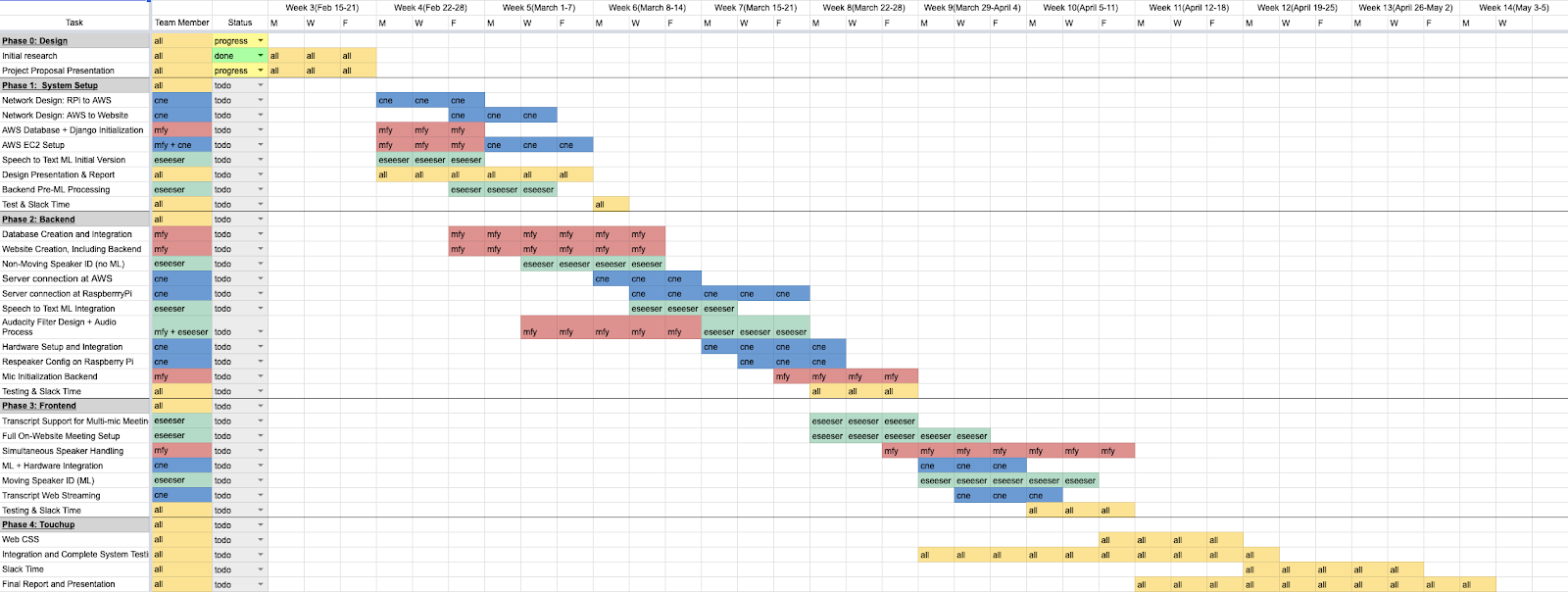

Our schedule hasn’t changed besides our making progress through its components.