This week we focused on debugging our device, assembling the belt, and attaching the belt to our Raspberry Pi and camera. We tested the device at the stoplight again and found that it had problems in some cases, so we debugged those.

Risks and Mitigations

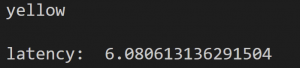

Current risks are the latency and accuracy of our device. We had to settle for a minimum acceptable accuracy, but the latency is still too long for a user to safely rely on the device at a traffic light. We are looking into what else can be done to cut that time down.

Schedule

We just need to wrap up our final presentation requirements: the video, poster, and final report. Last-minute tasks may include adjustments to the belt to make it more comfortable.